Miguel D. Mahecha

Context-Aware Multimodal Representation Learning for Spatio-Temporally Explicit Environmental Modelling

Nov 18, 2025

Abstract:Earth observation (EO) foundation models have emerged as an effective approach to derive latent representations of the Earth system from various remote sensing sensors. These models produce embeddings that can be used as analysis-ready datasets, enabling the modelling of ecosystem dynamics without extensive sensor-specific preprocessing. However, existing models typically operate at fixed spatial or temporal scales, limiting their use for ecological analyses that require both fine spatial detail and high temporal fidelity. To overcome these limitations, we propose a representation learning framework that integrates different EO modalities into a unified feature space at high spatio-temporal resolution. We introduce the framework using Sentinel-1 and Sentinel-2 data as representative modalities. Our approach produces a latent space at native 10 m resolution and the temporal frequency of cloud-free Sentinel-2 acquisitions. Each sensor is first modeled independently to capture its sensor-specific characteristics. Their representations are then combined into a shared model. This two-stage design enables modality-specific optimisation and easy extension to new sensors, retaining pretrained encoders while retraining only fusion layers. This enables the model to capture complementary remote sensing data and to preserve coherence across space and time. Qualitative analyses reveal that the learned embeddings exhibit high spatial and semantic consistency across heterogeneous landscapes. Quantitative evaluation in modelling Gross Primary Production reveals that they encode ecologically meaningful patterns and retain sufficient temporal fidelity to support fine-scale analyses. Overall, the proposed framework provides a flexible, analysis-ready representation learning approach for environmental applications requiring diverse spatial and temporal resolutions.

Transformers vs. Recurrent Models for Estimating Forest Gross Primary Production

Nov 14, 2025

Abstract:Monitoring the spatiotemporal dynamics of forest CO$_2$ uptake (Gross Primary Production, GPP) remains a central challenge in terrestrial ecosystem research. While Eddy Covariance (EC) towers provide high-frequency estimates, their limited spatial coverage constrains large-scale assessments. Remote sensing offers a scalable alternative, yet most approaches rely on single-sensor spectral indices and statistical models that are often unable to capture the complex temporal dynamics of GPP. Recent advances in deep learning (DL) and data fusion offer new opportunities to better represent the temporal dynamics of vegetation processes, but comparative evaluations of state-of-the-art DL models for multimodal GPP prediction remain scarce. Here, we explore the performance of two representative models for predicting GPP: 1) GPT-2, a transformer architecture, and 2) Long Short-Term Memory (LSTM), a recurrent neural network, using multivariate inputs. Both achieve similar accuracy; LSTM performs better overall, while GPT-2 excels during extreme events. Analysis of temporal context length further reveals that LSTM attains similar accuracy using substantially shorter input windows than GPT-2, highlighting an accuracy-efficiency trade-off between the two architectures. Feature importance analysis reveals radiation as the dominant predictor, followed by Sentinel-2, MODIS land surface temperature, and Sentinel-1 contributions. Our results demonstrate how model architecture, context length, and multimodal inputs jointly determine performance in GPP prediction, guiding future developments of DL frameworks for monitoring terrestrial carbon dynamics.

Explainable Earth Surface Forecasting under Extreme Events

Oct 02, 2024

Abstract:With climate change-related extreme events on the rise, high dimensional Earth observation data presents a unique opportunity for forecasting and understanding impacts on ecosystems. This is, however, impeded by the complexity of processing, visualizing, modeling, and explaining this data. To showcase how this challenge can be met, here we train a convolutional long short-term memory-based architecture on the novel DeepExtremeCubes dataset. DeepExtremeCubes includes around 40,000 long-term Sentinel-2 minicubes (January 2016-October 2022) worldwide, along with labeled extreme events, meteorological data, vegetation land cover, and topography map, sampled from locations affected by extreme climate events and surrounding areas. When predicting future reflectances and vegetation impacts through kernel normalized difference vegetation index, the model achieved an R$^2$ score of 0.9055 in the test set. Explainable artificial intelligence was used to analyze the model's predictions during the October 2020 Central South America compound heatwave and drought event. We chose the same area exactly one year before the event as counterfactual, finding that the average temperature and surface pressure are generally the best predictors under normal conditions. In contrast, minimum anomalies of evaporation and surface latent heat flux take the lead during the event. A change of regime is also observed in the attributions before the event, which might help assess how long the event was brewing before happening. The code to replicate all experiments and figures in this paper is publicly available at https://github.com/DeepExtremes/txyXAI
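The kernel normalized difference vegetation index mentioned in this abstract can be illustrated with a minimal sketch. It assumes the commonly used RBF-kernel form, kNDVI = tanh(((NIR - red) / (2σ))²), with the default length scale σ = 0.5 (NIR + red), under which the index reduces to tanh(NDVI²). The function name `kndvi` and its arguments are hypothetical and are not taken from the paper's code.

```python
import math


def kndvi(nir, red, sigma=None):
    """Kernel NDVI for a single pixel (hypothetical helper, not the paper's code).

    nir, red: surface reflectances of the near-infrared and red bands.
    sigma: kernel length scale; defaults to 0.5 * (nir + red), an assumption
           under which the result equals tanh(NDVI ** 2).
    """
    if sigma is None:
        sigma = 0.5 * (nir + red)
    if sigma == 0:
        raise ValueError("sigma must be non-zero (nir + red was zero)")
    return math.tanh(((nir - red) / (2.0 * sigma)) ** 2)


# Example: a vegetated pixel with high NIR and low red reflectance.
value = kndvi(nir=0.5, red=0.1)
```

With the default length scale the result depends only on NDVI, so the sketch can be checked against `math.tanh(ndvi ** 2)` for the same pixel.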

Earth System Data Cubes: Avenues for advancing Earth system research

Aug 05, 2024

Abstract:Recent advancements in Earth system science have been marked by the exponential increase in the availability of diverse, multivariate datasets characterised by moderate to high spatio-temporal resolutions. Earth System Data Cubes (ESDCs) have emerged as one suitable solution for transforming this flood of data into a simple yet robust data structure. ESDCs achieve this by organising data into an analysis-ready format aligned with a spatio-temporal grid, facilitating user-friendly analysis and diminishing the need for extensive technical data processing knowledge. Despite these significant benefits, the completion of the entire ESDC life cycle remains a challenging task. Obstacles are not only of a technical nature but also relate to domain-specific problems in Earth system research. There exist barriers to realising the full potential of data collections in light of novel cloud-based technologies, particularly in curating data tailored for specific application domains. These include transforming data to conform to a spatio-temporal grid with minimum distortions and managing complexities such as spatio-temporal autocorrelation issues. Addressing these challenges is pivotal for the effective application of Artificial Intelligence (AI) approaches. Furthermore, adhering to open science principles for data dissemination, reproducibility, visualisation, and reuse is crucial for fostering sustainable research. Overcoming these challenges offers a substantial opportunity to advance data-driven Earth system research, unlocking the full potential of an integrated, multidimensional view of Earth system processes. This is particularly true when such research is coupled with innovative research paradigms and technological progress.

Nonlinear spectral analysis extracts harmonics from land-atmosphere fluxes

Jul 27, 2024

Abstract:Understanding the dynamics of the land-atmosphere exchange of CO$_2$ is key to advancing our predictive capacities of the coupled climate-carbon feedback system. In essence, the net vegetation flux is the difference between the uptake of CO$_2$ via photosynthesis and the release of CO$_2$ via respiration, while the system is driven by periodic processes at different time-scales. The complexity of the underlying dynamics poses challenges to classical decomposition methods focused on maximizing data variance, such as singular spectrum analysis. Here, we explore whether nonlinear data-driven methods can better separate periodic patterns and their harmonics from noise and stochastic variability. We find that Nonlinear Laplacian Spectral Analysis (NLSA) outperforms the linear method and detects multiple relevant harmonics. However, these harmonics are not detected in the presence of substantial measurement irregularities. In summary, the NLSA approach can be used both to extract the seasonal cycle more accurately than linear methods and to detect irregular signals resulting from irregular land-atmosphere interactions or measurement failures. Improving the detection capabilities of time-series decomposition is essential for improving land-atmosphere interaction models that should operate accurately on any time scale.

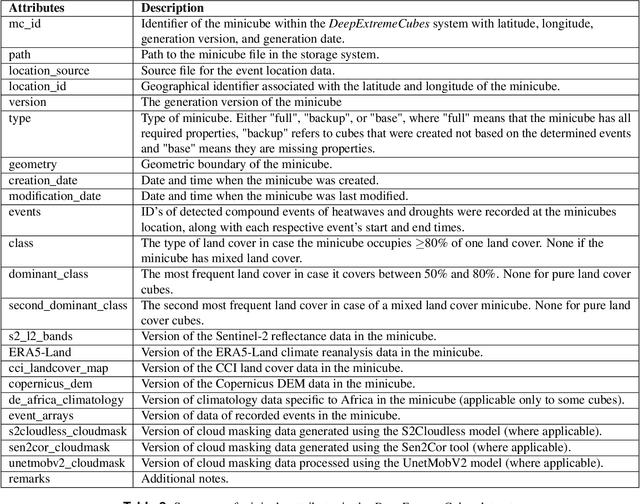

DeepExtremeCubes: Integrating Earth system spatio-temporal data for impact assessment of climate extremes

Jun 26, 2024

Abstract:With the rising frequency and intensity of climate extremes, robust analytical tools are crucial to predict their impacts on terrestrial ecosystems. Machine learning techniques show promise but require well-structured, high-quality, and curated analysis-ready datasets. Earth observation datasets comprehensively monitor ecosystem dynamics and responses to climatic extremes, yet the data complexity can challenge the effectiveness of machine learning models. Despite recent progress in applying deep learning to ecosystem monitoring, there is a need for datasets specifically designed to analyse the impacts of compound heatwave and drought extremes. Here, we introduce the DeepExtremeCubes database, tailored to map around these extremes, focusing on persistent natural vegetation. It comprises over 40,000 spatially sampled small data cubes (i.e. minicubes) globally, each with a spatial coverage of 2.5 by 2.5 km. Each minicube includes (i) Sentinel-2 L2A images, (ii) ERA5-Land variables and a generated extreme event cube covering 2016 to 2022, and (iii) ancillary land cover and topography maps. The paper aims to (1) streamline data accessibility, structuring, and pre-processing, and enhance scientific reproducibility, and (2) facilitate biosphere dynamics forecasting in response to compound extremes.

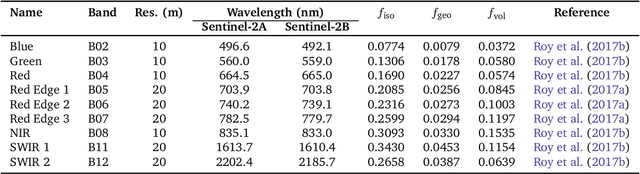

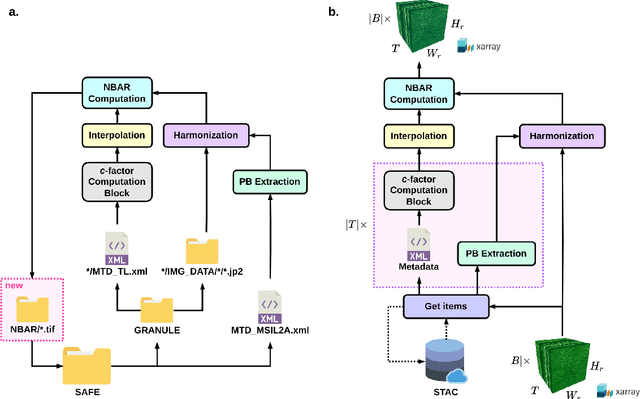

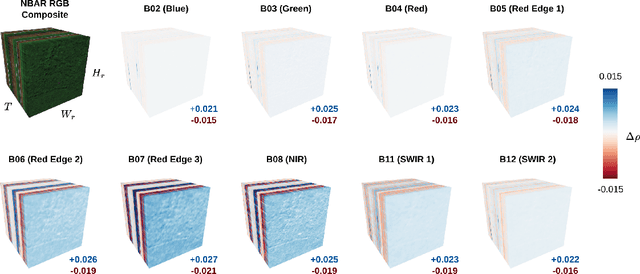

Facilitating Advanced Sentinel-2 Analysis Through a Simplified Computation of Nadir BRDF Adjusted Reflectance

Apr 24, 2024

Abstract:The Sentinel-2 (S2) mission from the European Space Agency's Copernicus program provides essential data for Earth surface analysis. Its Level-2A products deliver high-to-medium resolution (10-60 m) surface reflectance (SR) data through the MultiSpectral Instrument (MSI). To enhance the accuracy and comparability of SR data, adjustments simulating a nadir viewing perspective are essential. These corrections address the anisotropic nature of SR and the variability in sun and observation angles, ensuring consistent image comparisons over time and under different conditions. The $c$-factor method, a simple yet effective algorithm, adjusts observed S2 SR by using the MODIS BRDF model to achieve Nadir BRDF Adjusted Reflectance (NBAR). Despite the straightforward application of the $c$-factor to individual images, a cohesive Python framework for its application across multiple S2 images and Earth System Data Cubes (ESDCs) from cloud-stored data has been lacking. Here we introduce sen2nbar, a Python package crafted to convert S2 SR data to NBAR, supporting both individual images and ESDCs derived from cloud-stored data. This package simplifies the conversion of S2 SR data to NBAR via a single function, organized into modules for efficient process management. By facilitating NBAR conversion for both SAFE files and ESDCs from SpatioTemporal Asset Catalogs (STAC), sen2nbar is developed as a flexible tool that can handle diverse data format requirements. We anticipate that sen2nbar will considerably contribute to the standardization and harmonization of S2 data, offering a robust solution for a diverse range of users across various applications. sen2nbar is an open-source tool available at https://github.com/ESDS-Leipzig/sen2nbar.
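The $c$-factor adjustment described in this abstract can be sketched in a few lines. The sketch assumes the usual formulation in which $c$ is the ratio of the BRDF-modelled reflectance at nadir view to the BRDF-modelled reflectance at the observed view geometry, and NBAR is $c$ times the observed surface reflectance. The function names below are hypothetical and are not the sen2nbar API; evaluating the MODIS BRDF model itself is left out, so the two modelled reflectances are taken as given inputs.

```python
def c_factor(modelled_nadir, modelled_observed):
    """Ratio of BRDF-modelled reflectance at nadir to that at the observed geometry.

    Both inputs are assumed to come from the same BRDF model and band,
    evaluated for the same sun angle (hypothetical helper, not sen2nbar's API).
    """
    if modelled_observed == 0:
        raise ValueError("modelled reflectance at observed geometry must be non-zero")
    return modelled_nadir / modelled_observed


def nbar(surface_reflectance, modelled_nadir, modelled_observed):
    """Scale the observed surface reflectance by the c-factor to approximate NBAR."""
    return c_factor(modelled_nadir, modelled_observed) * surface_reflectance


# Example with made-up numbers: observed SR of 0.20, modelled 0.22 at nadir
# and 0.25 at the actual view angle.
adjusted = nbar(0.20, modelled_nadir=0.22, modelled_observed=0.25)
```

Keeping the ratio in its own function makes it easy to apply the same per-band factor across a whole image or data cube.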

On-Demand Earth System Data Cubes

Apr 19, 2024

Abstract:Advancements in Earth system science have seen a surge in diverse datasets. Earth System Data Cubes (ESDCs) have been introduced to efficiently handle this influx of high-dimensional data. ESDCs offer a structured, intuitive framework for data analysis, organising information within spatio-temporal grids. The structured nature of ESDCs unlocks significant opportunities for Artificial Intelligence (AI) applications. By providing well-organised data, ESDCs are ideally suited for a wide range of sophisticated AI-driven tasks. An automated framework for creating AI-focused ESDCs with minimal user input could significantly accelerate the generation of task-specific training data. Here we introduce cubo, an open-source Python tool designed for easy generation of AI-focused ESDCs. Utilising collections in SpatioTemporal Asset Catalogs (STAC) that are stored as Cloud Optimised GeoTIFFs (COGs), cubo efficiently creates ESDCs, requiring only central coordinates, spatial resolution, edge size, and time range.
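To make the four inputs listed in this abstract concrete, the sketch below shows how central coordinates, spatial resolution, and edge size could determine the spatial extent of a cube. It is only an illustration under stated assumptions: the centre is assumed to be already expressed in a projected metric coordinate system, the edge size is assumed to be in pixels, and the function `cube_bounds` is hypothetical rather than part of cubo.

```python
def cube_bounds(x_center, y_center, edge_size, resolution):
    """Return (xmin, ymin, xmax, ymax) of a square cube footprint.

    x_center, y_center: centre in a projected CRS, in metres (assumption).
    edge_size: number of pixels along each side (assumption).
    resolution: pixel size in metres.
    Hypothetical helper for illustration; not cubo's implementation.
    """
    if edge_size <= 0 or resolution <= 0:
        raise ValueError("edge_size and resolution must be positive")
    half_extent = edge_size * resolution / 2.0
    return (
        x_center - half_extent,
        y_center - half_extent,
        x_center + half_extent,
        y_center + half_extent,
    )


# Example: a 64 x 64 pixel cube at 10 m resolution spans 640 m per side.
bounds = cube_bounds(500000.0, 4000000.0, edge_size=64, resolution=10)
```

The time range would then select which acquisitions fall inside this footprint along the temporal axis.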

Recurrent Neural Networks for Modelling Gross Primary Production

Apr 19, 2024

Abstract:Accurate quantification of Gross Primary Production (GPP) is crucial for understanding terrestrial carbon dynamics. It represents the largest atmosphere-to-land CO$_2$ flux, especially significant for forests. Eddy Covariance (EC) measurements are widely used for ecosystem-scale GPP quantification but are globally sparse. In areas lacking local EC measurements, remote sensing (RS) data are typically utilised to estimate GPP after statistically relating them to in-situ data. Deep learning offers novel perspectives, and the potential of recurrent neural network architectures for estimating daily GPP remains underexplored. This study presents a comparative analysis of three architectures: Recurrent Neural Networks (RNNs), Gated Recurrent Units (GRUs), and Long Short-Term Memory networks (LSTMs). Our findings reveal comparable performance across all models for full-year and growing season predictions. Notably, LSTMs outperform the other architectures in predicting climate-induced GPP extremes. Furthermore, our analysis highlights the importance of incorporating radiation and RS inputs (optical, temperature, and radar) for accurate GPP predictions, particularly during climate extremes.

ReservoirComputing.jl: An Efficient and Modular Library for Reservoir Computing Models

Apr 08, 2022

Abstract:We introduce ReservoirComputing.jl, an open source Julia library for reservoir computing models. The software offers a great number of algorithms presented in the literature and allows users to expand on them with both internal and external tools in a simple way. The implementation is highly modular and fast, and comes with comprehensive documentation, which includes reproduced experiments from the literature. The code and documentation are hosted on GitHub under an MIT license at https://github.com/SciML/ReservoirComputing.jl.
