Kernel Methods and their derivatives: Concept and perspectives for the Earth system sciences

Paper and Code

Jul 29, 2020

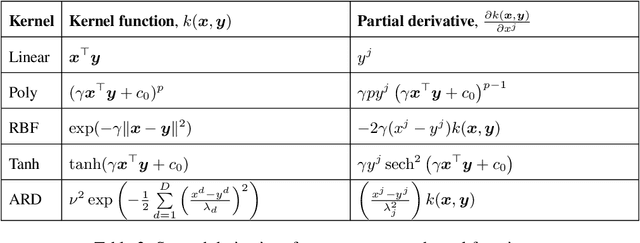

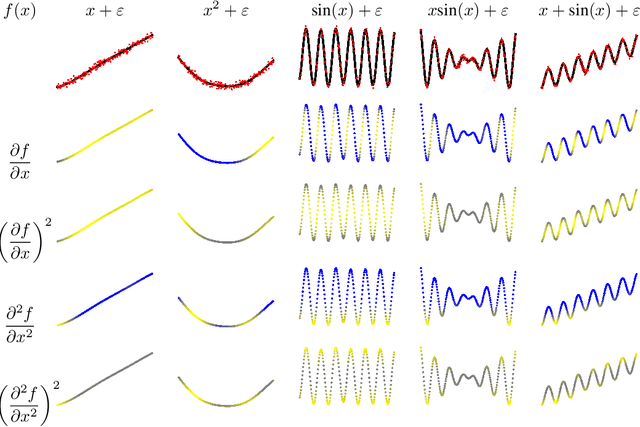

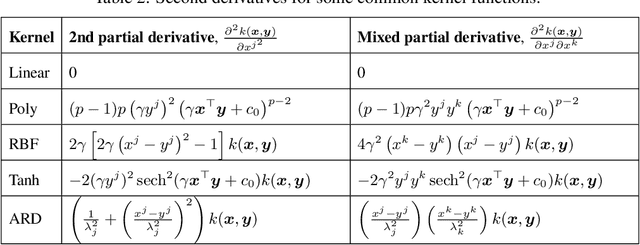

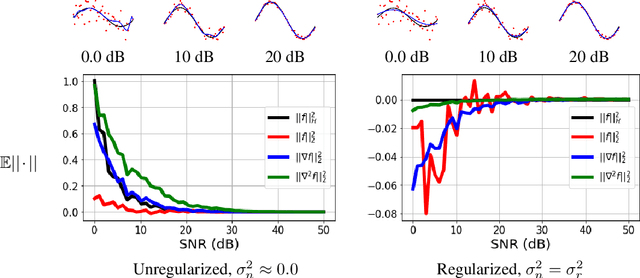

Kernel methods are powerful machine learning techniques which implement generic non-linear functions to solve complex tasks in a simple way. They Have a solid mathematical background and exhibit excellent performance in practice. However, kernel machines are still considered black-box models as the feature mapping is not directly accessible and difficult to interpret.The aim of this work is to show that it is indeed possible to interpret the functions learned by various kernel methods is intuitive despite their complexity. Specifically, we show that derivatives of these functions have a simple mathematical formulation, are easy to compute, and can be applied to many different problems. We note that model function derivatives in kernel machines is proportional to the kernel function derivative. We provide the explicit analytic form of the first and second derivatives of the most common kernel functions with regard to the inputs as well as generic formulas to compute higher order derivatives. We use them to analyze the most used supervised and unsupervised kernel learning methods: Gaussian Processes for regression, Support Vector Machines for classification, Kernel Entropy Component Analysis for density estimation, and the Hilbert-Schmidt Independence Criterion for estimating the dependency between random variables. For all cases we expressed the derivative of the learned function as a linear combination of the kernel function derivative. Moreover we provide intuitive explanations through illustrative toy examples and show how to improve the interpretation of real applications in the context of spatiotemporal Earth system data cubes. This work reflects on the observation that function derivatives may play a crucial role in kernel methods analysis and understanding.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge