Michael Mitzenmacher

Harvard University

Queueing, Predictions, and LLMs: Challenges and Open Problems

Mar 10, 2025

Abstract:Queueing systems present many opportunities for applying machine-learning predictions, such as estimated service times, to improve system performance. This integration raises numerous open questions about how predictions can be effectively leveraged to improve scheduling decisions. Recent studies explore queues with predicted service times, typically aiming to minimize job time in the system. We review these works, highlight the effectiveness of predictions, and present open questions on queue performance. We then move to consider an important practical example of using predictions in scheduling, namely Large Language Model (LLM) systems, which present novel scheduling challenges and highlight the potential for predictions to improve performance. In particular, we consider LLMs performing inference. Inference requests (jobs) in LLM systems are inherently complex; they have variable inference times, dynamic memory footprints that are constrained by key-value (KV) store memory limitations, and multiple possible preemption approaches that affect performance differently. We provide background on the important aspects of scheduling in LLM systems, and introduce new models and open problems that arise from them. We argue that there are significant opportunities for applying insights and analysis from queueing theory to scheduling in LLM systems.
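To make the idea of scheduling with predicted service times concrete, the following is a minimal sketch, not taken from the paper. It assumes a single server, a batch of jobs that are all present at time 0, and no preemption; the names `mean_time_in_system` and `shortest_predicted_first` and the job values are hypothetical. It compares first-come, first-served order with serving jobs in order of predicted service time.

```python
from typing import List, Sequence, Tuple

# Illustrative job representation (an assumption of this sketch):
# (actual_service_time, predicted_service_time)
Job = Tuple[float, float]


def mean_time_in_system(jobs_in_service_order: Sequence[Job]) -> float:
    """Mean completion time when all jobs arrive at time 0 and run back to back.

    Assumes a non-empty sequence of jobs.
    """
    clock = 0.0
    total = 0.0
    for actual, _predicted in jobs_in_service_order:
        clock += actual   # the job finishes after its actual service time
        total += clock    # its time in system equals its completion time
    return total / len(jobs_in_service_order)


def shortest_predicted_first(jobs: Sequence[Job]) -> List[Job]:
    """Order jobs by predicted (not actual) service time."""
    return sorted(jobs, key=lambda job: job[1])


# Predictions are imperfect: the two short jobs are ranked in the wrong order.
jobs = [(8.0, 7.0), (1.0, 2.0), (2.0, 1.5)]
fcfs = mean_time_in_system(jobs)
spjf = mean_time_in_system(shortest_predicted_first(jobs))
print(f"FCFS mean time in system: {fcfs:.3f}")
print(f"Shortest-predicted-first mean time in system: {spjf:.3f}")
```

In this toy batch the predictions misorder the two short jobs, yet ordering by prediction still lowers the mean time in system relative to arrival order, because the long job no longer blocks the short ones.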

HACK: Homomorphic Acceleration via Compression of the Key-Value Cache for Disaggregated LLM Inference

Feb 05, 2025

Abstract:Disaggregated Large Language Model (LLM) inference has gained popularity as it separates the computation-intensive prefill stage from the memory-intensive decode stage, avoiding prefill-decode interference and improving resource utilization. However, transmitting Key-Value (KV) data between the two stages can be a bottleneck, especially for long prompts. Additionally, the computation time overhead for prefill and decode is key for optimizing Job Completion Time (JCT), and KV data size can become prohibitive for long prompts and sequences. Existing KV quantization methods can alleviate the transmission bottleneck and reduce memory requirements, but they introduce significant dequantization overhead, exacerbating the computation time. We propose Homomorphic Acceleration via Compression of the KV cache (HACK) for disaggregated LLM inference. HACK eliminates the heavy KV dequantization step, and directly performs computations on quantized KV data to approximate and reduce the cost of the expensive matrix-multiplication step. Extensive trace-driven experiments show that HACK reduces JCT by up to 70.9% compared to a disaggregated LLM inference baseline and by up to 52.3% compared to state-of-the-art KV quantization methods.

Fast Inference for Augmented Large Language Models

Oct 25, 2024

Abstract:Augmented Large Language Models (LLMs) enhance the capabilities of standalone LLMs by integrating external data sources through API calls. In interactive LLM applications, efficient scheduling is crucial for maintaining low request completion times, directly impacting user engagement. However, these augmentations introduce scheduling challenges due to the need to manage limited memory for cached information (KV caches). As a result, traditional size-based scheduling algorithms, such as Shortest Job First (SJF), become less effective at minimizing completion times. Existing work focuses only on handling requests during API calls by preserving, discarding, or swapping memory without considering how to schedule requests with API calls. In this paper, we propose LAMPS, a novel LLM inference framework for augmented LLMs. LAMPS minimizes request completion time through a unified scheduling approach that considers the total length of requests and their handling strategies during API calls. Recognizing that LLM inference is memory-bound, our approach ranks requests based on their consumption of memory over time, which depends on both the output sizes and how a request is managed during its API calls. To implement our scheduling, LAMPS predicts the strategy that minimizes memory waste of a request during its API calls, aligning with but improving upon existing approaches. We also propose starvation prevention techniques and optimizations to mitigate the overhead of our scheduling. We implement LAMPS on top of vLLM and evaluate its performance against baseline LLM inference systems, demonstrating improvements in end-to-end latency by 27%-85% and reductions in TTFT by 4%-96% compared to the existing augmented-LLM system, with even greater gains over vLLM.

Efficient Inference for Augmented Large Language Models

Oct 23, 2024

Abstract:Augmented Large Language Models (LLMs) enhance the capabilities of standalone LLMs by integrating external data sources through API calls. In interactive LLM applications, efficient scheduling is crucial for maintaining low request completion times, directly impacting user engagement. However, these augmentations introduce scheduling challenges due to the need to manage limited memory for cached information (KV caches). As a result, traditional size-based scheduling algorithms, such as Shortest Job First (SJF), become less effective at minimizing completion times. Existing work focuses only on handling requests during API calls by preserving, discarding, or swapping memory without considering how to schedule requests with API calls. In this paper, we propose LAMPS, a novel LLM inference framework for augmented LLMs. LAMPS minimizes request completion time through a unified scheduling approach that considers the total length of requests and their handling strategies during API calls. Recognizing that LLM inference is memory-bound, our approach ranks requests based on their consumption of memory over time, which depends on both the output sizes and how a request is managed during its API calls. To implement our scheduling, LAMPS predicts the strategy that minimizes memory waste of a request during its API calls, aligning with but improving upon existing approaches. We also propose starvation prevention techniques and optimizations to mitigate the overhead of our scheduling. We implement LAMPS on top of vLLM and evaluate its performance against baseline LLM inference systems, demonstrating improvements in end-to-end latency by 27%-85% and reductions in TTFT by 4%-96% compared to the existing augmented-LLM system, with even greater gains over vLLM.

Don't Stop Me Now: Embedding Based Scheduling for LLMs

Oct 01, 2024

Abstract:Efficient scheduling is crucial for interactive Large Language Model (LLM) applications, where low request completion time directly impacts user engagement. Size-based scheduling algorithms like Shortest Remaining Processing Time (SRPT) aim to reduce average request completion time by leveraging known or estimated request sizes and allowing preemption by incoming jobs with shorter service times. However, two main challenges arise when applying size-based scheduling to LLM systems. First, accurately predicting output lengths from prompts is challenging and often resource-intensive, making it impractical for many systems. As a result, state-of-the-art LLM systems default to first-come, first-served scheduling, which can lead to head-of-line blocking and reduced system efficiency. Second, preemption introduces extra memory overhead to LLM systems as they must maintain intermediate states for unfinished (preempted) requests. In this paper, we propose TRAIL, a method to obtain output predictions from the target LLM itself. After generating each output token, we recycle the embedding of its internal structure as input for a lightweight classifier that predicts the remaining length for each running request. Using these predictions, we propose a prediction-based SRPT variant with limited preemption designed to account for memory overhead in LLM systems. This variant allows preemption early in request execution when memory consumption is low but restricts preemption as requests approach completion to optimize resource utilization. On the theoretical side, we derive a closed-form formula for this SRPT variant in an M/G/1 queue model, which demonstrates its potential value. In our system, we implement this preemption policy alongside our embedding-based prediction method.
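The limited-preemption idea described in this abstract can be sketched as a simple decision rule. This is an illustration under stated assumptions, not the paper's implementation: the `Job` class, the function `should_preempt`, and the rule "preemption is allowed only while the running job has received less than a fraction `c` of its predicted size" are all hypothetical choices made here to show one way such a policy could look.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical job record used only for this sketch."""
    predicted_size: float  # predicted total service demand (e.g. output tokens)
    served: float = 0.0    # service received so far

    @property
    def predicted_remaining(self) -> float:
        # Clamp at zero in case the job outruns its prediction.
        return max(self.predicted_size - self.served, 0.0)


def should_preempt(running: Job, arriving: Job, c: float) -> bool:
    """Decide whether an arriving job may preempt the running one.

    Assumed rule: preempt only if (1) the running job is still in its early
    phase, i.e. it has received less than c * predicted_size service, and
    (2) the arriving job has a smaller predicted remaining size.
    """
    in_early_phase = running.served < c * running.predicted_size
    is_shorter = arriving.predicted_remaining < running.predicted_remaining
    return in_early_phase and is_shorter


# A job early in its execution can be preempted by a shorter arrival ...
print(should_preempt(Job(10.0, served=2.0), Job(3.0), c=0.5))
# ... but the same job close to completion cannot.
print(should_preempt(Job(10.0, served=6.0), Job(3.0), c=0.5))
```

Under this assumed rule, `c = 1.0` behaves like SRPT driven by predictions, while `c = 0.0` disables preemption entirely, so `c` trades off responsiveness against the memory held by preempted requests.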

Learning-Augmented Frequency Estimation in Sliding Windows

Sep 17, 2024

Abstract:We show how to utilize machine learning approaches to improve sliding window algorithms for approximate frequency estimation problems, under the "algorithms with predictions" framework. In this dynamic environment, previous learning-augmented algorithms are less effective, since properties in sliding window resolution can differ significantly from the properties of the entire stream. Our focus is on the benefits of predicting and filtering out from the stream items with large next arrival times (that is, items with a large gap until their next appearance), which we show improves the memory-accuracy tradeoffs significantly. We prove theorems that give insight into how and by how much our technique can improve the sliding window algorithm, and we present experimental results using real-world data sets. Our work demonstrates that predictors can be useful in the challenging sliding window setting.

Beyond Throughput and Compression Ratios: Towards High End-to-end Utility of Gradient Compression

Jul 01, 2024

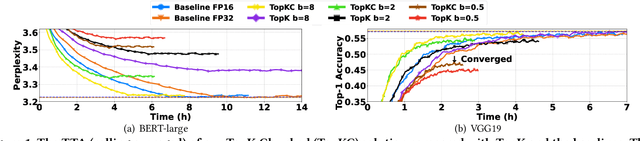

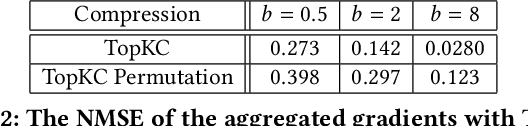

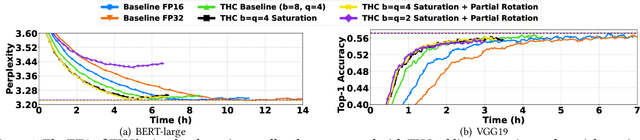

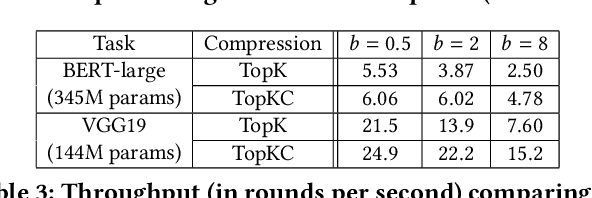

Abstract:Gradient aggregation has long been identified as a major bottleneck in today's large-scale distributed machine learning training systems. One promising solution to mitigate such bottlenecks is gradient compression, directly reducing communicated gradient data volume. However, in practice, many gradient compression schemes do not achieve acceleration of the training process while also preserving accuracy. In this work, we identify several common issues in previous gradient compression systems and evaluation methods. These issues include excessive computational overheads; incompatibility with all-reduce; and inappropriate evaluation metrics, such as not using an end-to-end metric or using a 32-bit baseline instead of a 16-bit baseline. We propose several general design and evaluation techniques to address these issues and provide guidelines for future work. Our preliminary evaluation shows that our techniques enhance the system's performance and provide a clearer understanding of the end-to-end utility of gradient compression methods.

Learning-Based Heavy Hitters and Flow Frequency Estimation in Streams

Jun 24, 2024

Abstract:Identifying heavy hitters and estimating the frequencies of flows are fundamental tasks in various network domains. Existing approaches to this challenge can broadly be categorized into two groups, hashing-based and competing-counter-based. The Count-Min sketch is a standard example of a hashing-based algorithm, and the Space Saving algorithm is an example of a competing-counter algorithm. Recent works have explored the use of machine learning to enhance algorithms for frequency estimation problems, under the algorithms-with-predictions framework. However, these works have focused solely on the hashing-based approach, which may not be best for identifying heavy hitters. In this paper, we present the first learned competing-counter-based algorithm, called LSS, for identifying heavy hitters, top k, and flow frequency estimation that utilizes the well-known Space Saving algorithm. We provide theoretical insights into how and to what extent our approach can improve upon Space Saving, backed by experimental results on both synthetic and real-world datasets. Our evaluation demonstrates that LSS can enhance the accuracy and efficiency of Space Saving in identifying heavy hitters, top k, and estimating flow frequencies.
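For readers unfamiliar with the competing-counter approach, here is a minimal sketch of the classic Space Saving algorithm that the abstract builds on. It is the standard unlearned baseline, not LSS, and it uses a plain dictionary with a linear-time minimum search for clarity rather than the specialized data structure used in efficient implementations. The function name `space_saving` and the sample stream are choices made for this illustration.

```python
from typing import Dict, Hashable, Iterable


def space_saving(stream: Iterable[Hashable], k: int) -> Dict[Hashable, int]:
    """Classic Space Saving with at most k counters.

    Returns a mapping from monitored item to its estimated count. Estimates
    never underestimate the true count of a monitored item.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    counters: Dict[Hashable, int] = {}
    for item in stream:
        if item in counters:
            # Monitored item: increment its counter.
            counters[item] += 1
        elif len(counters) < k:
            # Free slot: start monitoring the item.
            counters[item] = 1
        else:
            # No free slot: evict the item with the smallest counter and let
            # the new item inherit that count plus one.
            victim = min(counters, key=counters.get)
            inherited = counters.pop(victim)
            counters[item] = inherited + 1
    return counters


stream = ["a"] * 5 + ["b"] * 3 + ["c", "d", "e"]
estimates = space_saving(stream, k=3)
print(estimates)
```

Because every arrival increases exactly one counter by one, the counters always sum to the stream length; infrequent items such as `"e"` above can therefore be overestimated, which is the kind of error a learned variant aims to reduce.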

Optimal and Near-Optimal Adaptive Vector Quantization

Feb 05, 2024

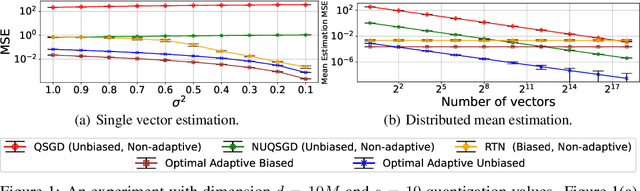

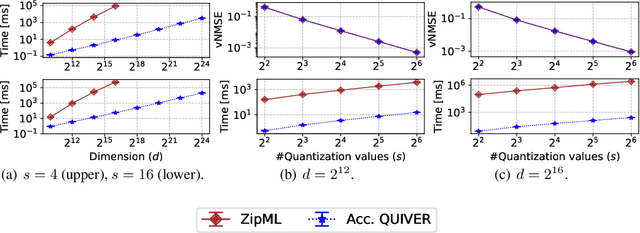

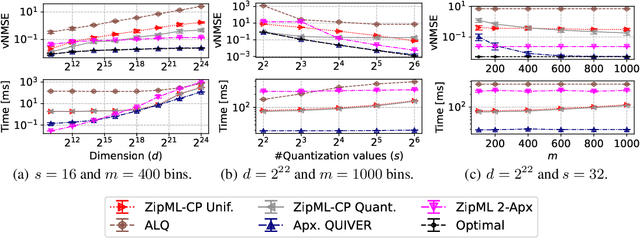

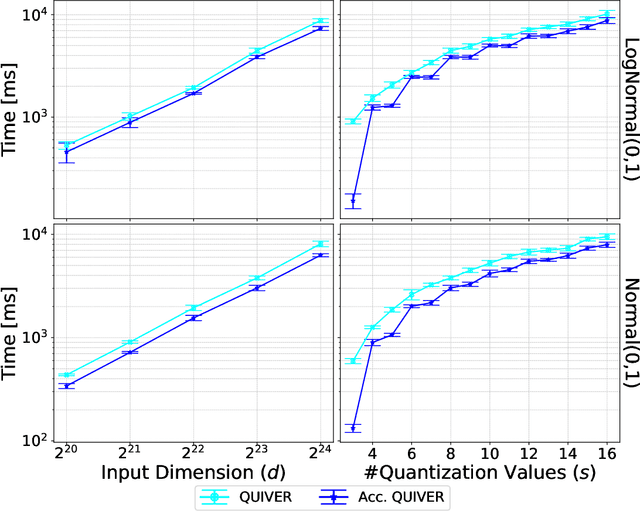

Abstract:Quantization is a fundamental optimization for many machine-learning use cases, including compressing gradients, model weights and activations, and datasets. The most accurate form of quantization is *adaptive*, where the error is minimized with respect to a given input, rather than optimizing for the worst case. However, optimal adaptive quantization methods are considered infeasible in terms of both their runtime and memory requirements. We revisit the Adaptive Vector Quantization (AVQ) problem and present algorithms that find optimal solutions with asymptotically improved time and space complexity. We also present an even faster near-optimal algorithm for large inputs. Our experiments show our algorithms may open the door to using AVQ more extensively in a variety of machine learning applications.

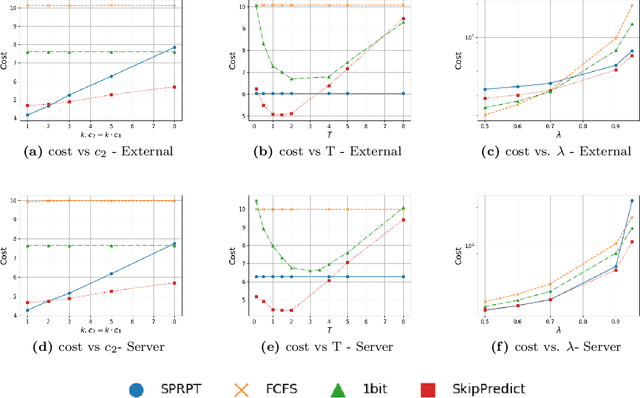

SkipPredict: When to Invest in Predictions for Scheduling

Feb 05, 2024

Abstract:In light of recent work on scheduling with predicted job sizes, we consider the effect of the cost of predictions in queueing systems, removing the assumption in prior research that predictions are external to the system's resources and/or cost-free. In particular, we introduce a novel approach to utilizing predictions, SkipPredict, designed to address their inherent cost. Rather than uniformly applying predictions to all jobs, we propose a tailored approach that categorizes jobs based on their prediction requirements. To achieve this, we employ one-bit "cheap predictions" to classify jobs as either short or long. SkipPredict prioritizes predicted short jobs over long jobs, and for the latter, SkipPredict applies a second round of more detailed "expensive predictions" to approximate Shortest Remaining Processing Time for these jobs. Our analysis takes into account the cost of prediction. We examine the effect of this cost for two distinct models. In the external cost model, predictions are generated by some external method without impacting job service times but incur a cost. In the server time cost model, predictions themselves require server processing time, and are scheduled on the same server as the jobs.
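The two-stage use of predictions described above can be sketched as a priority ordering. This is a simplified illustration, not the paper's model: it orders a static batch of jobs with no arrivals or preemption, it assumes predicted-short jobs are served in arrival order (the abstract does not specify this), and the names `skip_predict_order`, `is_short`, and `predict_size` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

J = TypeVar("J")


def skip_predict_order(
    jobs: Sequence[J],
    is_short: Callable[[J], bool],
    predict_size: Callable[[J], float],
) -> List[J]:
    """Order a batch of jobs using cheap, then expensive, predictions.

    Every job gets the one-bit cheap prediction `is_short`. Only jobs
    predicted long pay for the expensive `predict_size` call.
    """
    keyed: List[Tuple[Tuple[int, float], J]] = []
    for arrival_index, job in enumerate(jobs):
        if is_short(job):
            # Class 0: predicted short, kept in arrival order (assumption).
            keyed.append(((0, float(arrival_index)), job))
        else:
            # Class 1: predicted long, ranked by the expensive prediction.
            keyed.append(((1, predict_size(job)), job))
    keyed.sort(key=lambda pair: pair[0])
    return [job for _, job in keyed]


# Toy example: jobs are represented by their true sizes, and both predictors
# are perfect. A counter tracks how often the expensive predictor runs.
expensive_calls = 0


def counting_predictor(size: float) -> float:
    global expensive_calls
    expensive_calls += 1
    return size


order = skip_predict_order(
    [5.0, 1.0, 3.0, 0.5],
    is_short=lambda size: size < 2.0,
    predict_size=counting_predictor,
)
print(order, expensive_calls)
```

In this toy batch only the two jobs classified as long trigger the expensive predictor, which is the saving the approach targets when predictions carry a cost.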
