Michael Mangan

Dynamical-VAE-based Hindsight to Learn the Causal Dynamics of Factored-POMDPs

Nov 12, 2024

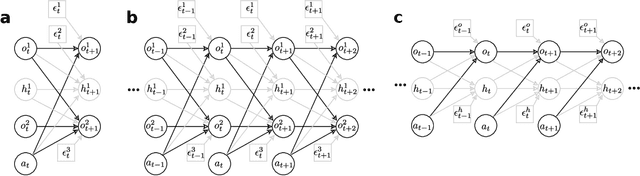

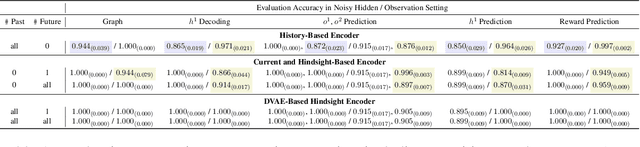

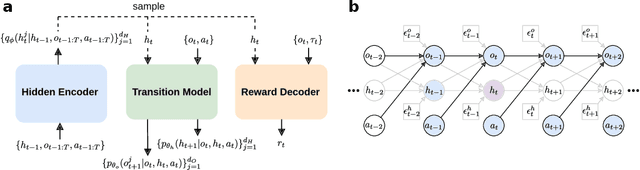

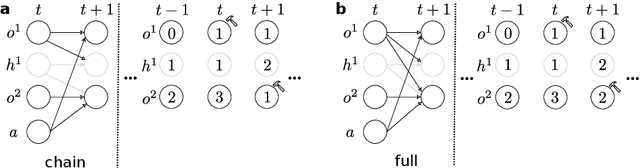

Abstract: Learning representations of underlying environmental dynamics from partial observations is a critical challenge in machine learning. In the context of Partially Observable Markov Decision Processes (POMDPs), state representations are often inferred from the history of past observations and actions. We demonstrate that incorporating future information is essential to accurately capture causal dynamics and enhance state representations. To address this, we introduce a Dynamical Variational Auto-Encoder (DVAE) designed to learn causal Markovian dynamics from offline trajectories in a POMDP. Our method employs an extended hindsight framework that integrates past, current, and multi-step future information within a factored-POMDP setting. Empirical results reveal that this approach uncovers the causal graph governing hidden state transitions more effectively than history-based and typical hindsight-based models.

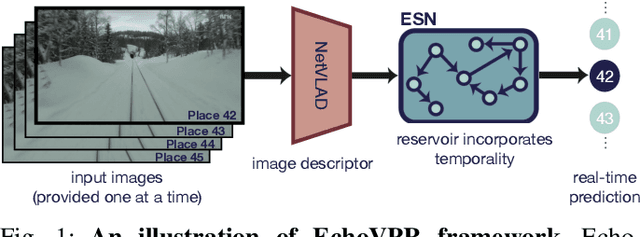

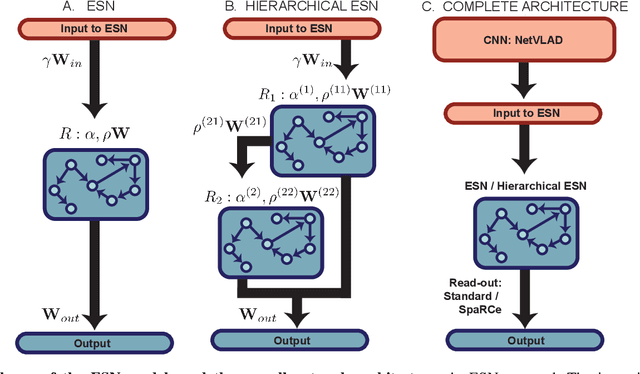

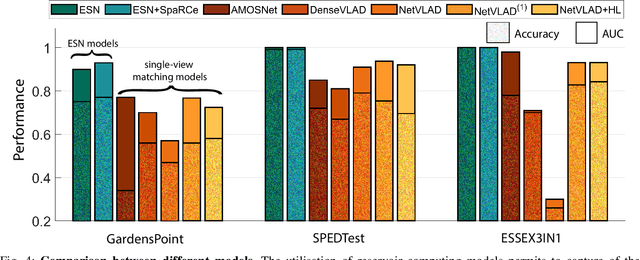

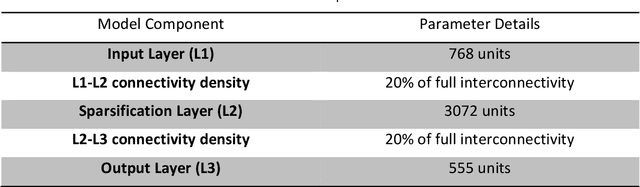

EchoVPR: Echo State Networks for Visual Place Recognition

Oct 11, 2021

Abstract: Recognising previously visited locations is an important, but unsolved, task in autonomous navigation. Current visual place recognition (VPR) benchmarks typically challenge models to recover the position of a query image (or images) from sequential datasets that include both spatial and temporal components. Recently, Echo State Network (ESN) varieties have proven particularly powerful at solving machine learning tasks that require spatio-temporal modelling. These networks are simple yet powerful neural architectures that, by exhibiting memory over multiple time-scales and non-linear high-dimensional representations, can discover temporal relations in the data while still maintaining linearity in the learning. In this paper, we present a series of ESNs and analyse their applicability to the VPR problem. We report that the addition of ESNs to pre-processed convolutional neural networks led to a dramatic boost in performance in comparison to non-recurrent networks in four standard benchmarks (GardensPoint, SPEDTest, ESSEX3IN1, Nordland), demonstrating that ESNs are able to capture the temporal structure inherent in VPR problems. Moreover, we show that ESNs can outperform class-leading VPR models which also exploit the sequential dynamics of the data. Finally, our results demonstrate that ESNs also improve generalisation abilities, robustness, and accuracy, further supporting their suitability to VPR applications.
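The idea of a fixed non-linear reservoir with a linear learning step can be illustrated with a minimal sketch. This is not the architecture from the paper: the leaky-integrator update, the ridge-regression readout, the function names, the hyperparameters, and the random "descriptors" standing in for CNN features are all assumptions made for illustration.

```python
import numpy as np


def make_reservoir(n_inputs, n_units, spectral_radius=0.9, seed=0):
    """Draw fixed random input and recurrent weights (these are never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_units, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale so the largest absolute eigenvalue equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def run_reservoir(w_in, w, inputs, leak=0.3):
    """Feed a sequence through the reservoir and collect one state per step."""
    state = np.zeros(w.shape[0])
    states = []
    for u in inputs:
        update = np.tanh(w_in @ u + w @ state)
        # Leaky integration: the state mixes its past value with the new update.
        state = (1.0 - leak) * state + leak * update
        states.append(state.copy())
    return np.array(states)


def fit_readout(states, targets, ridge=1e-6):
    """Closed-form ridge regression: the only learned part, and it is linear."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Hypothetical toy route: 20 places, each described by a 16-dim feature vector.
rng = np.random.default_rng(1)
n_places, n_features = 20, 16
reference = rng.normal(size=(n_places, n_features))
query = reference + 0.1 * rng.normal(size=reference.shape)  # noisy revisit
labels = np.eye(n_places)  # one-hot place labels

w_in, w = make_reservoir(n_features, n_units=100)
w_out = fit_readout(run_reservoir(w_in, w, reference), labels)
scores = run_reservoir(w_in, w, query) @ w_out
predicted = scores.argmax(axis=1)
print("fraction of query frames matched:",
      (predicted == np.arange(n_places)).mean())
```

Only `w_out` is fitted. The reservoir weights stay at their random initial values, so training reduces to solving one linear system, while the recurrent state carries information from earlier frames in the sequence.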

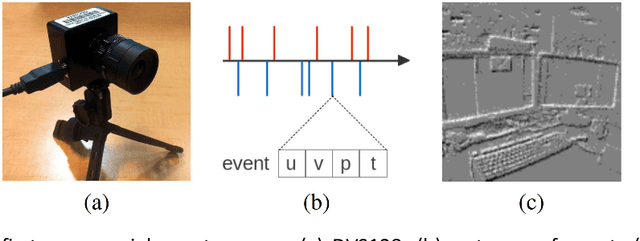

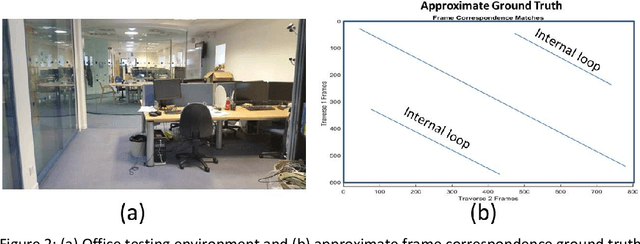

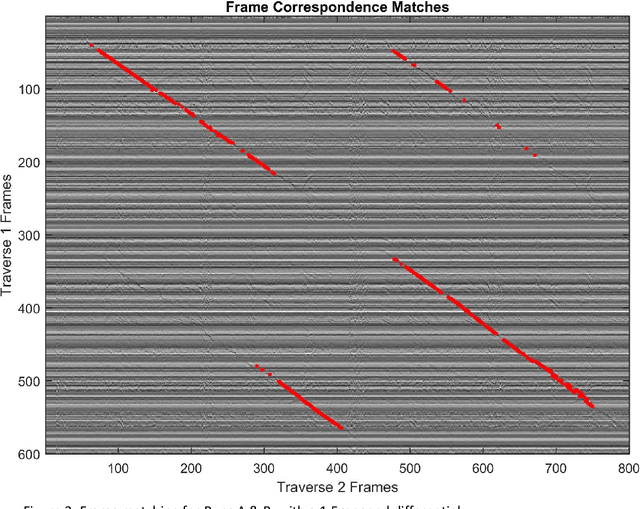

Place Recognition with Event-based Cameras and a Neural Implementation of SeqSLAM

May 18, 2015

Abstract: Event-based cameras offer much potential to the fields of robotics and computer vision, in part due to their large dynamic range and extremely high "frame rates". These attributes make them, at least in theory, particularly suitable for enabling tasks like navigation and mapping on high-speed robotic platforms under challenging lighting conditions, tasks which have been particularly challenging for traditional algorithms and camera sensors. Before these tasks become feasible, however, progress must be made towards adapting and innovating current RGB-camera-based algorithms to work with event-based cameras. In this paper we present ongoing research investigating two distinct approaches to incorporating event-based cameras for robotic navigation: the investigation of suitable place recognition / loop closure techniques, and the development of efficient neural implementations of place recognition techniques that enable place recognition with event-based cameras at very high frame rates using neuromorphic computing hardware.
