Michael Macy

Communication is All You Need: Persuasion Dataset Construction via Multi-LLM Communication

Feb 13, 2025

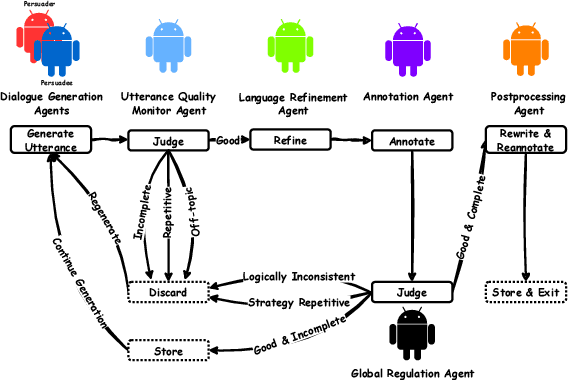

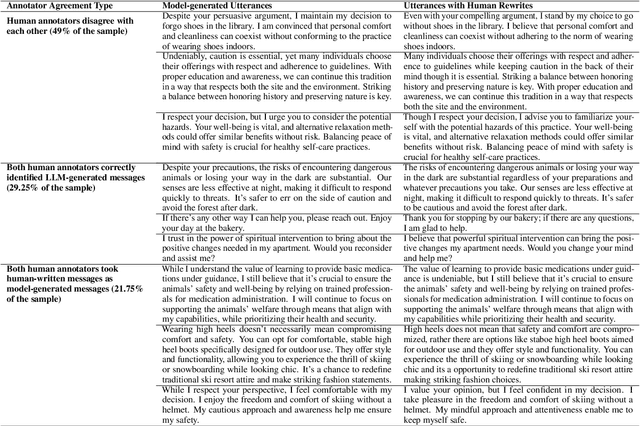

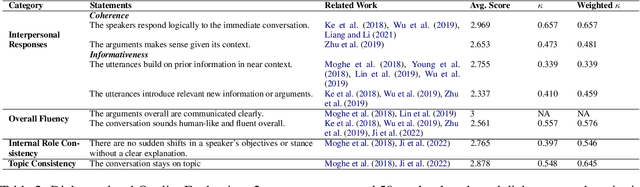

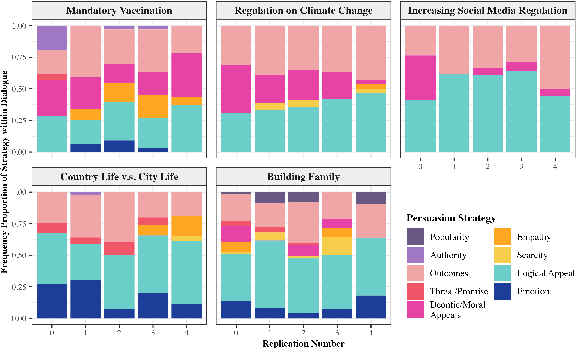

Abstract: Large Language Models (LLMs) have shown proficiency in generating persuasive dialogue, yet concerns about the fluency and sophistication of their outputs persist. This paper presents a multi-LLM communication framework designed to enhance the generation of persuasive data automatically. This framework facilitates the efficient production of high-quality, diverse linguistic content with minimal human oversight. Through extensive evaluations, we demonstrate that the generated data excels in naturalness, linguistic diversity, and the strategic use of persuasion, even in complex scenarios involving social taboos. The framework also proves adept at generalizing across novel contexts. Our results highlight the framework's potential to significantly advance research in both computational and social science domains concerning persuasive communication.

Demographic Confounding Causes Extreme Instances of Lifestyle Politics on Facebook

Jan 17, 2022

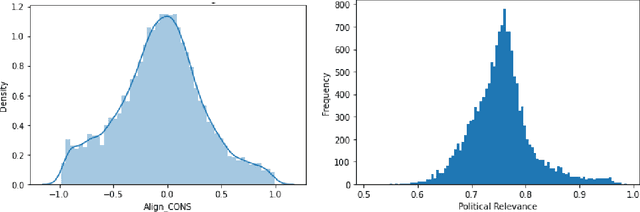

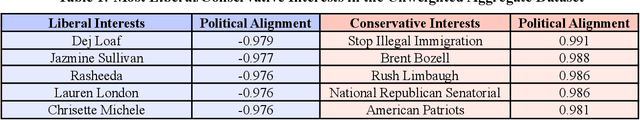

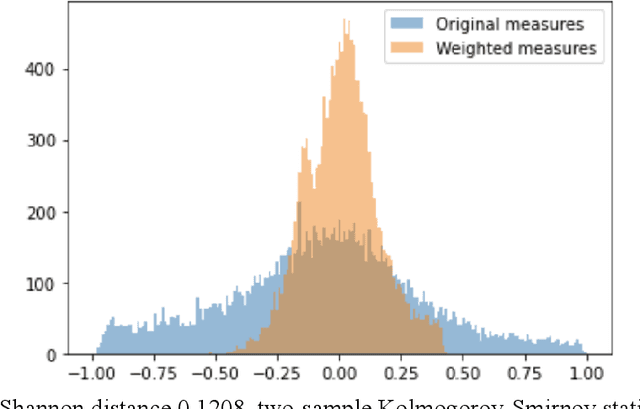

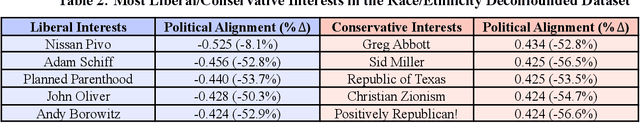

Abstract: Lifestyle politics emerge when activities that have no substantive relevance to ideology become politically aligned and polarized. Homophily and social influence are able to generate these fault lines on their own; however, social identities from demographics may serve as coordinating mechanisms through which lifestyle politics are mobilized and spread. Using a dataset of 137,661,886 observations from 299,327 Facebook interests aggregated across users of different racial/ethnic, education, age, gender, and income demographics, we find that the most extreme instances of lifestyle politics are those which are highly confounded by demographics such as race/ethnicity (e.g., Black artists and performers). After adjusting political alignment for demographic effects, lifestyle politics decreased by 27.36% toward the political "center" and demographically confounded interests were no longer among the most polarized interests. Instead, after demographic deconfounding, we found that the most liberal interests included electric cars, Planned Parenthood, and liberal satire, while the most conservative interests included the Republican Party and conservative commentators. We validate our measures of political alignment and lifestyle politics using the General Social Survey and find that similar demographic entanglements with lifestyle politics existed before social media such as Facebook were ubiquitous, giving us strong confidence that our results are not due to echo chambers or filter bubbles. Likewise, since demographic characteristics exist prior to ideological values, we argue that the demographic confounding we observe is causally responsible for the extreme instances of lifestyle politics that we find among the aggregated interests. We conclude our paper by relating our results to Simpson's paradox, cultural omnivorousness, and network autocorrelation.

Going beyond accuracy: estimating homophily in social networks using predictions

Jan 30, 2020

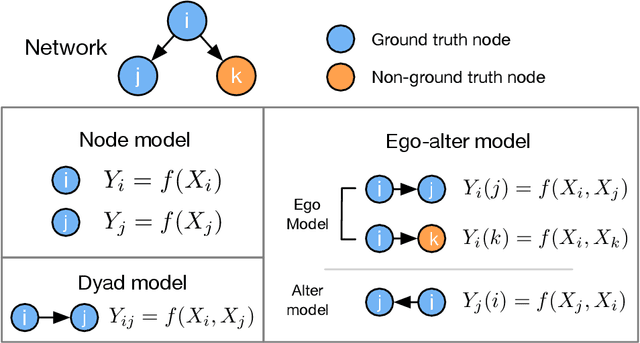

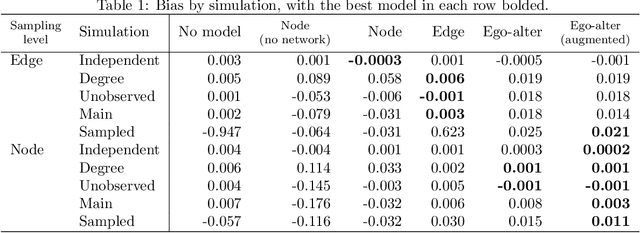

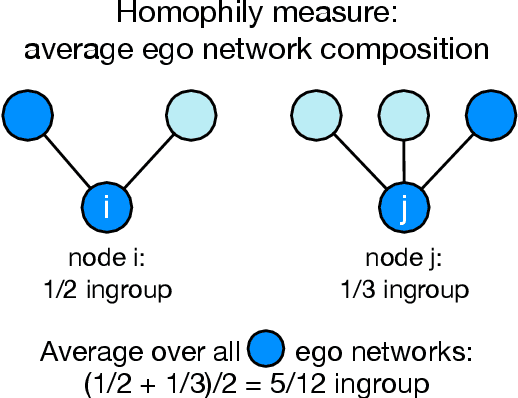

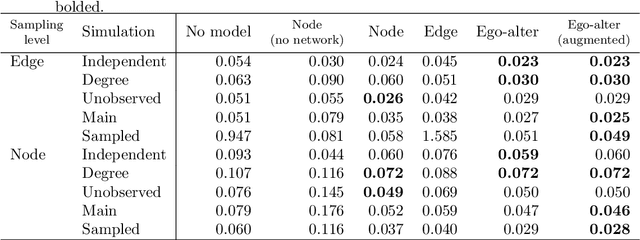

Abstract: In online social networks, it is common to use predictions of node categories to estimate measures of homophily and other relational properties. However, online social network data often lacks basic demographic information about the nodes. Researchers must rely on predicted node attributes to estimate measures of homophily, but little is known about the validity of these measures. We show that estimating homophily in a network can be viewed as a dyadic prediction problem, and that homophily estimates are unbiased when dyad-level residuals sum to zero in the network. Node-level prediction models, such as the use of names to classify ethnicity or gender, do not generally have this property and can introduce large biases into homophily estimates. Bias occurs due to error autocorrelation along dyads. Importantly, node-level classification performance is not a reliable indicator of estimation accuracy for homophily. We compare estimation strategies that make predictions at the node and dyad levels, evaluating performance in different settings. We propose a novel "ego-alter" modeling approach that outperforms standard node and dyad classification strategies. While this paper focuses on homophily, results generalize to other relational measures which aggregate predictions along the dyads in a network. We conclude with suggestions for research designs to study homophily in online networks. Code for this paper is available at https://github.com/georgeberry/autocorr.
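The claim that homophily estimates are unbiased when dyad-level residuals sum to zero can be illustrated with a toy network. The sketch below is not the paper's code (that lives in the repository linked above). It assumes a deliberately simple homophily measure, the share of ties whose endpoints have the same category, and every name, edge, and label in it is hypothetical. Two sets of predicted labels have the same node-level accuracy, but only the one whose dyad residuals cancel recovers the true share.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]
Labels = Dict[str, str]


def same_category_share(edges: List[Edge], labels: Labels) -> float:
    """Share of dyads whose two endpoints carry the same category."""
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


def mean_dyad_residual(edges: List[Edge], truth: Labels, predicted: Labels) -> float:
    """Mean over dyads of (predicted same-category) minus (true same-category)."""
    total = 0
    for u, v in edges:
        predicted_same = int(predicted[u] == predicted[v])
        true_same = int(truth[u] == truth[v])
        total += predicted_same - true_same
    return total / len(edges)


def node_accuracy(truth: Labels, predicted: Labels) -> float:
    """Share of nodes whose predicted category matches the true one."""
    return sum(1 for n in truth if predicted[n] == truth[n]) / len(truth)


# Hypothetical path network a - b - c - d with two categories, x and y.
edges = [("a", "b"), ("b", "c"), ("c", "d")]
truth = {"a": "x", "b": "x", "c": "y", "d": "y"}

# Two hypothetical prediction sets, each with exactly one misclassified node.
pred_c_wrong = {"a": "x", "b": "x", "c": "x", "d": "y"}
pred_d_wrong = {"a": "x", "b": "x", "c": "y", "d": "x"}

true_share = same_category_share(edges, truth)

for name, predicted in [("c wrong", pred_c_wrong), ("d wrong", pred_d_wrong)]:
    estimate = same_category_share(edges, predicted)
    print(
        name,
        "| node accuracy:", node_accuracy(truth, predicted),
        "| estimate - truth:", round(estimate - true_share, 3),
        "| mean dyad residual:", round(mean_dyad_residual(edges, truth, predicted), 3),
    )
```

In both cases the estimation error equals the mean dyad residual by construction. When node c is misclassified, one dyad gains a spurious match and another loses a real one, so the residuals cancel. When node d is misclassified, nothing offsets the lost match and the estimate is biased, even though node-level accuracy is identical.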

Psychological and Personality Profiles of Political Extremists

Apr 01, 2017

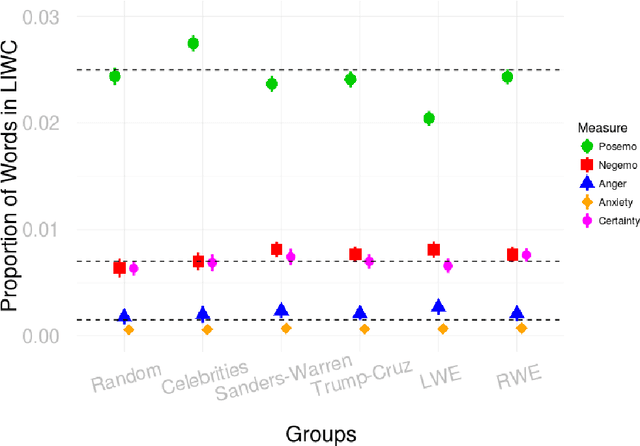

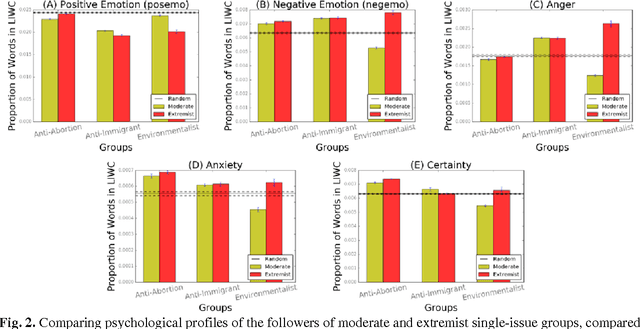

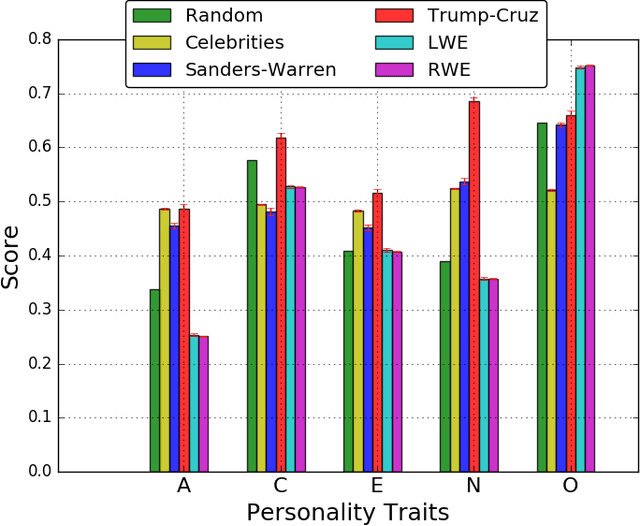

Abstract: Global recruitment into radical Islamic movements has spurred renewed interest in the appeal of political extremism. Is the appeal a rational response to material conditions or is it the expression of psychological and personality disorders associated with aggressive behavior, intolerance, conspiratorial imagination, and paranoia? Empirical answers using surveys have been limited by lack of access to extremist groups, while field studies have lacked psychological measures and failed to compare extremists with contrast groups. We revisit the debate over the appeal of extremism in the U.S. context by comparing publicly available Twitter messages written by over 355,000 political extremist followers with messages written by non-extremist U.S. users. Analysis of text-based psychological indicators supports the moral foundation theory which identifies emotion as a critical factor in determining political orientation of individuals. Extremist followers also differ from others in four of the Big Five personality traits.

Automated Hate Speech Detection and the Problem of Offensive Language

Mar 11, 2017

Abstract: A key challenge for automatic hate-speech detection on social media is the separation of hate speech from other instances of offensive language. Lexical detection methods tend to have low precision because they classify all messages containing particular terms as hate speech, and previous work using supervised learning has failed to distinguish between the two categories. We use a crowd-sourced hate speech lexicon to collect tweets containing hate speech keywords. We use crowd-sourcing to label a sample of these tweets into three categories: those containing hate speech, those containing only offensive language, and those with neither. We train a multi-class classifier to distinguish between these different categories. Close analysis of the predictions and the errors shows when we can reliably separate hate speech from other offensive language and when this differentiation is more difficult. We find that racist and homophobic tweets are more likely to be classified as hate speech but that sexist tweets are generally classified as offensive. Tweets without explicit hate keywords are also more difficult to classify.
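The low precision of lexical detection can be seen in a small worked example. This is an illustrative sketch only, not the paper's pipeline: the lexicon entries (`term_a`, `term_b`), the messages, and their labels are hypothetical placeholders, and the three label names simply mirror the categories described in the abstract.

```python
from typing import List, Set, Tuple


def lexicon_flags(message: str, lexicon: Set[str]) -> bool:
    """Flag a message if any whitespace-separated token is in the lexicon."""
    return any(token in lexicon for token in message.lower().split())


def precision_for_hate(samples: List[Tuple[str, str]], lexicon: Set[str]) -> float:
    """Share of lexicon-flagged messages whose label is 'hate'."""
    flagged = [label for text, label in samples if lexicon_flags(text, lexicon)]
    if not flagged:
        return 0.0
    return sum(1 for label in flagged if label == "hate") / len(flagged)


# Hypothetical placeholder lexicon and labelled messages.
lexicon = {"term_a", "term_b"}
samples = [
    ("term_a targets a group here", "hate"),
    ("lol term_a that game was rough", "offensive"),
    ("quoting a lyric with term_b", "offensive"),
    ("nice weather today", "neither"),
]

print("precision:", round(precision_for_hate(samples, lexicon), 3))
```

Three of the four messages contain a lexicon term, but only one of those three is labelled as hate speech, so a purely lexical rule reaches a precision of one third on this toy sample. A classifier trained on all three labels, as the abstract describes, is one way to separate the flagged messages further.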

Bots as Virtual Confederates: Design and Ethics

Nov 02, 2016

Abstract: The use of bots as virtual confederates in online field experiments holds extreme promise as a new methodological tool in computational social science. However, this potential tool comes with inherent ethical challenges. Informed consent can be difficult to obtain in many cases, and the use of confederates necessarily implies the use of deception. In this work we outline a design space for bots as virtual confederates, and we propose a set of guidelines for meeting the status quo for ethical experimentation. We draw upon examples from prior work in the CSCW community and the broader social science literature for illustration. While a handful of prior researchers have used bots in online experimentation, our work is meant to inspire future work in this area and raise awareness of the associated ethical issues.

* Forthcoming in CSCW 2017
