Metin Sezgin

An Overview of Affective Speech Synthesis and Conversion in the Deep Learning Era

Oct 06, 2022

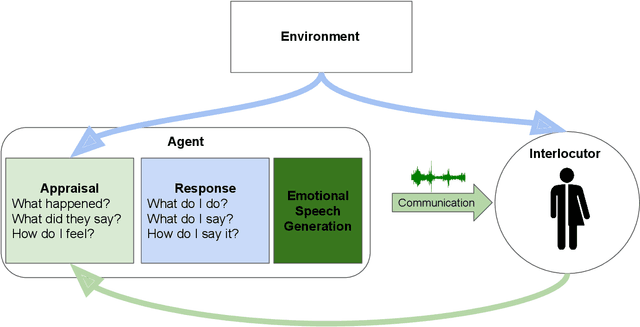

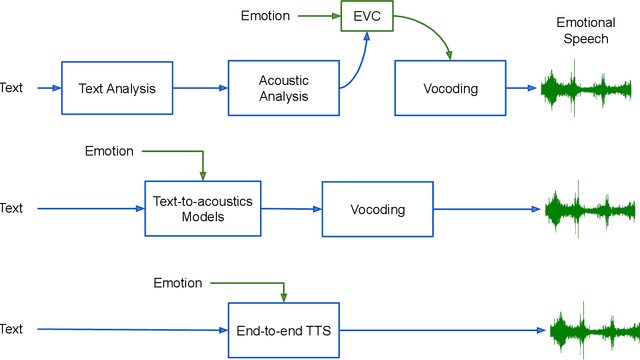

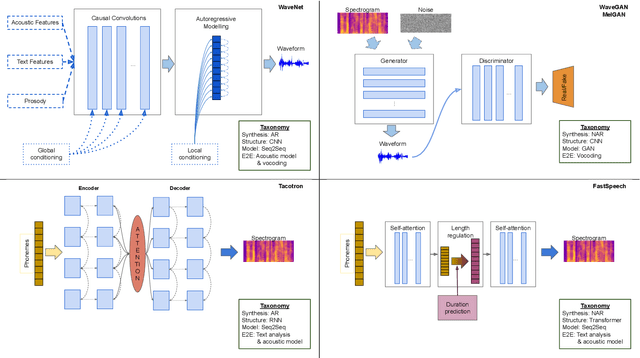

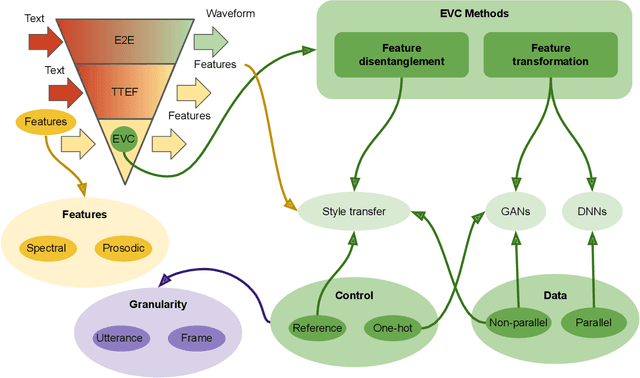

Abstract:Speech is the fundamental mode of human communication, and its synthesis has long been a core priority in human-computer interaction research. In recent years, machines have managed to master the art of generating speech that is understandable by humans. But the linguistic content of an utterance encompasses only a part of its meaning. Affect, or expressivity, has the capacity to turn speech into a medium capable of conveying intimate thoughts, feelings, and emotions -- aspects that are essential for engaging and naturalistic interpersonal communication. While the goal of imparting expressivity to synthesised utterances has so far remained elusive, following recent advances in text-to-speech synthesis, a paradigm shift is well under way in the fields of affective speech synthesis and conversion as well. Deep learning, as the technology which underlies most of the recent advances in artificial intelligence, is spearheading these efforts. In the present overview, we outline ongoing trends and summarise state-of-the-art approaches in an attempt to provide a comprehensive overview of this exciting field.

AffectON: Incorporating Affect Into Dialog Generation

Dec 12, 2020

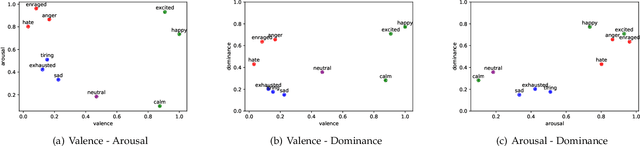

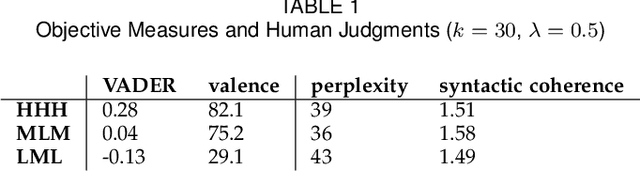

Abstract:Due to its expressivity, natural language is paramount for explicit and implicit affective state communication among humans. The same linguistic inquiry (e.g., How are you?) might induce responses with different affects depending on the affective state of the conversational partner(s) and the context of the conversation. Yet, most dialog systems do not consider affect as constitutive aspect of response generation. In this paper, we introduce AffectON, an approach for generating affective responses during inference. For generating language in a targeted affect, our approach leverages a probabilistic language model and an affective space. AffectON is language model agnostic, since it can work with probabilities generated by any language model (e.g., sequence-to-sequence models, neural language models, n-grams). Hence, it can be employed for both affective dialog and affective language generation. We experimented with affective dialog generation and evaluated the generated text objectively and subjectively. For the subjective part of the evaluation, we designed a custom user interface for rating and provided recommendations for the design of such interfaces. The results, both subjective and objective demonstrate that our approach is successful in pulling the generated language toward the targeted affect, with little sacrifice in syntactic coherence.

Proceedings of eNTERFACE 2015 Workshop on Intelligent Interfaces

Jan 19, 2018

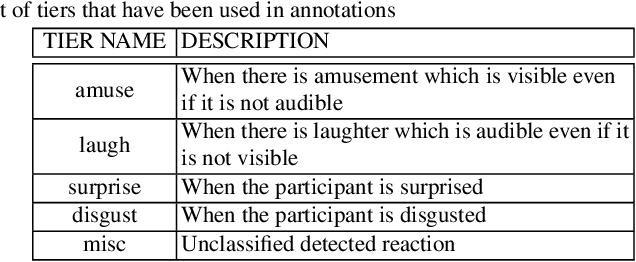

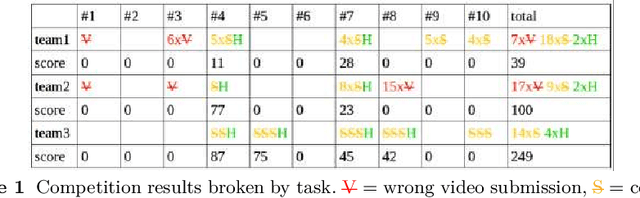

Abstract:The 11th Summer Workshop on Multimodal Interfaces eNTERFACE 2015 was hosted by the Numediart Institute of Creative Technologies of the University of Mons from August 10th to September 2015. During the four weeks, students and researchers from all over the world came together in the Numediart Institute of the University of Mons to work on eight selected projects structured around intelligent interfaces. Eight projects were selected and their reports are shown here.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge