Rebecca Fiebrink

Learning to See: You Are What You See

Feb 28, 2020

Abstract: The authors present a visual instrument developed as part of the creation of the artwork Learning to See. The artwork explores bias in artificial neural networks and provides mechanisms for manipulating specifically trained real-world representations. Exploring these representations acts as a metaphor for the process of developing a visual understanding and/or visual vocabulary of the world. The representations can be explored and manipulated in real time, and have been produced so as to reflect specific creative perspectives that call into question the relationship between how artificial neural networks and how humans may construct meaning.

* Presented as an Art Paper at SIGGRAPH 2019

Deep Meditations: Controlled navigation of latent space

Feb 27, 2020

Abstract: We introduce a method that allows users to creatively explore and navigate the vast latent spaces of deep generative models. Specifically, our method enables users to discover and design trajectories in these high-dimensional spaces, to construct stories, and to produce time-based media such as videos, with meaningful control over narrative. Our goal is to encourage and aid the use of deep generative models as a medium for creative expression and storytelling with meaningful human control. Our method is analogous to traditional video production pipelines in that we use a conventional non-linear video editor with proxy clips, and conform with arrays of latent space vectors. Examples can be seen at http://deepmeditations.ai.

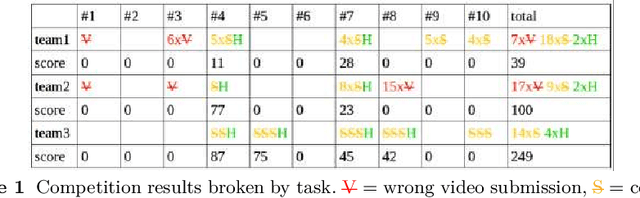

Proceedings of eNTERFACE 2015 Workshop on Intelligent Interfaces

Jan 19, 2018

Abstract: The 11th Summer Workshop on Multimodal Interfaces, eNTERFACE 2015, was hosted by the Numediart Institute of Creative Technologies of the University of Mons from August 10th to September 2015. During the four weeks, students and researchers from all over the world came together at the Numediart Institute to work on eight selected projects structured around intelligent interfaces. The reports of these eight projects are presented here.

The Machine Learning Algorithm as Creative Musical Tool

Nov 01, 2016

Abstract: Machine learning is the capacity of a computational system to learn structures from datasets in order to make predictions on newly seen data. Such an approach offers a significant advantage in music scenarios in which musicians can teach the system to learn an idiosyncratic style, or can break the rules to explore the system's capacity in unexpected ways. In this chapter we draw on music, machine learning, and human-computer interaction to elucidate an understanding of machine learning algorithms as creative tools for music and the sonic arts. We motivate a new understanding of learning algorithms as human-computer interfaces. We show that, like other interfaces, learning algorithms can be characterised by the ways their affordances intersect with goals of human users. We also argue that the nature of interaction between users and algorithms impacts the usability and usefulness of those algorithms in profound ways. This human-centred view of machine learning motivates our concluding discussion of what it means to employ machine learning as a creative tool.
