Mengyun Xu

A Map-matching Algorithm with Extraction of Multi-group Information for Low-frequency Data

Sep 18, 2022

Abstract: The growing use of probe vehicles generates a huge amount of GNSS data. Because of the limits of satellite positioning technology, further improving map-matching accuracy is challenging, especially for low-frequency trajectories. When matching a trajectory, the ego vehicle's spatial-temporal information from the present trip is the most useful and requires the least data. In addition, a large amount of other data is available, e.g., other vehicles' states and past prediction results, but it is hard to extract useful information from it for matching maps and inferring paths. Most map-matching studies use only the ego vehicle's data and ignore other vehicles' data. Motivated by this gap, this paper designs a new map-matching method that makes full use of "big data". We first sort all data into four groups according to their spatial and temporal distance from the probe currently being matched, which allows us to rank them by usefulness. Then we design three different methods to extract valuable information (scores) from them: a score for speed and bearing, a score for historical usage, and a score for traffic state using the spectral graph Markov neural network. Finally, we use a modified top-K shortest-path method to search for candidate paths within an elliptical region and then use the fused score to infer the path (projected location). We test the proposed method against baseline algorithms using a real-world dataset in China. The results show that all scoring methods can enhance map-matching accuracy. Furthermore, our method outperforms the others, especially when the GNSS probing frequency is less than 0.01 Hz.
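The abstract does not say how the elliptical search region is defined. A common construction, assumed here purely for illustration, places the two foci at consecutive GNSS probes and bounds the sum of distances to both foci by the distance the vehicle could cover between them (maximum speed times sampling interval). The function names, the `max_speed_mps` parameter, and the haversine distance are all hypothetical choices, not taken from the paper.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, used by the haversine formula


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def in_ellipse(node, probe_a, probe_b, max_speed_mps, dt_s):
    """True if `node` lies inside the ellipse whose foci are the two probes.

    Assumption: the major-axis length equals max_speed_mps * dt_s, i.e. the
    farthest the vehicle could have travelled between the two probes.
    """
    budget_m = max_speed_mps * dt_s
    detour_m = haversine_m(*node, *probe_a) + haversine_m(*node, *probe_b)
    return detour_m <= budget_m


def filter_nodes(nodes, probe_a, probe_b, max_speed_mps, dt_s):
    """Keep only the road-network nodes that fall inside the ellipse."""
    return {
        node_id: coord
        for node_id, coord in nodes.items()
        if in_ellipse(coord, probe_a, probe_b, max_speed_mps, dt_s)
    }
```

Under these assumptions, a path search such as top-K shortest paths would only need to expand nodes returned by `filter_nodes`, which shrinks the search space considerably when the sampling interval is long.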

Learning Orientation Distributions for Object Pose Estimation

Jul 02, 2020

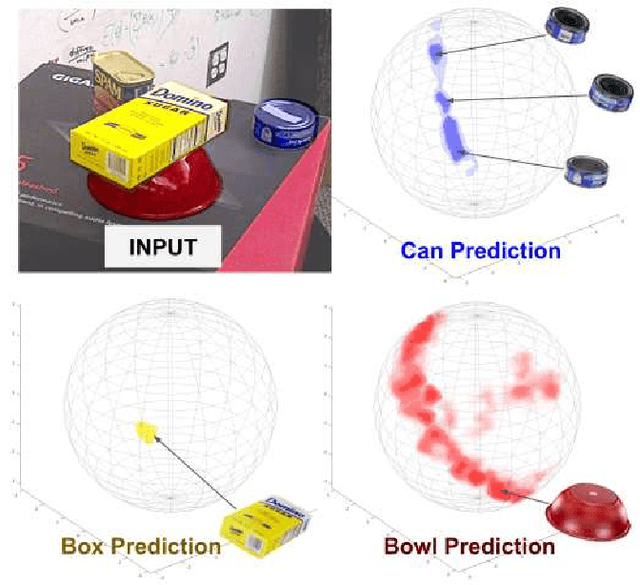

Abstract: For robots to operate robustly in the real world, they should be aware of their uncertainty. However, most methods for object pose estimation return a single point estimate of the object's pose. In this work, we propose two learned methods for estimating a distribution over an object's orientation. Our methods take into account both the inaccuracies in the pose estimation and the object symmetries. Our first method, which regresses from deep learned features to an isotropic Bingham distribution, gives the best performance for orientation distribution estimation for non-symmetric objects. Our second method learns to compare deep features and generates a non-parametric histogram distribution. This method gives the best performance on objects with unknown symmetries, accurately modeling both symmetric and non-symmetric objects, without any requirement of symmetry annotation. We show that both of these methods can be used to augment an existing pose estimator. Our evaluation compares our methods to a large number of baseline approaches for uncertainty estimation across a variety of different types of objects.
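As a rough illustration of what an isotropic Bingham distribution over unit quaternions looks like, the sketch below evaluates its unnormalized log density. It assumes the standard Bingham form p(q) ∝ exp(qᵀ M Z Mᵀ q) with Z = diag(0, -λ, -λ, -λ), which for unit q reduces to -λ(1 - (m·q)²), where m is the mode. The function name and the single `concentration` parameter are hypothetical; the abstract does not give the paper's parameterization, and the normalizing constant is omitted.

```python
import math


def isotropic_bingham_log_density(q, mode, concentration):
    """Unnormalized log density of an isotropic Bingham distribution.

    q, mode: 4-element quaternions (normalized internally).
    concentration: non-negative scalar; larger values give a sharper peak.
    """
    norm_q = math.sqrt(sum(c * c for c in q))
    norm_m = math.sqrt(sum(c * c for c in mode))
    dot = sum(a * b for a, b in zip(q, mode)) / (norm_q * norm_m)
    # The squared dot product makes the density identical for q and -q,
    # which matches the fact that both represent the same rotation.
    return -concentration * (1.0 - dot * dot)
```

The density peaks at the mode and at its antipode, and falls to its minimum for quaternions orthogonal to the mode, so a single scalar controls how uncertain the orientation estimate is.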

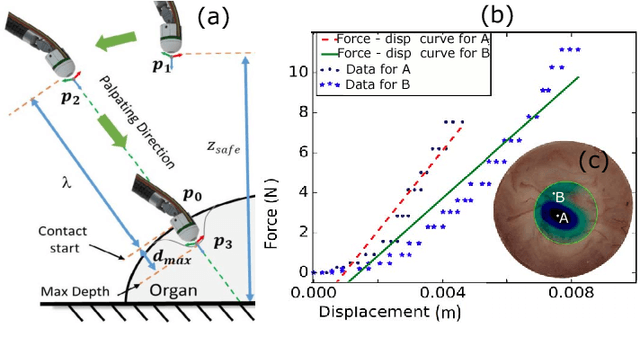

A surgical system for automatic registration, stiffness mapping and dynamic image overlay

Nov 23, 2017

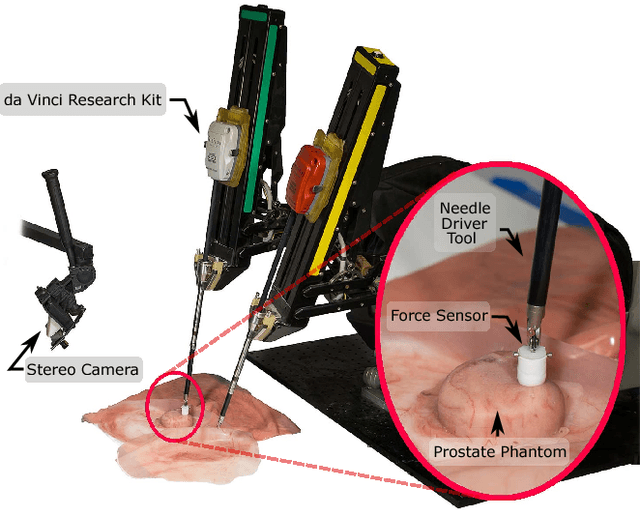

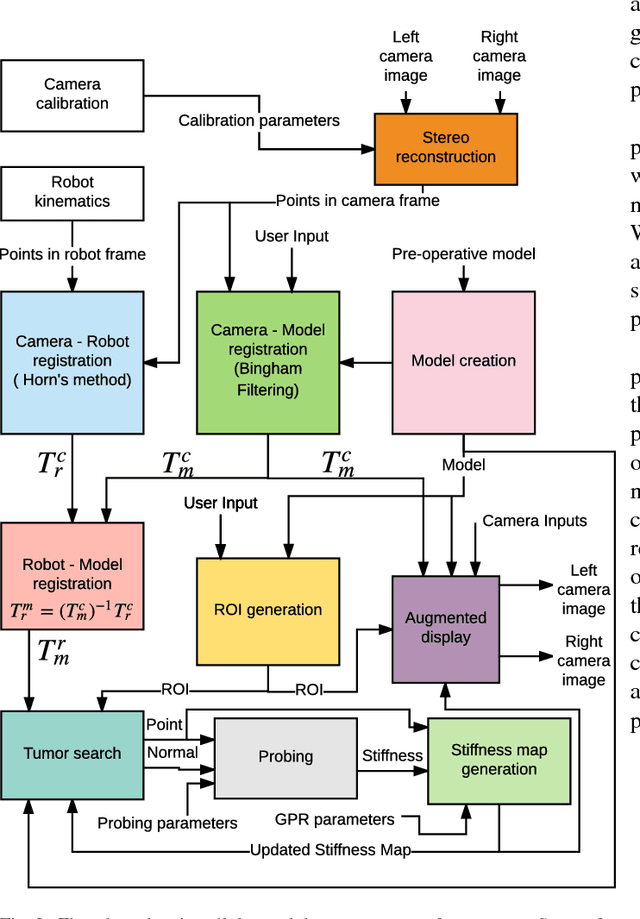

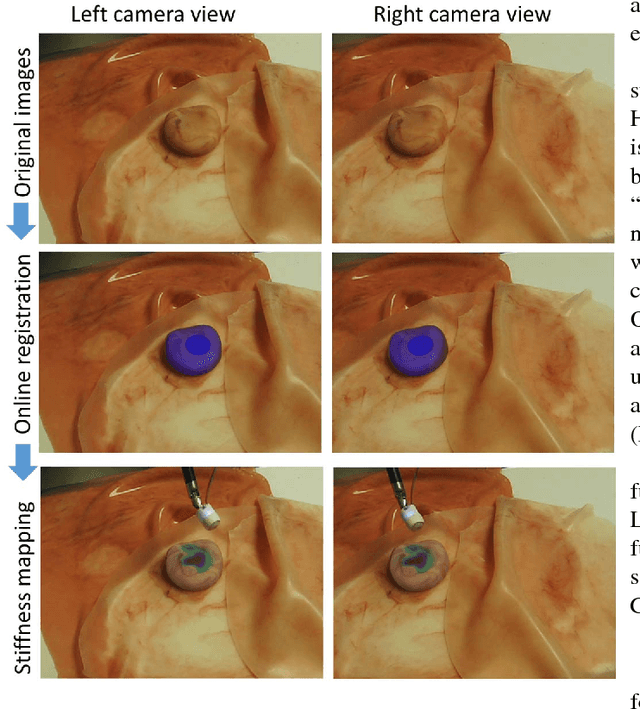

Abstract: In this paper, we develop a surgical system using the da Vinci Research Kit (dVRK) that is capable of autonomously searching for tumors and dynamically displaying the tumor location using augmented reality. Such a system has the potential to quickly reveal the location and shape of tumors and to visually overlay that information, reducing the cognitive overload of the surgeon. We believe that our approach is one of the first to incorporate state-of-the-art methods in registration, force sensing, and tumor localization into a unified surgical system. First, the preoperative model is registered to the intraoperative scene using a Bingham distribution-based filtering approach. An active level set estimation is then used to find the location and shape of the tumors. We use a recently developed miniature force sensor to perform the palpation. The estimated stiffness map is then dynamically overlaid onto the registered preoperative model of the organ. We demonstrate the efficacy of our system by performing experiments on phantom prostate models with embedded stiff inclusions.
