Nicolas Zevallos

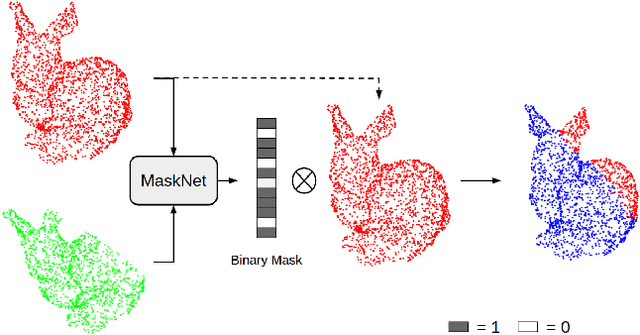

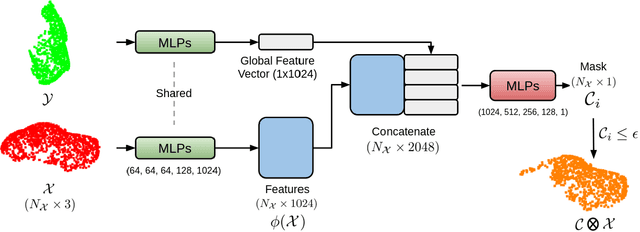

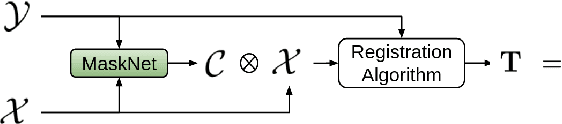

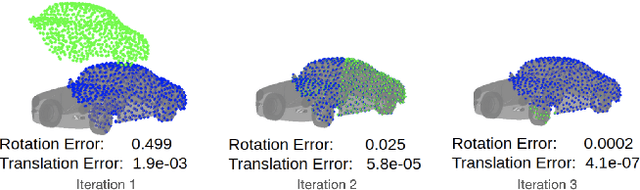

MaskNet: A Fully-Convolutional Network to Estimate Inlier Points

Oct 19, 2020

Abstract: Point clouds have grown in importance in the way computers perceive the world. From LIDAR sensors in autonomous cars and drones to the time-of-flight and stereo vision systems in our phones, point clouds are everywhere. Despite their ubiquity, real-world point clouds are often missing points because of sensor limitations or occlusions, or contain extraneous points from sensor noise or artifacts. These problems challenge algorithms that require computing correspondences between a pair of point clouds. Therefore, this paper presents a fully-convolutional neural network that identifies which points in one point cloud are most similar (inliers) to the points in another. We show improvements in learning-based and classical point cloud registration approaches when retrofitted with our network. We demonstrate these improvements on synthetic and real-world datasets. Finally, our network produces impressive results on test datasets that were unseen during training, thus exhibiting generalizability. Code and videos are available at https://github.com/vinits5/masknet
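
To make the idea of an inlier mask concrete, the sketch below marks which points of one cloud have a close counterpart in another. It is not the network described in the abstract: a plain nearest-neighbour distance threshold stands in for the learned mask, and the function name `inlier_mask`, the threshold `tau`, and the toy data are all hypothetical.

```python
import numpy as np

def inlier_mask(template, source, tau=0.05):
    """Boolean mask over `template`: True where a template point has
    some source point within distance `tau` (a hypothetical threshold)."""
    # Pairwise squared distances, shape (N_template, N_source).
    diff = template[:, None, :] - source[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # A template point is an inlier if its nearest source point is close.
    return d2.min(axis=1) <= tau ** 2

# Toy data: the source is a slightly shifted copy of the first three
# template points, so the fourth template point has no counterpart.
template = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [5.0, 5.0, 5.0]])
source = template[:3] + 0.01
mask = inlier_mask(template, source)
inliers = template[mask]  # points that could be passed on to registration
```

A fixed threshold like this only works when the two clouds are already roughly aligned, which is presumably part of the motivation for learning the mask instead.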

Trajectory-Optimized Sensing for Active Search of Tissue Abnormalities in Robotic Surgery

May 16, 2018

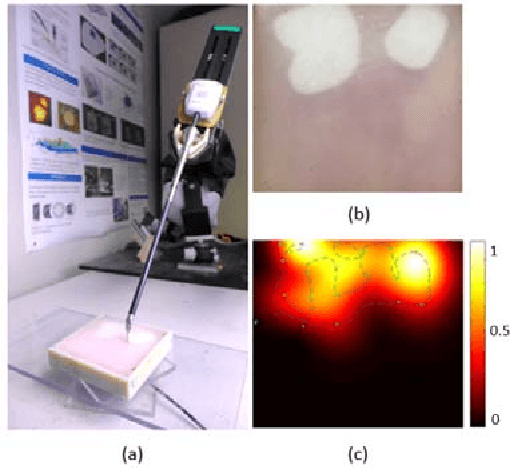

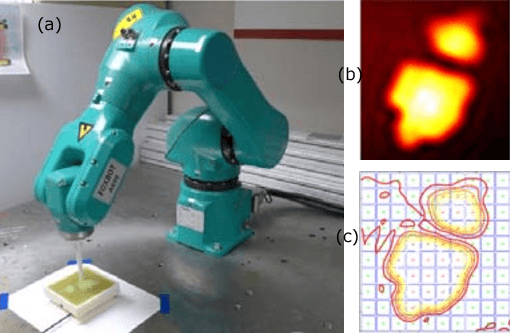

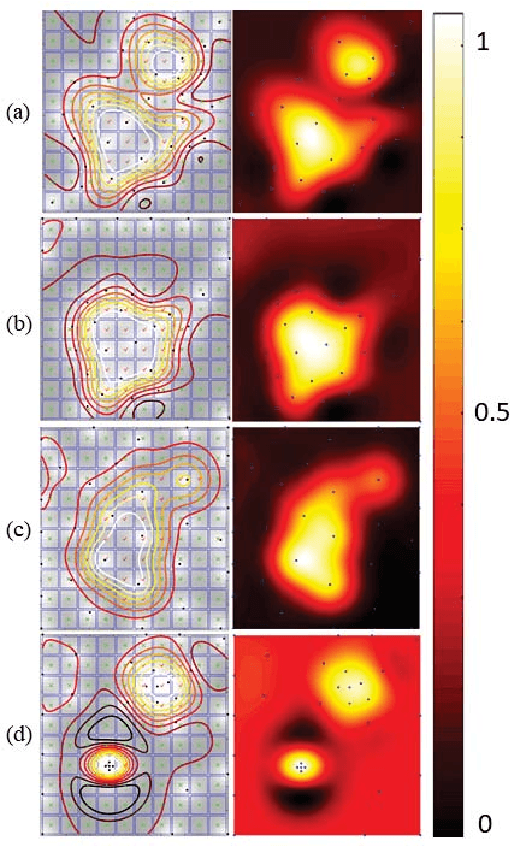

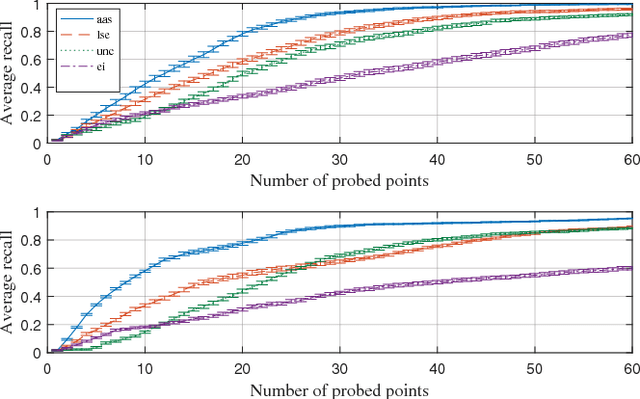

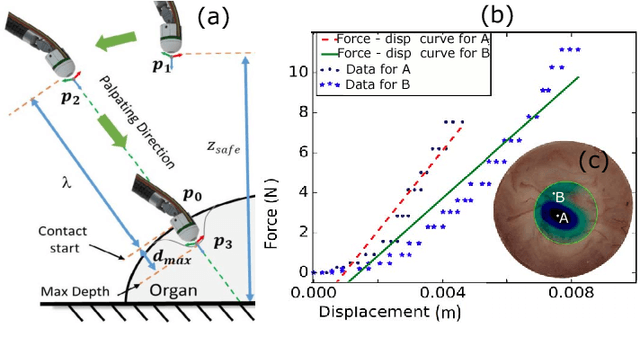

Abstract: In this work, we develop an approach for guiding robots to automatically localize and find the shapes of tumors and other stiff inclusions present in the anatomy. Our approach uses Gaussian processes to model the stiffness distribution and active learning to direct the palpation path of the robot. The palpation paths are chosen such that they maximize an acquisition function provided by an active learning algorithm. Our approach provides the flexibility to avoid obstacles in the robot's path, incorporate uncertainties in robot position and sensor measurements, and include prior information about the location of stiff inclusions, all while respecting the robot kinematics. To the best of our knowledge, this is the first work in the literature that considers all of the above conditions while localizing tumors. The proposed framework is evaluated via simulation and experimentation on three different robot platforms: a 6-DoF industrial arm, the da Vinci Research Kit (dVRK), and the Insertable Robotic Effector Platform (IREP). Results show that our approach can accurately estimate the locations and boundaries of the stiff inclusions while reducing exploration time.
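
The combination of a Gaussian process model and an acquisition function can be illustrated with a minimal one-dimensional sketch. The abstract does not say which acquisition function is used, so the upper confidence bound (UCB) below is an assumption, as are the kernel, its length scale, the unit prior variance, and every function name. The sketch also picks a single next probe location rather than optimizing a whole palpation trajectory.

```python
import numpy as np

def rbf(a, b, length=0.2):
    """Squared-exponential kernel between 1-D location arrays a and b."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-4):
    """GP posterior mean and standard deviation at x_query
    (zero prior mean, unit prior variance)."""
    K = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    Ks = rbf(x_query, x_obs)
    mean = Ks @ np.linalg.solve(K, y_obs)
    # Posterior variance: k(x, x) - k_s^T K^{-1} k_s for each query point.
    var = 1.0 - np.sum(Ks * np.linalg.solve(K, Ks.T).T, axis=1)
    return mean, np.sqrt(np.clip(var, 0.0, None))

def next_probe(x_obs, y_obs, candidates, beta=2.0):
    """Pick the candidate maximizing the UCB acquisition mean + beta * std."""
    mean, std = gp_posterior(x_obs, y_obs, candidates)
    return candidates[np.argmax(mean + beta * std)]

# Two stiffness readings of zero at the ends of a unit interval:
# the most uncertain candidate is the midpoint.
x_obs = np.array([0.0, 1.0])
y_obs = np.array([0.0, 0.0])
candidates = np.linspace(0.0, 1.0, 11)
probe = next_probe(x_obs, y_obs, candidates)
```

With flat observations the mean term vanishes and the choice is driven purely by uncertainty. Once a stiff region is measured, the mean term pulls subsequent probes toward it, which is the exploration and exploitation trade-off that `beta` controls.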

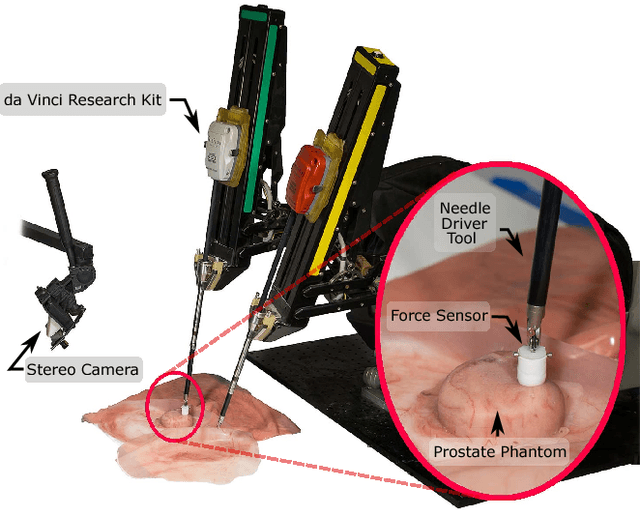

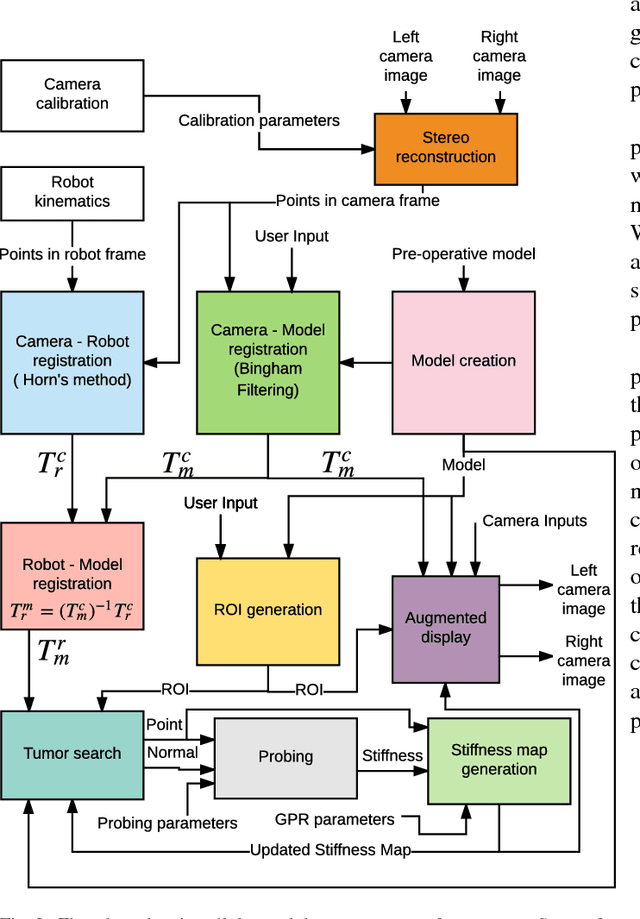

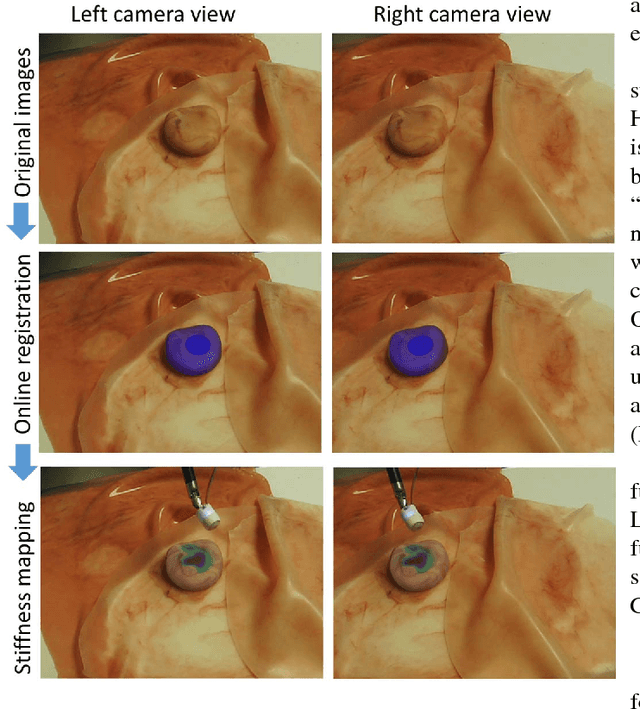

A surgical system for automatic registration, stiffness mapping and dynamic image overlay

Nov 23, 2017

Abstract: In this paper, we develop a surgical system using the da Vinci Research Kit (dVRK) that is capable of autonomously searching for tumors and dynamically displaying the tumor location using augmented reality. Such a system has the potential to quickly reveal the location and shape of tumors and visually overlay that information to reduce the cognitive overload of the surgeon. We believe that our approach is one of the first to incorporate state-of-the-art methods in registration, force sensing, and tumor localization into a unified surgical system. First, the preoperative model is registered to the intra-operative scene using a Bingham distribution-based filtering approach. Active level set estimation is then used to find the location and shape of the tumors. We use a recently developed miniature force sensor to perform the palpation. The estimated stiffness map is then dynamically overlaid onto the registered preoperative model of the organ. We demonstrate the efficacy of our system by performing experiments on phantom prostate models with embedded stiff inclusions.
