Meitang Li

VTool-R1: VLMs Learn to Think with Images via Reinforcement Learning on Multimodal Tool Use

May 25, 2025

Abstract: Reinforcement Learning Finetuning (RFT) has significantly advanced the reasoning capabilities of large language models (LLMs) by enabling long chains of thought, self-correction, and effective tool use. While recent work attempts to extend RFT to vision-language models (VLMs), these efforts largely produce text-only reasoning conditioned on static image inputs, falling short of true multimodal reasoning in the response. In contrast, test-time methods like Visual Sketchpad incorporate visual steps but lack training mechanisms. We introduce VTool-R1, the first framework that trains VLMs to generate multimodal chains of thought by interleaving text and intermediate visual reasoning steps. VTool-R1 integrates Python-based visual editing tools into the RFT process, enabling VLMs to learn when and how to generate visual reasoning steps that benefit final reasoning. Trained with outcome-based rewards tied to task accuracy, our approach elicits strategic visual tool use for reasoning without relying on process-based supervision. Experiments on structured visual question answering over charts and tables show that VTool-R1 enhances reasoning performance by teaching VLMs to "think with images" and generate multimodal chains of thought with tools.

Cache-of-Thought: Master-Apprentice Framework for Cost-Effective Vision Language Model Inference

Feb 27, 2025

Abstract: Vision Language Models (VLMs) have achieved remarkable success in a wide range of vision applications of increasing complexity and scale, yet choosing the right VLM size involves a trade-off between response quality and cost. While smaller VLMs are cheaper to run, they typically produce responses only marginally better than random guessing on benchmarks such as MMMU. In this paper, we propose Cache of Thought (CoT), a master-apprentice framework for collaborative inference between large and small VLMs. CoT manages high-quality query results from large VLMs (master) in a cache, which are then selected via a novel multimodal retrieval and in-context learning to aid the performance of small VLMs (apprentice). We extensively evaluate CoT on various widely recognized and challenging general VQA benchmarks, and show that CoT increases overall VQA performance by up to 7.7% under the same budget, and specifically boosts the performance of apprentice VLMs by up to 36.6%.

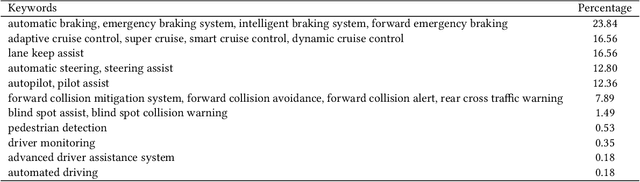

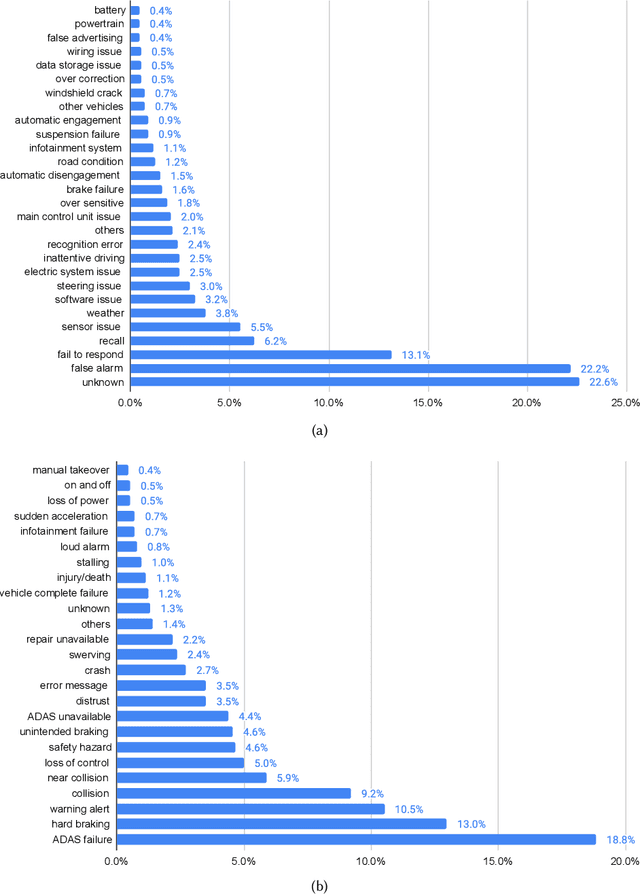

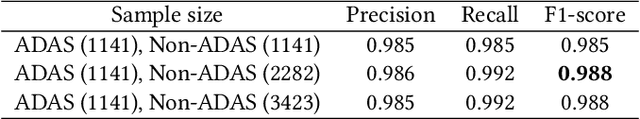

Cause-and-Effect Analysis of ADAS: A Comparison Study between Literature Review and Complaint Data

Jul 30, 2022

Abstract: Advanced driver assistance systems (ADAS) are designed to improve vehicle safety. However, it is difficult to achieve such benefits without understanding the causes and limitations of the current ADAS and their possible solutions. This study 1) investigated the limitations and solutions of ADAS through a literature review, 2) identified the causes and effects of ADAS through consumer complaints using natural language processing models, and 3) compared the major differences between the two. These two lines of research identified similar categories of ADAS causes, including human factors, environmental factors, and vehicle factors. However, academic research focused more on human factors of ADAS issues and proposed advanced algorithms to mitigate such issues, while drivers complained more about vehicle factors of ADAS failures, which led to associated top consequences. The findings from these two sources tend to complement each other and provide important implications for the improvement of ADAS in the future.
