Jackie Ayoub

Building Trust Profiles in Conditionally Automated Driving

Jun 28, 2023

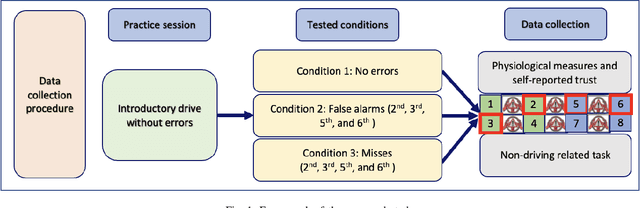

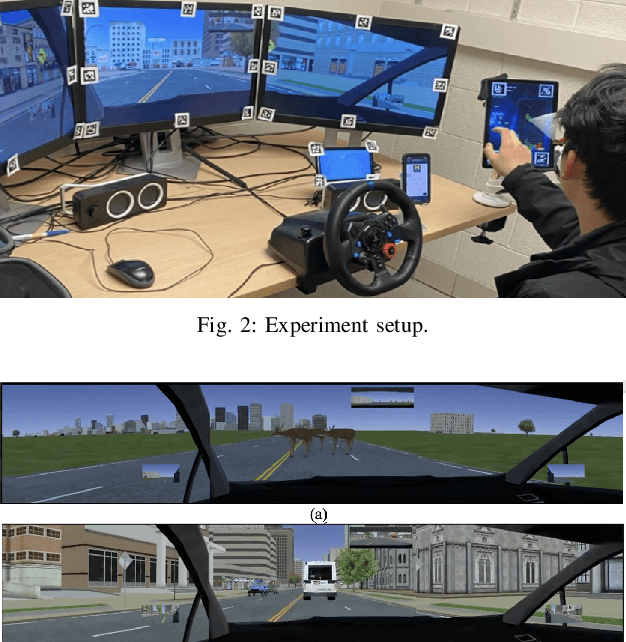

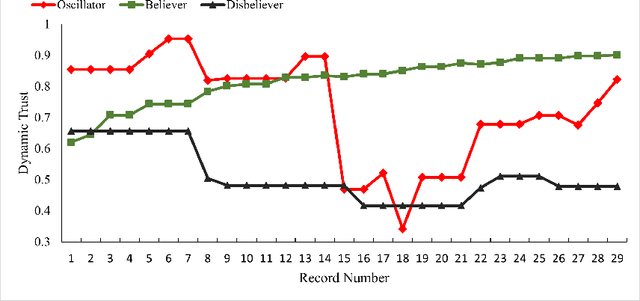

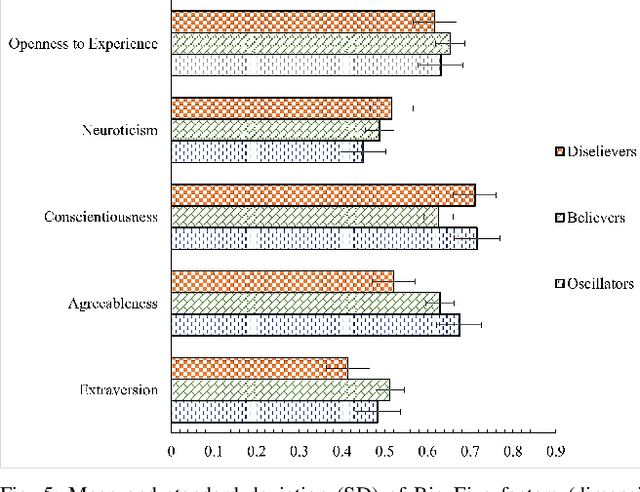

Abstract: Trust is crucial for ensuring the safety, security, and widespread adoption of automated vehicles (AVs), and if trust is lacking, drivers and the public may not be willing to use them. This research seeks to investigate trust profiles in order to create personalized experiences for drivers in AVs. This technique helps in better understanding drivers' dynamic trust from a persona's perspective. The study was conducted in a driving simulator where participants were requested to take over control from automated driving in three conditions (a control condition, a false alarm condition, and a miss condition) with eight takeover requests (TORs) in different scenarios. Drivers' dispositional trust, initial learned trust, dynamic trust, personality, and emotions were measured. We identified three trust profiles (i.e., believers, oscillators, and disbelievers) using a K-means clustering model. To validate this model, we built a multinomial logistic regression model based on a SHAP explainer that selected the most important features to predict the trust profiles with an F1-score of 0.90 and an accuracy of 0.89. We also discussed how different individual factors influenced trust profiles, which helped us better understand trust dynamics from a persona's perspective. Our findings have important implications for designing a personalized in-vehicle trust monitoring and calibrating system to adjust drivers' trust levels in order to improve safety and experience in automated driving.

Cause-and-Effect Analysis of ADAS: A Comparison Study between Literature Review and Complaint Data

Jul 30, 2022

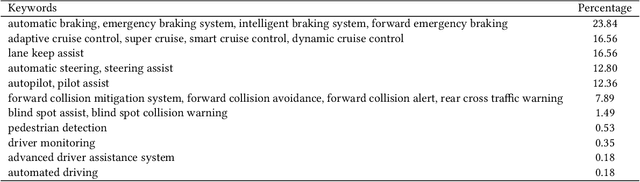

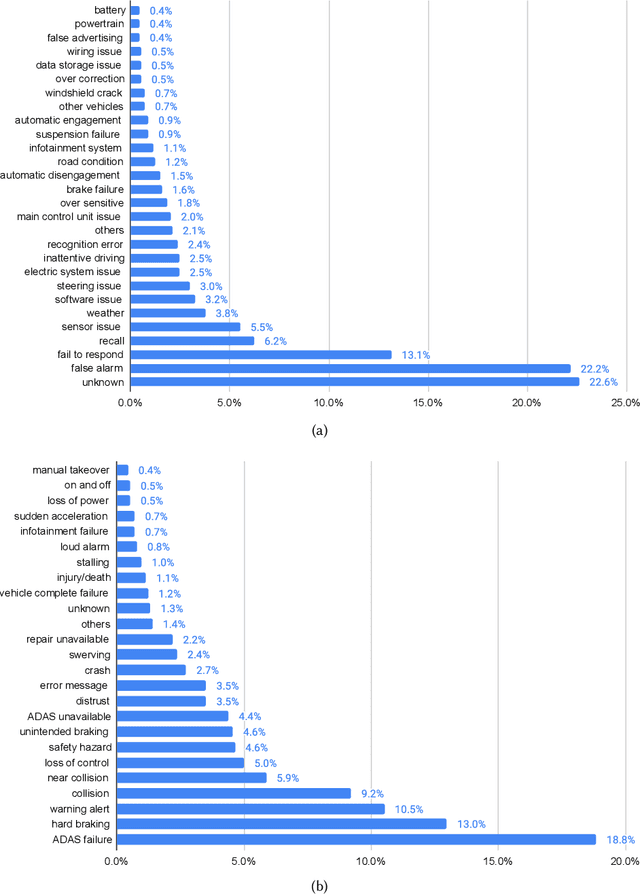

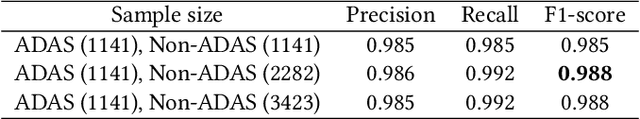

Abstract: Advanced driver assistance systems (ADAS) are designed to improve vehicle safety. However, it is difficult to achieve such benefits without understanding the causes and limitations of the current ADAS and their possible solutions. This study 1) investigated the limitations and solutions of ADAS through a literature review, 2) identified the causes and effects of ADAS through consumer complaints using natural language processing models, and 3) compared the major differences between the two. These two lines of research identified similar categories of ADAS causes, including human factors, environmental factors, and vehicle factors. However, academic research focused more on human factors of ADAS issues and proposed advanced algorithms to mitigate such issues, while drivers complained more about vehicle factors of ADAS failures, which led to associated top consequences. The findings from these two sources tend to complement each other and provide important implications for the improvement of ADAS in the future.

An Investigation of Drivers' Dynamic Situational Trust in Conditionally Automated Driving

Dec 08, 2021

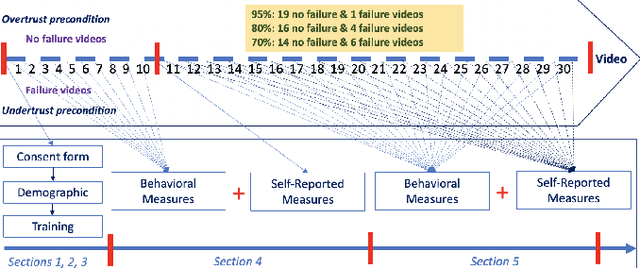

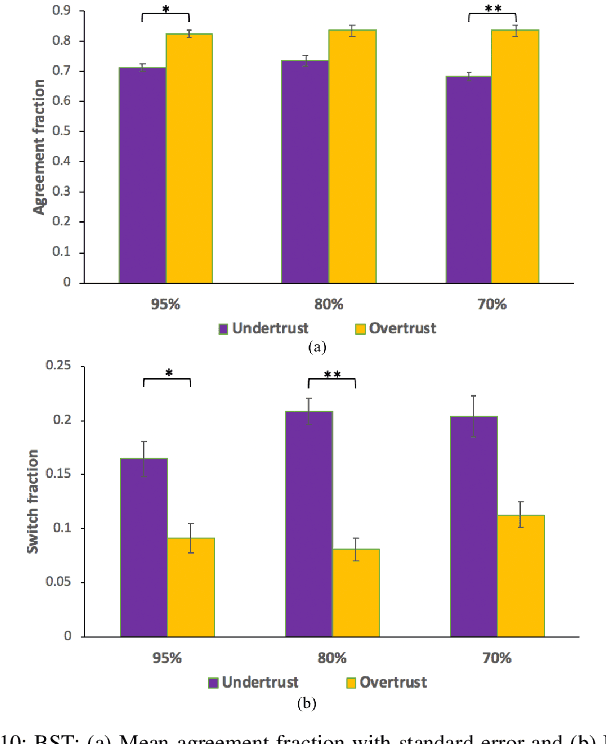

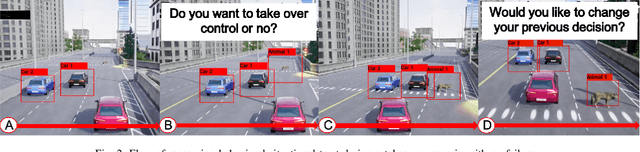

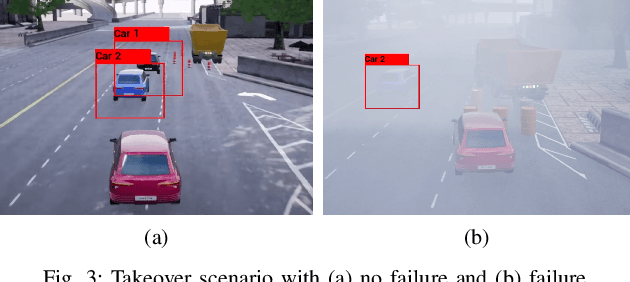

Abstract: Understanding how trust is built over time is essential, as trust plays an important role in the acceptance and adoption of automated vehicles (AVs). This study aimed to investigate the effects of system performance and participants' trust preconditions on dynamic situational trust during takeover transitions. We evaluated the dynamic situational trust of 42 participants using both self-reported and behavioral measures while watching 30 videos with takeover scenarios. The study was a 3 by 2 mixed-subjects design, where the within-subjects variable was the system performance (i.e., accuracy levels of 95%, 80%, and 70%) and the between-subjects variable was the preconditions of the participants' trust (i.e., overtrust and undertrust). Our results showed that participants quickly adjusted their self-reported situational trust (SST) levels, which were consistent with different accuracy levels of system performance in both trust preconditions. However, participants' behavioral situational trust (BST) was affected by their trust preconditions across different accuracy levels. For instance, the overtrust precondition significantly increased the agreement fraction compared to the undertrust precondition. The undertrust precondition significantly decreased the switch fraction compared to the overtrust precondition. These results have important implications for designing an in-vehicle trust calibration system for conditional AVs.

Predicting Driver Takeover Time in Conditionally Automated Driving

Jul 20, 2021

Abstract: It is extremely important to ensure a safe takeover transition in conditionally automated driving. One of the critical factors that quantifies the safe takeover transition is takeover time. Previous studies identified the effects of many factors on takeover time, such as takeover lead time, non-driving tasks, modalities of the takeover requests (TORs), and scenario urgency. However, there is a lack of research on predicting takeover time by considering all of these factors at the same time. Toward this end, we used eXtreme Gradient Boosting (XGBoost) to predict the takeover time using a dataset from a meta-analysis study [1]. In addition, we used SHAP (SHapley Additive exPlanations) to analyze and explain the effects of the predictors on takeover time. We identified the seven most critical predictors that resulted in the best prediction performance. Their main effects and interaction effects on takeover time were examined. The results showed that the proposed approach provided both good performance and explainability. Our findings have implications for the design of in-vehicle monitoring and alert systems to facilitate the interaction between the drivers and the automated vehicle.

Combat COVID-19 Infodemic Using Explainable Natural Language Processing Models

Mar 01, 2021

Abstract: Misinformation about COVID-19 is prevalent on social media as the pandemic unfolds, and the associated risks are extremely high. Thus, it is critical to detect and combat such misinformation. Recently, deep learning models using natural language processing techniques, such as BERT (Bidirectional Encoder Representations from Transformers), have achieved great success in detecting misinformation. In this paper, we proposed an explainable natural language processing model to combat misinformation about COVID-19, based on DistilBERT and SHAP (SHapley Additive exPlanations), which were chosen for their efficiency and effectiveness. First, we collected a dataset of 984 claims about COVID-19 with fact checking. By augmenting the data using back-translation, we doubled the sample size of the dataset, and the DistilBERT model was able to obtain good performance (accuracy: 0.972; area under the curve: 0.993) in detecting misinformation about COVID-19. Our model was also tested on a larger dataset for AAAI2021 - COVID-19 Fake News Detection Shared Task and obtained good performance (accuracy: 0.938; area under the curve: 0.985). The performance on both datasets was better than that of traditional machine learning models. Second, in order to boost public trust in model prediction, we employed SHAP to improve model explainability, which was further evaluated using a between-subjects experiment with three conditions, i.e., text (T), text+SHAP explanation (TSE), and text+SHAP explanation+source and evidence (TSESE). The participants were significantly more likely to trust and share information related to COVID-19 in the TSE and TSESE conditions than in the T condition. Our results provided good implications for detecting misinformation about COVID-19 and improving public trust.

Modeling Dispositional and Initial learned Trust in Automated Vehicles with Predictability and Explainability

Dec 25, 2020

Abstract: Technological advances in the automotive industry are bringing automated driving closer to road use. However, one of the most important factors affecting public acceptance of automated vehicles (AVs) is the public's trust in AVs. Many factors can influence people's trust, including perception of risks and benefits, feelings, and knowledge of AVs. This study aims to use these factors to predict people's dispositional and initial learned trust in AVs using a survey study conducted with 1175 participants. For each participant, 23 features were extracted from the survey questions to capture his or her knowledge, perception, experience, behavioral assessment, and feelings about AVs. These features were then used as input to train an eXtreme Gradient Boosting (XGBoost) model to predict trust in AVs. With the help of SHapley Additive exPlanations (SHAP), we were able to interpret the trust predictions of XGBoost to further improve the explainability of the XGBoost model. Compared to traditional regression models and black-box machine learning models, our findings show that this approach was powerful in simultaneously providing a high level of explainability and predictability of trust in AVs.
