Lilit Avetisyan

The Mediating Effects of Emotions on Trust through Risk Perception and System Performance in Automated Driving

Apr 06, 2025

Abstract:Trust in automated vehicles (AVs) has traditionally been explored through a cognitive lens, but growing evidence highlights the significant role emotions play in shaping trust. This study investigates how risk perception and AV performance (error vs. no error) influence emotional responses and trust in AVs, using mediation analysis to examine the indirect effects of emotions. In this study, 70 participants (42 male, 28 female) watched real-life recorded videos of AVs operating with or without errors, coupled with varying levels of risk information (high, low, or none). They reported their anticipated emotional responses using 19 discrete emotion items, and trust was assessed through dispositional, learned, and situational trust measures. Factor analysis identified four key emotional components, namely hostility, confidence, anxiety, and loneliness, that were influenced by risk perception and AV performance. The linear mixed model showed that risk perception was not a significant predictor of trust, while performance and individual differences were. Mediation analysis revealed that confidence was a strong positive mediator, while hostile and anxious emotions negatively impacted trust. However, lonely emotions did not significantly mediate the relationship between AV performance and trust. The results show that real-time AV behavior is more influential on trust than pre-existing risk perceptions, indicating trust in AVs might be more experience-based than shaped by prior beliefs. Our findings also underscore the importance of fostering positive emotional responses for trust calibration, which has important implications for user experience design in automated driving.

Building Trust Profiles in Conditionally Automated Driving

Jun 28, 2023

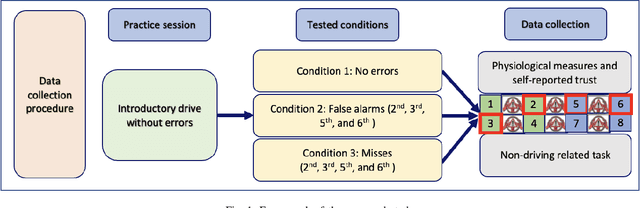

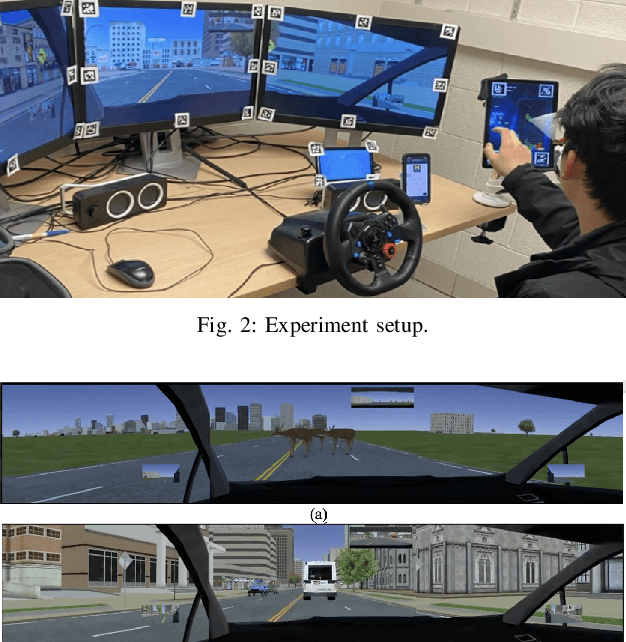

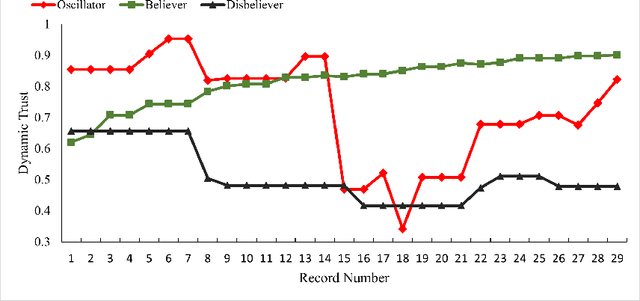

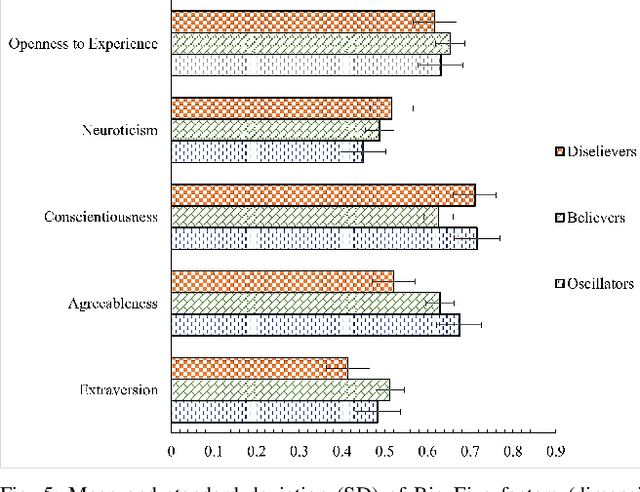

Abstract:Trust is crucial for ensuring the safety, security, and widespread adoption of automated vehicles (AVs), and if trust is lacking, drivers and the public may not be willing to use them. This research seeks to investigate trust profiles in order to create personalized experiences for drivers in AVs. This technique helps in better understanding drivers' dynamic trust from a persona's perspective. The study was conducted in a driving simulator where participants were requested to take over control from automated driving in three conditions that included a control condition, a false alarm condition, and a miss condition with eight takeover requests (TORs) in different scenarios. Drivers' dispositional trust, initial learned trust, dynamic trust, personality, and emotions were measured. We identified three trust profiles (i.e., believers, oscillators, and disbelievers) using a K-means clustering model. In order to validate this model, we built a multinomial logistic regression model based on a SHAP explainer that selected the most important features to predict the trust profiles with an F1-score of 0.90 and accuracy of 0.89. We also discussed how different individual factors influenced trust profiles, which helped us understand trust dynamics better from a persona's perspective. Our findings have important implications for designing a personalized in-vehicle trust monitoring and calibrating system to adjust drivers' trust levels in order to improve safety and experience in automated driving.

An Investigation of Drivers' Dynamic Situational Trust in Conditionally Automated Driving

Dec 08, 2021

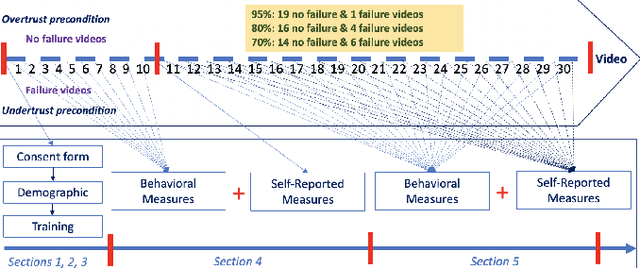

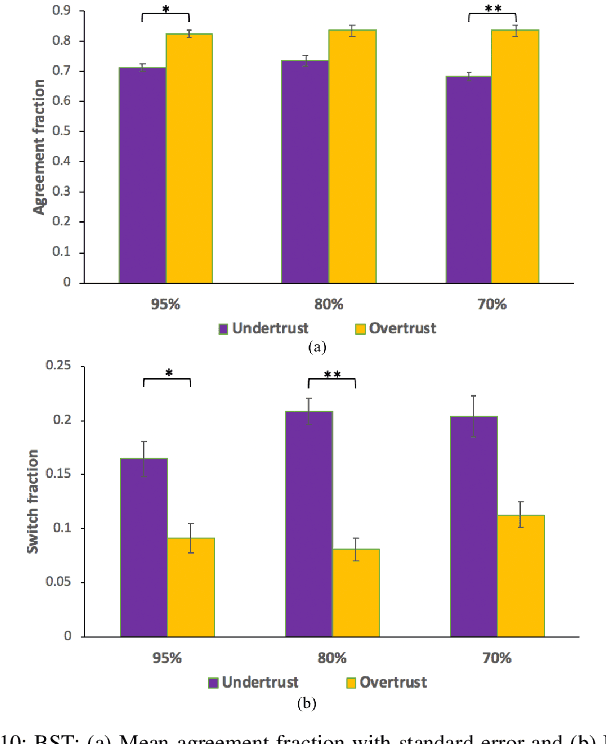

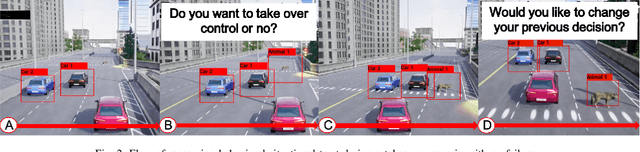

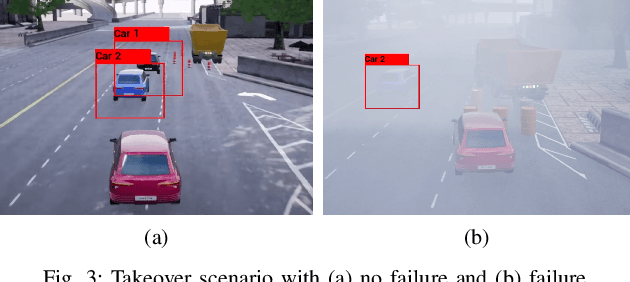

Abstract:Understanding how trust is built over time is essential, as trust plays an important role in the acceptance and adoption of automated vehicles (AVs). This study aimed to investigate the effects of system performance and participants' trust preconditions on dynamic situational trust during takeover transitions. We evaluated the dynamic situational trust of 42 participants using both self-reported and behavioral measures while watching 30 videos with takeover scenarios. The study was a 3 by 2 mixed-subjects design, where the within-subjects variable was the system performance (i.e., accuracy levels of 95%, 80%, and 70%) and the between-subjects variable was the preconditions of the participants' trust (i.e., overtrust and undertrust). Our results showed that participants quickly adjusted their self-reported situational trust (SST) levels which were consistent with different accuracy levels of system performance in both trust preconditions. However, participants' behavioral situational trust (BST) was affected by their trust preconditions across different accuracy levels. For instance, the overtrust precondition significantly increased the agreement fraction compared to the undertrust precondition. The undertrust precondition significantly decreased the switch fraction compared to the overtrust precondition. These results have important implications for designing an in-vehicle trust calibration system for conditional AVs.
