Mehdi Afshari

Comments on 'Fast and scalable search of whole-slide images via self-supervised deep learning'

Apr 18, 2023

Abstract: Chen et al. [Chen2022] recently published the article 'Fast and scalable search of whole-slide images via self-supervised deep learning' in Nature Biomedical Engineering. The authors call their method 'self-supervised image search for histology', or SISH for short. We express our concerns that SISH is an incremental modification of Yottixel, uses MinMax binarization without citing the original works, and is based on the misnomer 'self-supervised image search'. We also point to several other concerns regarding the experiments and comparisons performed by Chen et al.

A Similarity Measure of Histopathology Images by Deep Embeddings

Jul 29, 2021

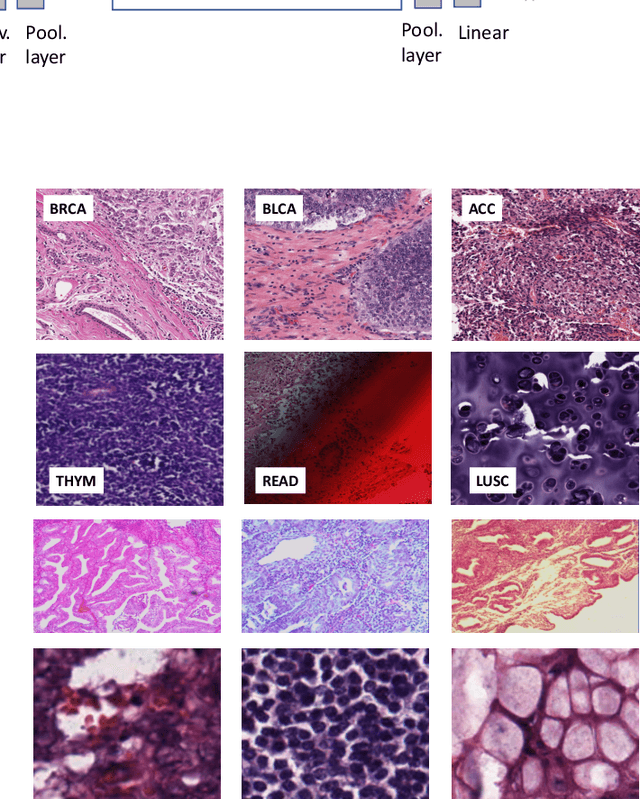

Abstract: Histopathology digital scans are large-size images that contain valuable information at the pixel level. Content-based comparison of these images is a challenging task. This study proposes a content-based similarity measure for high-resolution gigapixel histopathology images. The proposed similarity measure is an expansion of cosine vector similarity to a matrix. Each image is divided into same-size patches with a meaningful amount of information (i.e., containing enough tissue). The similarity is measured by extracting patch-level deep embeddings from the last pooling layer of a pre-trained deep model at four different magnification levels, namely, 1x, 2.5x, 5x, and 10x. In addition, for faster measurement, embedding reduction is investigated. Finally, to assess the proposed method, an image search method is implemented. Results show that the similarity measure represents the slide labels with a maximum accuracy of 93.18% for top-5 search at 5x magnification.
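The abstract does not spell out how cosine similarity is expanded to a matrix, so the following is only a minimal sketch under stated assumptions: each slide is represented as a list of patch embeddings, the matrix holds all pairwise patch cosine similarities, and the matrix is collapsed to one score by averaging best matches in both directions. The function names and the aggregation rule are hypothetical and are not taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_matrix(slide_a, slide_b):
    """Pairwise cosine similarities: rows index patches of slide_a, columns patches of slide_b."""
    return [[cosine(a, b) for b in slide_b] for a in slide_a]


def slide_similarity(slide_a, slide_b):
    """Collapse the matrix to one score.

    Assumption (not from the paper): average the best match of every patch,
    in both directions, so the score is symmetric in the two slides.
    """
    s = similarity_matrix(slide_a, slide_b)
    row_best = [max(row) for row in s]
    col_best = [max(s[i][j] for i in range(len(slide_a))) for j in range(len(slide_b))]
    return 0.5 * (sum(row_best) / len(row_best) + sum(col_best) / len(col_best))


# Toy 2-D "embeddings" standing in for deep features of tissue patches.
slide_x = [[1.0, 0.0], [0.0, 1.0]]
slide_y = [[1.0, 0.0]]
score_xy = slide_similarity(slide_x, slide_y)
```

A search over an archive could then rank slides by this score against a query slide and keep the top five, which matches the top-5 evaluation described above only in spirit.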

Fine-Tuning and Training of DenseNet for Histopathology Image Representation Using TCGA Diagnostic Slides

Jan 20, 2021

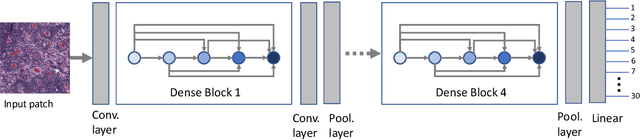

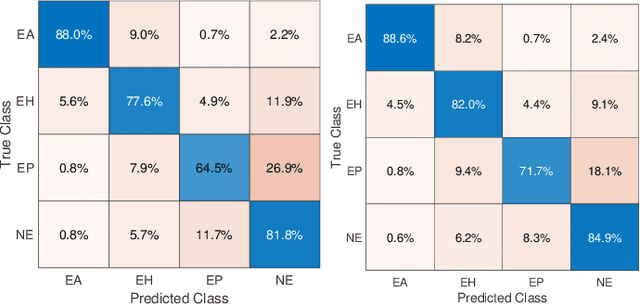

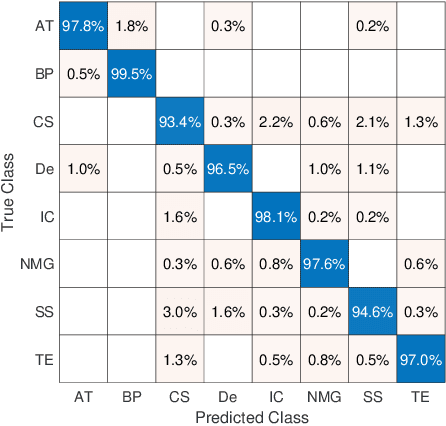

Abstract: Feature vectors provided by pre-trained deep artificial neural networks have become a dominant source for image representation in recent literature. Their contribution to the performance of image analysis can be improved through fine-tuning. As an ultimate solution, one might even train a deep network from scratch with the domain-relevant images, a highly desirable option which is generally impeded in pathology by lack of labeled images and the computational expense. In this study, we propose a new network, namely KimiaNet, that employs the topology of the DenseNet with four dense blocks, fine-tuned and trained with histopathology images in different configurations. We used more than 240,000 image patches with 1000x1000 pixels acquired at 20x magnification through our proposed "high-cellularity mosaic" approach to enable the usage of weak labels of 7,126 whole slide images of formalin-fixed paraffin-embedded human pathology samples publicly available through The Cancer Genome Atlas (TCGA) repository. We tested KimiaNet using three public datasets, namely TCGA, endometrial cancer images, and colorectal cancer images, by evaluating the performance of search and classification when corresponding features of different networks are used for image representation. As well, we designed and trained multiple convolutional batch-normalized ReLU (CBR) networks. The results show that KimiaNet provides superior results compared to the original DenseNet and smaller CBR networks when used as a feature extractor to represent histopathology images.

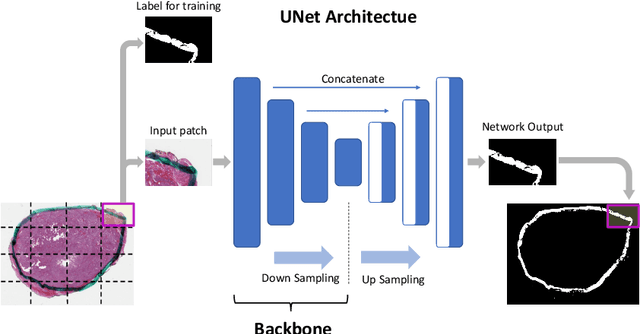

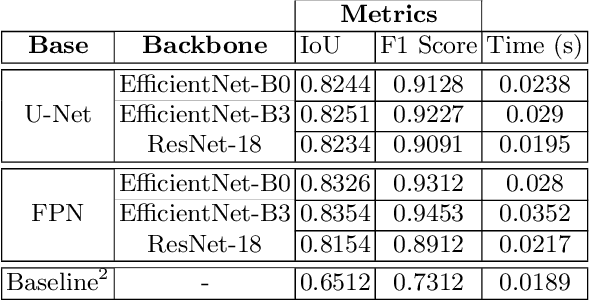

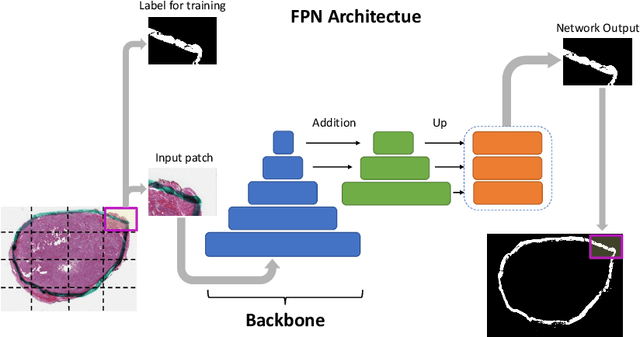

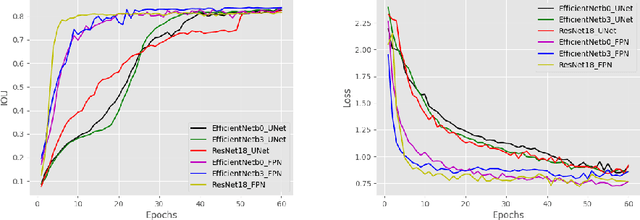

Ink Marker Segmentation in Histopathology Images Using Deep Learning

Oct 29, 2020

Abstract: Due to the recent advancements in machine vision, digital pathology has gained significant attention. Histopathology images are distinctly rich in visual information. The tissue glass slide images are utilized for disease diagnosis. Researchers study many methods to process histopathology images and facilitate fast and reliable diagnosis; therefore, the availability of high-quality slides becomes paramount. The quality of the images can be negatively affected when the glass slides are ink-marked by pathologists to delineate regions of interest. As an example, in one of the largest public histopathology datasets, The Cancer Genome Atlas (TCGA), approximately 12% of the digitized slides are affected by manual delineations through ink markings. To process these open-access slide images and other repositories for the design and validation of new methods, an algorithm to detect the marked regions of the images is essential so that computer methods do not confuse tissue pixels with ink-colored pixels. In this study, we propose to segment the ink-marked areas of pathology patches through a deep network. A dataset from 79 whole slide images with 4,305 patches was created and different networks were trained. Finally, the results showed that an FPN model with EfficientNet-B3 as the backbone was the superior configuration, with an F1 score of 94.53%.
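For readers unfamiliar with the reported metric, the sketch below shows how an F1 score can be computed for a binary segmentation mask. It assumes pixel-level counting over flattened masks (1 = ink, 0 = not ink); the abstract does not state how the authors aggregated the score, and the function name and toy masks are illustrative only.

```python
def f1_score(predicted, truth):
    """F1 = 2*TP / (2*TP + FP + FN) over two equal-length binary masks."""
    tp = sum(1 for p, t in zip(predicted, truth) if p and t)
    fp = sum(1 for p, t in zip(predicted, truth) if p and not t)
    fn = sum(1 for p, t in zip(predicted, truth) if not p and t)
    denominator = 2 * tp + fp + fn
    if denominator == 0:
        # Neither mask marks any ink pixel; treated here as perfect agreement (a convention).
        return 1.0
    return 2 * tp / denominator


# Toy flattened masks for a four-pixel patch.
predicted_mask = [1, 1, 0, 0]
truth_mask = [1, 0, 1, 0]
toy_f1 = f1_score(predicted_mask, truth_mask)  # TP=1, FP=1, FN=1
```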
