Meha Kaushik

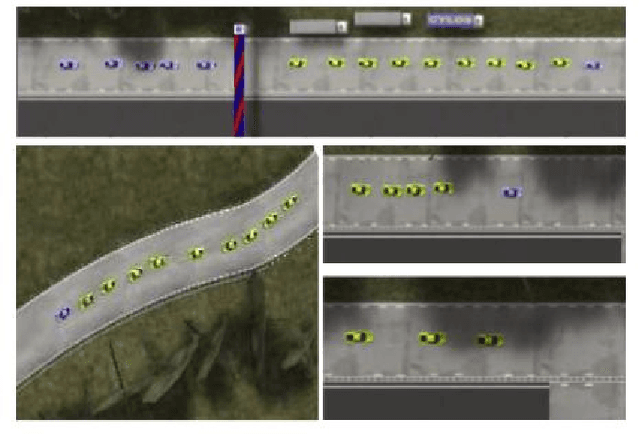

MADRaS : Multi Agent Driving Simulator

Oct 02, 2020

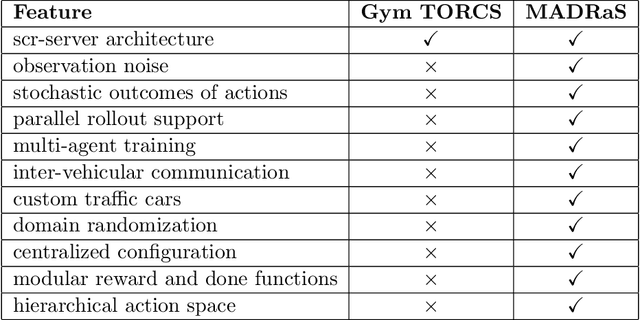

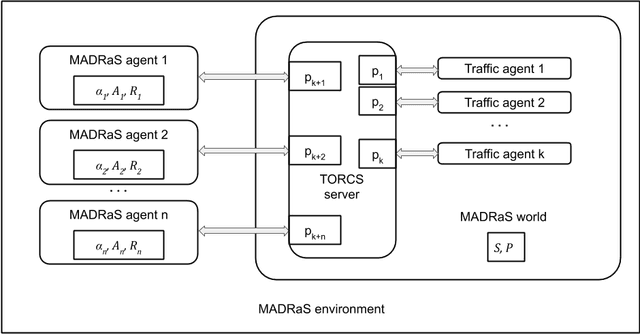

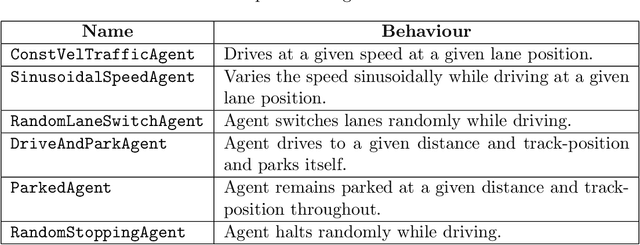

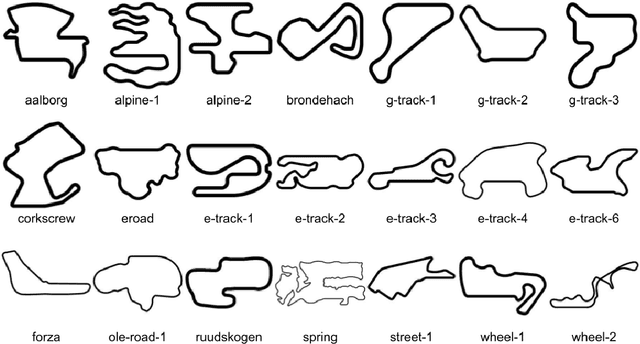

Abstract: In this work, we present MADRaS, an open-source multi-agent driving simulator for use in the design and evaluation of motion planning algorithms for autonomous driving. MADRaS provides a platform for constructing a wide variety of highway and track driving scenarios in which multiple driving agents can train for motion planning tasks using reinforcement learning and other machine learning algorithms. MADRaS is built on TORCS, an open-source car-racing simulator. TORCS offers a variety of cars with different dynamic properties and driving tracks with different geometries and surface properties. MADRaS inherits these functionalities from TORCS and introduces support for multi-agent training, inter-vehicular communication, noisy observations, stochastic actions, and custom traffic cars whose behaviours can be programmed to simulate challenging traffic conditions encountered in the real world. MADRaS can be used to create driving tasks whose complexity can be tuned along eight axes in well-defined steps, which makes it particularly suited to curriculum and continual learning. MADRaS is lightweight and provides a convenient OpenAI Gym interface for independent control of each car. Apart from the primitive steering-acceleration-brake control mode of TORCS, MADRaS offers a hierarchical track-position and speed control mode that can potentially be used to achieve better generalization. MADRaS uses multiprocessing to run each agent as a parallel process for efficiency and integrates well with popular reinforcement learning libraries such as RLlib.
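To make the idea of a hierarchical track-position and speed control mode concrete, here is a minimal sketch of the low-level layer: a high-level policy chooses a target lateral position and a target speed, and a simple proportional controller turns them into the primitive steer, accel, and brake commands. Everything here is an assumption for illustration: the class `TrackPosSpeedController`, its gains, the TORCS-style inputs (normalized track position, heading angle relative to the track axis, speed), and the steering sign convention. It is not the MADRaS implementation.

```python
from dataclasses import dataclass


def clip(x: float, lo: float, hi: float) -> float:
    """Clamp x into the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


@dataclass
class TrackPosSpeedController:
    """Hypothetical P-controller mapping (target track position, target speed)
    to primitive (steer, accel, brake) commands. Gains are illustrative."""
    k_pos: float = 0.5     # gain on lateral offset from the target track position
    k_angle: float = 1.0   # gain on heading error relative to the track axis
    k_speed: float = 0.05  # gain on speed error

    def __call__(self, target_pos, target_speed, track_pos, angle, speed):
        # Steer to cancel the heading error and the lateral offset
        # (sign convention assumed: positive steer turns toward negative track_pos).
        steer = clip(self.k_angle * angle - self.k_pos * (track_pos - target_pos), -1.0, 1.0)
        # Speed error drives the throttle when too slow and the brake when too fast.
        speed_error = target_speed - speed
        accel = clip(self.k_speed * speed_error, 0.0, 1.0)
        brake = clip(-self.k_speed * speed_error, 0.0, 1.0)
        return steer, accel, brake


ctrl = TrackPosSpeedController()
# Centered, aligned, and 10 units below the target speed: throttle only.
steer, accel, brake = ctrl(target_pos=0.0, target_speed=20.0,
                           track_pos=0.0, angle=0.0, speed=10.0)
```

A learning agent acting in this two-dimensional action space never has to discover steering and throttle coordination from scratch, which is one plausible reason such a mode could generalize better than raw steering-acceleration-brake control.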

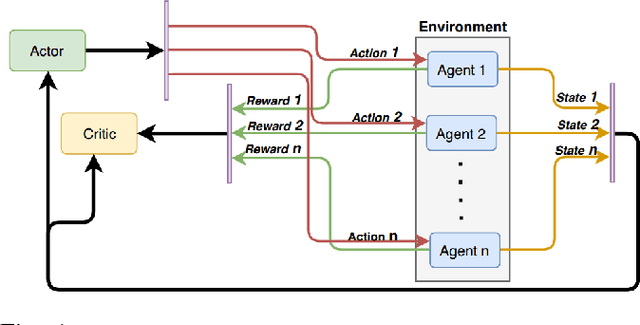

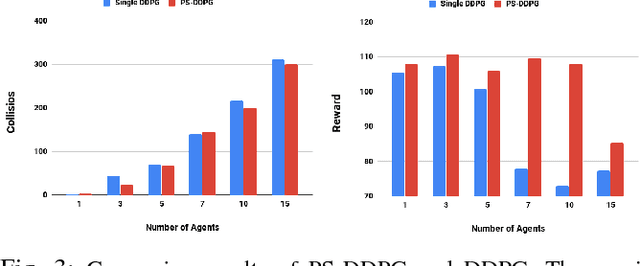

Parameter Sharing Reinforcement Learning Architecture for Multi Agent Driving Behaviors

Nov 17, 2018

Abstract: Multi-agent learning provides a potential framework for learning and simulating traffic behaviors. This paper proposes a novel architecture to learn multiple driving behaviors in a traffic scenario. The proposed architecture can learn multiple behaviors independently as well as simultaneously. We take advantage of the homogeneity of the agents and learn in a parameter-sharing paradigm. To further speed up training, asynchronous updates are incorporated into the architecture. While learning different behaviors simultaneously, the framework was also able to learn cooperation between the agents without any explicit communication. We applied this framework to learn two important driving behaviors: 1) lane keeping and 2) overtaking. Results indicate faster convergence and the learning of a more generic behavior that scales to any number of agents. Compared with existing approaches, our results show equal, and in some cases better, performance.
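The core of parameter sharing is that every homogeneous agent reads from and writes to a single set of weights, so the parameter count does not grow with the number of agents. The sketch below illustrates only that point. It swaps in a tiny linear-tanh policy and a supervised squared-error surrogate loss in place of the paper's reinforcement learning objective, and each agent applies its gradient immediately, with no synchronization barrier, as a loose stand-in for asynchronous updates. The names `SharedLinearPolicy`, `gradient`, and `apply_gradient`, and the random per-agent data, are hypothetical.

```python
import numpy as np


class SharedLinearPolicy:
    """One parameter set used by every (homogeneous) agent."""

    def __init__(self, obs_dim, act_dim, lr=0.1, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(scale=0.1, size=(act_dim, obs_dim))
        self.lr = lr

    def act(self, obs):
        return np.tanh(self.W @ obs)

    def gradient(self, obs, target):
        # Gradient of 0.5 * ||tanh(W obs) - target||^2 with respect to W
        # (a supervised surrogate standing in for an RL loss).
        a = self.act(obs)
        delta = (a - target) * (1.0 - a ** 2)
        return np.outer(delta, obs)

    def apply_gradient(self, grad):
        # Every agent's update lands on the same shared weights.
        self.W -= self.lr * grad


def total_loss(policy, agent_data):
    return sum(0.5 * float(np.sum((policy.act(o) - y) ** 2)) for o, y in agent_data)


rng = np.random.default_rng(1)
n_agents, obs_dim, act_dim = 4, 3, 2
# Hypothetical per-agent experience: one (observation, target action) pair each.
agent_data = [(rng.normal(size=obs_dim), rng.uniform(-0.5, 0.5, size=act_dim))
              for _ in range(n_agents)]

policy = SharedLinearPolicy(obs_dim, act_dim)
initial = total_loss(policy, agent_data)
for _ in range(200):
    for obs, target in agent_data:  # agents update one after another, no barrier
        policy.apply_gradient(policy.gradient(obs, target))
final = total_loss(policy, agent_data)
```

Adding a fifth or fiftieth agent only adds more updates to the same `W`, which is what lets a parameter-sharing scheme scale to an arbitrary number of agents.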

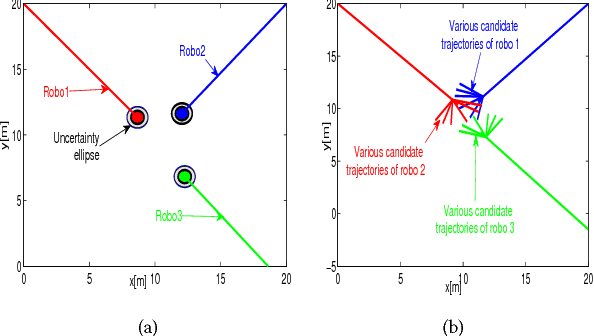

Chance constraint based multi agent navigation under uncertainty

Aug 20, 2016

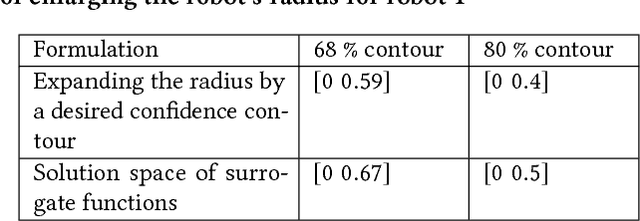

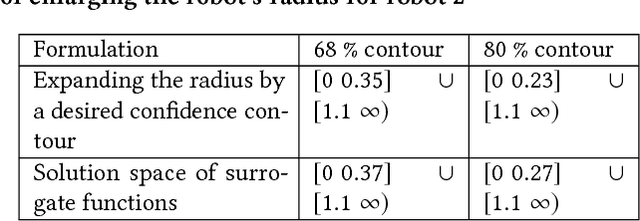

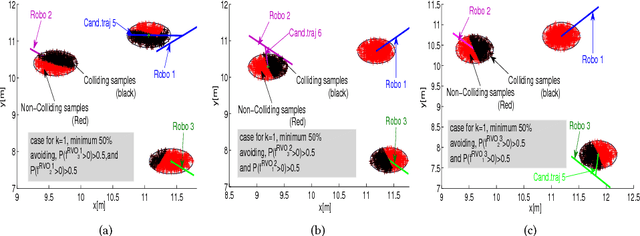

Abstract: We present Probabilistic Reciprocal Velocity Obstacle (PRVO) as a general algorithm for navigating multiple robots under perception and motion uncertainty. PRVO is defined as the space of velocities that ensures dynamic collision avoidance between a pair of robots with a specified probability. Our approach is based on defining chance constraints over the inequalities defined by the deterministic Reciprocal Velocity Obstacle (RVO). The computational complexity of the proposed probabilistic RVO is comparable to that of its deterministic counterpart. This is achieved through a series of reformulations: we first substitute the computationally intractable chance constraints with a family of surrogate constraints and then adopt a time-scaling-based solution methodology to efficiently characterize their solution space. Further, we show that the solution space of each member of this family of surrogate constraints can be mapped in closed form to the probability with which the original chance constraints are satisfied, and consequently to the probability of collision avoidance. We validate our formulations through numerical simulations that highlight the importance of incorporating the effect of motion uncertainty and the advantages of PRVO over existing formulations, which handle uncertainty by using conservative bounding volumes.
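The chance constraint behind PRVO can be stated as: keep a candidate velocity only if the probability of staying collision-free with the other robot is at least a threshold eta. The sketch below checks that condition by brute-force Monte Carlo sampling, which is a swapped-in technique used only to illustrate the definition; the paper instead replaces the chance constraint with surrogate constraints whose solution space maps to this probability in closed form. Further assumptions: two disc robots, only perception noise (isotropic Gaussian on the other robot's relative position, motion noise omitted), a finite time horizon, and the standard reciprocal test in which A's candidate `v` is checked via the relative velocity `2*v - v_A - v_B`. The functions `collision_free_probability` and `is_admissible` are hypothetical.

```python
import numpy as np


def collision_free_probability(v_candidate, v_a, v_b, p_rel_mean, pos_std,
                               radius_sum, horizon, n_samples=10_000, seed=0):
    """Monte Carlo estimate of P(no collision within `horizon`) for robot A.

    p_rel_mean: mean position of robot B relative to robot A.
    pos_std: std. dev. of the (assumed isotropic Gaussian) position noise.
    """
    rng = np.random.default_rng(seed)
    # Reciprocal test: A's candidate v is checked through the relative
    # velocity (2 v - v_A) - v_B against the velocity obstacle of B.
    v_rel = (2.0 * np.asarray(v_candidate, float)
             - np.asarray(v_a, float) - np.asarray(v_b, float))
    p = rng.normal(loc=p_rel_mean, scale=pos_std, size=(n_samples, 2))
    speed_sq = float(v_rel @ v_rel)
    if speed_sq == 0.0:
        t_star = np.zeros(n_samples)  # no relative motion: separation stays constant
    else:
        # Time of closest approach, restricted to [0, horizon].
        t_star = np.clip(p @ v_rel / speed_sq, 0.0, horizon)
    closest = p - t_star[:, None] * v_rel  # B's position relative to A at t_star
    safe = np.linalg.norm(closest, axis=1) >= radius_sum
    return float(safe.mean())


def is_admissible(v_candidate, eta, **scene):
    """Chance constraint: keep v only if P(collision-free) >= eta."""
    return collision_free_probability(v_candidate, **scene) >= eta


# Two robots approaching head-on along the x-axis.
scene = dict(v_a=(1.0, 0.0), v_b=(-1.0, 0.0), p_rel_mean=(10.0, 0.0),
             pos_std=0.3, radius_sum=1.0, horizon=10.0)
p_head_on = collision_free_probability((1.0, 0.0), **scene)   # keep going straight
p_swerve = collision_free_probability((1.0, 1.0), **scene)    # swerve sideways
p_near_miss = collision_free_probability((1.0, 0.1), **scene) # graze the boundary
```

Velocities far from the obstacle boundary are clearly safe or clearly unsafe, while a near-miss velocity sits at an intermediate probability; raising or lowering eta moves that boundary, which is the trade-off the chance-constrained formulation exposes.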
