Meenakshi Khosla

Representational Alignment Across Model Layers and Brain Regions with Hierarchical Optimal Transport

Oct 02, 2025

Abstract:Standard representational similarity methods align each layer of a network to its best match in another independently, producing asymmetric results, lacking a global alignment score, and struggling with networks of different depths. These limitations arise from ignoring global activation structure and restricting mappings to rigid one-to-one layer correspondences. We propose Hierarchical Optimal Transport (HOT), a unified framework that jointly infers soft, globally consistent layer-to-layer couplings and neuron-level transport plans. HOT allows source neurons to distribute mass across multiple target layers while minimizing total transport cost under marginal constraints. This yields both a single alignment score for the entire network comparison and a soft transport plan that naturally handles depth mismatches through mass distribution. We evaluate HOT on vision models, large language models, and human visual cortex recordings. Across all domains, HOT matches or surpasses standard pairwise matching in alignment quality. Moreover, it reveals smooth, fine-grained hierarchical correspondences: early layers map to early layers, deeper layers maintain relative positions, and depth mismatches are resolved by distributing representations across multiple layers. These structured patterns emerge naturally from global optimization without being imposed, yet are absent in greedy layer-wise methods. HOT thus enables richer, more interpretable comparisons between representations, particularly when networks differ in architecture or depth.
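
To make the idea of a soft layer-to-layer coupling under marginal constraints concrete, here is a minimal sketch of the outer (layer-level) step only. It is not the HOT algorithm itself: it assumes a layer-by-layer cost matrix is already given (in HOT such costs would come from neuron-level transport), it assumes uniform layer marginals, and it swaps in entropic regularization with Sinkhorn iterations as the solver. The function name, `eps`, and the example cost matrix are hypothetical.

```python
from math import exp


def sinkhorn_layer_coupling(cost, eps=0.5, n_iter=200):
    """Entropic-OT coupling between n source layers and m target layers.

    cost[i][j] is an assumed, precomputed dissimilarity between source
    layer i and target layer j. Marginals are assumed uniform.
    Returns an n x m plan whose rows sum to ~1/n and columns to 1/m.
    """
    n, m = len(cost), len(cost[0])
    a = [1.0 / n] * n  # mass available at each source layer
    b = [1.0 / m] * m  # mass required at each target layer
    K = [[exp(-c / eps) for c in row] for row in cost]  # Gibbs kernel
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iter):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


# Hypothetical costs for a 3-layer network compared with a 5-layer network.
example_cost = [
    [0.00, 0.25, 0.50, 0.75, 1.00],
    [0.50, 0.25, 0.00, 0.25, 0.50],
    [1.00, 0.75, 0.50, 0.25, 0.00],
]
plan = sinkhorn_layer_coupling(example_cost)
# A single global score: total transport cost under the soft plan.
score = sum(plan[i][j] * example_cost[i][j] for i in range(3) for j in range(5))
```

Because the two networks have different depths, no one-to-one assignment exists; the plan instead spreads each source layer's mass over several target layers, which is the behavior the abstract describes.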

Measuring the Measures: Discriminative Capacity of Representational Similarity Metrics Across Model Families

Sep 04, 2025

Abstract:Representational similarity metrics are fundamental tools in neuroscience and AI, yet we lack systematic comparisons of their discriminative power across model families. We introduce a quantitative framework to evaluate representational similarity measures based on their ability to separate model families, both across architectures (CNNs, Vision Transformers, Swin Transformers, ConvNeXt) and across training regimes (supervised vs. self-supervised). Using three complementary separability measures (d-prime from signal detection theory, silhouette coefficients, and ROC-AUC), we systematically assess the discriminative capacity of commonly used metrics including RSA, linear predictivity, Procrustes, and soft matching. We show that separability systematically increases as metrics impose more stringent alignment constraints. Among mapping-based approaches, soft matching achieves the highest separability, followed by Procrustes alignment and linear predictivity. Non-fitting methods such as RSA also yield strong separability across families. These results provide the first systematic comparison of similarity metrics through a separability lens, clarifying their relative sensitivity and guiding metric choice for large-scale model and brain comparisons.
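
As an illustration of one of the separability measures named above, the following sketch computes d-prime between within-family and between-family similarity scores. It uses the standard signal-detection form (difference of means over the root of the averaged variances); the paper's exact formulation may differ, and the function name and example scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def d_prime(within, between):
    """Separation between within-family and between-family similarity scores.

    Uses sample variances; a larger value means the metric separates
    families more cleanly.
    """
    pooled_sd = sqrt(0.5 * (variance(within) + variance(between)))
    return (mean(within) - mean(between)) / pooled_sd


# Hypothetical similarity scores produced by some metric.
within_family = [0.90, 0.80, 0.85]   # pairs of models from the same family
between_family = [0.50, 0.40, 0.45]  # pairs of models from different families
separability = d_prime(within_family, between_family)
```

A metric that assigns nearly identical scores to within-family and between-family pairs would yield a d-prime near zero under this definition.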

Bridging Critical Gaps in Convergent Learning: How Representational Alignment Evolves Across Layers, Training, and Distribution Shifts

Feb 26, 2025

Abstract:Understanding convergent learning -- the extent to which artificial and biological neural networks develop similar representations -- is crucial for neuroscience and AI, as it reveals shared learning principles and guides brain-like model design. While several studies have noted convergence in early and late layers of vision networks, key gaps remain. First, much existing work relies on a limited set of metrics, overlooking transformation invariances required for proper alignment. We compare three metrics that ignore specific irrelevant transformations: linear regression (ignoring affine transformations), Procrustes (ignoring rotations and reflections), and permutation/soft-matching (ignoring unit order). Notably, orthogonal transformations align representations nearly as effectively as more flexible linear ones, and although permutation scores are lower, they significantly exceed chance, indicating a robust representational basis. A second critical gap lies in understanding when alignment emerges during training. Contrary to expectations that convergence builds gradually with task-specific learning, our findings reveal that nearly all convergence occurs within the first epoch -- long before networks achieve optimal performance. This suggests that shared input statistics, architectural biases, or early training dynamics drive convergence rather than the final task solution. Finally, prior studies have not systematically examined how changes in input statistics affect alignment. Our work shows that out-of-distribution (OOD) inputs consistently amplify differences in later layers, while early layers remain aligned for both in-distribution and OOD inputs, suggesting that this alignment is driven by generalizable features stable across distribution shifts. These findings fill critical gaps in our understanding of representational convergence, with implications for neuroscience and AI.

Brain-Model Evaluations Need the NeuroAI Turing Test

Feb 22, 2025

Abstract:What makes an artificial system a good model of intelligence? The classical test proposed by Alan Turing focuses on behavior, requiring that an artificial agent's behavior be indistinguishable from that of a human. While behavioral similarity provides a strong starting point, two systems with very different internal representations can produce the same outputs. Thus, in modeling biological intelligence, the field of NeuroAI often aims to go beyond behavioral similarity and achieve representational convergence between a model's activations and the measured activity of a biological system. This position paper argues that the standard definition of the Turing Test is incomplete for NeuroAI, and proposes a stronger framework called the "NeuroAI Turing Test", a benchmark that extends beyond behavior alone and *additionally* requires models to produce internal neural representations that are empirically indistinguishable from those of a brain up to measured individual variability, i.e., the difference between a computational model and the brain is no more than the difference between one brain and another. While the brain is not necessarily the ceiling of intelligence, it remains the only universally agreed-upon example, making it a natural reference point for evaluating computational models. By proposing this framework, we aim to shift the discourse from loosely defined notions of brain inspiration to a systematic and testable standard centered on both behavior and internal representations, providing a clear benchmark for neuroscientific modeling and AI development.

Evaluating Representational Similarity Measures from the Lens of Functional Correspondence

Nov 21, 2024

Abstract:Neuroscience and artificial intelligence (AI) both face the challenge of interpreting high-dimensional neural data, where the comparative analysis of such data is crucial for revealing shared mechanisms and differences between these complex systems. Despite the widespread use of representational comparisons and the abundance of comparison methods, a critical question remains: which metrics are most suitable for these comparisons? While some studies evaluate metrics based on their ability to differentiate models of different origins or constructions (e.g., various architectures), another approach is to assess how well they distinguish models that exhibit distinct behaviors. To investigate this, we examine the degree of alignment between various representational similarity measures and behavioral outcomes, employing group statistics and a comprehensive suite of behavioral metrics for comparison. In our evaluation of eight commonly used representational similarity metrics in the visual domain -- spanning alignment-based, Canonical Correlation Analysis (CCA)-based, inner product kernel-based, and nearest-neighbor methods -- we found that metrics like linear Centered Kernel Alignment (CKA) and Procrustes distance, which emphasize the overall geometric structure or shape of representations, excelled in differentiating trained from untrained models and aligning with behavioral measures, whereas metrics such as linear predictivity, commonly used in neuroscience, demonstrated only moderate alignment with behavior. These insights are crucial for selecting metrics that emphasize behaviorally meaningful comparisons in NeuroAI research.
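
For readers unfamiliar with linear CKA, one of the geometry-focused metrics mentioned above, here is a minimal pure-Python sketch of its commonly used definition: with column-centered response matrices X and Y (stimuli by units), the score is the squared Frobenius norm of XᵀY divided by the product of the Frobenius norms of XᵀX and YᵀY. The helper names and the example data are hypothetical, and the abstract does not specify which implementation the authors used.

```python
from math import sqrt


def center_columns(M):
    """Subtract each unit's mean response across stimuli (rows)."""
    n = len(M)
    means = [sum(col) / n for col in zip(*M)]
    return [[v - mu for v, mu in zip(row, means)] for row in M]


def cross_sq_norm(A, B):
    """Squared Frobenius norm of A^T B, for row-major lists A and B."""
    total = 0.0
    for a_col in zip(*A):
        for b_col in zip(*B):
            total += sum(a * b for a, b in zip(a_col, b_col)) ** 2
    return total


def linear_cka(X, Y):
    """Linear CKA between two stimuli-by-units response matrices."""
    Xc, Yc = center_columns(X), center_columns(Y)
    return cross_sq_norm(Xc, Yc) / sqrt(
        cross_sq_norm(Xc, Xc) * cross_sq_norm(Yc, Yc)
    )


# Hypothetical responses of 2 units to 4 stimuli, and a 90-degree rotation
# of the same activation space.
X = [[1.0, 2.0], [3.0, 1.0], [0.0, 4.0], [2.0, 2.0]]
Y = [[-y, x] for x, y in X]
similarity = linear_cka(X, Y)
```

Rotating the activation space leaves this score unchanged, which illustrates the sense in which such metrics capture overall geometry rather than the tuning of individual units.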

Soft Matching Distance: A metric on neural representations that captures single-neuron tuning

Nov 16, 2023

Abstract:Common measures of neural representational (dis)similarity are designed to be insensitive to rotations and reflections of the neural activation space. Motivated by the premise that the tuning of individual units may be important, there has been recent interest in developing stricter notions of representational (dis)similarity that require neurons to be individually matched across networks. When two networks have the same size (i.e. same number of neurons), a distance metric can be formulated by optimizing over neuron index permutations to maximize tuning curve alignment. However, it is not clear how to generalize this metric to measure distances between networks with different sizes. Here, we leverage a connection to optimal transport theory to derive a natural generalization based on "soft" permutations. The resulting metric is symmetric, satisfies the triangle inequality, and can be interpreted as a Wasserstein distance between two empirical distributions. Further, our proposed metric avoids counter-intuitive outcomes suffered by alternative approaches, and captures complementary geometric insights into neural representations that are entirely missed by rotation-invariant metrics.
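
The equal-size case described above, where neurons are matched by a permutation, can be sketched by brute force for very small networks. This is only an illustration of the permutation-based starting point, not the soft matching distance itself: it assumes squared Euclidean distance between tuning curves and a root-mean-square normalization, both of which are assumptions here, and the function names are hypothetical. Exhaustive search is factorial in the number of neurons, so a real implementation would use an assignment or optimal transport solver.

```python
from itertools import permutations
from math import sqrt


def sq_dist(u, v):
    """Squared Euclidean distance between two tuning curves."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def permutation_distance(X, Y):
    """Best one-to-one neuron matching between two equal-size networks.

    X and Y are lists of tuning curves (one list of responses per neuron,
    measured on the same stimuli). Only defined when len(X) == len(Y).
    """
    if len(X) != len(Y):
        raise ValueError("permutation matching needs equal neuron counts")
    n = len(X)
    best = min(
        sum(sq_dist(X[i], Y[p[i]]) for i in range(n))
        for p in permutations(range(n))
    )
    return sqrt(best / n)


# Hypothetical tuning curves: network B is network A with neurons reordered.
net_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
net_b = [[0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]
dist = permutation_distance(net_a, net_b)
```

The `ValueError` branch marks exactly the limitation the abstract addresses: a hard permutation does not exist when the two networks have different numbers of neurons, which motivates the "soft" permutations of optimal transport.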

NeuroGen: activation optimized image synthesis for discovery neuroscience

May 15, 2021

Abstract:Functional MRI (fMRI) is a powerful technique that has allowed us to characterize visual cortex responses to stimuli, yet such experiments are by nature constructed based on a priori hypotheses, limited to the set of images presented to the individual while they are in the scanner, are subject to noise in the observed brain responses, and may vary widely across individuals. In this work, we propose a novel computational strategy, which we call NeuroGen, to overcome these limitations and develop a powerful tool for human vision neuroscience discovery. NeuroGen combines an fMRI-trained neural encoding model of human vision with a deep generative network to synthesize images predicted to achieve a target pattern of macro-scale brain activation. We demonstrate that the reduction of noise that the encoding model provides, coupled with the generative network's ability to produce images of high fidelity, results in a robust discovery architecture for visual neuroscience. By using only a small number of synthetic images created by NeuroGen, we demonstrate that we can detect and amplify differences in regional and individual human brain response patterns to visual stimuli. We then verify that these discoveries are reflected in the several thousand observed image responses measured with fMRI. We further demonstrate that NeuroGen can create synthetic images predicted to achieve regional response patterns not achievable by the best-matching natural images. The NeuroGen framework extends the utility of brain encoding models and opens up a new avenue for exploring, and possibly precisely controlling, the human visual system.

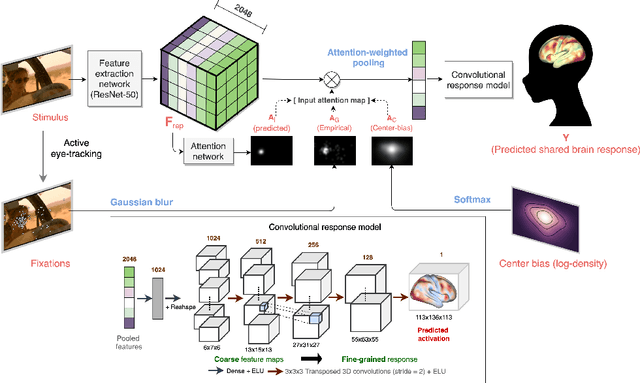

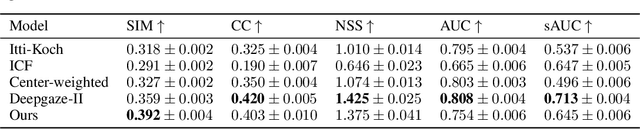

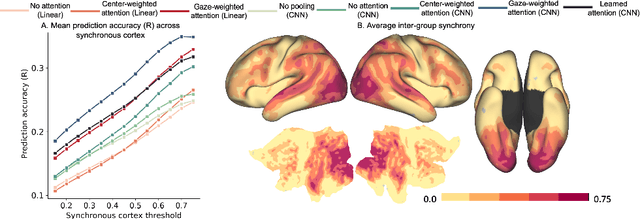

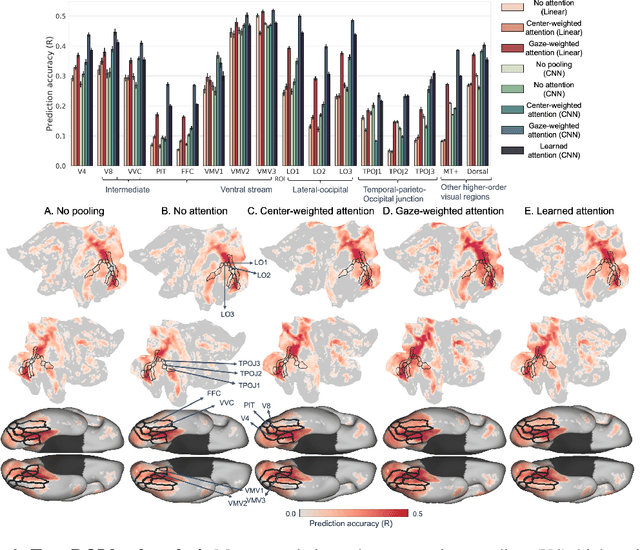

Neural encoding with visual attention

Oct 01, 2020

Abstract:Visual perception is critically influenced by the focus of attention. Due to limited resources, it is well known that neural representations are biased in favor of attended locations. Using concurrent eye-tracking and functional Magnetic Resonance Imaging (fMRI) recordings from a large cohort of human subjects watching movies, we first demonstrate that leveraging gaze information, in the form of attentional masking, can significantly improve brain response prediction accuracy in a neural encoding model. Next, we propose a novel approach to neural encoding by including a trainable soft-attention module. Using our new approach, we demonstrate that it is possible to learn visual attention policies by end-to-end learning merely on fMRI response data, and without relying on any eye-tracking. Interestingly, we find that attention locations estimated by the model on independent data agree well with the corresponding eye fixation patterns, despite no explicit supervision to do so. Together, these findings suggest that attention modules can be instrumental in neural encoding models of visual stimuli.

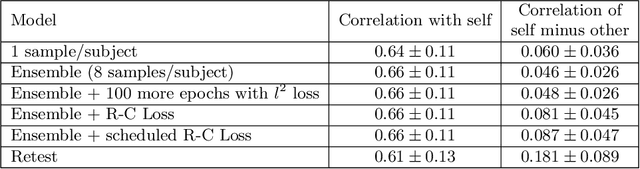

From Connectomic to Task-evoked Fingerprints: Individualized Prediction of Task Contrasts from Resting-state Functional Connectivity

Aug 07, 2020

Abstract:Resting-state functional MRI (rsfMRI) yields functional connectomes that can serve as cognitive fingerprints of individuals. Connectomic fingerprints have proven useful in many machine learning tasks, such as predicting subject-specific behavioral traits or task-evoked activity. In this work, we propose a surface-based convolutional neural network (BrainSurfCNN) model to predict individual task contrasts from their resting-state fingerprints. We introduce a reconstructive-contrastive loss that enforces subject-specificity of model outputs while minimizing predictive error. The proposed approach significantly improves the accuracy of predicted contrasts over a well-established baseline. Furthermore, BrainSurfCNN's predictions also surpass the test-retest benchmark in a subject identification task.

A shared neural encoding model for the prediction of subject-specific fMRI response

Jul 11, 2020

Abstract:The increasing popularity of naturalistic paradigms in fMRI (such as movie watching) demands novel strategies for multi-subject data analysis, such as the use of neural encoding models. In the present study, we propose a shared convolutional neural encoding method that accounts for individual-level differences. Our method leverages multi-subject data to improve the prediction of subject-specific responses evoked by visual or auditory stimuli. We showcase our approach on high-resolution 7T fMRI data from the Human Connectome Project movie-watching protocol and demonstrate significant improvement over single-subject encoding models. We further demonstrate the ability of the shared encoding model to successfully capture meaningful individual differences in response to traditional task-based face and scene stimuli. Taken together, our findings suggest that inter-subject knowledge transfer can be beneficial to subject-specific predictive models.
