Medhat Moussa

Implicit Sensing in Traffic Optimization: Advanced Deep Reinforcement Learning Techniques

Sep 25, 2023

Abstract: A sudden roadblock on a highway, caused by road maintenance, an accident, or car repair, is a situation drivers encounter almost daily. Autonomous Vehicles (AVs) equipped with sensors that can acquire vehicle dynamics such as speed, acceleration, and location can make intelligent decisions to change lanes before reaching a roadblock. A number of studies have examined car-following models and lane-changing models. However, only a few have proposed an integrated car-following and lane-changing model, which has the potential to model practical driving maneuvers. Hence, in this paper, we present an integrated car-following and lane-changing decision-control system based on Deep Reinforcement Learning (DRL) to address this issue. Specifically, we consider a scenario where sudden construction work will be carried out along a highway. We model the scenario as a Markov Decision Process (MDP) and employ the DQN algorithm to train the RL agent to make the appropriate decision (i.e., either stay in the same lane or change lanes). To overcome the delay and computational requirements of DRL algorithms, we adopt an MEC-assisted architecture where the RL agents are trained on MEC servers. We use the SUMO simulator and OpenAI Gym to evaluate the performance of the proposed model under two policies: the $\epsilon$-greedy policy and the Boltzmann policy. The results show that the DQN agent trained using the $\epsilon$-greedy policy significantly outperforms the one trained with the Boltzmann policy.
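The two exploration policies compared in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the two-action Q-value list (stay in lane vs. change lane), and the `temperature` parameter are assumptions made for illustration only.

```python
import math
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon explore uniformly, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def boltzmann_probs(q_values, temperature):
    """Softmax over Q-values; the max is subtracted for numerical stability."""
    top = max(q_values)
    exps = [math.exp((q - top) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann(q_values, temperature, rng=random):
    """Sample an action in proportion to its softmax probability."""
    probs = boltzmann_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


# Hypothetical Q-values for the actions [stay in lane, change lane].
q = [0.2, 0.8]
action_eps = epsilon_greedy(q, epsilon=0.1)
action_boltz = boltzmann(q, temperature=0.5)
```

The practical difference is that $\epsilon$-greedy explores uniformly at a fixed rate, while the Boltzmann policy explores in proportion to the estimated action values, so a low temperature makes it nearly greedy.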

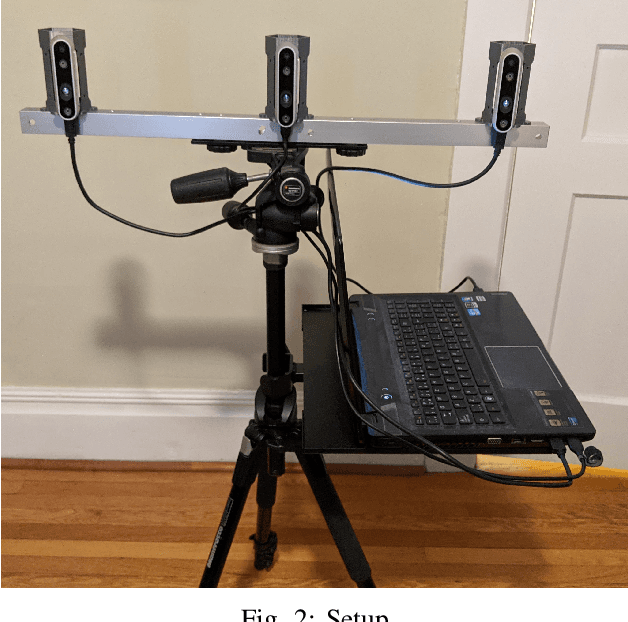

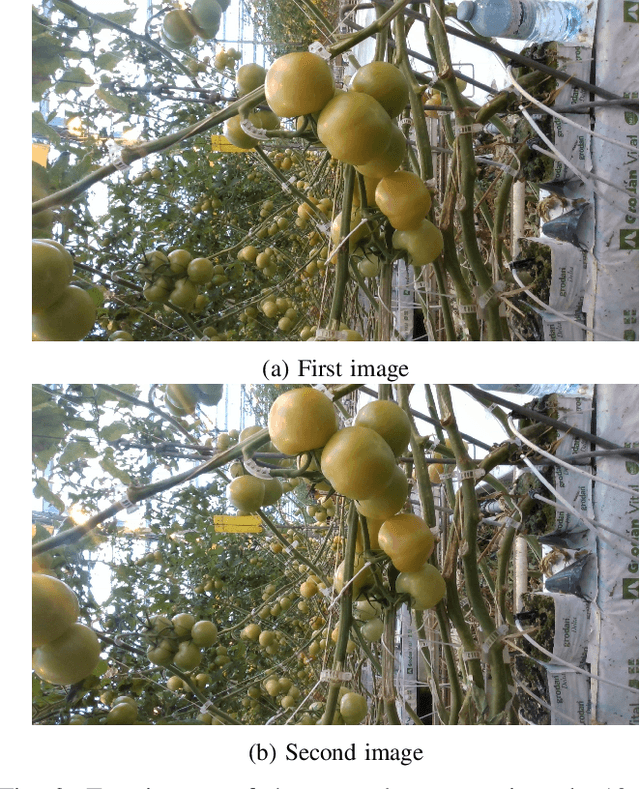

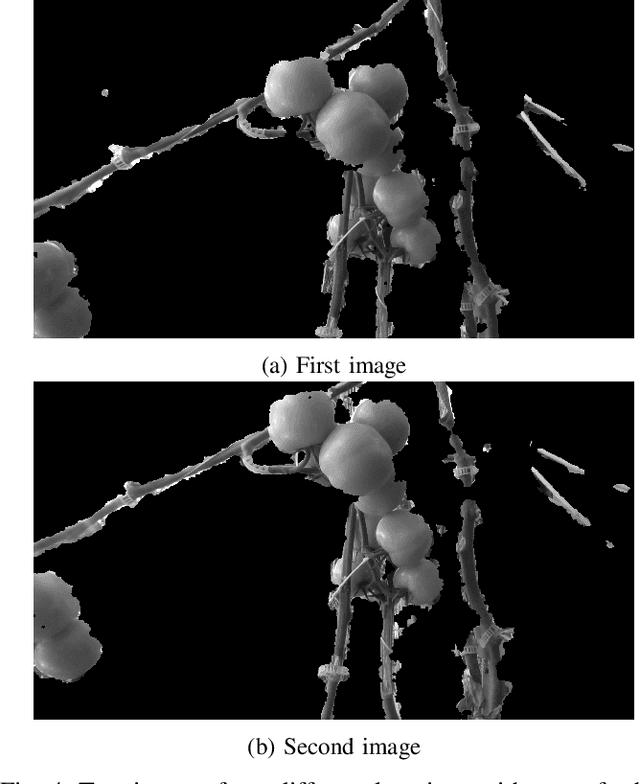

Towards developing a realistic robotics simulation environment of an indoor vegetable greenhouse

Jul 29, 2021

Abstract: This article presents a method for developing a realistic robotics simulation environment for application in vegetable greenhouses. The method pipeline starts with the construction of 3D cloud images of the greenhouse rows. This data is then used to develop a robotics simulation environment using the CoppeliaSim simulation software. The method has been tested using images from a commercial greenhouse.

An Image Labeling Tool and Agricultural Dataset for Deep Learning

Apr 06, 2020

Abstract: We introduce a labeling tool and dataset intended to facilitate computer vision research in agriculture. The annotation tool introduces novel methods for labeling with a variety of manual, semi-automatic, and fully-automatic tools. The dataset includes original images collected from commercial greenhouses, images from PlantVillage, and images from Google Images. Images were annotated with segmentations for foreground leaf, fruit, and stem instances, and diseased leaf area. Labels were in an extended COCO format. In total the dataset contained 10k tomatoes, 7k leaves, 2k stems, and 2k diseased leaf annotations.

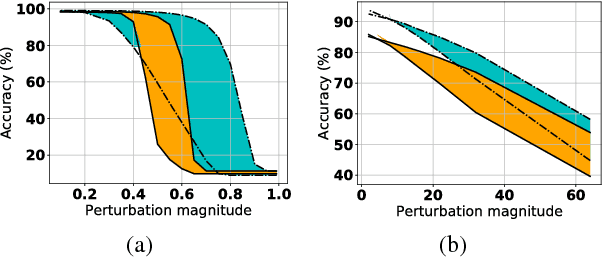

Batch Normalization is a Cause of Adversarial Vulnerability

May 29, 2019

Abstract: Batch normalization (batch norm) is often used in an attempt to stabilize and accelerate training in deep neural networks. In many cases it indeed decreases the number of parameter updates required to achieve low training error. However, it also reduces robustness to small adversarial input perturbations and noise by double-digit percentages, as we show on five standard datasets. Furthermore, substituting weight decay for batch norm is sufficient to nullify the relationship between adversarial vulnerability and the input dimension. Our work is consistent with a mean-field analysis that found that batch norm causes exploding gradients.

Predicting Adversarial Examples with High Confidence

Feb 13, 2018

Abstract: It has been suggested that adversarial examples cause deep learning models to make incorrect predictions with high confidence. In this work, we take the opposite stance: an overly confident model is more likely to be vulnerable to adversarial examples. This work is one of the most proactive approaches taken to date, as we link robustness with non-calibrated model confidence on noisy images, providing a data-augmentation-free path forward. The adversarial examples phenomenon is most easily explained by the trend of increasing non-regularized model capacity, while the diversity and number of samples in common datasets have remained flat. Test accuracy has incorrectly been associated with true generalization performance, ignoring that training and test splits are often extremely similar in terms of the overall representation space. The transferability property of adversarial examples was previously used as evidence against overfitting arguments, a perceived random effect, but overfitting is not always random.

Attacking Binarized Neural Networks

Jan 31, 2018

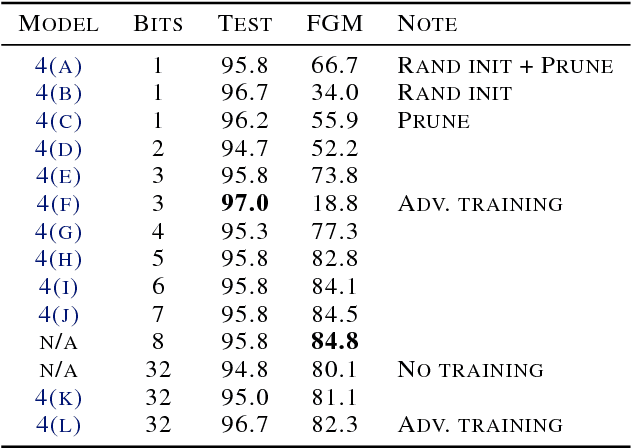

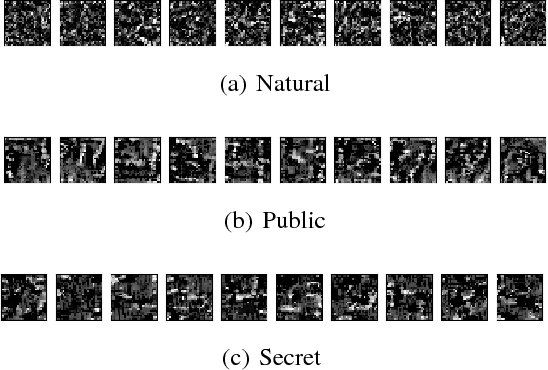

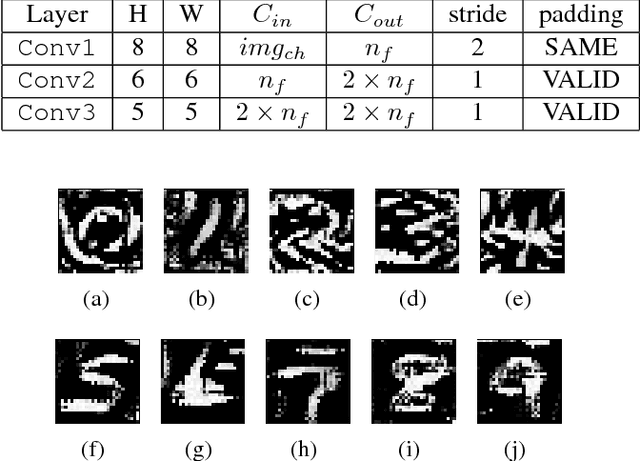

Abstract: Neural networks with low-precision weights and activations offer compelling efficiency advantages over their full-precision equivalents. The two most frequently discussed benefits of quantization are reduced memory consumption, and a faster forward pass when implemented with efficient bitwise operations. We propose a third benefit of very low-precision neural networks: improved robustness against some adversarial attacks, and in the worst case, performance that is on par with full-precision models. We focus on the very low-precision case where weights and activations are both quantized to $\pm 1$, and note that stochastically quantizing weights in just one layer can sharply reduce the impact of iterative attacks. We observe that non-scaled binary neural networks exhibit a similar effect to the original defensive distillation procedure that led to gradient masking, and a false notion of security. We address this by conducting both black-box and white-box experiments with binary models that do not artificially mask gradients.
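To make the distinction between deterministic and stochastic quantization to $\pm 1$ concrete, here is a minimal sketch. The abstract does not specify the quantization rule, so the hard-sigmoid probability used below (a common choice in the binarized-network literature) and all function names are assumptions, not the paper's method.

```python
import random


def hard_sigmoid(x):
    """Clip (x + 1) / 2 into [0, 1]; used here as P(weight -> +1). Assumed rule."""
    return min(1.0, max(0.0, (x + 1.0) / 2.0))


def binarize_deterministic(weights):
    """Sign-based quantization: non-negative weights map to +1, others to -1."""
    return [1 if w >= 0 else -1 for w in weights]


def binarize_stochastic(weights, rng=random):
    """Each weight becomes +1 with probability hard_sigmoid(w), else -1."""
    return [1 if rng.random() < hard_sigmoid(w) else -1 for w in weights]


# Hypothetical real-valued weights of one layer.
layer = [-0.3, 0.0, 0.7]
fixed = binarize_deterministic(layer)  # identical on every forward pass
sampled = binarize_stochastic(layer)   # may differ between forward passes
```

Because the stochastic variant resamples on each forward pass, an iterative attacker sees a slightly different network at every step, which is one plausible reading of why such attacks lose effectiveness.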

An Integrated Simulator and Dataset that Combines Grasping and Vision for Deep Learning

Apr 17, 2017

Abstract: Deep learning is an established framework for learning hierarchical data representations. While compute power is in abundance, one of the main challenges in applying this framework to robotic grasping has been obtaining the amount of data needed to learn these representations, and structuring the data to the task at hand. Among contemporary approaches in the literature, we highlight key properties that have encouraged the use of deep learning techniques, and in this paper, detail our experience in developing a simulator for collecting cylindrical precision grasps of a multi-fingered dexterous robotic hand.

The Ciona17 Dataset for Semantic Segmentation of Invasive Species in a Marine Aquaculture Environment

Feb 18, 2017

Abstract: An original dataset for semantic segmentation, Ciona17, is introduced, which to the best of the authors' knowledge, is the first dataset of its kind with pixel-level annotations pertaining to invasive species in a marine environment. Diverse outdoor illumination, a range of object shapes, colour, and severe occlusion provide a significant real world challenge for the computer vision community. An accompanying ground-truthing tool for superpixel labeling, Truth and Crop, is also introduced. Finally, we provide a baseline using a variant of Fully Convolutional Networks, and report results in terms of the standard mean intersection over union (mIoU) metric.
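For readers unfamiliar with the mIoU metric mentioned above, the following sketch computes it over flattened label maps. It is a generic illustration, not the paper's evaluation code; the function name and the choice to skip classes absent from both prediction and ground truth are assumptions.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union for flat sequences of integer class labels."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if p == t:
            # Pixel counts once toward both intersection and union of its class.
            inter[p] += 1
            union[p] += 1
        else:
            # Mismatch enlarges the union of both the predicted and the true class.
            union[p] += 1
            union[t] += 1
    # Assumption: classes absent from both inputs are excluded from the mean.
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    if not ious:
        raise ValueError("no class present in pred or target")
    return sum(ious) / len(ious)


# Four pixels, two classes: IoU is 1/2 for class 0 and 2/3 for class 1.
score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```

Averaging per-class IoU rather than pooling pixels keeps rare classes from being swamped by the background, which matters when the objects of interest occupy a small share of each image.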

Modeling Grasp Motor Imagery through Deep Conditional Generative Models

Jan 11, 2017

Abstract: Grasping is a complex process involving knowledge of the object, the surroundings, and of oneself. While humans are able to integrate and process all of the sensory information required for performing this task, equipping machines with this capability is an extremely challenging endeavor. In this paper, we investigate how deep learning techniques can allow us to translate high-level concepts such as motor imagery to the problem of robotic grasp synthesis. We explore a paradigm based on generative models for learning integrated object-action representations, and demonstrate its capacity for capturing and generating multimodal, multi-finger grasp configurations on a simulated grasping dataset.
