Mateus Espadoto

Knowledge Graphs as Structured Memory for Embedding Spaces: From Training Clusters to Explainable Inference

Nov 18, 2025

Abstract: We introduce Graph Memory (GM), a structured non-parametric framework that augments embedding-based inference with a compact, relational memory over region-level prototypes. Rather than treating each training instance in isolation, GM summarizes the embedding space into prototype nodes annotated with reliability indicators and connected by edges that encode geometric and contextual relations. This design unifies instance retrieval, prototype-based reasoning, and graph-based label propagation within a single inductive model that supports both efficient inference and faithful explanation. Experiments on synthetic and real datasets including breast histopathology (IDC) show that GM achieves accuracy competitive with $k$NN and Label Spreading while offering substantially better calibration and smoother decision boundaries, all with an order of magnitude fewer samples. By explicitly modeling reliability and relational structure, GM provides a principled bridge between local evidence and global consistency in non-parametric learning.
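The abstract gives no implementation details, so the following is only a loose sketch of the general idea of a prototype memory with reliability scores and neighbor edges. Everything in it is an assumption made for illustration, not the GM method itself: prototypes come from plain k-means, reliability is the Laplace-smoothed label purity of each prototype, edges link each prototype to its nearest prototypes, and a single reliability-weighted smoothing step stands in for label propagation. All function names and parameters (`kmeans`, `build_memory`, `predict`, `alpha`, `n_neighbors`) are hypothetical.

```python
import numpy as np

def kmeans(X, k, iters=25, seed=0):
    """Plain Lloyd's algorithm; returns centroids and the final assignment."""
    rng = np.random.default_rng(seed)
    C = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(iters):
        assign = np.argmin(((X[:, None, :] - C[None, :, :]) ** 2).sum(-1), axis=1)
        for j in range(k):
            if np.any(assign == j):  # keep the old centroid if a cluster empties
                C[j] = X[assign == j].mean(axis=0)
    assign = np.argmin(((X[:, None, :] - C[None, :, :]) ** 2).sum(-1), axis=1)
    return C, assign

def build_memory(X, y, k=8, n_neighbors=2, alpha=0.3):
    """Summarize labeled embeddings into k prototype nodes (illustrative only)."""
    n_classes = int(y.max()) + 1
    C, assign = kmeans(X, k)
    counts = np.zeros((k, n_classes))
    np.add.at(counts, (assign, y), 1.0)  # per-prototype class histogram
    probs = (counts + 1.0) / (counts.sum(axis=1, keepdims=True) + n_classes)
    reliability = probs.max(axis=1)  # assumption: purity as reliability
    d = ((C[:, None, :] - C[None, :, :]) ** 2).sum(-1)
    np.fill_diagonal(d, np.inf)
    edges = np.argsort(d, axis=1)[:, :n_neighbors]  # nearest-prototype edges
    w = reliability[edges]  # shape (k, n_neighbors)
    neigh = (w[:, :, None] * probs[edges]).sum(axis=1) / w.sum(axis=1, keepdims=True)
    smoothed = (1 - alpha) * probs + alpha * neigh  # one smoothing step over edges
    return {"centroids": C, "probs": smoothed,
            "reliability": reliability, "edges": edges}

def predict(memory, X):
    """Classify by nearest prototype; also return the prototype used."""
    d = ((X[:, None, :] - memory["centroids"][None, :, :]) ** 2).sum(-1)
    nearest = d.argmin(axis=1)
    return memory["probs"][nearest].argmax(axis=1), nearest

# Toy two-class data standing in for an embedding space.
rng = np.random.default_rng(0)

def sample(n):
    y = rng.integers(0, 2, n)
    shift = np.where(y[:, None] == 1, [3.0, 0.0], [-3.0, 0.0])
    return rng.normal(0.0, 1.0, (n, 2)) + shift, y

X_train, y_train = sample(400)
X_test, y_test = sample(200)
memory = build_memory(X_train, y_train)  # 400 samples summarized by 8 prototypes
y_pred, used = predict(memory, X_test)
accuracy = float((y_pred == y_test).mean())
```

The `used` array names the prototype behind each prediction, so `memory["reliability"][used]` and `memory["edges"][used]` give a simple per-prediction explanation in this toy setting.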

Creating User-steerable Projections with Interactive Semantic Mapping

Jun 18, 2025

Abstract: Dimensionality reduction (DR) techniques map high-dimensional data into lower-dimensional spaces. Yet, current DR techniques are not designed to explore semantic structure that is not directly available in the form of variables or class labels. We introduce a novel user-guided projection framework for image and text data that enables customizable, interpretable data visualizations via zero-shot classification with Multimodal Large Language Models (MLLMs). Users steer projections dynamically via natural-language guiding prompts that specify high-level semantic relationships of interest which are not explicitly present in the data dimensions. We evaluate our method across several datasets and show that it not only enhances cluster separation, but also transforms DR into an interactive, user-driven process. Our approach bridges the gap between fully automated DR techniques and human-centered data exploration, offering a flexible and adaptive way to tailor projections to specific analytical needs.

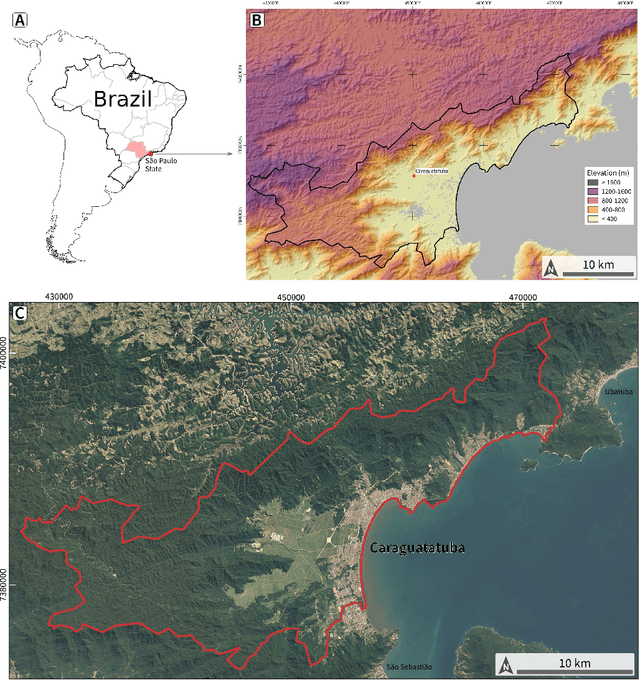

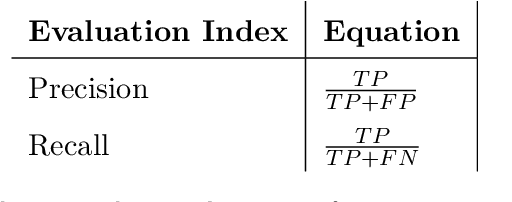

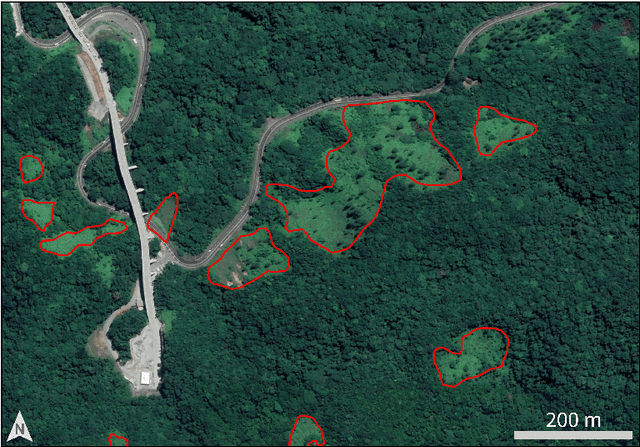

Relict landslide detection in rainforest areas using a combination of k-means clustering algorithm and Deep-Learning semantic segmentation models

Aug 04, 2022

Abstract: Landslides are destructive and recurrent natural disasters on steep slopes and represent a risk to lives and properties. Knowledge of relict landslides' location is vital to understand their mechanisms, update inventory maps and improve risk assessment. However, relict landslide mapping is complex in tropical regions covered with rainforest vegetation. A new CNN approach is proposed for semi-automatic detection of relict landslides, which uses a dataset generated by a k-means clustering algorithm and has a pre-training step. The weights computed in the pre-training are used to fine-tune the CNN training process. A comparison between the proposed and standard approaches is performed using CBERS-4A WPM images. Three CNNs for semantic segmentation are used (U-Net, FPN, Linknet) with two augmented datasets. A total of 42 combinations of CNNs are tested. Values of precision and recall were very similar between the combinations tested. Recall was higher than 75% for every combination, but precision values were usually smaller than 20%. False positive (FP) samples were identified as the cause of these low precision values. Predictions of the proposed approach were more accurate and correctly detected more landslides. This work demonstrates that there are limitations for detecting relict landslides in areas covered with rainforest, mainly related to similarities between the spectral response of pastures and deforested areas with *Gleichenella sp.* ferns, commonly used as an indicator of landslide scars.
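To make the reported precision and recall figures concrete, the standard definitions can be rearranged as follows. The symbols TP, FP and FN (true positives, false positives, false negatives) are the usual confusion-matrix counts; the bounds are plain arithmetic on the thresholds quoted in the abstract, not additional results from the paper.

$$
\text{precision} = \frac{TP}{TP + FP} < 0.20 \;\Longrightarrow\; FP > 4\,TP,
\qquad
\text{recall} = \frac{TP}{TP + FN} > 0.75 \;\Longrightarrow\; FN < \tfrac{1}{3}\,TP .
$$

In words, whenever both thresholds hold, false positives outnumber correct detections by more than four to one, while fewer than one landslide sample is missed for every three detected.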

UnProjection: Leveraging Inverse-Projections for Visual Analytics of High-Dimensional Data

Nov 02, 2021

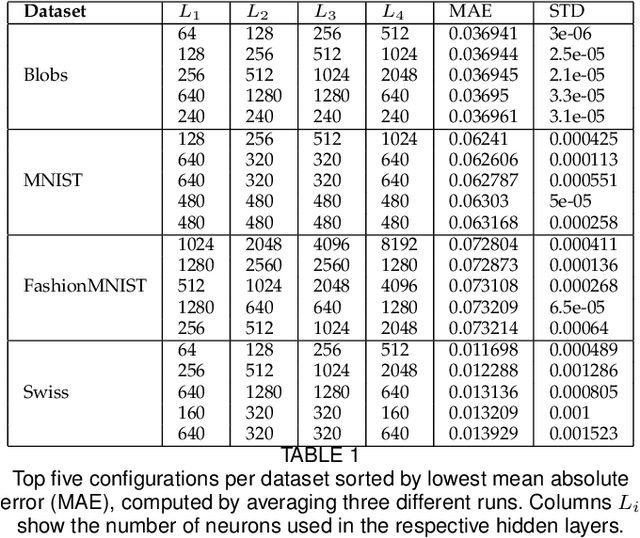

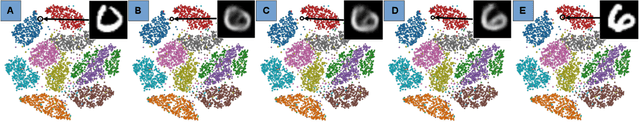

Abstract: Projection techniques are often used to visualize high-dimensional data, allowing users to better understand the overall structure of multi-dimensional spaces on a 2D screen. Although many such methods exist, comparatively little work has been done on generalizable methods of inverse-projection -- the process of mapping the projected points, or more generally, the projection space back to the original high-dimensional space. In this paper we present NNInv, a deep learning technique with the ability to approximate the inverse of any projection or mapping. NNInv learns to reconstruct high-dimensional data from an arbitrary point on a 2D projection space, giving users the ability to interact with the learned high-dimensional representation in a visual analytics system. We provide an analysis of the parameter space of NNInv, and offer guidance in selecting these parameters. We extend validation of the effectiveness of NNInv through a series of quantitative and qualitative analyses. We then demonstrate the method's utility by applying it to three visualization tasks: interactive instance interpolation, classifier agreement, and gradient visualization.

HyperNP: Interactive Visual Exploration of Multidimensional Projection Hyperparameters

Jun 25, 2021

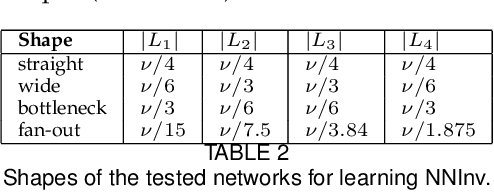

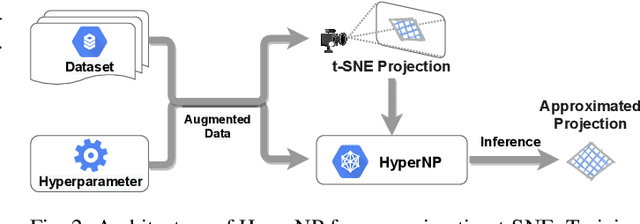

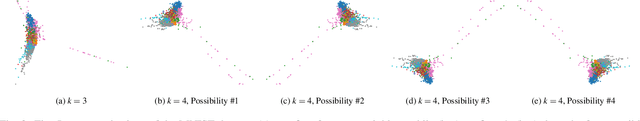

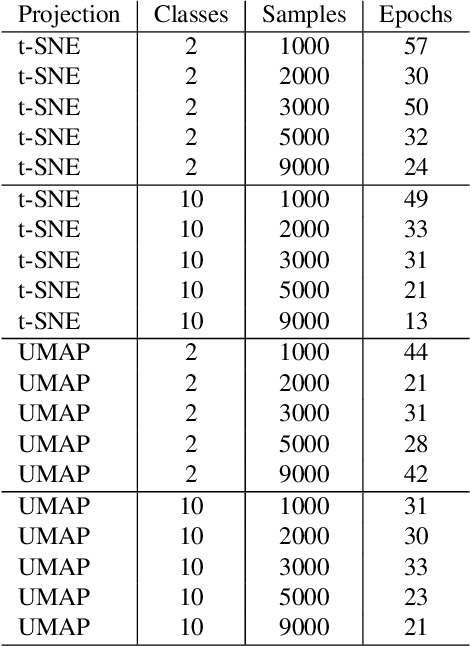

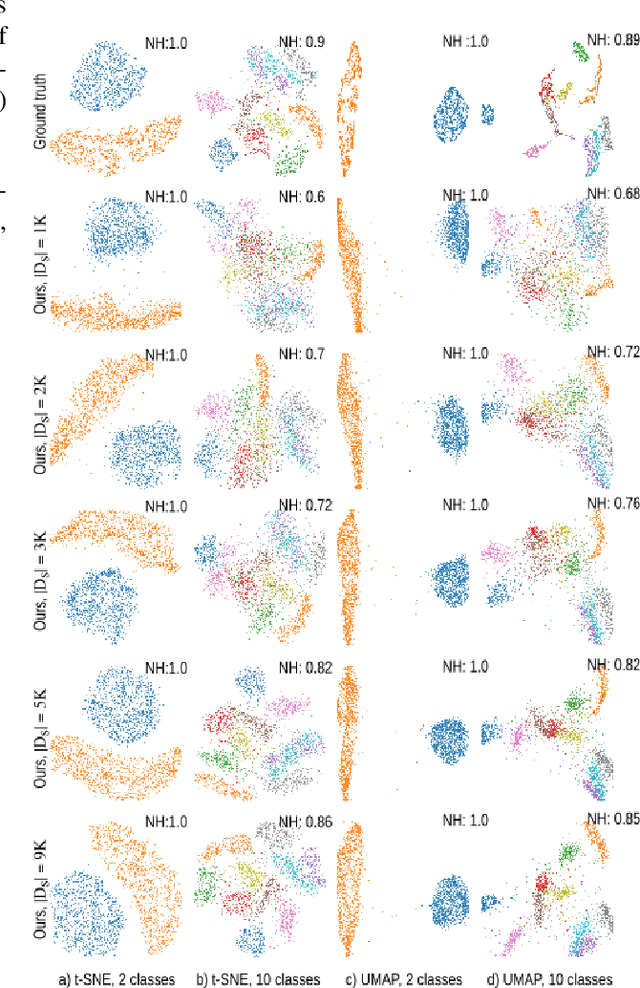

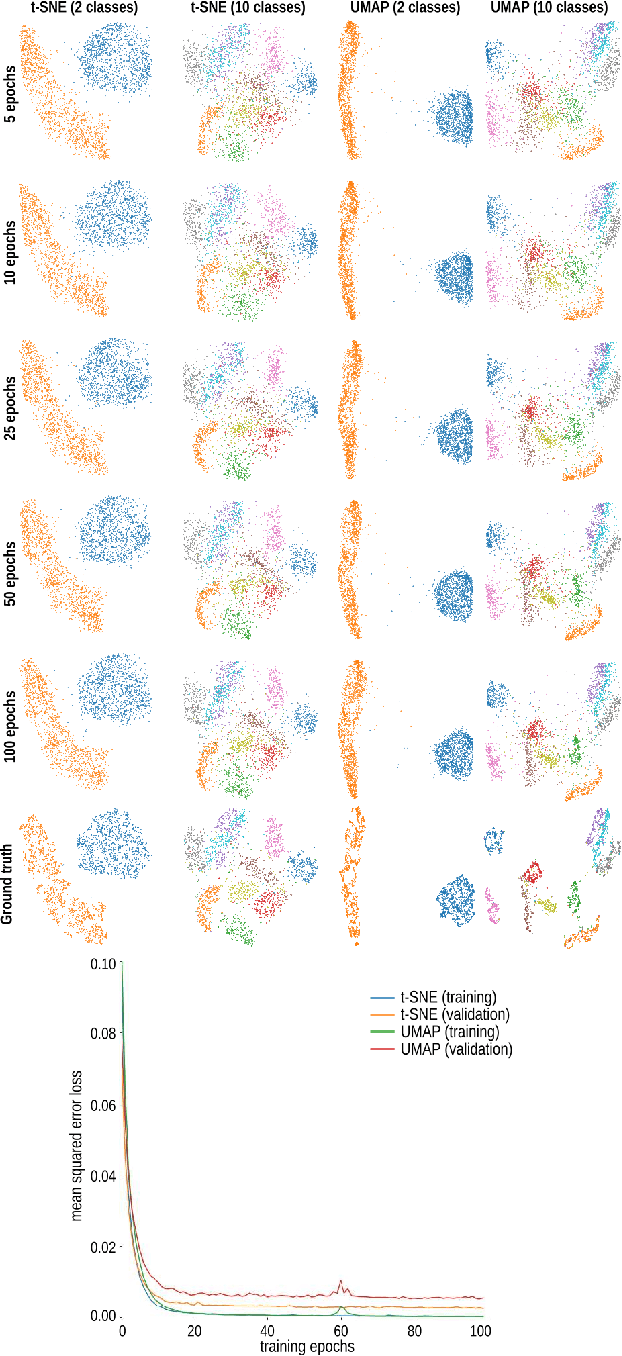

Abstract: Projection algorithms such as t-SNE or UMAP are useful for the visualization of high-dimensional data, but depend on hyperparameters which must be tuned carefully. Unfortunately, iteratively recomputing projections to find the optimal hyperparameter value is computationally intensive and unintuitive due to the stochastic nature of these methods. In this paper we propose HyperNP, a scalable method that allows for real-time interactive hyperparameter exploration of projection methods by training neural network approximations. HyperNP can be trained on a fraction of the total data instances and hyperparameter configurations and can compute projections for new data and hyperparameters at interactive speeds. HyperNP is compact in size and fast to compute, thus allowing it to be embedded in lightweight visualization systems such as web browsers. We evaluate HyperNP across three datasets in terms of performance and speed. The results suggest that HyperNP is accurate, scalable, interactive, and appropriate for use in real-world settings.

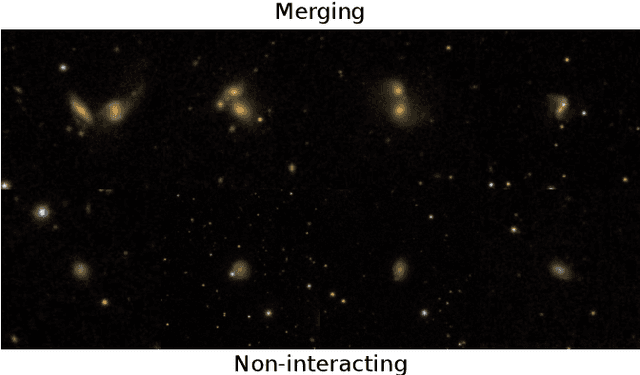

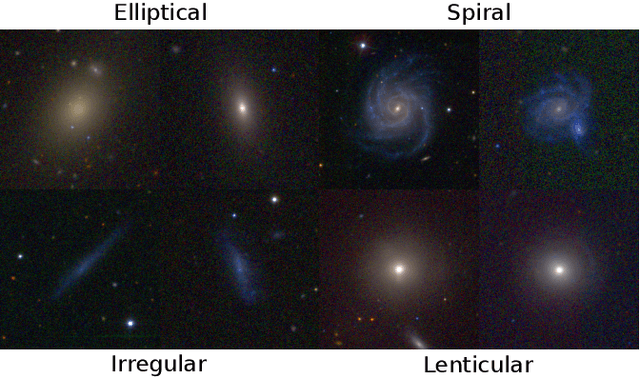

Self-supervised Learning for Astronomical Image Classification

Apr 23, 2020

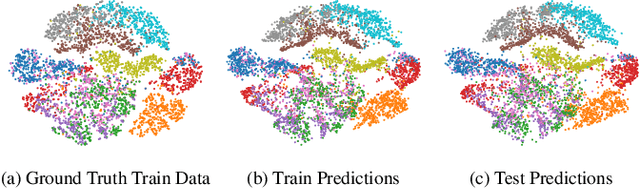

Abstract: In Astronomy, a huge amount of image data is generated daily by photometric surveys, which scan the sky to collect data from stars, galaxies and other celestial objects. In this paper, we propose a technique to leverage unlabeled astronomical images to pre-train deep convolutional neural networks, in order to learn a domain-specific feature extractor which improves the results of machine learning techniques in setups with small amounts of labeled data available. We show that our technique produces results which are in many cases better than using ImageNet pre-training.

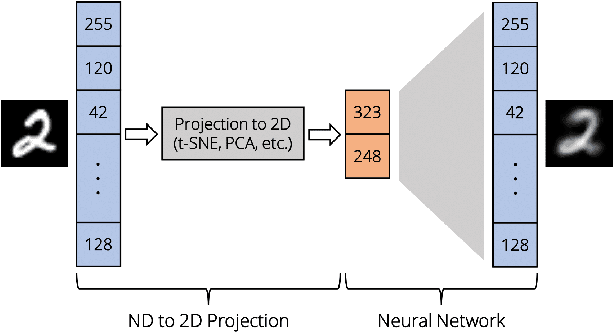

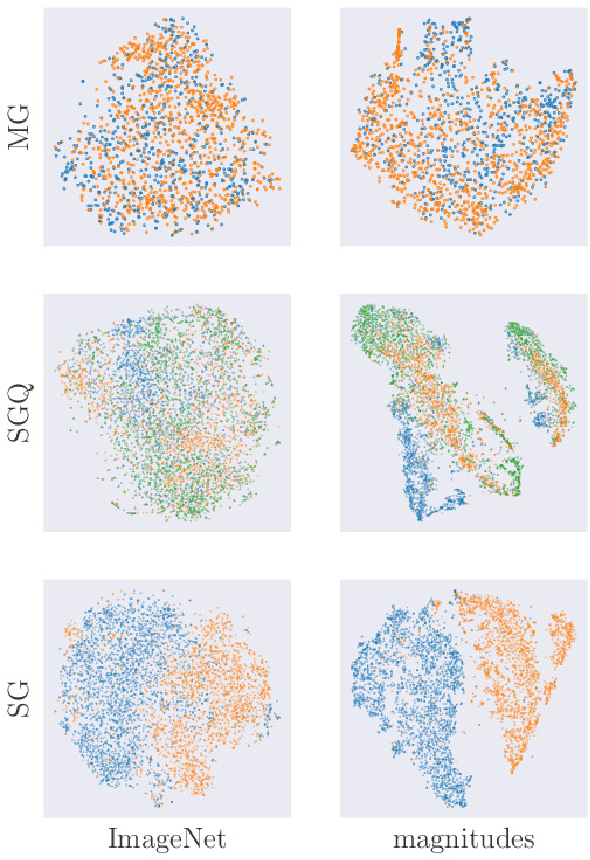

Deep Learning Multidimensional Projections

Feb 21, 2019

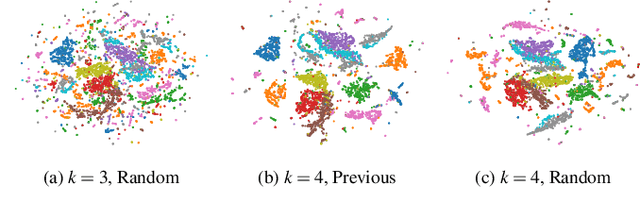

Abstract: Dimensionality reduction methods, also known as projections, are frequently used for exploring multidimensional data in machine learning, data science, and information visualization. Among these, t-SNE and its variants have become very popular for their ability to visually separate distinct data clusters. However, such methods are computationally expensive for large datasets, suffer from stability problems, and cannot directly handle out-of-sample data. We propose a learning approach to construct such projections. We train a deep neural network based on a collection of samples from a given data universe, and their corresponding projections, and next use the network to infer projections of data from the same, or similar, universes. Our approach generates projections with characteristics similar to the learned ones, is computationally two to three orders of magnitude faster than SNE-class methods, has no complex-to-set user parameters, handles out-of-sample data in a stable manner, and can be used to learn any projection technique. We demonstrate our proposal on several real-world high-dimensional datasets from machine learning.
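The train-then-infer idea described in this abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' network or training setup: PCA stands in for the "teacher" projection (chosen only because it offers a ground truth for held-out points, which t-SNE does not), the data are synthetic Gaussian clusters, and the regressor is a hand-written one-hidden-layer MLP trained by full-batch gradient descent. The names `make_blobs`, `teacher` and `train_mlp` and all hyperparameters are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_blobs(n, centers):
    """Toy data: n points drawn around randomly chosen cluster centers."""
    labels = rng.integers(0, len(centers), n)
    return centers[labels] + rng.normal(0.0, 1.0, (n, centers.shape[1]))

centers = rng.normal(0.0, 3.0, (3, 10))
X_train, X_test = make_blobs(300, centers), make_blobs(100, centers)
mu, sigma = X_train.mean(axis=0), X_train.std(axis=0)
X_train, X_test = (X_train - mu) / sigma, (X_test - mu) / sigma

# Teacher projection (assumption: PCA via SVD of the standardized training set).
_, _, Vt = np.linalg.svd(X_train, full_matrices=False)

def teacher(X):
    return X @ Vt[:2].T

raw = teacher(X_train)
lo, hi = raw.min(axis=0), raw.max(axis=0)
Y_train = (raw - lo) / (hi - lo)            # 2D targets scaled to [0, 1]
Y_test = (teacher(X_test) - lo) / (hi - lo)  # ground truth for held-out points

def train_mlp(X, Y, hidden=16, epochs=4000, lr=0.005, seed=1):
    """Fit a one-hidden-layer ReLU network to map X onto its 2D projection Y."""
    g = np.random.default_rng(seed)
    W1 = g.normal(0.0, np.sqrt(2.0 / X.shape[1]), (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = g.normal(0.0, np.sqrt(1.0 / hidden), (hidden, Y.shape[1]))
    b2 = np.zeros(Y.shape[1])
    n = len(X)
    for _ in range(epochs):
        H = np.maximum(X @ W1 + b1, 0.0)      # hidden activations
        P = H @ W2 + b2                       # predicted 2D coordinates
        G = 2.0 * (P - Y) / n                 # gradient of the squared error
        GH = (G @ W2.T) * (H > 0)             # backpropagate through ReLU
        W2 -= lr * (H.T @ G)
        b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ GH)
        b1 -= lr * GH.sum(axis=0)
    return lambda Z: np.maximum(Z @ W1 + b1, 0.0) @ W2 + b2

project = train_mlp(X_train, Y_train)
Y_pred = project(X_test)                      # out-of-sample projection
test_mse = float(((Y_pred - Y_test) ** 2).mean())
baseline_mse = float(((Y_train.mean(axis=0) - Y_test) ** 2).mean())
```

Once trained, `project` is a deterministic function, so projecting new points needs only two matrix products and repeated calls on the same input always return the same 2D position. Comparing `test_mse` with `baseline_mse` (always predicting the mean training position) gives a rough check that the network learned the mapping rather than a constant.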
