Alexandru Telea

ShaRP: Shape-Regularized Multidimensional Projections

Jun 01, 2023

Abstract: Projections, or dimensionality reduction methods, are techniques of choice for the visual exploration of high-dimensional data. Many such techniques exist, each with a distinct visual signature, i.e., a recognizable way of arranging points in the resulting scatterplot. Such signatures are implicit consequences of algorithm design choices, such as whether the method focuses on preserving local or global data patterns, the optimization technique used, and hyperparameter settings. We present a novel projection technique, ShaRP, that gives users explicit control over the visual signature of the created scatterplot, which can better cater to interactive visualization scenarios. ShaRP scales well with dimensionality and dataset size, generically handles any quantitative dataset, and provides this extended functionality of controlling projection shapes at a small, user-controllable cost in terms of quality metrics.

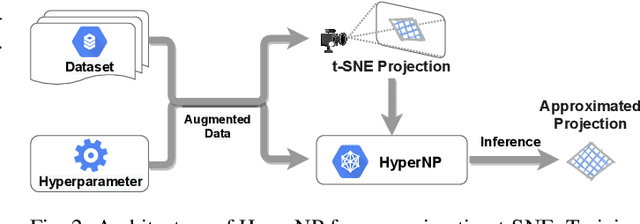

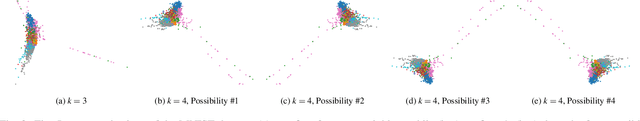

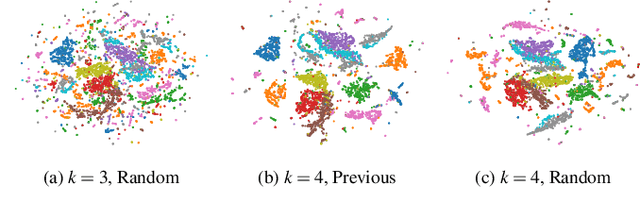

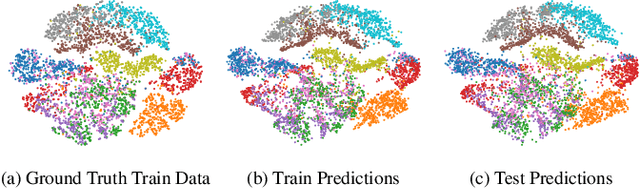

HyperNP: Interactive Visual Exploration of Multidimensional Projection Hyperparameters

Jun 25, 2021

Abstract: Projection algorithms such as t-SNE or UMAP are useful for visualizing high-dimensional data, but they depend on hyperparameters that must be tuned carefully. Unfortunately, iteratively recomputing projections to find the optimal hyperparameter value is computationally intensive and unintuitive due to the stochastic nature of these methods. In this paper, we propose HyperNP, a scalable method that allows real-time interactive exploration of projection hyperparameters by training neural network approximations of the projection methods. HyperNP can be trained on a fraction of the total data instances and hyperparameter configurations, and it can compute projections for new data and hyperparameters at interactive speeds. HyperNP is compact in size and fast to compute, which allows it to be embedded in lightweight visualization systems such as web browsers. We evaluate HyperNP on three datasets in terms of accuracy and speed. The results suggest that HyperNP is accurate, scalable, interactive, and appropriate for use in real-world settings.

Supporting Optimal Phase Space Reconstructions Using Neural Network Architecture for Time Series Modeling

Jun 19, 2020

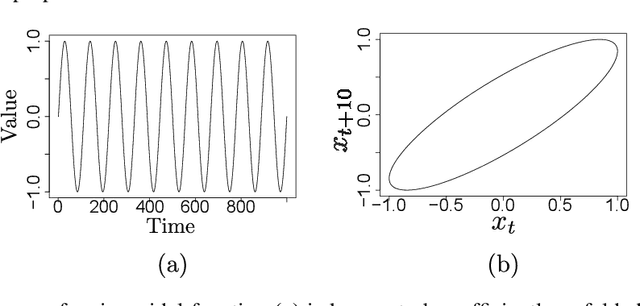

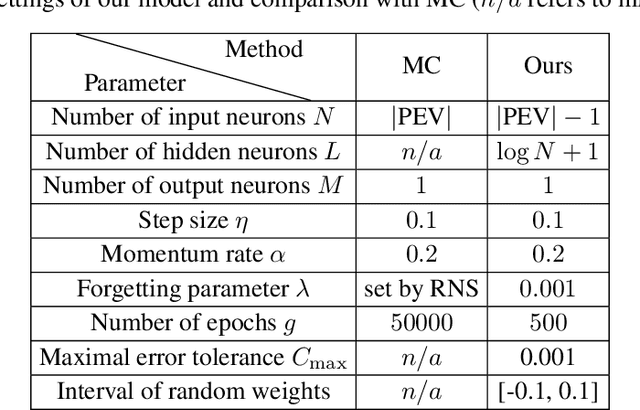

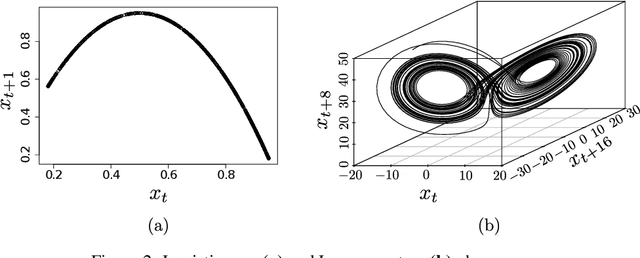

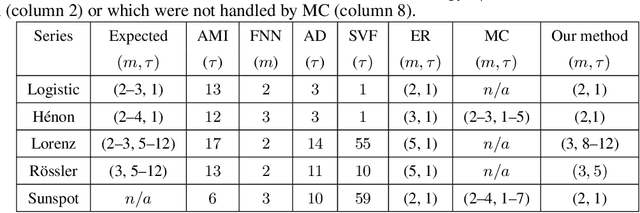

Abstract: The reconstruction of phase spaces is an essential step in analyzing time series according to Dynamical System concepts. A regression performed on such spaces unveils the relationships among system states, from which we can derive their generating rules, that is, the most probable set of functions responsible for generating observations over time. Most approaches rely on Takens' embedding theorem to unfold the phase space, which requires two parameters: the embedding dimension and the time delay. Although several methods have been proposed to empirically estimate these parameters, they still lack consistency and robustness, which motivated this paper. As an alternative, we propose an artificial neural network with a forgetting mechanism that implicitly learns the properties of the phase space, whatever they are. The network trains on forecasting errors and, after converging, its architecture is used to estimate the embedding parameters. Experimental results confirm that our approach is as competitive as or better than most state-of-the-art strategies, while also revealing the temporal relationships among time-series observations.
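For readers unfamiliar with Takens-style reconstruction, the embedding step itself is standard: given a scalar series x(t), an embedding dimension m, and a time delay τ, each reconstructed state is the vector [x(t), x(t+τ), ..., x(t+(m-1)τ)]. The sketch below only builds these delay vectors for hand-picked m and τ; it does not reproduce the paper's neural-network estimation of those parameters. The function name `delay_embed` is hypothetical, and the forward-shift ordering is an assumed convention (some texts use x(t), x(t-τ), ... instead, which yields the same geometry with reversed columns).

```python
import numpy as np


def delay_embed(series, m, tau):
    """Build delay-coordinate vectors from a 1-D series (hypothetical helper).

    Row t is [x(t), x(t + tau), ..., x(t + (m - 1) * tau)]; this forward
    ordering is an assumed convention, not taken from the paper.
    """
    x = np.asarray(series, dtype=float)
    if x.ndim != 1:
        raise ValueError("series must be one-dimensional")
    if m < 1 or tau < 1:
        raise ValueError("m and tau must be positive integers")
    # Number of complete delay vectors that fit inside the series.
    n_vectors = len(x) - (m - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for the chosen m and tau")
    # Column i is the series shifted forward by i * tau samples.
    return np.column_stack([x[i * tau : i * tau + n_vectors] for i in range(m)])


# Usage: a sine wave with period 20 samples, embedded with m = 2 and a
# quarter-period delay tau = 5, pairs sin(theta) with cos(theta), so every
# reconstructed state lies on the unit circle.
signal = np.sin(2 * np.pi * np.arange(200) / 20)
states = delay_embed(signal, m=2, tau=5)
print(states.shape)
```

The sine example illustrates why the choice of m and τ matters: a delay close to a quarter period unfolds the oscillation into a clean loop, whereas a delay equal to a full period would collapse it onto a line, which is the kind of sensitivity that motivates estimating these parameters from data.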
