Marius Pedersen

VoD: Learning Volume of Differences for Video-Based Deepfake Detection

Mar 10, 2025

Abstract: The rapid development of deep learning and generative AI technologies has profoundly transformed the digital content landscape, creating realistic Deepfakes that pose substantial challenges to public trust and digital media integrity. This paper introduces a novel Deepfake detection framework, Volume of Differences (VoD), designed to enhance detection accuracy by exploiting temporal and spatial inconsistencies between consecutive video frames. VoD employs a progressive learning approach that captures differences across multiple axes through the use of consecutive frame differences (CFD) and a network with stepwise expansions. We evaluate our approach with intra-dataset and cross-dataset testing scenarios on various well-known Deepfake datasets. Our findings demonstrate that VoD excels with the data it has been trained on and shows strong adaptability to novel, unseen data. Additionally, comprehensive ablation studies examine various configurations of segment length, sampling steps, and intervals, offering valuable insights for optimizing the framework. The code for our VoD framework is available at https://github.com/xuyingzhongguo/VoD.
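The abstract does not spell out how consecutive frame differences are computed, so the following is only a minimal sketch. It assumes CFD means the element-wise difference between successive frames sampled at a fixed step within a segment. The function name `consecutive_frame_differences` and the parameters `segment_length`, `step`, and `start` are hypothetical and are not taken from the VoD repository.

```python
import numpy as np

def consecutive_frame_differences(frames, segment_length=4, step=1, start=0):
    """Differences between successive sampled frames (illustrative assumption).

    frames: array of shape (T, H, W, C).
    Samples `segment_length` frames beginning at `start`, spaced `step` apart,
    and returns an array of shape (segment_length - 1, H, W, C).
    """
    frames = np.asarray(frames, dtype=np.float32)
    idx = start + step * np.arange(segment_length)
    if idx[-1] >= len(frames):
        raise ValueError("segment exceeds video length")
    sampled = frames[idx]
    # Subtract each sampled frame from the one that follows it.
    return sampled[1:] - sampled[:-1]

# Toy video: 8 frames of size 1x1x1, where frame t holds the value t^2.
video = np.arange(8, dtype=np.float32).reshape(8, 1, 1, 1) ** 2
cfd = consecutive_frame_differences(video, segment_length=4, step=2)
```

In this toy setup, a segment of four frames yields three difference maps, which could then be stacked as the input to a detection network.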

Chaotic Map based Compression Approach to Classification

Feb 17, 2025

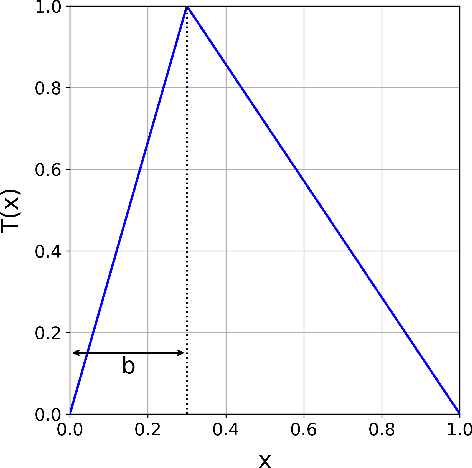

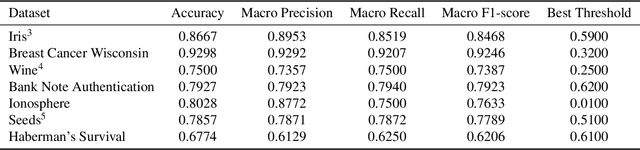

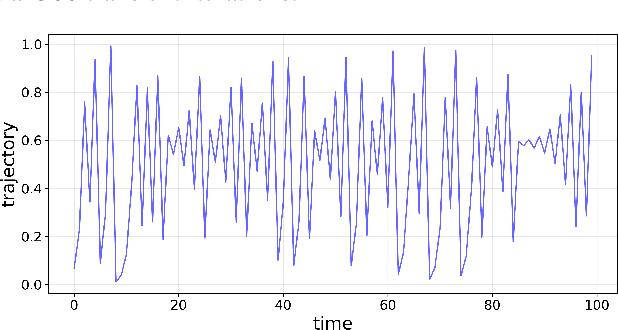

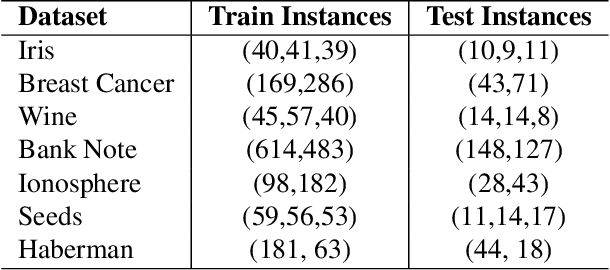

Abstract: Modern machine learning approaches often prioritize performance at the cost of increased complexity, computational demands, and reduced interpretability. This paper introduces a novel framework that challenges this trend by reinterpreting learning from an information-theoretic perspective, viewing it as a search for encoding schemes that capture intrinsic data structures through compact representations. Rather than following the conventional approach of fitting data to complex models, we propose a fundamentally different method that maps data to intervals of initial conditions in a dynamical system. Our GLS (Generalized Lüroth Series) coding compression classifier employs skew tent maps, a class of chaotic maps, both for encoding data into initial conditions and for subsequent recovery. The effectiveness of this simple framework is noteworthy, with performance closely approaching that of well-established machine learning methods. On the breast cancer dataset, our approach achieves 92.98% accuracy, comparable to Naive Bayes at 94.74%. While these results do not exceed state-of-the-art performance, the significance of our contribution lies not in outperforming existing methods but in demonstrating that a fundamentally simpler, more interpretable approach can achieve competitive results.
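To illustrate the idea of mapping data to an interval of initial conditions, here is a minimal sketch of a skew tent map with back-iteration over a binary symbol sequence. It is not the paper's classifier: the abstract does not say how data are turned into symbols or how classes are decided. The function names and the skew parameter `b` are illustrative assumptions.

```python
def skew_tent(x, b):
    """Skew tent map on [0, 1] with peak at x = b (0 < b < 1)."""
    return x / b if x < b else (1.0 - x) / (1.0 - b)

def encode(symbols, b):
    """Interval of initial conditions whose orbit visits the given symbols.

    Symbol 0 means x < b, symbol 1 means x >= b. The interval is found by
    applying the inverse branches of the map from the last symbol backwards.
    """
    lo, hi = 0.0, 1.0
    for s in reversed(symbols):
        if s == 0:
            lo, hi = b * lo, b * hi  # increasing branch
        else:
            # Decreasing branch: the endpoints swap.
            lo, hi = 1.0 - (1.0 - b) * hi, 1.0 - (1.0 - b) * lo
    return lo, hi

def decode(x, b, n):
    """Recover n symbols by iterating the map forward from x."""
    out = []
    for _ in range(n):
        out.append(0 if x < b else 1)
        x = skew_tent(x, b)
    return out

symbols = [1, 0, 0, 1, 1, 0, 1, 0]
lo, hi = encode(symbols, b=0.3)
recovered = decode((lo + hi) / 2.0, b=0.3, n=len(symbols))
```

The interval width equals the product of `b` for every 0 and `1 - b` for every 1, so sequences that are more probable under the skew map to wider intervals. This is the link to compression.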

Uncertainty-Aware Regularization for Image-to-Image Translation

Nov 24, 2024

Abstract: The importance of quantifying uncertainty in deep networks has become paramount for reliable real-world applications. In this paper, we propose a method to improve uncertainty estimation in medical Image-to-Image (I2I) translation. Our model integrates aleatoric uncertainty and employs Uncertainty-Aware Regularization (UAR) inspired by simple priors to refine uncertainty estimates and enhance reconstruction quality. We show that by leveraging simple priors on parameters, our approach captures more robust uncertainty maps, effectively refining them to indicate precisely where the network encounters difficulties, while being less affected by noise. Our experiments demonstrate that UAR not only improves translation performance, but also provides better uncertainty estimations, particularly in the presence of noise and artifacts. We validate our approach using two medical imaging datasets, showcasing its effectiveness in maintaining high confidence in familiar regions while accurately identifying areas of uncertainty in novel/ambiguous scenarios.
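One common way to model aleatoric uncertainty is to have the network predict a per-pixel mean and log-variance and to train with a heteroscedastic Gaussian negative log-likelihood. The sketch below shows only that generic loss. Whether the paper uses a Gaussian likelihood is an assumption, and the UAR regularizer itself is not described in the abstract and is not shown here. The function name `gaussian_nll` is hypothetical.

```python
import math

def gaussian_nll(pred_mean, pred_log_var, target):
    """Mean heteroscedastic Gaussian NLL over pixels (constant term dropped).

    Each pixel contributes 0.5 * exp(-s) * (y - mu)^2 + 0.5 * s, where s is
    the predicted log-variance. A large s down-weights the squared error but
    is itself penalised, so the model cannot claim uncertainty for free.
    """
    total = 0.0
    for mu, s, y in zip(pred_mean, pred_log_var, target):
        total += 0.5 * math.exp(-s) * (y - mu) ** 2 + 0.5 * s
    return total / len(target)

# Same prediction error, two different uncertainty estimates.
confident = gaussian_nll([0.0], [0.0], [2.0])            # variance 1
uncertain = gaussian_nll([0.0], [math.log(4.0)], [2.0])  # variance 4
```

Here the loss is lower when the predicted variance matches the squared error, which is what pushes the uncertainty map to highlight regions where the network struggles.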

3D Reconstruction of the Human Colon from Capsule Endoscope Video

Jul 21, 2024

Abstract: As the number of people affected by diseases in the gastrointestinal system is ever-increasing, a higher demand for preventive screening is inevitable. This will significantly increase the workload on gastroenterologists. To help reduce the workload, tools from computer vision may be helpful. In this paper, we investigate the possibility of constructing 3D models of whole sections of the human colon using image sequences from wireless capsule endoscope video, providing enhanced viewing for gastroenterologists. As capsule endoscope images contain distortion and artifacts non-ideal for many 3D reconstruction algorithms, the problem is challenging. However, recent developments of virtual graphics-based models of the human gastrointestinal system, where distortion and artifacts can be enabled or disabled, make it possible to "dissect" the problem. The graphical model also provides a ground truth, enabling computation of geometric distortion introduced by the 3D reconstruction method. In this paper, most distortions and artifacts are left out to determine if it is feasible to reconstruct whole sections of the human gastrointestinal system by existing methods. We demonstrate that 3D reconstruction is possible using simultaneous localization and mapping. Further, to reconstruct the gastrointestinal wall surface from the resulting point clouds, which vary greatly in density, Poisson surface reconstruction is a good option. The results are promising, encouraging further research on this problem.

Terrain-Informed Self-Supervised Learning: Enhancing Building Footprint Extraction from LiDAR Data with Limited Annotations

Nov 02, 2023

Abstract: Estimating building footprint maps from geospatial data is of paramount importance in urban planning, development, disaster management, and various other applications. Deep learning methodologies have gained prominence in building segmentation maps, offering the promise of precise footprint extraction without extensive post-processing. However, these methods face challenges in generalization and label efficiency, particularly in remote sensing, where obtaining accurate labels can be both expensive and time-consuming. To address these challenges, we propose terrain-aware self-supervised learning, tailored to remote sensing, using digital elevation models from LiDAR data. We propose to learn a model to differentiate between bare Earth and superimposed structures, enabling the network to implicitly learn domain-relevant features without the need for extensive pixel-level annotations. We test the effectiveness of our approach by evaluating building segmentation performance on test datasets with varying label fractions. Remarkably, with only 1% of the labels (equivalent to 25 labeled examples), our method improves over ImageNet pre-training, showing the advantage of leveraging unlabeled data for feature extraction in the domain of remote sensing. The performance improvement is more pronounced in few-shot scenarios and gradually closes the gap with ImageNet pre-training as the label fraction increases. We test on a dataset characterized by substantial distribution shifts and labeling errors to demonstrate the generalizability of our approach. When compared to other baselines, including ImageNet pre-training and more complex architectures, our approach consistently performs better, demonstrating the efficiency and effectiveness of self-supervised terrain-aware feature learning.

Learning Pairwise Interaction for Generalizable DeepFake Detection

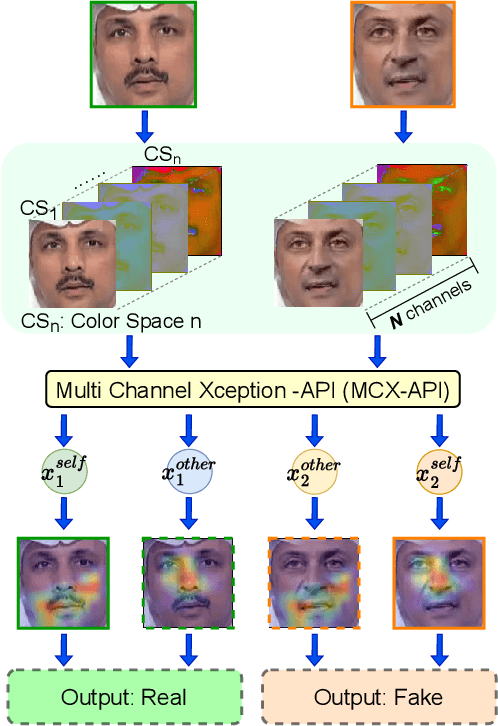

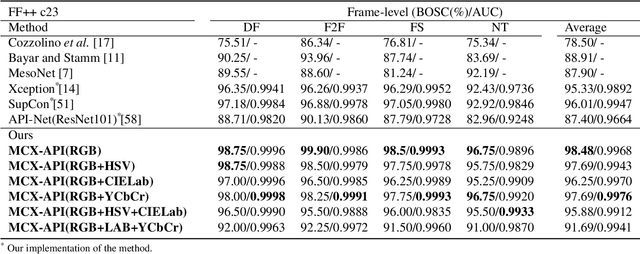

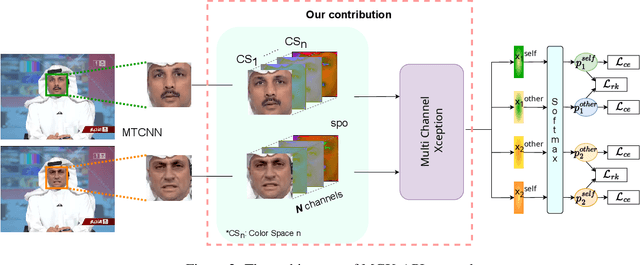

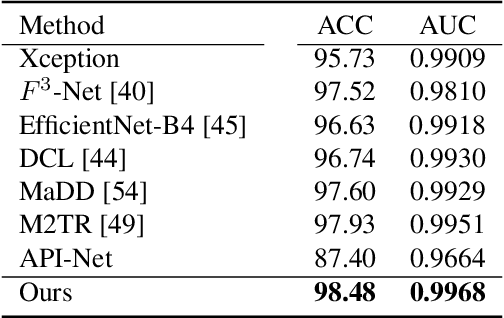

Feb 26, 2023

Abstract: The fast-paced development of DeepFake generation techniques challenges detection schemes designed for known DeepFake types. A reliable DeepFake detection approach must be agnostic to generation types, which can present diverse quality and appearance. Limited generalizability across different generation schemes will restrict the wide-scale deployment of detectors if they fail to handle unseen attacks in an open-set scenario. We propose a new approach, Multi-Channel Xception Attention Pairwise Interaction (MCX-API), that exploits the power of pairwise learning and complementary information from different color space representations in a fine-grained manner. We first validate our idea on a publicly available dataset in an intra-class setting (closed set) with four different DeepFake schemes. Further, we report all the results using balanced-open-set-classification (BOSC) accuracy in an inter-class setting (open set) using three public datasets. Our experiments indicate that our proposed method can generalize better than state-of-the-art DeepFake detectors. We obtain 98.48% BOSC accuracy on the FF++ dataset and 90.87% BOSC accuracy on the CelebDF dataset, suggesting a promising direction for generalization of DeepFake detection. We further utilize t-SNE and attention maps to interpret and visualize the decision-making process of our proposed network. https://github.com/xuyingzhongguo/MCX-API

Evaluating clinical diversity and plausibility of synthetic capsule endoscopic images

Jan 16, 2023

Abstract: Wireless Capsule Endoscopy (WCE) is being increasingly used as an alternative imaging modality for complete and non-invasive screening of the gastrointestinal tract. Although this is advantageous in reducing unnecessary hospital admissions, it also demands that a WCE diagnostic protocol be in place so larger populations can be effectively screened. This calls for training and education protocols attuned specifically to this modality. Like training in other modalities such as traditional endoscopy, CT, MRI, etc., a WCE training protocol would require an atlas comprising a large corpus of images that show vivid descriptions of pathologies and abnormalities, ideally observed over a period of time. Since such comprehensive atlases are presently lacking in WCE, in this work, we propose a deep learning method for utilizing already available studies across different institutions for the creation of a realistic WCE atlas using StyleGAN. We identify clinically relevant attributes in WCE such that synthetic images can be generated with selected attributes on cue. Beyond this, we also simulate several disease progression scenarios. The generated images are evaluated for realism and plausibility through three subjective online experiments with the participation of eight gastroenterology experts from three geographical locations and a variety of years of experience. The results from the experiments indicate that the images are highly realistic and the disease scenarios plausible. The images comprising the atlas are available publicly for use in training applications as well as supplementing real datasets for deep learning.

This changes to that : Combining causal and non-causal explanations to generate disease progression in capsule endoscopy

Dec 05, 2022

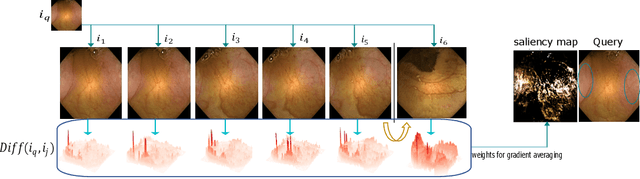

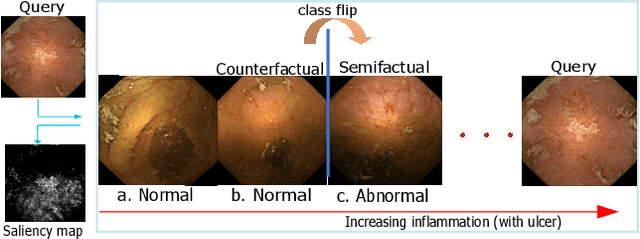

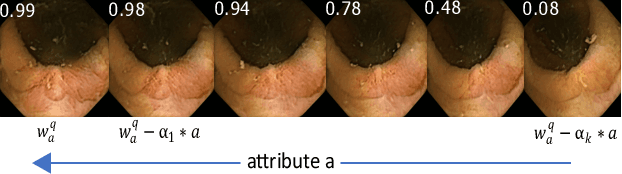

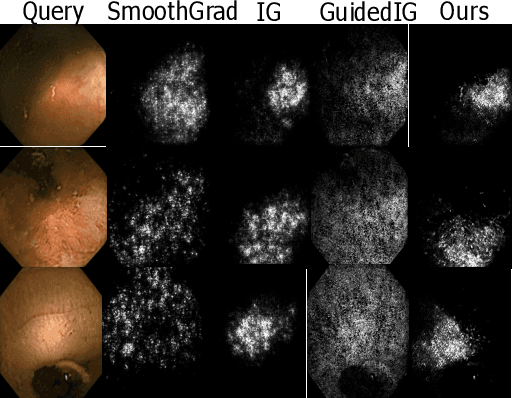

Abstract: Due to the unequivocal need for understanding the decision processes of deep learning networks, both model-dependent and model-agnostic techniques have become very popular. Although both of these ideas provide transparency for automated decision making, most methodologies focus on either using the model gradients (model-dependent) or ignoring the model's internal states and reasoning with a model's behavior/outcome (model-agnostic) to instances. In this work, we propose a unified explanation approach that, given an instance, combines both model-dependent and model-agnostic explanations to produce an explanation set. The generated explanations are not only consistent in the neighborhood of a sample but can also highlight causal relationships between image content and the outcome. We use the Wireless Capsule Endoscopy (WCE) domain to illustrate the effectiveness of our explanations. The saliency maps generated by our approach are comparable or better on the softmax information score.

A Comprehensive Analysis of AI Biases in DeepFake Detection With Massively Annotated Databases

Aug 11, 2022

Abstract: In recent years, image and video manipulations with DeepFake have become a severe concern for security and society. Therefore, many detection models and databases have been proposed to detect DeepFake data reliably. However, there is an increased concern that these models and training databases might be biased and thus cause DeepFake detectors to fail. In this work, we tackle these issues by (a) providing large-scale demographic and non-demographic attribute annotations of 41 different attributes for five popular DeepFake datasets and (b) comprehensively analysing AI-bias of multiple state-of-the-art DeepFake detection models on these databases. The investigation analyses the influence of a large variety of distinctive attributes (from over 65M labels) on the detection performance, including demographic (age, gender, ethnicity) and non-demographic (hair, skin, accessories, etc.) information. The results indicate that investigated databases lack diversity and, more importantly, show that the utilised DeepFake detection models are strongly biased towards many investigated attributes. Moreover, the results show that the models' decision-making might be based on several questionable (biased) assumptions, such as whether a person is smiling or wearing a hat. Depending on the application of such DeepFake detection methods, these biases can lead to generalizability, fairness, and security issues. We hope that the findings of this study and the annotation databases will help to evaluate and mitigate bias in future DeepFake detection techniques. Our annotation datasets are made publicly available.

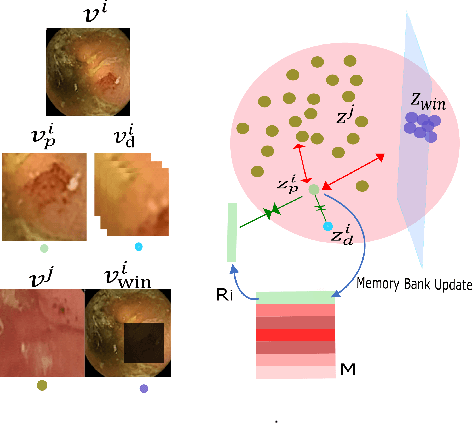

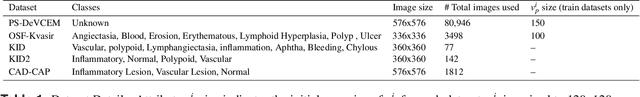

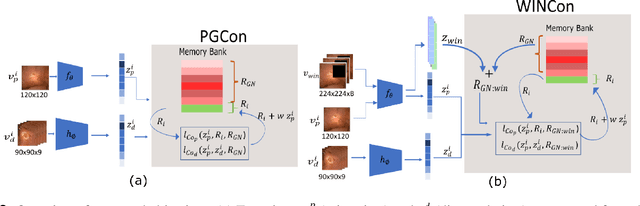

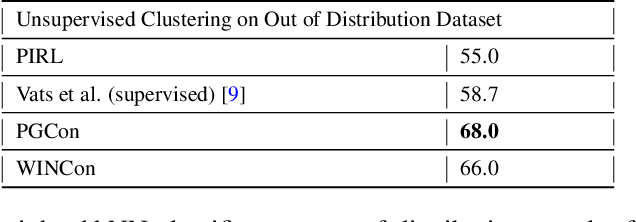

From Labels to Priors in Capsule Endoscopy: A Prior Guided Approach for Improving Generalization with Few Labels

Jun 10, 2022

Abstract: The lack of generalizability of deep learning approaches for the automated diagnosis of pathologies in Wireless Capsule Endoscopy (WCE) has prevented any significant advantages from trickling down to real clinical practices. As a result, disease management using WCE continues to depend on exhaustive manual investigations by medical experts. This explains its limited use despite several advantages. Prior works have considered using higher quality and quantity of labels as a way of tackling the lack of generalization; however, this is hardly scalable considering pathology diversity, not to mention that labeling large datasets places an additional burden on medical staff. We propose using freely available domain knowledge as priors to learn more robust and generalizable representations. We experimentally show that domain priors can benefit representations by acting in proxy of labels, thereby significantly reducing the labeling requirement while still enabling fully unsupervised yet pathology-aware learning. We use the contrastive objective along with prior-guided views during pretraining, where the view choices inspire sensitivity to pathological information. Extensive experiments on three datasets show that our method performs better than (or closes the gap with) the state-of-the-art in the domain, establishing a new benchmark in pathology classification and cross-dataset generalization, as well as scaling to unseen pathology categories.
