Ivar Farup

Uncertainty-Aware Regularization for Image-to-Image Translation

Nov 24, 2024

Abstract: Quantifying uncertainty in deep networks has become paramount for reliable real-world applications. In this paper, we propose a method to improve uncertainty estimation in medical Image-to-Image (I2I) translation. Our model integrates aleatoric uncertainty and employs Uncertainty-Aware Regularization (UAR), inspired by simple priors, to refine uncertainty estimates and enhance reconstruction quality. We show that by leveraging simple priors on parameters, our approach produces more robust uncertainty maps that indicate precisely where the network encounters difficulties while being less affected by noise. Our experiments demonstrate that UAR not only improves translation performance but also provides better uncertainty estimates, particularly in the presence of noise and artifacts. We validate our approach on two medical imaging datasets, showing that it maintains high confidence in familiar regions while accurately identifying areas of uncertainty in novel or ambiguous scenarios.

3D Reconstruction of the Human Colon from Capsule Endoscope Video

Jul 21, 2024

Abstract: As the number of people affected by diseases in the gastrointestinal system is ever-increasing, a higher demand for preventive screening is inevitable. This will significantly increase the workload on gastroenterologists. To help reduce the workload, tools from computer vision may be helpful. In this paper, we investigate the possibility of constructing 3D models of whole sections of the human colon using image sequences from wireless capsule endoscope video, providing enhanced viewing for gastroenterologists. As capsule endoscope images contain distortion and artifacts that are not ideal for many 3D reconstruction algorithms, the problem is challenging. However, recent developments of virtual graphics-based models of the human gastrointestinal system, where distortion and artifacts can be enabled or disabled, make it possible to "dissect" the problem. The graphical model also provides a ground truth, enabling computation of the geometric distortion introduced by the 3D reconstruction method. In this paper, most distortions and artifacts are left out to determine whether it is feasible to reconstruct whole sections of the human gastrointestinal system with existing methods. We demonstrate that 3D reconstruction is possible using simultaneous localization and mapping. Further, Poisson surface reconstruction is a good option for reconstructing the gastrointestinal wall surface from the resulting point clouds, which vary greatly in density. The results are promising, encouraging further research on this problem.

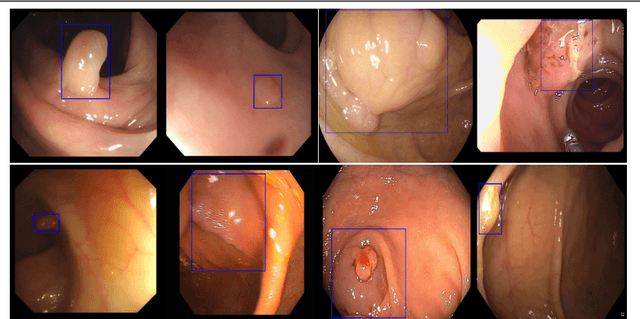

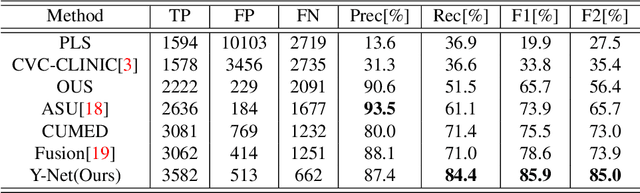

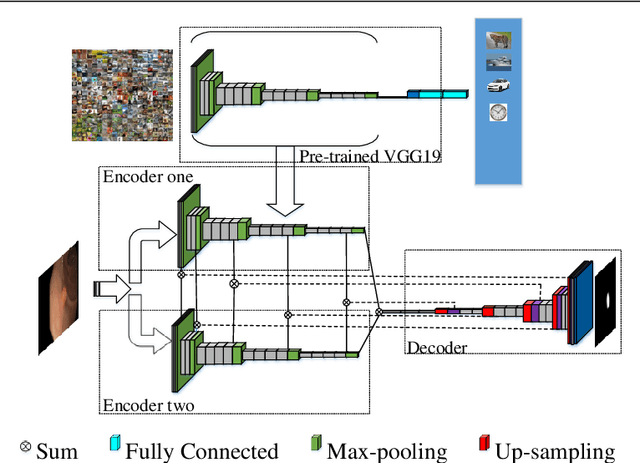

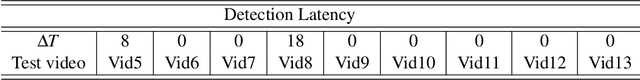

Y-Net: A deep Convolutional Neural Network for Polyp Detection

Jun 05, 2018

Abstract: Colorectal polyps are important precursors to colon cancer, the third most common cause of cancer mortality for both men and women. It is a disease where early detection is of crucial importance. Colonoscopy is commonly used for early detection of cancer and precancerous pathology. It is a demanding procedure that requires a significant amount of time from specialized physicians and nurses, and specialists still miss a significant share of polyps. Automated polyp detection in colonoscopy videos has been demonstrated to be a promising way to handle this problem. However, polyp detection is challenging due to the limited amount of available training data and the large appearance variations of polyps. To handle this problem, we propose a novel deep learning method, Y-Net, that consists of two encoder networks with a decoder network. Our proposed Y-Net method relies on efficient use of pre-trained and untrained models with novel sum-skip-concatenation operations. Each encoder is trained with an encoder-specific learning rate along with the decoder. Compared with previous methods employing hand-crafted features or 2-D/3-D convolutional neural networks, our approach outperforms state-of-the-art methods for polyp detection, with a 7.3% improvement in F1-score and a 13% improvement in recall.
