Marco Visentini-Scarzanella

Towards a Computed-Aided Diagnosis System in Colonoscopy: Automatic Polyp Segmentation Using Convolution Neural Networks

Jan 15, 2021

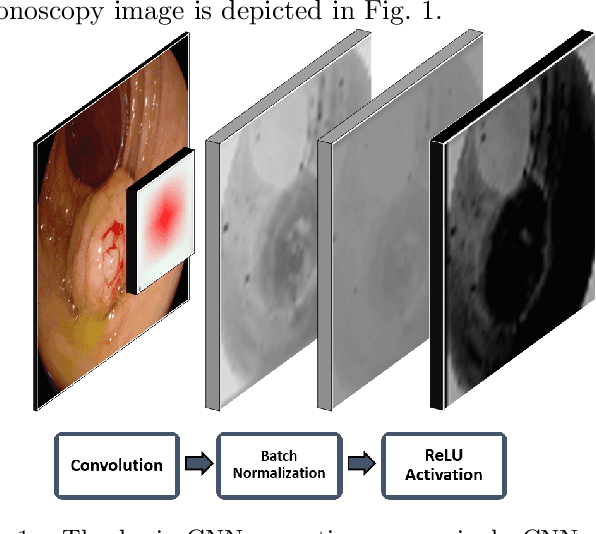

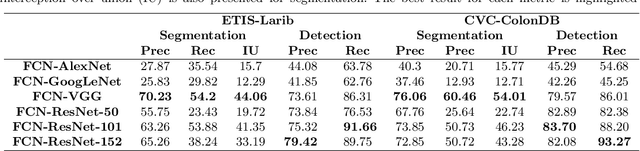

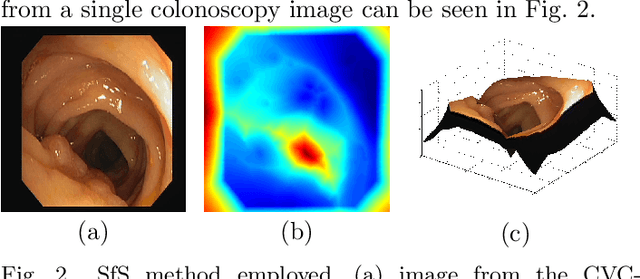

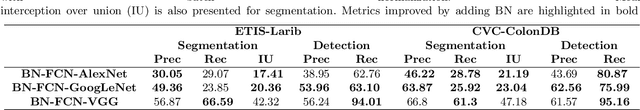

Abstract: Early diagnosis is essential for the successful treatment of bowel cancers, including colorectal cancer (CRC), and capsule endoscopic imaging with robotic actuation can be a valuable diagnostic tool when combined with automated image analysis. We present a deep-learning-based detection and segmentation framework for recognizing lesions in colonoscopy and capsule endoscopy images. We restructure established convolutional architectures, such as VGG and ResNets, by converting them into fully convolutional networks (FCNs), fine-tune them, and study their capabilities for polyp segmentation and detection. We additionally use Shape-from-Shading (SfS) to recover depth and provide a richer representation of the tissue's structure in colonoscopy images. Depth is incorporated into our network models as an additional input channel alongside the RGB information, and we demonstrate that the resulting network yields improved performance. Our networks are tested on publicly available datasets, and the most accurate segmentation model achieved a mean segmentation IU of 47.78% and 56.95% on the ETIS-Larib and CVC-Colon datasets, respectively. For polyp detection, the top-performing models we propose surpass the current state of the art, with detection recalls above 90% on all datasets tested. To our knowledge, this is the first work to use FCNs for polyp segmentation, and it also proposes a novel combination of SfS and RGB that boosts performance.

* 10 pages, 6 figures
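The segmentation results in the abstract above are reported as mean IU (intersection over union). As a rough illustration of how such a score can be computed, here is a minimal sketch in plain Python. It assumes two classes (0 = background, 1 = polyp) and that "mean" refers to averaging the per-class IU, as is common in FCN evaluation; the abstract does not spell out the exact protocol, and the function names and toy masks are hypothetical.

```python
def intersection_over_union(pred, truth, label):
    """IU of one class label between two equally sized 2D label masks."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            in_pred = p == label
            in_truth = t == label
            if in_pred and in_truth:
                intersection += 1
            if in_pred or in_truth:
                union += 1
    # A class absent from both masks has no defined IU; report None.
    return intersection / union if union else None


def mean_iu(pred, truth, labels=(0, 1)):
    """Average the per-class IU over the classes that appear (assumed protocol)."""
    scores = [intersection_over_union(pred, truth, label) for label in labels]
    scores = [s for s in scores if s is not None]
    return sum(scores) / len(scores) if scores else None


# Toy masks (hypothetical data): 1 marks polyp pixels, 0 marks background.
predicted = [[0, 1, 1],
             [0, 1, 0]]
ground_truth = [[0, 1, 0],
                [0, 1, 0]]

polyp_iu = intersection_over_union(predicted, ground_truth, 1)  # 2 shared / 3 in union
background_iu = intersection_over_union(predicted, ground_truth, 0)  # 3 shared / 4 in union
score = mean_iu(predicted, ground_truth)
```

In this toy case a single false-positive pixel lowers the polyp IU to 2/3, which shows why IU is a stricter measure than pixel accuracy when the lesion occupies a small part of the frame.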

Unifying Heterogeneous Classifiers with Distillation

Apr 12, 2019

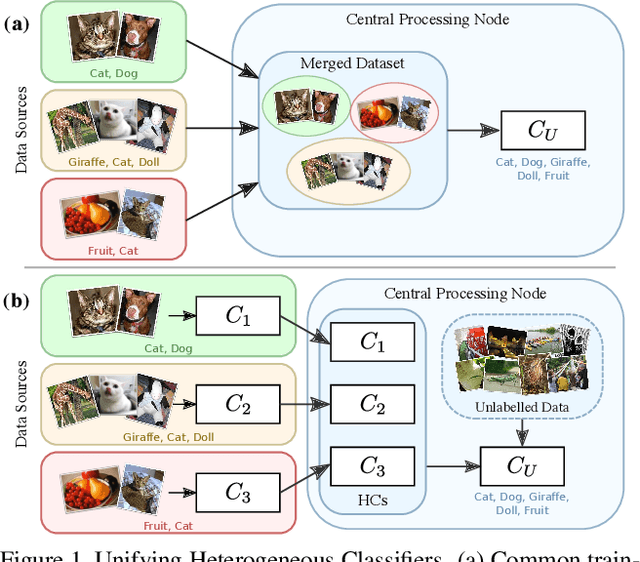

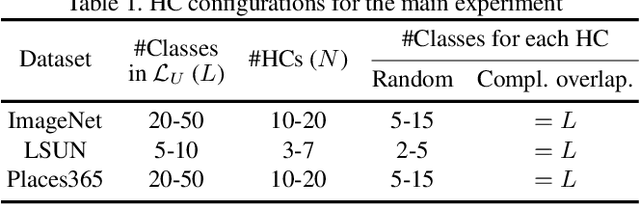

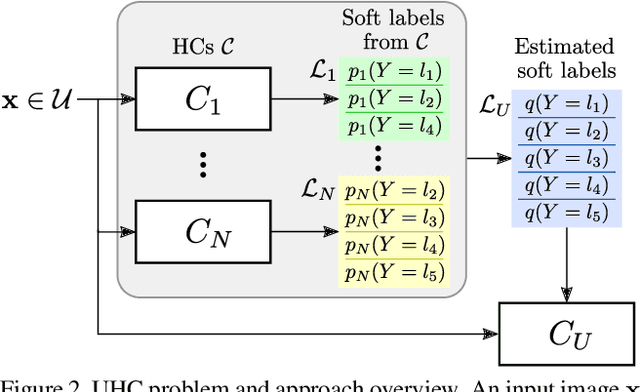

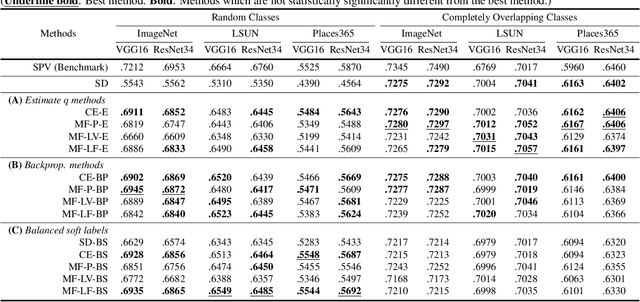

Abstract: In this paper, we study the problem of unifying knowledge from a set of classifiers with different architectures and target classes into a single classifier, given only a generic set of unlabelled data. We call this problem Unifying Heterogeneous Classifiers (UHC). This problem is motivated by scenarios where data is collected from multiple sources, but the sources cannot share their data, e.g., due to privacy concerns, and only privately trained models can be shared. In addition, each source may not be able to gather data for all classes, owing to limited data availability, and may not be able to train the same classification model, owing to different computational resources. To tackle this problem, we propose a generalisation of knowledge distillation to merge heterogeneous classifiers (HCs). We derive a probabilistic relation between the outputs of the HCs and the probability over all classes. Based on this relation, we propose two classes of methods, based on cross-entropy minimisation and matrix factorisation, which allow us to estimate soft labels over all classes from unlabelled samples and use them in lieu of ground-truth labels to train a unified classifier. Our extensive experiments on the ImageNet, LSUN, and Places365 datasets show that our approaches significantly outperform a naive extension of distillation and can achieve almost the same accuracy as classifiers that are trained in a centralised, supervised manner.
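To make the idea of estimating soft labels over all classes more concrete, the following is a toy sketch for a single unlabelled sample. It rests on an assumption that the abstract does not state explicitly: each heterogeneous classifier outputs the full class distribution renormalised over its own class subset. Under that assumption, the soft label is found by minimising the summed cross-entropy between each classifier's output and the renormalised estimate, using plain gradient descent on logits. All function names, the step size, and the example numbers are hypothetical, and this is not presented as the authors' implementation.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def restrict(q, subset):
    """Assumed relation: a classifier trained on `subset` sees q renormalised over it."""
    mass = sum(q[k] for k in subset)
    return [q[k] / mass for k in subset]


def estimate_soft_labels(outputs, subsets, num_classes, steps=500, lr=0.5):
    """Gradient descent on logits u, minimising the summed cross-entropy between
    each classifier output and softmax(u) restricted to that classifier's classes."""
    u = [0.0] * num_classes
    for _ in range(steps):
        grad = [0.0] * num_classes
        for p_i, subset in zip(outputs, subsets):
            q_i = softmax([u[k] for k in subset])
            for j, k in enumerate(subset):
                # Gradient of cross-entropy with respect to a logit: prediction minus target.
                grad[k] += q_i[j] - p_i[j]
        u = [u_k - lr * g for u_k, g in zip(u, grad)]
    return softmax(u)


# Hypothetical sample: three classes overall, two classifiers with overlapping subsets.
true_q = [0.5, 0.3, 0.2]
subsets = [[0, 1], [1, 2]]
outputs = [restrict(true_q, subset) for subset in subsets]
estimated_q = estimate_soft_labels(outputs, subsets, num_classes=3)
```

Because the two class subsets overlap in class 1, the ratios between all three class probabilities are pinned down and the estimate recovers the full distribution. With disjoint subsets the relative mass between groups would not be identifiable from these outputs alone.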

Simultaneous independent image display technique on multiple 3D objects

Sep 10, 2016

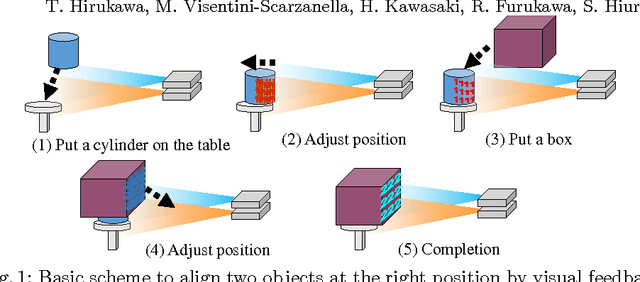

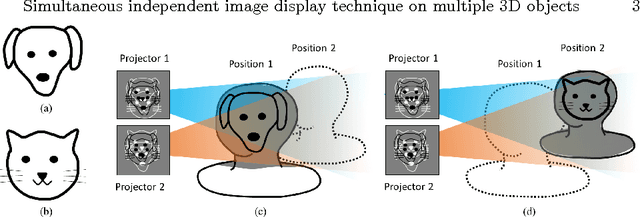

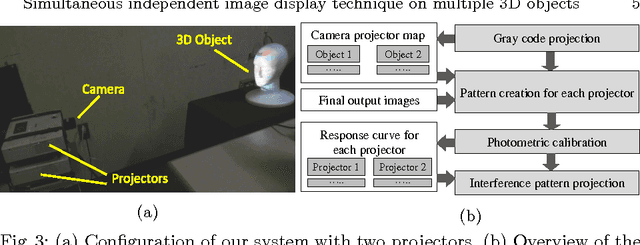

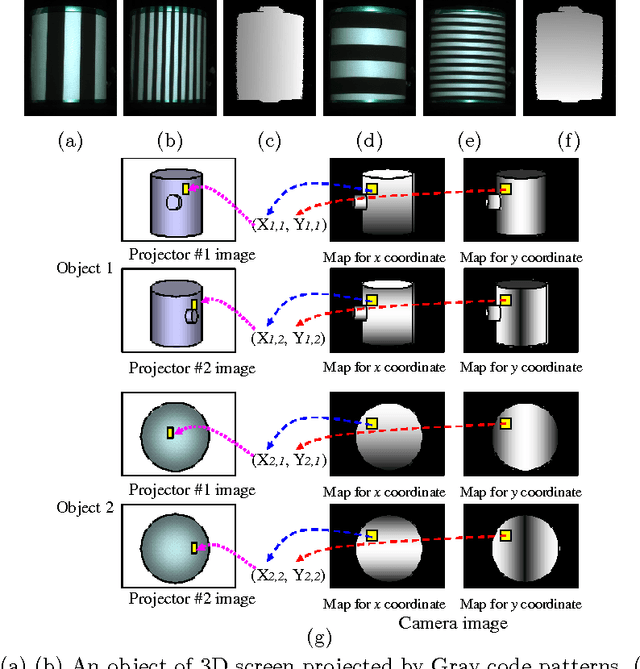

Abstract: We propose a new system to visualize depth-dependent patterns and images on solid objects with complex geometry using multiple projectors. The system, despite consisting of conventional passive LCD projectors, is able to project different images and patterns depending on the spatial location of the object. The technique is based on the simple principle that patterns projected from multiple projectors interfere constructively with each other when they are projected onto the same object. Previous techniques based on the same principle can only achieve 1) low-resolution volume colorization or 2) high-resolution images, but only on a limited number of flat planes. In this paper, we discretize a 3D object into a number of 3D points so that high-resolution images can be projected onto complex shapes. We also propose a dynamic range expansion technique as well as an efficient optimization procedure based on epipolar constraints. Such a technique can be used to extend projection mapping to have spatial dependency, which is desirable for practical applications. We also demonstrate the system's potential as a visual instructor for object placement and assembly. Experiments demonstrate the effectiveness of our method.
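The principle described in the abstract above, that overlapping patterns add up differently depending on where the surface sits, can be illustrated with a one-dimensional toy model. The sketch below assumes two projectors whose light adds linearly, a surface position that only changes which pixel of projector B lands on each pixel of projector A (modelled as a circular shift), and pixel intensities limited to [0, 1]. Projected gradient descent then looks for one pattern pair that reproduces a different target image at each position. Everything here (function names, the shift model, the step size, the data) is a hypothetical simplification and not the optimization procedure from the paper.

```python
def render(a, b, shift):
    """Additive image on a surface where projector B's pixels arrive offset by `shift`."""
    n = len(a)
    return [a[i] + b[(i + shift) % n] for i in range(n)]


def solve_patterns(targets, shifts, n, steps=2000, lr=0.1):
    """Projected gradient descent on the summed squared error over all surface positions."""
    a = [0.5] * n
    b = [0.5] * n
    for _ in range(steps):
        grad_a = [0.0] * n
        grad_b = [0.0] * n
        for target, shift in zip(targets, shifts):
            image = render(a, b, shift)
            for i in range(n):
                residual = 2.0 * (image[i] - target[i])
                grad_a[i] += residual
                grad_b[(i + shift) % n] += residual
        # Take a gradient step, then clip to the displayable intensity range [0, 1].
        a = [min(1.0, max(0.0, x - lr * g)) for x, g in zip(a, grad_a)]
        b = [min(1.0, max(0.0, x - lr * g)) for x, g in zip(b, grad_b)]
    return a, b


# Hypothetical targets, generated from known patterns so that an exact solution exists.
true_a = [0.2, 0.6, 0.4, 0.8]
true_b = [0.7, 0.3, 0.5, 0.5]
shifts = [0, 1]  # two surface positions, modelled as two pixel offsets
targets = [render(true_a, true_b, s) for s in shifts]

a, b = solve_patterns(targets, shifts, n=4)
max_error = max(
    abs(pixel - wanted)
    for target, s in zip(targets, shifts)
    for pixel, wanted in zip(render(a, b, s), target)
)
```

The same fixed pair of patterns yields two distinct images, one per surface position, which is the depth-dependent behaviour the abstract describes. In a real setup the pixel correspondences would come from calibrated projector geometry rather than a fixed shift.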
