Odysseas Zisimopoulos

Towards a Computer-Aided Diagnosis System in Colonoscopy: Automatic Polyp Segmentation Using Convolution Neural Networks

Jan 15, 2021

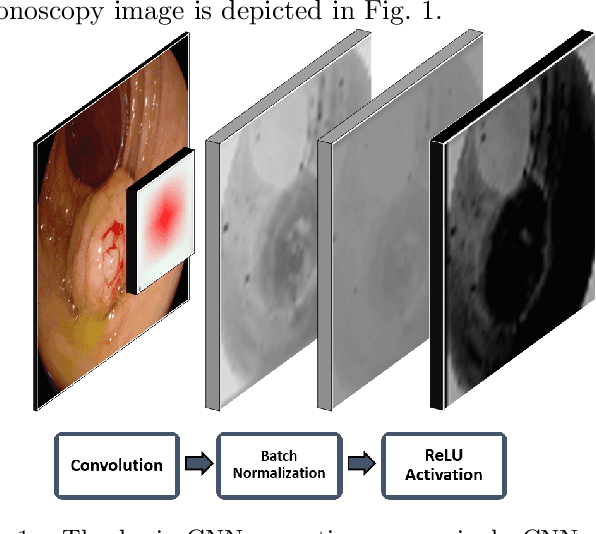

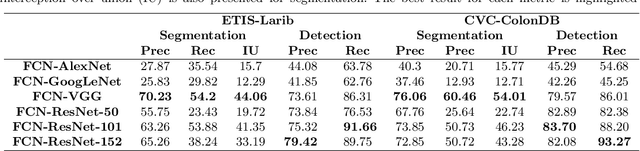

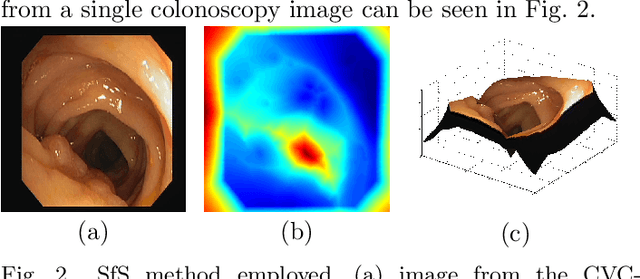

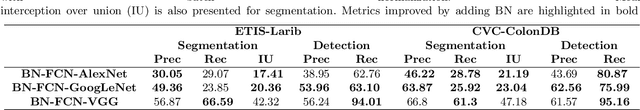

Abstract: Early diagnosis is essential for the successful treatment of bowel cancers, including colorectal cancer (CRC), and capsule endoscopic imaging with robotic actuation can be a valuable diagnostic tool when combined with automated image analysis. We present a deep-learning-based detection and segmentation framework for recognizing lesions in colonoscopy and capsule endoscopy images. We restructure established convolutional architectures, such as VGG and ResNets, by converting them into fully convolutional networks (FCNs), fine-tune them, and study their capabilities for polyp segmentation and detection. We additionally use Shape-from-Shading (SfS) to recover depth and provide a richer representation of the tissue's structure in colonoscopy images. Depth is incorporated into our network models as an additional input channel alongside the RGB information, and we demonstrate that the resulting network yields improved performance. Our networks are tested on publicly available datasets, and the most accurate segmentation model achieved a mean segmentation IU of 47.78% and 56.95% on the ETIS-Larib and CVC-Colon datasets, respectively. For polyp detection, the top-performing models we propose surpass the current state of the art, with detection recalls above 90% for all datasets tested. To our knowledge, this is the first work to use FCNs for polyp segmentation, and it also proposes a novel combination of SfS and RGB that boosts performance.

* 10 pages, 6 figures
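The abstract reports segmentation quality as mean IU (intersection over union). As a rough illustration only, the sketch below assumes the definition common in the FCN literature, where IoU is computed per class and then averaged over classes (here background = 0 and polyp = 1). The function name `mean_iu` and the list-of-lists mask format are hypothetical choices for this example, not the authors' evaluation code.

```python
def mean_iu(pred, truth, num_classes=2):
    """Mean per-class IoU for two label maps given as equally sized 2D lists.

    Assumption: classes are integer labels in [0, num_classes); classes that
    appear in neither map are skipped when averaging.
    """
    intersections = [0] * num_classes
    unions = [0] * num_classes
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == t:
                # Pixel agrees: it is in both the intersection and the union.
                intersections[p] += 1
                unions[p] += 1
            else:
                # Pixel disagrees: it enlarges the union of both classes.
                unions[p] += 1
                unions[t] += 1
    ious = [i / u for i, u in zip(intersections, unions) if u > 0]
    if not ious:
        raise ValueError("label maps contain no pixels")
    return sum(ious) / len(ious)


if __name__ == "__main__":
    predicted = [[0, 1], [1, 1]]
    reference = [[0, 0], [1, 1]]
    print(f"mean IU: {mean_iu(predicted, reference):.4f}")
```

In this toy case the background class has IoU 1/2 and the polyp class has IoU 2/3, so the mean is their average.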

Fashion Outfit Generation for E-commerce

Mar 18, 2019

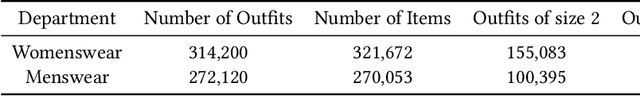

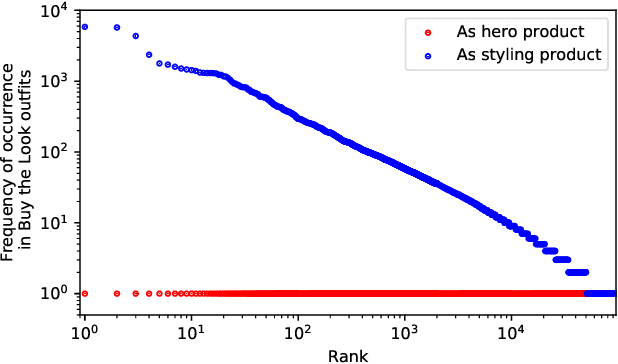

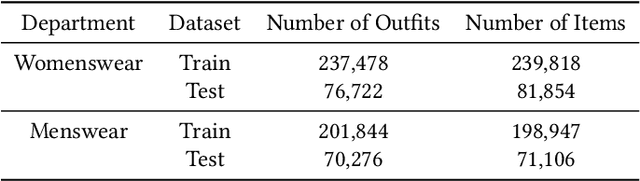

Abstract: Combining items of clothing into an outfit is a major task in fashion retail. Recommending sets of items that are compatible with a particular seed item is useful for providing users with guidance and inspiration, but is currently a manual process that requires expert stylists and is therefore not scalable or easy to personalise. We use a multilayer neural network fed by visual and textual features to learn embeddings of items in a latent style space such that compatible items of different types are embedded close to one another. We train our model using the ASOS outfits dataset, which consists of a large number of outfits created by professional stylists and which we release to the research community. Our model shows strong performance in an offline outfit compatibility prediction task. We use our model to generate outfits and, for the first time in this field, perform an A/B test comparing our generated outfits to those produced by a baseline model that matches appropriate product types but uses no information on style. Users approved of outfits generated by our model 21% and 34% more frequently than those generated by the baseline model for womenswear and menswear, respectively.
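The idea that compatible items sit close together in a latent style space can be sketched as a nearest-neighbour lookup. The example below is a minimal illustration under stated assumptions: embeddings are plain lists of floats, compatibility is measured with Euclidean distance, and the helper names (`euclidean`, `outfit_score`, `most_compatible`) and toy vectors are hypothetical. The abstract does not specify the distance measure or scoring rule the authors use.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def outfit_score(embeddings):
    """Mean pairwise distance between items; lower is assumed more compatible."""
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        raise ValueError("an outfit needs at least two items")
    return sum(euclidean(a, b) for a, b in pairs) / len(pairs)


def most_compatible(seed, candidates):
    """Return the name of the candidate embedded closest to the seed item."""
    return min(candidates, key=lambda name: euclidean(seed, candidates[name]))


if __name__ == "__main__":
    seed_dress = [0.0, 0.0]  # toy 2-D embedding of the seed item
    shoes = {"boots": [0.1, 0.0], "sandals": [3.0, 4.0]}
    print(most_compatible(seed_dress, shoes))
    print(outfit_score([seed_dress, shoes["sandals"]]))
```

Restricting `candidates` to a single product type per call (shoes, bags, and so on) would mirror the requirement that an outfit combines items of different types.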

FaceOff: Anonymizing Videos in the Operating Rooms

Aug 06, 2018

Abstract: Video capture in the surgical operating room (OR) is increasingly possible and has potential for use with computer-assisted interventions (CAI), surgical data science, and smart OR integration. Captured video innately carries sensitive information that should not be completely visible, in order to protect the identities of the patient and the clinical team. When surgical video streams are stored on a server, the videos must be anonymized prior to storage if taken outside of the hospital. In this article, we describe how a deep learning model, Faster R-CNN, can be used for this purpose to help anonymize video data captured in the OR. The model detects and blurs faces in an effort to preserve anonymity. After testing an existing pretrained face detection model, a new dataset tailored to the surgical environment, with faces obstructed by surgical masks and caps, was collected for fine-tuning to achieve higher face detection rates in the OR. We also propose a temporal regularisation kernel to improve recall rates. The fine-tuned model achieves a face detection recall of 88.05% and 93.45% before and after applying temporal smoothing, respectively.
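To show why temporal smoothing can raise recall, the sketch below fills in isolated missed frames when neighbouring frames contain a detection. It is an illustrative stand-in only: the abstract does not describe the actual temporal regularisation kernel, so the per-frame boolean flags, the function name `smooth_detections`, and the `radius` and `min_hits` parameters are all assumptions made for this example.

```python
def smooth_detections(detected, radius=1, min_hits=2):
    """Fill gaps in a per-frame detection sequence.

    A frame is kept as positive if it was already detected, or marked positive
    if at least `min_hits` frames inside the window [i - radius, i + radius]
    were detected in the ORIGINAL sequence (so fills do not cascade).
    """
    flags = [bool(d) for d in detected]
    smoothed = []
    for i, flag in enumerate(flags):
        start = max(0, i - radius)
        stop = min(len(flags), i + radius + 1)
        hits = sum(flags[start:stop])
        smoothed.append(flag or hits >= min_hits)
    return smoothed


if __name__ == "__main__":
    # The face is missed in frame 1 but found in frames 0 and 2.
    per_frame = [1, 0, 1, 0, 0, 0]
    print(smooth_detections(per_frame))
```

A real pipeline would also need to decide where to blur in a filled frame, for example by interpolating bounding boxes from the neighbouring detections.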

DeepPhase: Surgical Phase Recognition in CATARACTS Videos

Jul 17, 2018

Abstract: Automated surgical workflow analysis and understanding can help surgeons standardize procedures and can enhance post-surgical assessment and indexing, as well as interventional monitoring. Video-based computer-assisted interventional (CAI) systems can perform workflow estimation by recognizing surgical instruments and linking them to an ontology of procedural phases. In this work, we adopt a deep learning paradigm to detect surgical instruments in cataract surgery videos; these detections in turn feed a recurrent surgical phase inference network that encodes temporal aspects of phase steps within the phase classification. Our models achieve results comparable to the state of the art for surgical tool detection and phase recognition, with accuracies of 99% and 78%, respectively.
