Maliha Hossain

ORBIT-2: Scaling Exascale Vision Foundation Models for Weather and Climate Downscaling

May 07, 2025

Abstract: Sparse observations and coarse-resolution climate models limit effective regional decision-making, underscoring the need for robust downscaling. However, existing AI methods struggle with generalization across variables and geographies and are constrained by the quadratic complexity of Vision Transformer (ViT) self-attention. We introduce ORBIT-2, a scalable foundation model for global, hyper-resolution climate downscaling. ORBIT-2 incorporates two key innovations: (1) Residual Slim ViT (Reslim), a lightweight architecture with residual learning and Bayesian regularization for efficient, robust prediction; and (2) TILES, a tile-wise sequence scaling algorithm that reduces self-attention complexity from quadratic to linear, enabling long-sequence processing and massive parallelism. ORBIT-2 scales to 10 billion parameters across 32,768 GPUs, achieving up to 1.8 ExaFLOPS sustained throughput and 92-98% strong scaling efficiency. It supports downscaling to 0.9 km global resolution and processes sequences up to 4.2 billion tokens. On 7 km resolution benchmarks, ORBIT-2 achieves high accuracy with R^2 scores in the range of 0.98 to 0.99 against observation data.
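
The abstract does not describe TILES in detail. As a generic illustration of why restricting self-attention to fixed-size tiles turns the quadratic cost into a linear one, the NumPy sketch below splits a token sequence into non-overlapping tiles and runs ordinary scaled dot-product attention inside each tile only. The function names, the halo-free tiling, and the single-head, projection-free attention are assumptions made for illustration; this is not ORBIT-2's implementation.

```python
from typing import Optional

import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability.
    z = np.exp(x - x.max(axis=axis, keepdims=True))
    return z / z.sum(axis=axis, keepdims=True)


def full_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    # Standard scaled dot-product attention; builds an (N, N) score matrix.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def tiled_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray, tile: int) -> np.ndarray:
    # Hypothetical tile-wise attention: each token attends only to tokens in
    # its own tile, so the work is O(N * tile) rather than O(N^2).
    n = q.shape[0]
    if n % tile != 0:
        raise ValueError("sequence length must be a multiple of the tile size")
    out = np.empty((n, v.shape[1]), dtype=np.result_type(q, v))
    for start in range(0, n, tile):
        s = slice(start, start + tile)
        out[s] = full_attention(q[s], k[s], v[s])
    return out


def score_entries(n: int, tile: Optional[int] = None) -> int:
    # Attention scores computed: N^2 for full attention,
    # (N / tile) * tile^2 = N * tile when tiled.
    return n * n if tile is None else (n // tile) * tile * tile
```

With a fixed tile size t, the number of attention scores is (N/t)·t² = N·t, so doubling N doubles the work instead of quadrupling it; the price is that tokens in different tiles no longer interact within the layer.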

Sparse Measurement Medical CT Reconstruction using Multi-Fused Block Matching Denoising Priors

Feb 03, 2025

Abstract: A major challenge for medical X-ray CT imaging is reducing the number of X-ray projections to lower the radiation dose and shorten scan times without compromising image quality. However, these under-determined inverse imaging problems rely on the formulation of an expressive prior model to constrain the solution space while remaining computationally tractable. Traditional analytical reconstruction methods like Filtered Back Projection (FBP) often fail with sparse measurements, producing artifacts due to their reliance on the Shannon-Nyquist Sampling Theorem. Consensus Equilibrium, a generalization of Plug and Play and a recent advancement in Model-Based Iterative Reconstruction (MBIR), has facilitated the use of multiple denoisers as prior models in an optimization-free framework to capture complex, non-linear prior information. However, 3D prior modelling in a Plug and Play approach for volumetric image reconstruction requires long processing times due to its high computational cost. Instead of directly using a 3D prior, this work proposes a BM3D Multi Slice Fusion (BM3D-MSF) prior that fuses multiple 2D image denoisers to act as a fully 3D prior model in a Plug and Play reconstruction approach. Our approach does not require training, so it circumvents ethical issues related to patient training data and is readily deployable across varying noise and measurement sparsity levels. In addition, reconstruction with the BM3D-MSF prior achieves image quality similar to that of fully 3D image priors, but with significantly reduced computational complexity. We test our method on clinical CT data and demonstrate that our approach improves reconstructed image quality.
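
The multi-slice construction can be sketched in a few lines of NumPy. One 2D denoiser is turned into three agents, each applying it slice by slice along a different axis of the volume, so that together they act on all three dimensions. This is an illustration under stated assumptions: a 3×3 mean filter stands in for BM3D, the function names are hypothetical, and the final plain average is only a placeholder for the Consensus Equilibrium fusion described in the abstract.

```python
import numpy as np


def box_denoise_2d(img: np.ndarray) -> np.ndarray:
    # Stand-in 2D denoiser: 3x3 mean filter with edge padding (BM3D in the paper).
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / 9.0


def slicewise_agent(volume: np.ndarray, axis: int) -> np.ndarray:
    # Apply the 2D denoiser to every slice taken perpendicular to `axis`.
    moved = np.moveaxis(volume, axis, 0)
    out = np.stack([box_denoise_2d(s) for s in moved])
    return np.moveaxis(out, 0, axis)


def naive_multi_slice_fusion(volume: np.ndarray) -> np.ndarray:
    # Placeholder fusion: average the three axis-wise agents.
    # In BM3D-MSF the agents are instead balanced via Consensus Equilibrium.
    agents = [slicewise_agent(volume, ax) for ax in range(3)]
    return np.mean(agents, axis=0)
```

Each agent costs only a batch of 2D denoising calls, which is where the savings over a true 3D prior come from; the smoothing that one agent skips along its own slicing axis is supplied by the other two.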

High-Precision Inversion of Dynamic Radiography Using Hydrodynamic Features

Dec 02, 2021

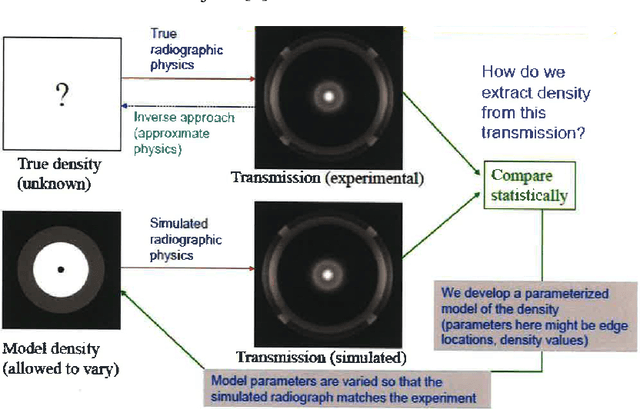

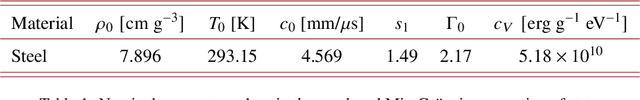

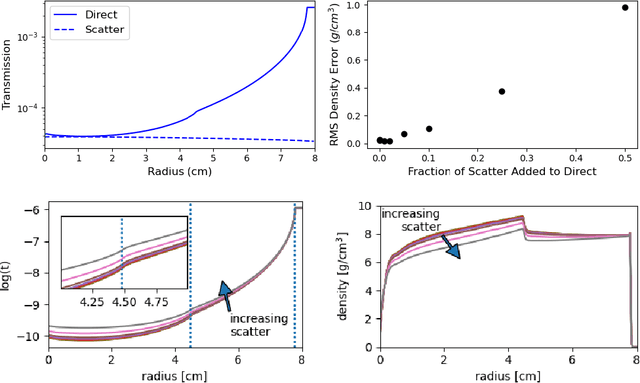

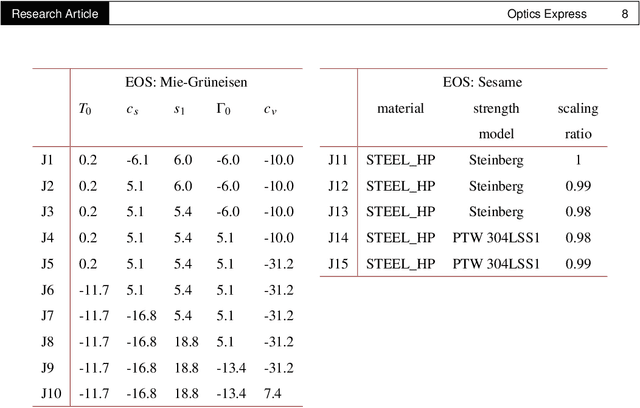

Abstract: Radiography is often used to probe complex, evolving density fields in dynamic systems and thereby gain insight into the underlying physics. This technique has been used in numerous fields including materials science, shock physics, inertial confinement fusion, and other national security applications. In many of these applications, however, complications resulting from noise, scatter, complex beam dynamics, etc. prevent the reconstruction of density from being accurate enough to identify the underlying physics with sufficient confidence. As such, density reconstruction from static/dynamic radiography has typically been limited to identifying discontinuous features such as cracks and voids in a number of these applications. In this work, we propose a fundamentally new approach to reconstructing density from a temporal sequence of radiographic images. We combine only the robust features identifiable in radiographs with the underlying hydrodynamic equations of motion using a machine learning approach, namely conditional generative adversarial networks (cGAN), to determine the density fields from a dynamic sequence of radiographs. Next, we seek to further enhance the hydrodynamic consistency of the ML-based density reconstruction through parameter estimation and projection onto a hydrodynamic manifold. In this context, we note that the distance, in the parameter space considered, between the test data and the hydrodynamic manifold given by the training data both serves as a diagnostic of the robustness of the predictions and serves to augment the training database, with the expectation that the latter will further reduce future density reconstruction errors. Finally, we demonstrate the ability of this method to outperform a traditional radiographic reconstruction in capturing allowable hydrodynamic paths even when relatively small amounts of scatter are present.
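
The abstract uses the distance in parameter space between the test data and the manifold given by the training data both as a robustness diagnostic and as a way to augment the training database. A minimal sketch, assuming each radiographic sequence has already been reduced to a vector of estimated hydrodynamic parameters and approximating the manifold by the training parameter vectors themselves, is a nearest-neighbour distance after standardizing each parameter. The functions and the quantile-based flagging rule are hypothetical; the paper's parameter estimation and manifold projection are not reproduced here.

```python
import numpy as np


def standardize(train: np.ndarray, test: np.ndarray):
    # Scale each parameter by the training mean and std so distances are unit-free.
    mu = train.mean(axis=0)
    sigma = train.std(axis=0)
    sigma = np.where(sigma > 0, sigma, 1.0)  # guard against constant parameters
    return (train - mu) / sigma, (test - mu) / sigma


def pairwise_dist(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    # Euclidean distance between every row of a and every row of b.
    return np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))


def manifold_distance(train_params, test_params) -> np.ndarray:
    # Distance from each test case to its nearest training case in parameter space.
    tr, te = standardize(np.asarray(train_params, float), np.asarray(test_params, float))
    return pairwise_dist(te, tr).min(axis=1)


def flag_for_augmentation(train_params, test_params, quantile: float = 0.95):
    # Hypothetical rule: flag test cases that lie farther from the training set
    # than the given quantile of the training set's own nearest-neighbour distances.
    tr, te = standardize(np.asarray(train_params, float), np.asarray(test_params, float))
    d_train = pairwise_dist(tr, tr)
    np.fill_diagonal(d_train, np.inf)  # exclude each point's distance to itself
    threshold = np.quantile(d_train.min(axis=1), quantile)
    d_test = pairwise_dist(te, tr).min(axis=1)
    return d_test > threshold, d_test
```

Treating the training set as a point cloud is the crudest approximation of the manifold; a denser training set or an interpolating model of the manifold would give a tighter distance estimate.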

Ultra-Sparse View Reconstruction for Flash X-Ray Imaging using Consensus Equilibrium

Apr 12, 2021

Abstract: A growing number of applications require the reconstruction of 3D objects from a very small number of views. In this research, we consider the problem of reconstructing a 3D object from only 4 Flash X-ray CT views taken during the impact of a Kolsky bar. For such ultra-sparse view datasets, even model-based iterative reconstruction (MBIR) methods produce poor quality results. In this paper, we present a framework based on a generalization of Plug-and-Play, known as Multi-Agent Consensus Equilibrium (MACE), for incorporating complex and nonlinear prior information into ultra-sparse CT reconstruction. The MACE method allows any number of agents to simultaneously enforce their own prior constraints on the solution. We apply our method to simulated and real data and demonstrate that MACE reduces artifacts, improves reconstructed image quality, and uncovers image features which were otherwise indiscernible.
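
MACE solves the Consensus Equilibrium equations F(W) = G(W), where F applies each agent to its own copy of the image and G replaces every copy with their average. A common solver is the Mann iteration W ← (1 − ρ)W + ρ(2G − I)(2F − I)W. The NumPy sketch below implements that iteration for equal agent weights on a toy 1D problem. The two agents are assumptions chosen so the result can be checked: a proximal map for a least-squares data term ½‖Ax − y‖² and a proximal map for a quadratic smoothness penalty (β/2)‖Dx‖² standing in for a denoiser. When every agent is a proximal map with a common step size λ, the equilibrium coincides with the minimizer of the sum of the agents' costs.

```python
import numpy as np


def mace(agents, x0, rho=0.5, iters=1000):
    """Multi-Agent Consensus Equilibrium via Mann iteration, equal agent weights."""
    n = len(agents)
    W = np.stack([np.array(x0, dtype=float) for _ in range(n)])  # one state per agent

    def F(W):
        # Each agent applies its own operator to its own copy of the state.
        return np.stack([agent(w) for agent, w in zip(agents, W)])

    def G(W):
        # Consensus operator: every copy is replaced by the average copy.
        return np.broadcast_to(W.mean(axis=0), W.shape)

    for _ in range(iters):
        X = 2 * F(W) - W           # (2F - I) W
        Z = 2 * G(X) - X           # (2G - I)(2F - I) W
        W = (1 - rho) * W + rho * Z
    # At the fixed point, F_i(w_i) equals the average of the w_i for every agent.
    return W.mean(axis=0)


def make_data_agent(A, y, lam):
    # Proximal map of f(x) = 0.5 * ||A x - y||^2 with step size lam.
    M = A.T @ A + np.eye(A.shape[1]) / lam
    b = A.T @ y
    return lambda v: np.linalg.solve(M, b + v / lam)


def make_smoothness_agent(n, beta, lam):
    # Proximal map of g(x) = 0.5 * beta * ||D x||^2, D = first differences;
    # a simple stand-in for a denoising prior agent.
    D = np.diff(np.eye(n), axis=0)
    M = np.eye(n) + lam * beta * (D.T @ D)
    return lambda v: np.linalg.solve(M, v)
```

In an actual ultra-sparse reconstruction the data agent would wrap the CT forward projector and the prior agents would be denoisers that are generally not proximal maps; the equilibrium then need not correspond to minimizing any single cost function, which is what makes the framework more general than optimization.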
