Jennifer L. Schei

Material Identification From Radiographs Without Energy Resolution

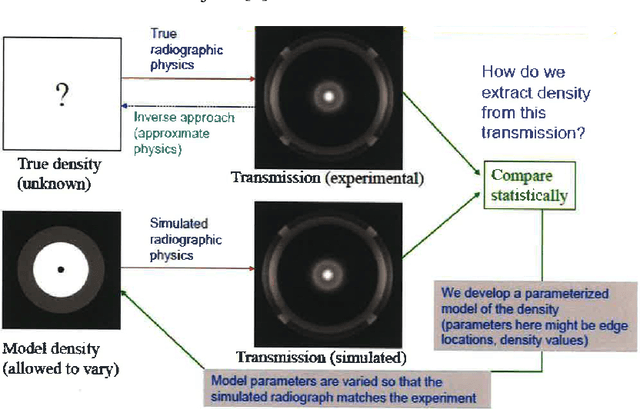

Mar 10, 2023

Abstract: We propose a method for performing material identification from radiographs without energy-resolved measurements. Material identification has a wide variety of applications, including biomedical imaging, nondestructive testing, and security. While existing techniques for radiographic material identification make use of dual-energy sources, energy-resolving detectors, or additional (e.g., neutron) measurements, such setups are not always practical: they require additional hardware and complicate imaging. We tackle material identification without energy resolution, allowing standard X-ray systems to provide material identification information without additional hardware. Assuming a setting where the geometry of each object in the scene is known and the materials come from a known set of possible materials, we pose the problem as a combinatorial optimization with a loss function that accounts for the presence of scatter and an unknown gain, and we propose a branch and bound algorithm to solve it efficiently. We present experiments on both synthetic data and real experimental data with relevance to security applications: thick, dense objects imaged with MeV X-rays. We show that material identification can be efficient and accurate. For example, in a scene with three shells (two copper, one aluminum), our algorithm ran in six minutes on a consumer-level laptop and identified the correct materials as being among the top 10 best matches out of 8,000 possibilities.
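
To make the problem setup concrete, here is a minimal Python sketch of a branch and bound search over material assignments. It is not the authors' loss function or algorithm. It assumes a hypothetical monochromatic Beer-Lambert model in which ray i records y_i = g · exp(-Σ_j μ_{m_j} L_ij), with an unknown gain g, known non-negative path lengths L_ij from the known geometry, and one effective attenuation coefficient per candidate material; scatter is ignored. Taking z_i = -log y_i turns the unknown gain into an additive offset that is minimized out in closed form. For a partial assignment, the lower bound lets each unassigned object's attenuation range freely between the smallest and largest candidate coefficients. The function names, material labels, and coefficient values are hypothetical.

```python
import heapq
import math


def interval_dist_sq(x, lo, hi):
    """Squared distance from x to the closed interval [lo, hi]."""
    d = max(lo - x, 0.0, x - hi)
    return d * d


def partial_bound(e, lo, hi, iters=200):
    """Lower bound: min over offset c of sum_i dist(e_i - c, [lo_i, hi_i])^2.

    The objective is convex in c, so ternary search on a bracket that must
    contain a minimizer converges to its minimum value.
    """
    a = min(ei - h for ei, h in zip(e, hi))
    b = max(ei - l for ei, l in zip(e, lo))

    def f(c):
        return sum(interval_dist_sq(ei - c, l, h) for ei, l, h in zip(e, lo, hi))

    for _ in range(iters):
        m1 = a + (b - a) / 3
        m2 = b - (b - a) / 3
        if f(m1) < f(m2):
            b = m2
        else:
            a = m1
    return f((a + b) / 2)


def identify_materials(y, lengths, mu, k=10):
    """Return the k lowest-loss material assignments as (loss, names) pairs.

    Hypothetical model (scatter ignored): y[i] = gain * exp(-sum_j mu[m_j] * lengths[i][j]).
    y[i]          positive measured signal on ray i
    lengths[i][j] known, non-negative path length of ray i through object j
    mu[name]      non-negative effective attenuation coefficient of a candidate material
    """
    n_obj = len(lengths[0])
    z = [-math.log(v) for v in y]  # z_i = p_i + c, where c = -log(gain) is unknown
    mu_min, mu_max = min(mu.values()), max(mu.values())
    names = sorted(mu)
    best = []  # max-heap via negated loss: (-loss, assignment)

    def worst():
        return -best[0][0] if len(best) == k else math.inf

    def recurse(j, assigned, acc):
        # acc[i] holds the attenuation of ray i through objects 0..j-1.
        e = [zi - ai for zi, ai in zip(z, acc)]
        if j == n_obj:
            mean = sum(e) / len(e)  # optimal offset c in closed form
            loss = sum((ei - mean) ** 2 for ei in e)
            if loss < worst():
                heapq.heappush(best, (-loss, tuple(assigned)))
                if len(best) > k:
                    heapq.heappop(best)
            return
        # Unassigned objects contribute between mu_min and mu_max times their path length.
        rest = [sum(row[j:]) for row in lengths]
        lo = [mu_min * r for r in rest]
        hi = [mu_max * r for r in rest]
        if partial_bound(e, lo, hi) > worst() + 1e-9:
            return  # prune: no completion of this branch can enter the top k
        for name in names:
            new_acc = [ai + mu[name] * row[j] for ai, row in zip(acc, lengths)]
            recurse(j + 1, assigned + [name], new_acc)

    recurse(0, [], [0.0] * len(y))
    return sorted((-neg_loss, assignment) for neg_loss, assignment in best)
```

Called as `identify_materials(y, lengths, mu, k=10)`, the sketch returns a ranked top-10 list analogous to the one described in the abstract. Branching over materials in a fixed order is the simplest choice; visiting candidates in order of increasing bound would typically tighten `worst()` sooner and prune more branches.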

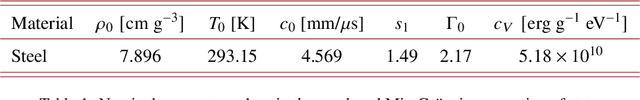

High-Precision Inversion of Dynamic Radiography Using Hydrodynamic Features

Dec 02, 2021

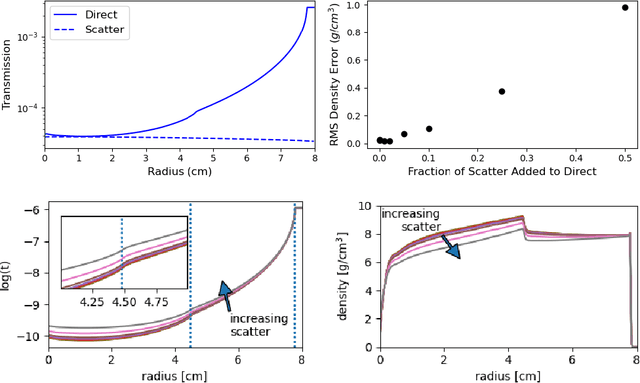

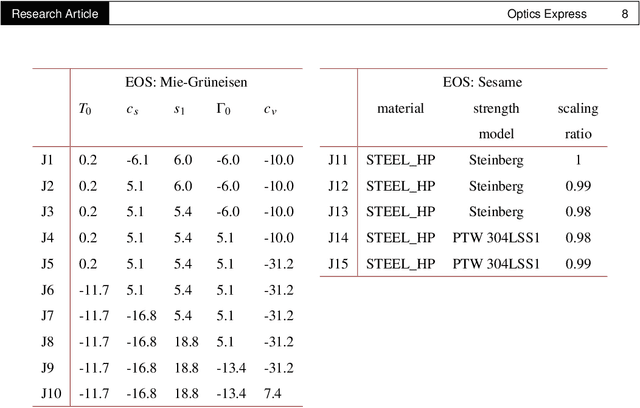

Abstract: Radiography is often used to probe complex, evolving density fields in dynamic systems and, in so doing, to gain insight into the underlying physics. This technique has been used in numerous fields, including materials science, shock physics, inertial confinement fusion, and other national security applications. In many of these applications, however, complications resulting from noise, scatter, complex beam dynamics, etc. prevent the density reconstruction from being accurate enough to identify the underlying physics with sufficient confidence. As such, density reconstruction from static/dynamic radiography has typically been limited to identifying discontinuous features such as cracks and voids in a number of these applications. In this work, we propose a fundamentally new approach to reconstructing density from a temporal sequence of radiographic images. We combine only the robust features identifiable in radiographs with the underlying hydrodynamic equations of motion using a machine learning approach, namely conditional generative adversarial networks (cGANs), to determine the density fields from a dynamic sequence of radiographs. Next, we seek to further enhance the hydrodynamic consistency of the ML-based density reconstruction through a process of parameter estimation and projection onto a hydrodynamic manifold. In this context, we note that the distance, in the parameter space considered, from the hydrodynamic manifold given by the training data to the test data both serves as a diagnostic of the robustness of the predictions and serves to augment the training database, with the expectation that the latter will further reduce future density reconstruction errors. Finally, we demonstrate the ability of this method to outperform a traditional radiographic reconstruction in capturing allowable hydrodynamic paths, even when relatively small amounts of scatter are present.
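
The abstract uses distance in parameter space as a diagnostic of prediction robustness. As a generic stand-in, and not the paper's manifold projection, the sketch below measures the standardized Euclidean distance from each test parameter vector to its nearest training parameter vector. It flags test points lying farther away than a chosen quantile of the training set's own leave-one-out nearest-neighbor distances. The function names, the per-dimension standardization, and the 0.95 quantile threshold are all hypothetical choices.

```python
import math
import statistics


def _scales(train):
    """Per-dimension mean and population std of the training parameters."""
    dims = range(len(train[0]))
    means = [statistics.fmean(p[d] for p in train) for d in dims]
    stds = [statistics.pstdev([p[d] for p in train]) or 1.0 for d in dims]
    return means, stds


def _norm(p, means, stds):
    return [(x - m) / s for x, m, s in zip(p, means, stds)]


def flag_out_of_distribution(train, queries, quantile=0.95):
    """Return (distance, flagged) for each query parameter vector.

    distance: standardized Euclidean distance to the nearest training vector.
    flagged:  True if that distance exceeds the chosen quantile of the
              leave-one-out nearest-neighbor distances within the training set.
    Requires at least two training vectors.
    """
    means, stds = _scales(train)
    t = [_norm(p, means, stds) for p in train]
    loo = sorted(
        min(math.dist(t[i], t[j]) for j in range(len(t)) if j != i)
        for i in range(len(t))
    )
    threshold = loo[min(len(loo) - 1, int(quantile * len(loo)))]
    results = []
    for q in queries:
        qn = _norm(q, means, stds)
        d = min(math.dist(qn, r) for r in t)
        results.append((d, d > threshold))
    return results
```

A flagged test point would be a natural candidate for adding to the training database, in the spirit of the augmentation step the abstract describes.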
