Mainak Singha

bi-modal textual prompt learning for vision-language models in remote sensing

Jan 28, 2026Abstract:Prompt learning (PL) has emerged as an effective strategy to adapt vision-language models (VLMs), such as CLIP, for downstream tasks under limited supervision. While PL has demonstrated strong generalization on natural image datasets, its transferability to remote sensing (RS) imagery remains underexplored. RS data present unique challenges, including multi-label scenes, high intra-class variability, and diverse spatial resolutions, that hinder the direct applicability of existing PL methods. In particular, current prompt-based approaches often struggle to identify dominant semantic cues and fail to generalize to novel classes in RS scenarios. To address these challenges, we propose BiMoRS, a lightweight bi-modal prompt learning framework tailored for RS tasks. BiMoRS employs a frozen image captioning model (e.g., BLIP-2) to extract textual semantic summaries from RS images. These captions are tokenized using a BERT tokenizer and fused with high-level visual features from the CLIP encoder. A lightweight cross-attention module then conditions a learnable query prompt on the fused textual-visual representation, yielding contextualized prompts without altering the CLIP backbone. We evaluate BiMoRS on four RS datasets across three domain generalization (DG) tasks and observe consistent performance gains, outperforming strong baselines by up to 2% on average. Codes are available at https://github.com/ipankhi/BiMoRS.

MMLGNet: Cross-Modal Alignment of Remote Sensing Data using CLIP

Jan 13, 2026Abstract:In this paper, we propose a novel multimodal framework, Multimodal Language-Guided Network (MMLGNet), to align heterogeneous remote sensing modalities like Hyperspectral Imaging (HSI) and LiDAR with natural language semantics using vision-language models such as CLIP. With the increasing availability of multimodal Earth observation data, there is a growing need for methods that effectively fuse spectral, spatial, and geometric information while enabling semantic-level understanding. MMLGNet employs modality-specific encoders and aligns visual features with handcrafted textual embeddings in a shared latent space via bi-directional contrastive learning. Inspired by CLIP's training paradigm, our approach bridges the gap between high-dimensional remote sensing data and language-guided interpretation. Notably, MMLGNet achieves strong performance with simple CNN-based encoders, outperforming several established multimodal visual-only methods on two benchmark datasets, demonstrating the significant benefit of language supervision. Codes are available at https://github.com/AdityaChaudhary2913/CLIP_HSI.

SDHSI-Net: Learning Better Representations for Hyperspectral Images via Self-Distillation

Jan 12, 2026Abstract:Hyperspectral image (HSI) classification presents unique challenges due to its high spectral dimensionality and limited labeled data. Traditional deep learning models often suffer from overfitting and high computational costs. Self-distillation (SD), a variant of knowledge distillation where a network learns from its own predictions, has recently emerged as a promising strategy to enhance model performance without requiring external teacher networks. In this work, we explore the application of SD to HSI by treating earlier outputs as soft targets, thereby enforcing consistency between intermediate and final predictions. This process improves intra-class compactness and inter-class separability in the learned feature space. Our approach is validated on two benchmark HSI datasets and demonstrates significant improvements in classification accuracy and robustness, highlighting the effectiveness of SD for spectral-spatial learning. Codes are available at https://github.com/Prachet-Dev-Singh/SDHSI.

Reconstruction Guided Few-shot Network For Remote Sensing Image Classification

Jan 12, 2026Abstract:Few-shot remote sensing image classification is challenging due to limited labeled samples and high variability in land-cover types. We propose a reconstruction-guided few-shot network (RGFS-Net) that enhances generalization to unseen classes while preserving consistency for seen categories. Our method incorporates a masked image reconstruction task, where parts of the input are occluded and reconstructed to encourage semantically rich feature learning. This auxiliary task strengthens spatial understanding and improves class discrimination under low-data settings. We evaluated the efficacy of EuroSAT and PatternNet datasets under 1-shot and 5-shot protocols, our approach consistently outperforms existing baselines. The proposed method is simple, effective, and compatible with standard backbones, offering a robust solution for few-shot remote sensing classification. Codes are available at https://github.com/stark0908/RGFS.

Learning Under Laws: A Constraint-Projected Neural PDE Solver that Eliminates Hallucinations

Nov 05, 2025Abstract:Neural networks can approximate solutions to partial differential equations, but they often break the very laws they are meant to model-creating mass from nowhere, drifting shocks, or violating conservation and entropy. We address this by training within the laws of physics rather than beside them. Our framework, called Constraint-Projected Learning (CPL), keeps every update physically admissible by projecting network outputs onto the intersection of constraint sets defined by conservation, Rankine-Hugoniot balance, entropy, and positivity. The projection is differentiable and adds only about 10% computational overhead, making it fully compatible with back-propagation. We further stabilize training with total-variation damping (TVD) to suppress small oscillations and a rollout curriculum that enforces consistency over long prediction horizons. Together, these mechanisms eliminate both hard and soft violations: conservation holds at machine precision, total-variation growth vanishes, and entropy and error remain bounded. On Burgers and Euler systems, CPL produces stable, physically lawful solutions without loss of accuracy. Instead of hoping neural solvers will respect physics, CPL makes that behavior an intrinsic property of the learning process.

FedMVP: Federated Multi-modal Visual Prompt Tuning for Vision-Language Models

Apr 29, 2025

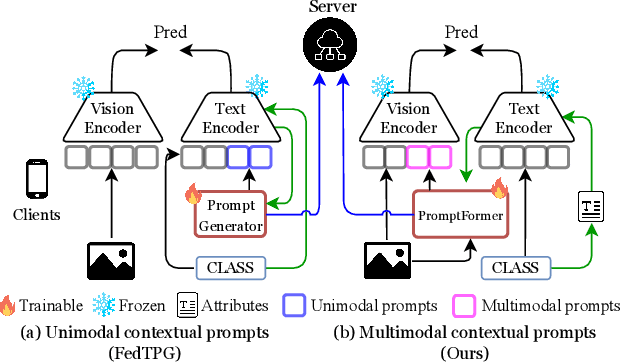

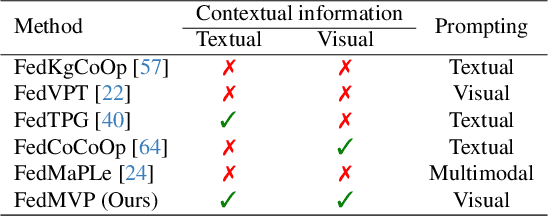

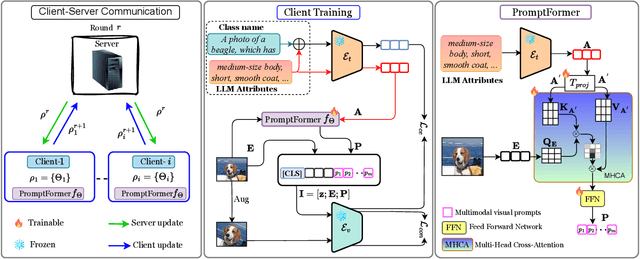

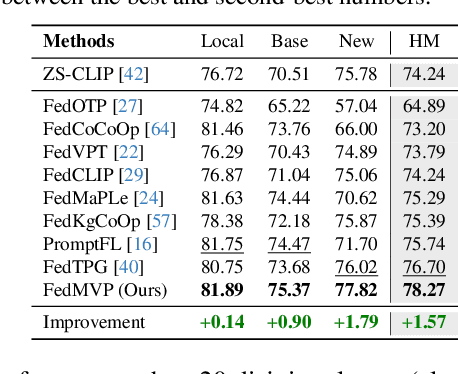

Abstract:Textual prompt tuning adapts Vision-Language Models (e.g., CLIP) in federated learning by tuning lightweight input tokens (or prompts) on local client data, while keeping network weights frozen. Post training, only the prompts are shared by the clients with the central server for aggregation. However, textual prompt tuning often struggles with overfitting to known concepts and may be overly reliant on memorized text features, limiting its adaptability to unseen concepts. To address this limitation, we propose Federated Multimodal Visual Prompt Tuning (FedMVP) that conditions the prompts on comprehensive contextual information -- image-conditioned features and textual attribute features of a class -- that is multimodal in nature. At the core of FedMVP is a PromptFormer module that synergistically aligns textual and visual features through cross-attention, enabling richer contexual integration. The dynamically generated multimodal visual prompts are then input to the frozen vision encoder of CLIP, and trained with a combination of CLIP similarity loss and a consistency loss. Extensive evaluation on 20 datasets spanning three generalization settings demonstrates that FedMVP not only preserves performance on in-distribution classes and domains, but also displays higher generalizability to unseen classes and domains when compared to state-of-the-art methods. Codes will be released upon acceptance.

OSLoPrompt: Bridging Low-Supervision Challenges and Open-Set Domain Generalization in CLIP

Mar 20, 2025Abstract:We introduce Low-Shot Open-Set Domain Generalization (LSOSDG), a novel paradigm unifying low-shot learning with open-set domain generalization (ODG). While prompt-based methods using models like CLIP have advanced DG, they falter in low-data regimes (e.g., 1-shot) and lack precision in detecting open-set samples with fine-grained semantics related to training classes. To address these challenges, we propose OSLOPROMPT, an advanced prompt-learning framework for CLIP with two core innovations. First, to manage limited supervision across source domains and improve DG, we introduce a domain-agnostic prompt-learning mechanism that integrates adaptable domain-specific cues and visually guided semantic attributes through a novel cross-attention module, besides being supported by learnable domain- and class-generic visual prompts to enhance cross-modal adaptability. Second, to improve outlier rejection during inference, we classify unfamiliar samples as "unknown" and train specialized prompts with systematically synthesized pseudo-open samples that maintain fine-grained relationships to known classes, generated through a targeted query strategy with off-the-shelf foundation models. This strategy enhances feature learning, enabling our model to detect open samples with varied granularity more effectively. Extensive evaluations across five benchmarks demonstrate that OSLOPROMPT establishes a new state-of-the-art in LSOSDG, significantly outperforming existing methods.

GraphVL: Graph-Enhanced Semantic Modeling via Vision-Language Models for Generalized Class Discovery

Nov 04, 2024

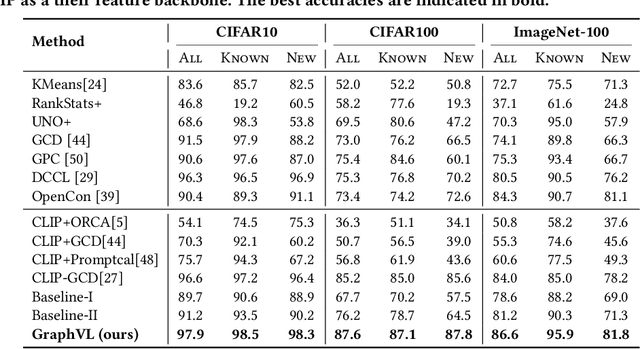

Abstract:Generalized Category Discovery (GCD) aims to cluster unlabeled images into known and novel categories using labeled images from known classes. To address the challenge of transferring features from known to unknown classes while mitigating model bias, we introduce GraphVL, a novel approach for vision-language modeling in GCD, leveraging CLIP. Our method integrates a graph convolutional network (GCN) with CLIP's text encoder to preserve class neighborhood structure. We also employ a lightweight visual projector for image data, ensuring discriminative features through margin-based contrastive losses for image-text mapping. This neighborhood preservation criterion effectively regulates the semantic space, making it less sensitive to known classes. Additionally, we learn textual prompts from known classes and align them to create a more contextually meaningful semantic feature space for the GCN layer using a contextual similarity loss. Finally, we represent unlabeled samples based on their semantic distance to class prompts from the GCN, enabling semi-supervised clustering for class discovery and minimizing errors. Our experiments on seven benchmark datasets consistently demonstrate the superiority of GraphVL when integrated with the CLIP backbone.

COSMo: CLIP Talks on Open-Set Multi-Target Domain Adaptation

Aug 31, 2024

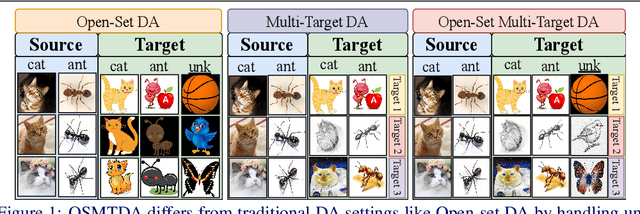

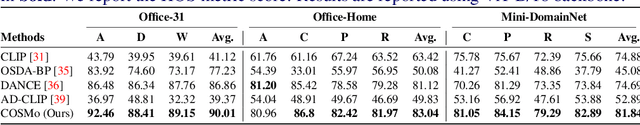

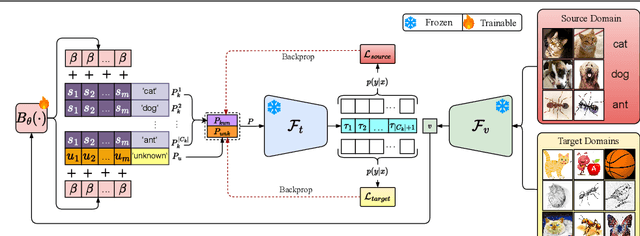

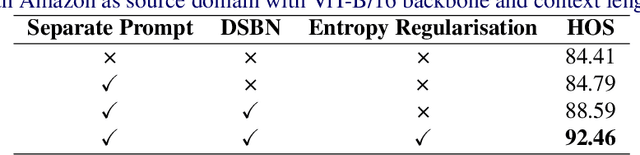

Abstract:Multi-Target Domain Adaptation (MTDA) entails learning domain-invariant information from a single source domain and applying it to multiple unlabeled target domains. Yet, existing MTDA methods predominantly focus on addressing domain shifts within visual features, often overlooking semantic features and struggling to handle unknown classes, resulting in what is known as Open-Set (OS) MTDA. While large-scale vision-language foundation models like CLIP show promise, their potential for MTDA remains largely unexplored. This paper introduces COSMo, a novel method that learns domain-agnostic prompts through source domain-guided prompt learning to tackle the MTDA problem in the prompt space. By leveraging a domain-specific bias network and separate prompts for known and unknown classes, COSMo effectively adapts across domain and class shifts. To the best of our knowledge, COSMo is the first method to address Open-Set Multi-Target DA (OSMTDA), offering a more realistic representation of real-world scenarios and addressing the challenges of both open-set and multi-target DA. COSMo demonstrates an average improvement of $5.1\%$ across three challenging datasets: Mini-DomainNet, Office-31, and Office-Home, compared to other related DA methods adapted to operate within the OSMTDA setting. Code is available at: https://github.com/munish30monga/COSMo

Elevating All Zero-Shot Sketch-Based Image Retrieval Through Multimodal Prompt Learning

Jul 05, 2024

Abstract:We address the challenges inherent in sketch-based image retrieval (SBIR) across various settings, including zero-shot SBIR, generalized zero-shot SBIR, and fine-grained zero-shot SBIR, by leveraging the vision-language foundation model, CLIP. While recent endeavors have employed CLIP to enhance SBIR, these approaches predominantly follow uni-modal prompt processing and overlook to fully exploit CLIP's integrated visual and textual capabilities. To bridge this gap, we introduce SpLIP, a novel multi-modal prompt learning scheme designed to operate effectively with frozen CLIP backbones. We diverge from existing multi-modal prompting methods that either treat visual and textual prompts independently or integrate them in a limited fashion, leading to suboptimal generalization. SpLIP implements a bi-directional prompt-sharing strategy that enables mutual knowledge exchange between CLIP's visual and textual encoders, fostering a more cohesive and synergistic prompt processing mechanism that significantly reduces the semantic gap between the sketch and photo embeddings. In addition to pioneering multi-modal prompt learning, we propose two innovative strategies for further refining the embedding space. The first is an adaptive margin generation for the sketch-photo triplet loss, regulated by CLIP's class textual embeddings. The second introduces a novel task, termed conditional cross-modal jigsaw, aimed at enhancing fine-grained sketch-photo alignment, by focusing on implicitly modelling the viable patch arrangement of sketches using knowledge of unshuffled photos. Our comprehensive experimental evaluations across multiple benchmarks demonstrate the superior performance of SpLIP in all three SBIR scenarios. Code is available at https://github.com/mainaksingha01/SpLIP.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge