Ludovico Boratto

University of Cagliari, Italy

A Reproducible and Fair Evaluation of Partition-aware Collaborative Filtering

Dec 18, 2025

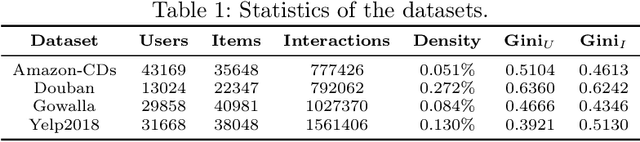

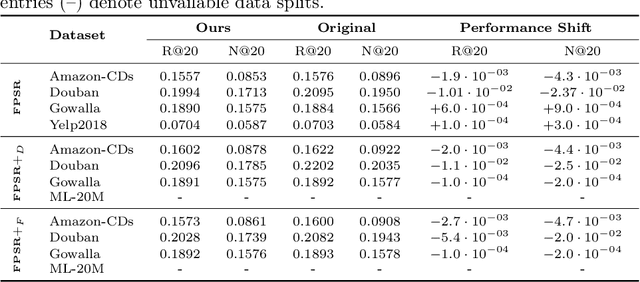

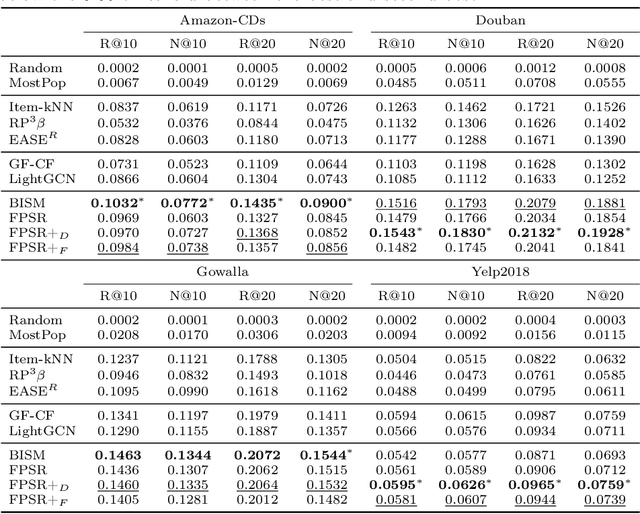

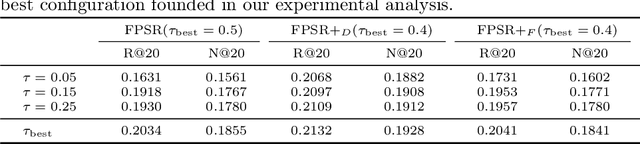

Abstract: Similarity-based collaborative filtering (CF) models have long demonstrated strong offline performance and conceptual simplicity. However, their scalability is limited by the quadratic cost of maintaining dense item-item similarity matrices. Partitioning-based paradigms have recently emerged as an effective strategy for balancing effectiveness and efficiency, enabling models to learn local similarities within coherent subgraphs while maintaining a limited global context. In this work, we focus on the Fine-tuning Partition-aware Similarity Refinement (FPSR) framework, a prominent representative of this family, as well as its extension, FPSR+. Reproducible evaluation of partition-aware collaborative filtering remains challenging, as prior FPSR/FPSR+ reports often rely on splits of unclear provenance and omit some similarity-based baselines, thereby complicating fair comparison. We present a transparent, fully reproducible benchmark of FPSR and FPSR+. Based on our results, the family of FPSR models does not consistently perform at the highest level. Overall, it remains competitive, validates its design choices, and shows significant advantages in long-tail scenarios. This highlights the accuracy-coverage trade-offs resulting from partitioning, global components, and hub design. Our investigation clarifies when partition-aware similarity modeling is most beneficial and offers actionable guidance for scalable recommender system design under reproducible protocols.

How Fair is Your Diffusion Recommender Model?

Sep 06, 2024

Abstract: Diffusion-based recommender systems have recently proven to outperform traditional generative recommendation approaches, such as variational autoencoders and generative adversarial networks. Nevertheless, the machine learning literature has raised several concerns regarding the possibility that diffusion models, while learning the distribution of data samples, may inadvertently carry information bias and lead to unfair outcomes. In light of this aspect, and considering the relevance that fairness has held in recommendations over the last few decades, we conduct one of the first fairness investigations in the literature on DiffRec, a pioneer approach in diffusion-based recommendation. First, we propose an experimental setting involving DiffRec (and its variant L-DiffRec) along with nine state-of-the-art recommendation models, two popular recommendation datasets from the fairness-aware literature, and six metrics accounting for accuracy and consumer/provider fairness. Then, we perform a twofold analysis, one assessing models' performance under accuracy and recommendation fairness separately, and the other identifying if and to what extent such metrics can strike a performance trade-off. Experimental results from both studies confirm the initial unfairness warnings but pave the way for how to address them in future research directions.

Fair Augmentation for Graph Collaborative Filtering

Aug 22, 2024

Abstract: Recent developments in recommendation have harnessed the collaborative power of graph neural networks (GNNs) in learning users' preferences from user-item networks. Despite emerging regulations addressing fairness of automated systems, unfairness issues in graph collaborative filtering remain underexplored, especially from the consumer's perspective. Despite numerous contributions on consumer unfairness, only a few of these works have delved into GNNs. A notable gap exists in the formalization of the latest mitigation algorithms, as well as in their effectiveness and reliability on cutting-edge models. This paper serves as a solid response to recent research highlighting unfairness issues in graph collaborative filtering by reproducing one of the latest mitigation methods. The reproduced technique adjusts the system fairness level by learning a fair graph augmentation. Under an experimental setup based on 11 GNNs, 5 non-GNN models, and 5 real-world networks across diverse domains, our investigation reveals that fair graph augmentation is consistently effective on high-utility models and large datasets. Experiments on the transferability of the fair augmented graph open new issues for future recommendation studies. Source code: https://github.com/jackmedda/FA4GCF.

User Modeling and User Profiling: A Comprehensive Survey

Feb 20, 2024

Abstract: The integration of artificial intelligence (AI) into daily life, particularly through information retrieval and recommender systems, has necessitated advanced user modeling and profiling techniques to deliver personalized experiences. These techniques aim to construct accurate user representations based on the rich amounts of data generated through interactions with these systems. This paper presents a comprehensive survey of the current state, evolution, and future directions of user modeling and profiling research. We provide a historical overview, tracing the development from early stereotype models to the latest deep learning techniques, and propose a novel taxonomy that encompasses all active topics in this research area, including recent trends. Our survey highlights the paradigm shifts towards more sophisticated user profiling methods, emphasizing implicit data collection, multi-behavior modeling, and the integration of graph data structures. We also address the critical need for privacy-preserving techniques and the push towards explainability and fairness in user modeling approaches. By examining the definitions of core terminology, we aim to clarify ambiguities and foster a clearer understanding of the field by proposing two novel encyclopedic definitions of the main terms. Furthermore, we explore the application of user modeling in various domains, such as fake news detection, cybersecurity, and personalized education. This survey serves as a comprehensive resource for researchers and practitioners, offering insights into the evolution of user modeling and profiling and guiding the development of more personalized, ethical, and effective AI systems.

Robustness in Fairness against Edge-level Perturbations in GNN-based Recommendation

Jan 26, 2024

Abstract: Efforts in the recommendation community are shifting from the sole emphasis on utility to considering beyond-utility factors, such as fairness and robustness. Robustness of recommendation models is typically linked to their ability to maintain the original utility when subjected to attacks. Limited research has explored the robustness of a recommendation model in terms of fairness, e.g., the parity in performance across groups, under attack scenarios. In this paper, we aim to assess the robustness of graph-based recommender systems concerning fairness, when exposed to attacks based on edge-level perturbations. To this end, we considered four different fairness operationalizations, including both consumer and provider perspectives. Experiments on three datasets shed light on the impact of perturbations on the targeted fairness notion, uncovering key shortcomings in existing evaluation protocols for robustness. As an example, we observed that perturbations affect consumer fairness to a greater extent than provider fairness, with alarming unfairness for the former. Source code: https://github.com/jackmedda/CPFairRobust

A Cost-Sensitive Meta-Learning Strategy for Fair Provider Exposure in Recommendation

Jan 24, 2024

Abstract: When devising recommendation services, it is important to account for the interests of all content providers, encompassing not only newcomers but also minority demographic groups. In various instances, certain provider groups find themselves underrepresented in the item catalog, a situation that can influence recommendation results. Hence, platform owners often seek to regulate the exposure of these provider groups in the recommended lists. In this paper, we propose a novel cost-sensitive approach designed to guarantee these target exposure levels in pairwise recommendation models. This approach quantifies, and consequently mitigates, the discrepancies between the volume of recommendations allocated to groups and their contribution in the item catalog, under the principle of equity. Our results show that this approach, while aligning groups' exposure with their assigned levels, does not compromise the original recommendation utility. Source code and pre-processed data can be retrieved at https://github.com/alessandraperniciano/meta-learning-strategy-fair-provider-exposure.

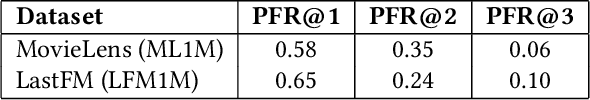

MOReGIn: Multi-Objective Recommendation at the Global and Individual Levels

Jan 23, 2024

Abstract: Multi-Objective Recommender Systems (MORSs) emerged as a paradigm to guarantee multiple (often conflicting) goals. Besides accuracy, a MORS can operate at the global level, where additional beyond-accuracy goals are met for the system as a whole, or at the individual level, meaning that the recommendations are tailored to the needs of each user. The state-of-the-art MORSs operate at either the global or the individual level, without assuming the co-existence of the two perspectives. In this study, we show that when global and individual objectives co-exist, MORSs are not able to meet both types of goals. To overcome this issue, we present an approach that regulates the recommendation lists so as to guarantee both global and individual perspectives, while preserving recommendation effectiveness. Specifically, as the individual perspective, we tackle genre calibration and, as the global perspective, provider fairness. We validate our approach on two real-world datasets, publicly released with this paper.

Faithful Path Language Modelling for Explainable Recommendation over Knowledge Graph

Oct 25, 2023

Abstract: Path reasoning methods over knowledge graphs have gained popularity for their potential to improve transparency in recommender systems. However, the resulting models still rely on pre-trained knowledge graph embeddings, fail to fully exploit the interdependence between entities and relations in the KG for recommendation, and may generate inaccurate explanations. In this paper, we introduce PEARLM, a novel approach that efficiently captures user behaviour and product-side knowledge through language modelling. With our approach, knowledge graph embeddings are directly learned from paths over the KG by the language model, which also unifies entities and relations in the same optimisation space. Constraints on the sequence decoding additionally guarantee path faithfulness with respect to the KG. Experiments on two datasets show the effectiveness of our approach compared to state-of-the-art baselines. Source code and datasets: AVAILABLE AFTER GETTING ACCEPTED.

Counterfactual Graph Augmentation for Consumer Unfairness Mitigation in Recommender Systems

Aug 23, 2023

Abstract: In recommendation literature, explainability and fairness are becoming two prominent perspectives to consider. However, prior works have mostly addressed them separately, for instance by explaining to consumers why a certain item was recommended or mitigating disparate impacts in recommendation utility. None of them has leveraged explainability techniques to inform unfairness mitigation. In this paper, we propose an approach that relies on counterfactual explanations to augment the set of user-item interactions, such that using them while inferring recommendations leads to fairer outcomes. Modeling user-item interactions as a bipartite graph, our approach augments the latter by identifying new user-item edges that not only can explain the original unfairness by design, but can also mitigate it. Experiments on two public data sets show that our approach effectively leads to a better trade-off between fairness and recommendation utility compared with state-of-the-art mitigation procedures. We further analyze the characteristics of added edges to highlight key unfairness patterns. Source code available at https://github.com/jackmedda/RS-BGExplainer/tree/cikm2023.

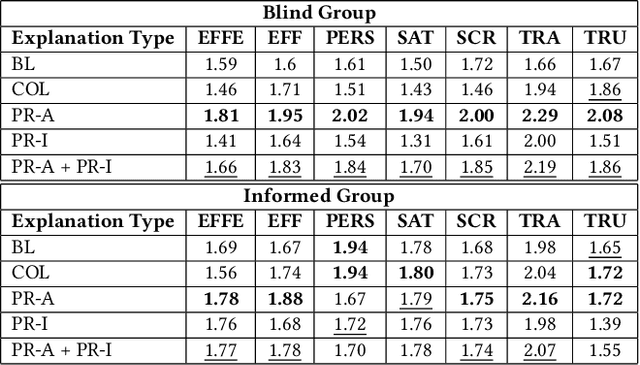

Looks Can Be Deceiving: Linking User-Item Interactions and User's Propensity Towards Multi-Objective Recommendations

Jul 02, 2023

Abstract: Multi-objective recommender systems (MORS) provide suggestions to users according to multiple (and possibly conflicting) goals. When a system optimizes its results at the individual-user level, it tailors them to a user's propensity towards the different objectives. Hence, the capability to understand users' fine-grained needs towards each goal is crucial. In this paper, we present the results of a user study in which we monitored the way users interacted with recommended items, as well as their self-proclaimed propensities towards relevance, novelty and diversity objectives. The study was divided into several sessions, where users evaluated recommendation lists originating from a relevance-only single-objective baseline as well as MORS. We show that although MORS-based recommendations attracted fewer selections, their presence in the early sessions is crucial for users' satisfaction in the later stages. Surprisingly, the self-proclaimed willingness of users to interact with novel and diverse items is not always reflected in the recommendations they accept. Post-study questionnaires provide insights on how to deal with this matter, suggesting that MORS-based results should be accompanied by elements that allow users to understand the recommendations, so as to facilitate their acceptance.
