Giacomo Balloccu

Faithful Path Language Modelling for Explainable Recommendation over Knowledge Graph

Oct 25, 2023

Abstract: Path reasoning methods over knowledge graphs have gained popularity for their potential to improve transparency in recommender systems. However, the resulting models still rely on pre-trained knowledge graph embeddings, fail to fully exploit the interdependence between entities and relations in the KG for recommendation, and may generate inaccurate explanations. In this paper, we introduce PEARLM, a novel approach that efficiently captures user behaviour and product-side knowledge through language modelling. With our approach, knowledge graph embeddings are directly learned from paths over the KG by the language model, which also unifies entities and relations in the same optimisation space. Constraints on the sequence decoding additionally guarantee path faithfulness with respect to the KG. Experiments on two datasets show the effectiveness of our approach compared to state-of-the-art baselines. Source code and datasets: available after acceptance.
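The decoding constraint mentioned in the abstract can be illustrated with a minimal sketch. This is not PEARLM's implementation: the toy graph `KG`, the helper names, and the `toy_score` function (a stand-in for the language model's next-token scores) are all hypothetical. The sketch only shows the general idea of restricting each decoding step to tokens that correspond to an existing edge, so that every generated path is a valid walk over the graph.

```python
from typing import Callable, Dict, List

# Toy KG (hypothetical): head entity -> relation -> tail entities.
KG: Dict[str, Dict[str, List[str]]] = {
    "user_1": {"watched": ["movie_a"]},
    "movie_a": {"starring": ["actor_x"], "directed_by": ["director_y"]},
    "actor_x": {"starred_in": ["movie_a", "movie_b"]},
    "director_y": {"directed": ["movie_c"]},
}


def allowed_next(path: List[str]) -> List[str]:
    """Return the tokens that keep `path` a valid walk over KG."""
    if len(path) % 2 == 1:
        # Path ends with an entity: only its outgoing relations are allowed.
        return sorted(KG.get(path[-1], {}))
    # Path ends with a relation: only tails of (head, relation) are allowed.
    head, relation = path[-2], path[-1]
    return sorted(KG[head][relation])


def constrained_greedy_decode(
    start: str, score: Callable[[List[str], str], float], hops: int
) -> List[str]:
    """Greedy decoding where candidates are masked to valid KG continuations."""
    path = [start]
    for _ in range(2 * hops):  # each hop adds one relation and one entity
        candidates = allowed_next(path)
        if not candidates:
            break
        path.append(max(candidates, key=lambda tok: score(path, tok)))
    return path


def is_faithful(path: List[str]) -> bool:
    """Check that every (head, relation, tail) triple in the path is in KG."""
    return all(
        path[i + 2] in KG.get(path[i], {}).get(path[i + 1], [])
        for i in range(0, len(path) - 2, 2)
    )


def toy_score(path: List[str], token: str) -> float:
    """Stand-in for a language model: prefer tokens not yet in the path."""
    return 0.0 if token in path else 1.0


path = constrained_greedy_decode("user_1", toy_score, hops=3)
print(path, is_faithful(path))
```

Because the mask is applied before the argmax, faithfulness holds regardless of what the scorer prefers; an unconstrained decoder would have to be checked after the fact with something like `is_faithful`.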

Knowledge is Power, Understanding is Impact: Utility and Beyond Goals, Explanation Quality, and Fairness in Path Reasoning Recommendation

Jan 14, 2023

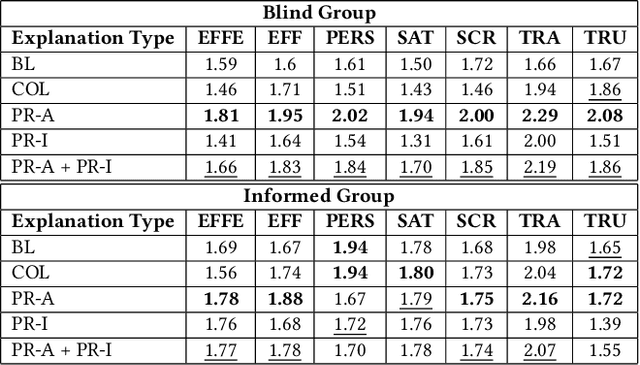

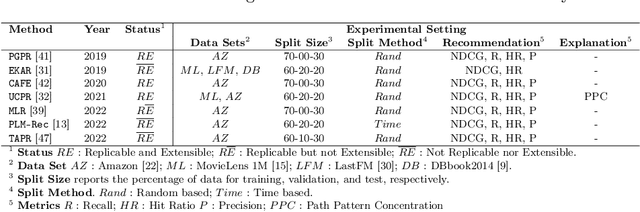

Abstract: Path reasoning is a notable recommendation approach that models high-order user-product relations, based on a Knowledge Graph (KG). This approach can extract reasoning paths between recommended products and already experienced products and, then, turn such paths into textual explanations for the user. Unfortunately, evaluation protocols in this field appear heterogeneous and limited, making it hard to contextualize the impact of the existing methods. In this paper, we replicated three state-of-the-art relevant path reasoning recommendation methods proposed in top-tier conferences. Under a common evaluation protocol, based on two public data sets and in comparison with other knowledge-aware methods, we then studied the extent to which they meet recommendation utility and beyond objectives, explanation quality, and consumer and provider fairness. Our study provides a picture of the progress in this field, highlighting open issues and future directions. Source code: https://github.com/giacoballoccu/rep-path-reasoning-recsys.

Reinforcement Recommendation Reasoning through Knowledge Graphs for Explanation Path Quality

Sep 11, 2022

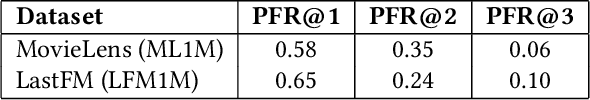

Abstract: Numerous Knowledge Graphs (KGs) are being created to make recommender systems not only intelligent but also knowledgeable. Reinforcement recommendation reasoning is a recent approach able to model high-order user-product relations, according to the KG. This type of approach makes it possible to extract reasoning paths between the recommended product and already experienced products. These paths can in turn be translated into textual explanations to be provided to the user for a given recommendation. However, none of the existing approaches has investigated user-level properties of a single reasoning path or of a group of reasoning paths. In this paper, we propose a series of quantitative properties that monitor the quality of the reasoning paths, based on recency, popularity, and diversity. We then combine in- and post-processing approaches to optimize for both recommendation quality and reasoning path quality. Experiments on three public data sets show that our approaches significantly increase reasoning path quality according to the proposed properties, while preserving recommendation quality. Source code, data sets, and KGs are available at https://tinyurl.com/bdbfzr4n.

Post Processing Recommender Systems with Knowledge Graphs for Recency, Popularity, and Diversity of Explanations

Apr 24, 2022

Abstract: Existing explainable recommender systems have mainly modeled relationships between recommended and already experienced products, and shaped explanation types accordingly (e.g., movie "x" starred by actress "y" recommended to a user because that user watched other movies with "y" as an actress). However, none of these systems has investigated the extent to which properties of a single explanation (e.g., the recency of interaction with that actress) and of a group of explanations for a recommended list (e.g., the diversity of the explanation types) can influence the perceived explanation quality. In this paper, we conceptualized three novel properties that model the quality of the explanations (linking interaction recency, shared entity popularity, and explanation type diversity) and proposed re-ranking approaches able to optimize for these properties. Experiments on two public data sets showed that our approaches can increase explanation quality according to the proposed properties, fairly across demographic groups, while preserving recommendation utility. The source code and data are available at https://github.com/giacoballoccu/explanation-quality-recsys.
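As a rough illustration of what re-ranking for explanation properties can look like, the sketch below greedily builds a top-k list by trading off the base recommender's relevance against an explanation-quality term. It is not the paper's method: the `Candidate` fields, the assumption that recency and popularity are already normalised to [0, 1], the equal weighting of the three properties, and the `alpha` trade-off parameter are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    item: str
    relevance: float   # score from the base recommender, assumed in [0, 1]
    recency: float     # assumed normalised recency of the linking interaction
    popularity: float  # assumed normalised popularity of the shared entity
    path_type: str     # explanation type, e.g. "starring"


def rerank(candidates: List[Candidate], k: int, alpha: float) -> List[Candidate]:
    """Greedy re-ranking: alpha=0 keeps the relevance order, larger alpha
    gives more weight to recency, popularity, and explanation-type diversity."""
    selected: List[Candidate] = []
    remaining = list(candidates)
    while remaining and len(selected) < k:
        seen_types = {c.path_type for c in selected}

        def gain(c: Candidate) -> float:
            # Diversity term: reward explanation types not yet in the list.
            novelty = 0.0 if c.path_type in seen_types else 1.0
            quality = (c.recency + c.popularity + novelty) / 3
            return (1 - alpha) * c.relevance + alpha * quality

        best = max(remaining, key=gain)
        selected.append(best)
        remaining.remove(best)
    return selected


candidates = [
    Candidate("m1", 0.9, 0.2, 0.5, "starring"),
    Candidate("m2", 0.8, 0.3, 0.5, "starring"),
    Candidate("m3", 0.6, 0.9, 0.6, "directed_by"),
]
print([c.item for c in rerank(candidates, k=2, alpha=0.0)])
print([c.item for c in rerank(candidates, k=2, alpha=0.5)])
```

With `alpha=0.0` the list follows relevance alone; with `alpha=0.5` the second slot goes to the candidate with a different explanation type and a more recent linking interaction, which is the kind of trade-off the abstract describes between explanation quality and recommendation utility.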
