Luca Di Giammarino

ActLoc: Learning to Localize on the Move via Active Viewpoint Selection

Aug 28, 2025

Abstract:Reliable localization is critical for robot navigation, yet most existing systems implicitly assume that all viewing directions at a location are equally informative. In practice, localization becomes unreliable when the robot observes unmapped, ambiguous, or uninformative regions. To address this, we present ActLoc, an active viewpoint-aware planning framework for enhancing localization accuracy for general robot navigation tasks. At its core, ActLoc employs a large-scale trained attention-based model for viewpoint selection. The model encodes a metric map and the camera poses used during map construction, and predicts localization accuracy across yaw and pitch directions at arbitrary 3D locations. These per-point accuracy distributions are incorporated into a path planner, enabling the robot to actively select camera orientations that maximize localization robustness while respecting task and motion constraints. ActLoc achieves state-of-the-art results on single-viewpoint selection and generalizes effectively to full-trajectory planning. Its modular design makes it readily applicable to diverse robot navigation and inspection tasks.

MAD-BA: 3D LiDAR Bundle Adjustment -- from Uncertainty Modelling to Structure Optimization

Jan 07, 2025

Abstract:The joint optimization of sensor poses and 3D structure is fundamental for state estimation in robotics and related fields. Current LiDAR systems often prioritize pose optimization, with structure refinement either omitted or treated separately using representations like signed distance functions or neural networks. This paper introduces a framework for simultaneous optimization of sensor poses and 3D map, represented as surfels. A generalized LiDAR uncertainty model is proposed to address degraded or less reliable measurements in varying scenarios. Experimental results on public datasets demonstrate improved performance over most comparable state-of-the-art methods. The system is provided as open-source software to support further research.

Learning Where to Look: Self-supervised Viewpoint Selection for Active Localization using Geometrical Information

Jul 22, 2024

Abstract:Accurate localization in diverse environments is a fundamental challenge in computer vision and robotics. The task involves determining the precise position and orientation of a sensor, typically a camera, within a given space. Traditional localization methods often rely on passive sensing, which may struggle in scenarios with limited features or dynamic environments. In response, this paper explores the domain of active localization, emphasizing the importance of viewpoint selection to enhance localization accuracy. Our contributions involve using a data-driven approach with a simple architecture designed for real-time operation, a self-supervised data training method, and the capability to consistently integrate our map into a planning framework tailored for real-world robotics applications. Our results demonstrate that our method outperforms the existing approach targeting similar problems and generalizes to both synthetic and real data. We also release an open-source implementation to benefit the community.

MAD-ICP: It Is All About Matching Data -- Robust and Informed LiDAR Odometry

May 09, 2024

Abstract:LiDAR odometry is the task of estimating the ego-motion of the sensor from sequential laser scans. This problem has been addressed by the community for more than two decades, and many effective solutions are available nowadays. Most of these systems implicitly rely on assumptions about the operating environment, the sensor used, and the motion pattern. When these assumptions are violated, several well-known systems tend to perform poorly. This paper presents a LiDAR odometry system that can overcome these limitations and operate well under different operating conditions while achieving performance comparable with domain-specific methods. Our algorithm follows the well-known ICP paradigm and leverages a PCA-based kd-tree implementation to extract structural information about the clouds being registered and to compute the minimization metric for the alignment. The drift is bounded by managing the local map based on the estimated uncertainty of the tracked pose. To benefit the community, we release an open-source C++ anytime real-time implementation.

VBR: A Vision Benchmark in Rome

Apr 17, 2024

Abstract:This paper presents a vision and perception research dataset collected in Rome, featuring RGB data, 3D point clouds, IMU, and GPS data. We introduce a new benchmark targeting visual odometry and SLAM, to advance the research in autonomous robotics and computer vision. This work complements existing datasets by simultaneously addressing several issues, such as environment diversity, motion patterns, and sensor frequency. It uses up-to-date devices and presents effective procedures to accurately calibrate the intrinsics and extrinsics of the sensors while addressing temporal synchronization. During recording, we cover multi-floor buildings, gardens, urban and highway scenarios. Combining handheld and car-based data collections, our setup can simulate any robot (quadrupeds, quadrotors, autonomous vehicles). The dataset includes an accurate 6-DoF ground truth based on a novel methodology that refines the RTK-GPS estimate with LiDAR point clouds through Bundle Adjustment. All sequences, divided into training and testing sets, are accessible through our website.

Ca$^2$Lib: Simple and Accurate LiDAR-RGB Calibration using Small Common Markers

Sep 14, 2023

Abstract:In many fields of robotics, knowing the relative position and orientation between two sensors is a mandatory precondition to operate with multiple sensing modalities. In this context, the LiDAR-RGB camera pair offers complementary features: LiDARs yield sparse, high-quality range measurements, while RGB cameras provide a dense color measurement of the environment. Existing techniques often rely either on complex calibration targets that are expensive to obtain, or on extracted virtual correspondences that can hinder the estimate's accuracy. In this paper we address the problem of LiDAR-RGB calibration using typical calibration patterns (i.e. A3 chessboard) with minimal human intervention. Our approach exploits the planarity of the target to find correspondences between the sensors' measurements, leading to features that are robust to LiDAR noise. Moreover, we estimate a solution by solving a joint non-linear optimization problem. We validated our approach by carrying out quantitative and comparative experiments with other state-of-the-art approaches. Our results show that our simple schema performs on par with or better than other approaches using complex calibration targets. Finally, we release an open-source C++ implementation at https://github.com/srrg-sapienza/ca2lib

Photometric LiDAR and RGB-D Bundle Adjustment

Mar 29, 2023

Abstract:The joint optimization of the sensor trajectory and 3D map is a crucial characteristic of Simultaneous Localization and Mapping (SLAM) systems. To achieve this, the gold standard is Bundle Adjustment (BA). Modern 3D LiDARs now retain higher resolutions that enable the creation of point cloud images resembling those taken by conventional cameras. Nevertheless, the typical effective global refinement techniques employed for RGB-D sensors are not widely applied to LiDARs. This paper presents a novel BA photometric strategy that accounts for both RGB-D and LiDAR in the same way. Our work can be used on top of any SLAM/GNSS estimate to improve and refine the initial trajectory. We conducted different experiments using these two depth sensors on public benchmarks. Our results show that our system performs on par or better compared to other state-of-the-art ad-hoc SLAM/BA strategies, free from data association and without making assumptions about the environment. In addition, we present the benefit of jointly using RGB-D and LiDAR within our unified method. We finally release an open-source CUDA/C++ implementation.

Low Frequency Spinning LiDAR De-Skewing

Mar 13, 2023

Abstract:Most commercially available Light Detection and Ranging (LiDAR) sensors measure the distances along a 2D section of the environment by sequentially sampling the free range along directions centered at the sensor's origin. When the sensor moves during the acquisition, the measured ranges are affected by a phenomenon known as skewing, which appears as a distortion in the acquired scan. Skewing potentially affects all systems that rely on LiDAR data; however, it could be compensated if the position of the sensor were known each time a single range is measured. Most methods to de-skew a LiDAR are based on external sensors, such as IMU or wheel odometry, to estimate these intermediate LiDAR positions. In this paper we present a method that relies exclusively on range measurements to effectively estimate the robot velocities, which are then used for de-skewing. Our approach is suitable for low-frequency LiDARs, where the skewing is more evident. It can be seamlessly integrated into existing pipelines, enhancing their performance at negligible computational cost. We validated the proposed method with statistical experiments characterizing different operating conditions.
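
To make the compensation step concrete, here is a minimal Python sketch of de-skewing a 2D scan once a velocity is available. It is not the paper's method: the abstract does not describe how the velocities are estimated from ranges, so the sketch simply takes them as inputs. It assumes a constant planar velocity during the scan, beams sampled uniformly in time, and the hypothetical names `pose_at` and `deskew_scan`.

```python
import math

def pose_at(t, vx, vy, omega):
    """Sensor pose (x, y, theta) after moving for t seconds with a
    constant planar velocity (vx, vy, omega) expressed in the sensor frame."""
    theta = omega * t
    if abs(omega) < 1e-9:
        # Straight-line motion: no rotation to integrate.
        return vx * t, vy * t, theta
    s, c = math.sin(theta), math.cos(theta)
    x = (vx * s - vy * (1.0 - c)) / omega
    y = (vx * (1.0 - c) + vy * s) / omega
    return x, y, theta

def deskew_scan(ranges, angles, scan_duration, vx, vy, omega):
    """Express every beam endpoint in the sensor frame at scan start.

    Assumption: beam i is acquired at time scan_duration * i / n.
    """
    n = len(ranges)
    points = []
    for i, (r, a) in enumerate(zip(ranges, angles)):
        t = scan_duration * i / n
        x, y, theta = pose_at(t, vx, vy, omega)
        # Endpoint in the sensor frame at the time the beam was fired.
        px, py = r * math.cos(a), r * math.sin(a)
        # Rigid transform into the frame of the first beam.
        c, s = math.cos(theta), math.sin(theta)
        points.append((x + c * px - s * py, y + s * px + c * py))
    return points
```

With zero velocity the points are returned unchanged; the slower the scan rate, the larger `t` becomes for the last beams and the larger the correction, which matches the abstract's remark that skewing is more evident at low frequency.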

HiPE: Hierarchical Initialization for Pose Graphs

Jul 04, 2022

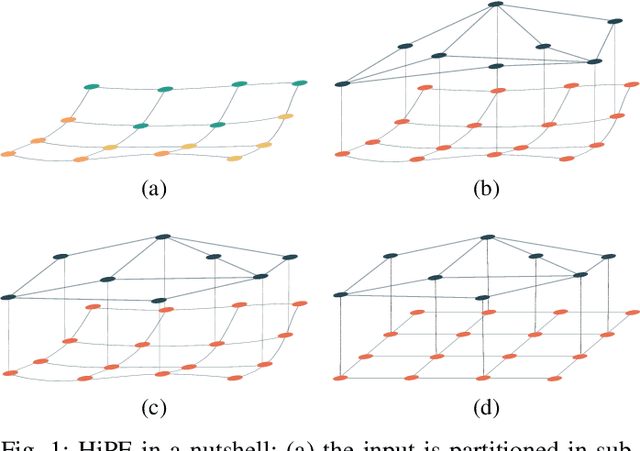

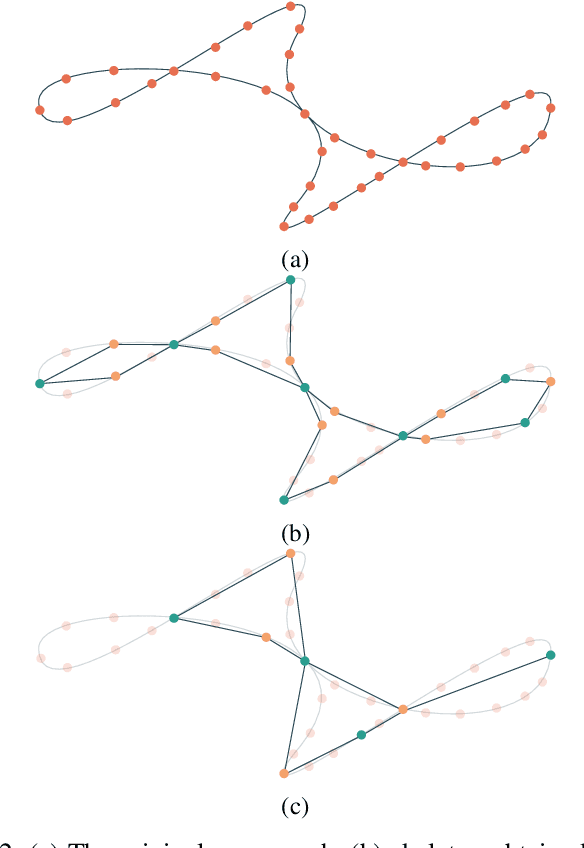

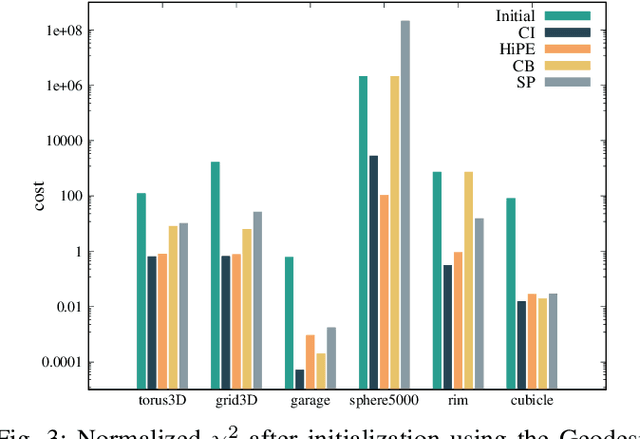

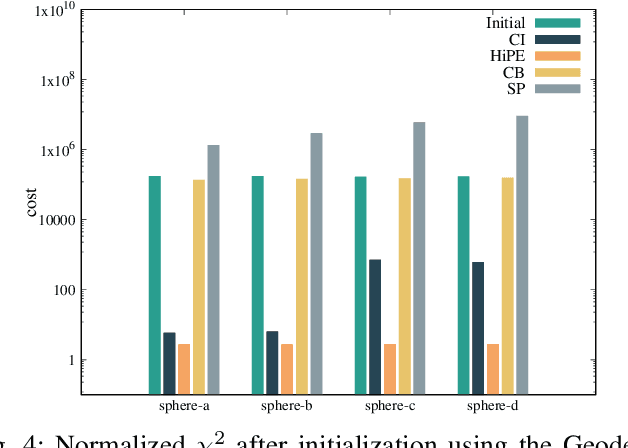

Abstract:Pose graph optimization is a non-convex optimization problem encountered in many areas of robotics perception. Its convergence to an accurate solution is conditioned by two factors: the non-linearity of the cost function in use and the initial configuration of the pose variables. In this paper, we present HiPE, a novel hierarchical algorithm for pose graph initialization. Our approach exploits a coarse-grained graph that encodes an abstract representation of the problem geometry. We construct this graph by combining maximum likelihood estimates coming from local regions of the input. By leveraging the sparsity of this representation, we can initialize the pose graph in a non-linear fashion, without computational overhead compared to existing methods. The resulting initial guess can effectively bootstrap the fine-grained optimization that is used to obtain the final solution. In addition, we perform an empirical analysis on the impact of different cost functions on the final estimate. Our experimental evaluation shows that the usage of HiPE leads to a more efficient and robust optimization process, comparing favorably with state-of-the-art methods.

MD-SLAM: Multi-cue Direct SLAM

Mar 24, 2022

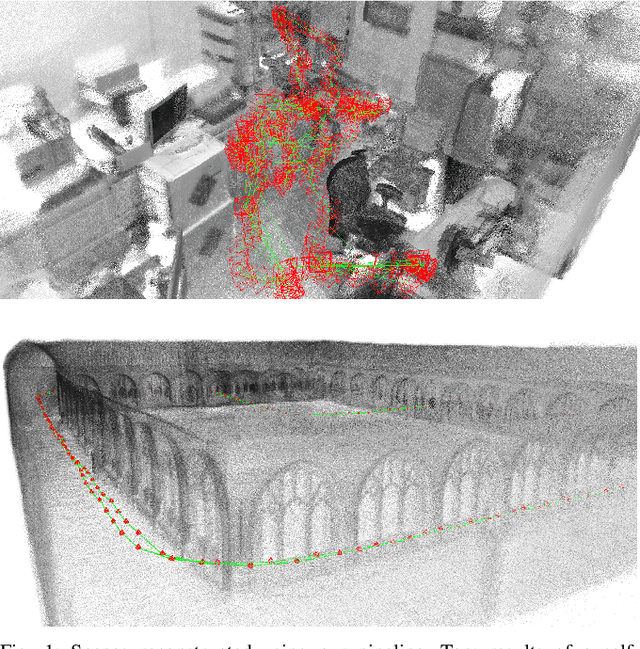

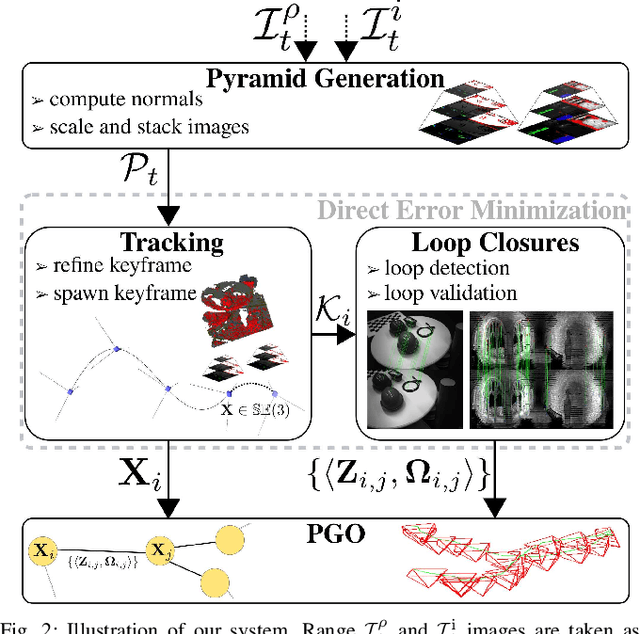

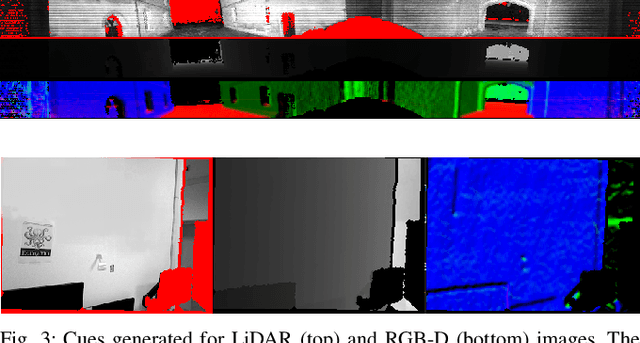

Abstract:Simultaneous Localization and Mapping (SLAM) systems are fundamental building blocks for any autonomous robot navigating in unknown environments. The SLAM implementation heavily depends on the sensor modality employed on the mobile platform. For this reason, assumptions on the scene's structure are often made to maximize estimation accuracy. This paper presents a novel direct 3D SLAM pipeline that works independently for RGB-D and LiDAR sensors. Building upon prior work on multi-cue photometric frame-to-frame alignment, our proposed approach provides an easy-to-extend and generic SLAM system. Our pipeline requires only minor adaptations within the projection model to handle different sensor modalities. We couple a position tracking system with an appearance-based relocalization mechanism that handles large loop closures. Loop closures are validated by the same direct registration algorithm used for odometry estimation. We present comparative experiments with state-of-the-art approaches on publicly available benchmarks using RGB-D cameras and 3D LiDARs. Our system performs well in heterogeneous datasets compared to other sensor-specific methods while making no assumptions about the environment. Finally, we release an open-source C++ implementation of our system.
