Irvin Aloise

Augmented Reality without Borders: Achieving Precise Localization Without Maps

Sep 04, 2024

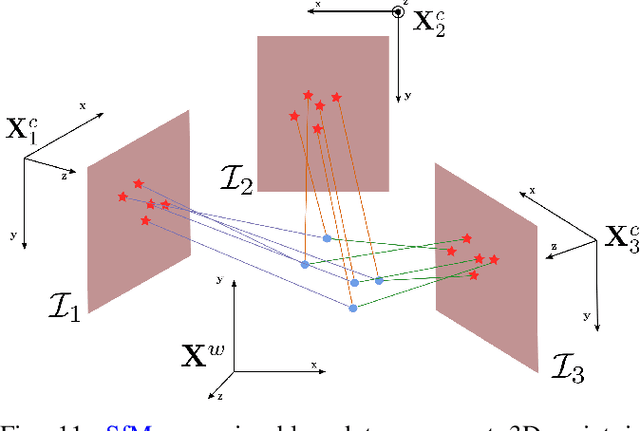

Abstract: Visual localization is crucial for Computer Vision and Augmented Reality (AR) applications, where determining the position and orientation of the camera or device is essential to interact accurately with the physical environment. Traditional methods rely on detailed 3D maps constructed using Structure from Motion (SfM) or Simultaneous Localization and Mapping (SLAM), which are computationally expensive and impractical for dynamic or large-scale environments. We introduce MARLoc, a novel localization framework for AR applications that uses known relative transformations within image sequences to perform intra-sequence triangulation, generating 3D-2D correspondences for pose estimation and refinement. MARLoc eliminates the need for pre-built SfM maps, providing accurate and efficient localization suitable for dynamic outdoor environments. Evaluation with benchmark datasets and real-world experiments demonstrates MARLoc's state-of-the-art performance and robustness. By integrating MARLoc into an AR device, we highlight its capability to achieve precise localization in real-world outdoor scenarios, showcasing its practical effectiveness and potential to enhance visual localization in AR applications.
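The triangulation step mentioned in the abstract can be illustrated with a minimal sketch. It assumes two camera centers and viewing rays already expressed in a common frame (which known relative transformations would allow), and it uses the classic midpoint method. The function name `triangulate_midpoint` and the choice of the midpoint method are illustrative assumptions, not a description of how MARLoc is implemented.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint triangulation of two rays (hypothetical helper).

    c1, c2: camera centers; d1, d2: ray directions, all 3-tuples in one frame.
    Returns the midpoint of the shortest segment joining the two rays,
    or None when the rays are (nearly) parallel.
    """
    dot = lambda u, v: sum(a * b for a, b in zip(u, v))
    w = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None  # parallel rays carry no depth information
    s = (b * e - c * d) / denom  # parameter along the first ray
    t = (a * e - b * d) / denom  # parameter along the second ray
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


# Two cameras one unit apart, both observing the point (1, 1, 5).
point = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 1.0, 5.0),
                             (1.0, 0.0, 0.0), (0.0, 1.0, 5.0))
```

Each triangulated 3D point, paired with its 2D observation in a query image, would form one 3D-2D correspondence of the kind a pose estimator consumes.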

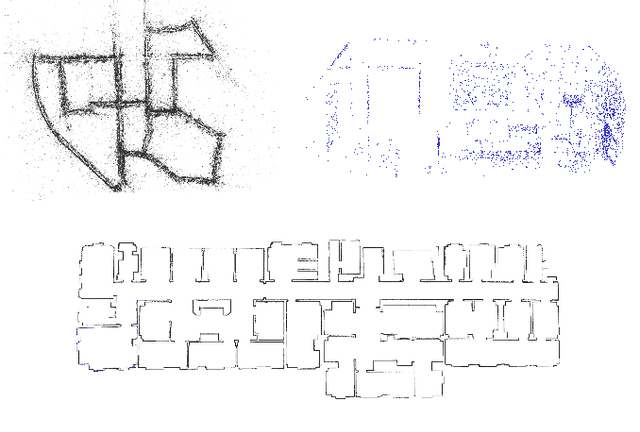

Visual Place Recognition using LiDAR Intensity Information

Mar 17, 2021

Abstract: Robots and autonomous systems need to know where they are within a map to navigate effectively. Thus, simultaneous localization and mapping (SLAM) is a common building block of robot navigation systems. When building a map via a SLAM system, robots need to re-recognize places to find loop closures and reduce odometry drift. Image-based place recognition has received a lot of attention in computer vision, and in this work, we investigate how such approaches can be used for 3D LiDAR data. Recent LiDAR sensors produce high-resolution 3D scans in combination with comparably stable intensity measurements. Through a cylindrical projection, we can turn this information into a panoramic image. As a result, we can apply techniques from visual place recognition to LiDAR intensity data. The question of how well this approach works in practice has not been answered so far. This paper provides an analysis of how such visual techniques can be used with LiDAR data, and we provide an evaluation on different datasets. Our results suggest that this form of place recognition is possible and an effective means for determining loop closures.
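The cylindrical projection described above can be sketched as follows. This is a minimal illustration, assuming points given as `(x, y, z, intensity)` tuples in the sensor frame. The image size, the vertical field of view `v_fov`, and the function name are hypothetical parameters chosen for the example, not values from the paper.

```python
import math


def cylindrical_projection(points, width=16, height=4, v_fov=(-0.4, 0.4)):
    """Project LiDAR points onto a width x height panoramic intensity image.

    Columns index azimuth over 360 degrees, rows index elevation inside
    v_fov (radians, bottom to top). The closest point wins each pixel.
    """
    v_min, v_max = v_fov
    image = [[0.0] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for x, y, z, intensity in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue  # a point at the sensor origin has no direction
        azimuth = math.atan2(y, x)
        elevation = math.asin(z / rng)
        if not (v_min <= elevation <= v_max):
            continue  # outside the assumed vertical field of view
        col = int((azimuth + math.pi) / (2.0 * math.pi) * width) % width
        row = min(int((v_max - elevation) / (v_max - v_min) * height), height - 1)
        if rng < depth[row][col]:
            depth[row][col] = rng
            image[row][col] = intensity
    return image


# Two returns along the same direction: the nearer one is kept.
panorama = cylindrical_projection([(2.0, 0.0, 0.0, 0.9), (1.0, 0.0, 0.0, 0.5)])
```

The resulting 2D array can be handed to an image-based place recognition pipeline like any grayscale panorama.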

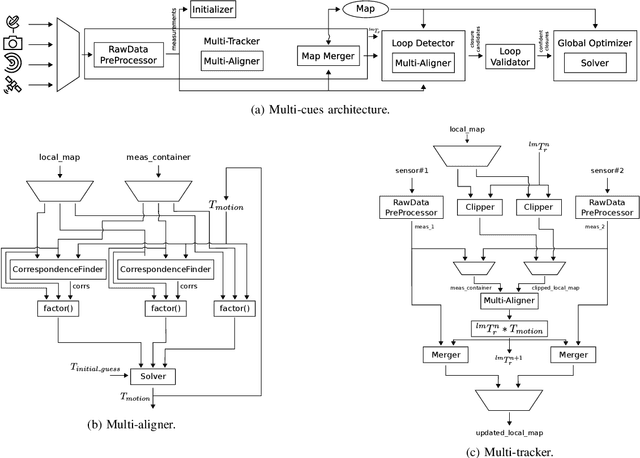

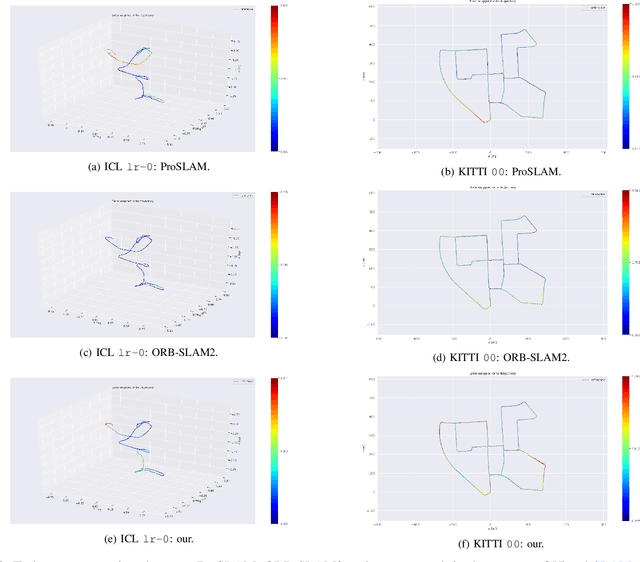

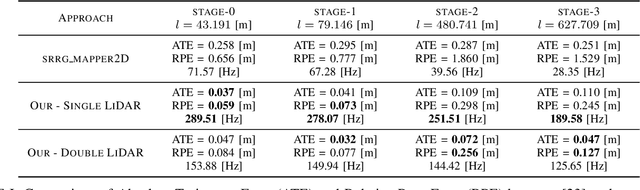

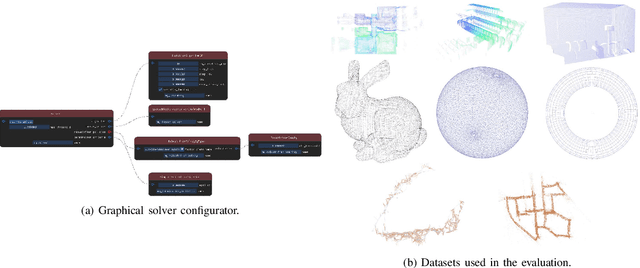

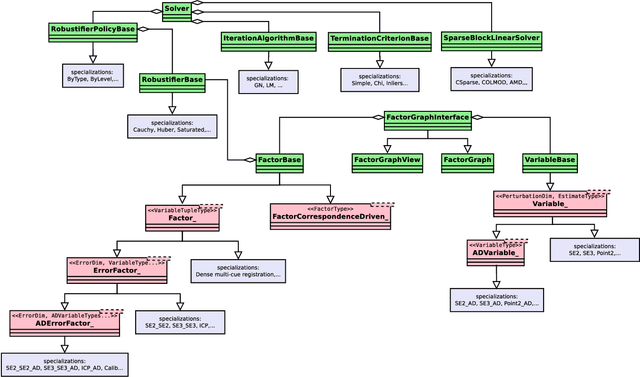

Plug-and-Play SLAM: A Unified SLAM Architecture for Modularity and Ease of Use

Mar 02, 2020

Abstract: Nowadays, SLAM (Simultaneous Localization and Mapping) is considered by the Robotics community to be a mature field. Currently, there are many open-source systems that are able to deliver fast and accurate estimation in typical real-world scenarios. Still, these systems often provide ad-hoc implementations that are tied to predefined sensor configurations. In this work, we tackle this issue, proposing a novel SLAM architecture specifically designed to address heterogeneous sensor configurations and to standardize SLAM solutions. Thanks to its modularity and to specific design patterns, the presented architecture is easy to extend, enhancing code reuse and efficiency. Finally, adopting our solution, we conducted comparative experiments for a variety of sensor configurations, showing competitive results that confirm state-of-the-art performance.

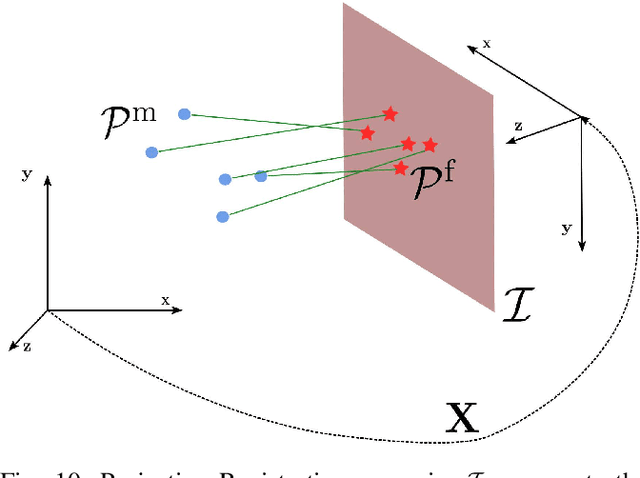

Least Squares Optimization: from Theory to Practice

Feb 26, 2020

Abstract: Nowadays, Non-Linear Least-Squares is the foundation of many Robotics and Computer Vision systems. The research community has investigated this topic deeply in recent years, and this has resulted in the development of several open-source solvers that address a constantly increasing range of problems. In this work, we propose a unified methodology to design and develop efficient Least-Squares Optimization algorithms, focusing on the structures and patterns of each specific domain. Furthermore, we present a novel open-source optimization system that transparently addresses problems with different structures and is designed to be easy to extend. The system is written in modern C++ and can run efficiently on embedded systems. Source code: https://srrg.gitlab.io/srrg2-solver.html. We validated our approach by conducting comparative experiments on several problems using standard datasets. The results show that our system achieves state-of-the-art performance in all tested scenarios.
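As a rough illustration of the iterative least-squares scheme the abstract refers to, the sketch below runs Gauss-Newton on a toy problem: estimating a 2D position from range measurements to known anchors. The problem, the function name, and the parameters are assumptions made for this example. It does not use or mirror the srrg2-solver API.

```python
import math


def gauss_newton_ranges(anchors, ranges, x0, iterations=10):
    """Gauss-Newton for a 2D position from ranges to known anchors (toy example).

    Each iteration linearizes the residuals e_k = ||p - a_k|| - r_k,
    accumulates H = sum J^T J and b = sum J^T e, then solves H * delta = -b.
    """
    x, y = x0
    for _ in range(iterations):
        h11 = h12 = h22 = 0.0
        b1 = b2 = 0.0
        for (ax, ay), r in zip(anchors, ranges):
            dx, dy = x - ax, y - ay
            d = math.hypot(dx, dy)
            if d < 1e-12:
                continue  # Jacobian undefined exactly at an anchor
            e = d - r                # residual
            j1, j2 = dx / d, dy / d  # Jacobian of the residual w.r.t. (x, y)
            h11 += j1 * j1
            h12 += j1 * j2
            h22 += j2 * j2
            b1 += j1 * e
            b2 += j2 * e
        det = h11 * h22 - h12 * h12
        if abs(det) < 1e-12:
            break  # degenerate geometry, the 2x2 system is singular
        # Closed-form solution of the 2x2 normal equations.
        x += (-b1 * h22 + b2 * h12) / det
        y += (h12 * b1 - h11 * b2) / det
    return x, y


anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
truth = (1.0, 2.0)
ranges = [math.hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
estimate = gauss_newton_ranges(anchors, ranges, x0=(1.5, 1.5))
```

Real solvers exploit the sparsity of H and operate on manifolds. The dense 2x2 solve here only shows the linearize, accumulate, solve, update loop.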

Matrix Difference in Pose-Graph Optimization

Sep 04, 2018

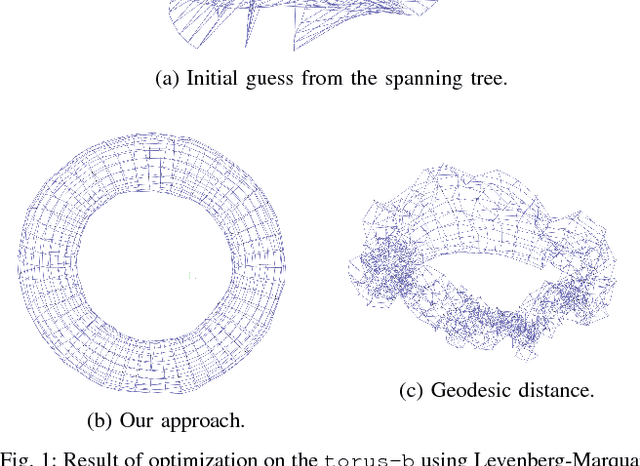

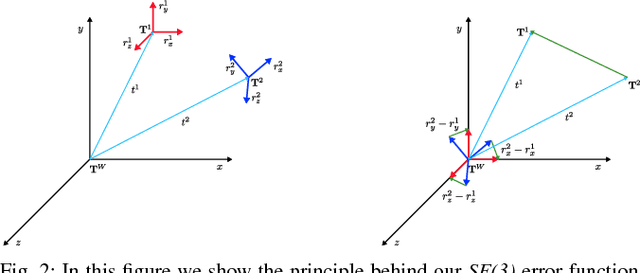

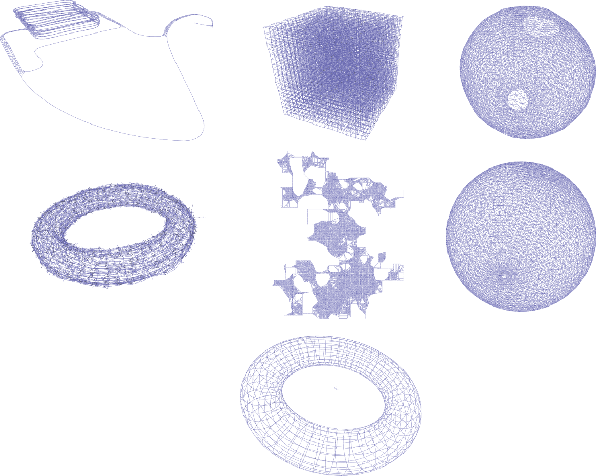

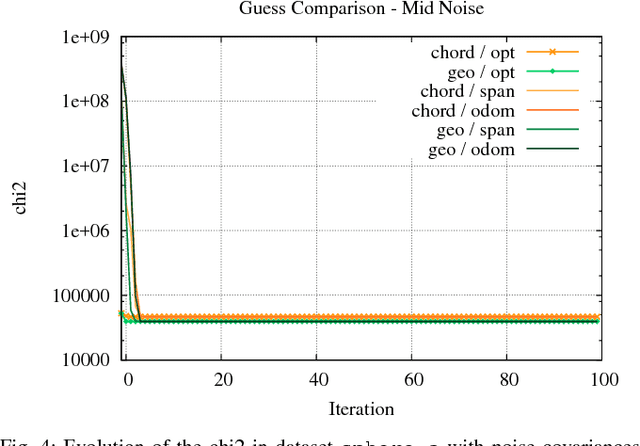

Abstract: Pose-Graph optimization is a crucial component of many modern SLAM systems. Most prominent state-of-the-art systems address this problem by iterative non-linear least squares. Both the number of iterations and the convergence basin of these approaches depend on the error functions used to describe the problem. The smoother and more convex the error function with respect to perturbations of the state variables, the better the least-squares solver will perform. In this paper we propose an alternative error function obtained by removing some non-linearities from the commonly used one, i.e. the geodesic error function. Comparative experiments conducted on common benchmarking datasets confirm that our function is more robust to noise that affects the rotational component of the pose measurements and, thus, exhibits a larger convergence basin than the geodesic one. Furthermore, its implementation is relatively easy compared to the geodesic distance. This property leads to rather simple derivatives and nice numerical properties of the Jacobians resulting from the effective computation of the quadratic approximation used by the Gauss-Newton algorithm.
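To make the contrast between the two error functions concrete, the following sketch compares them on planar (SE(2)) poses. It is one plausible reading of the abstract: the geodesic error extracts an angle from the relative transform, while the matrix-difference error subtracts matrix entries directly. The 2D setting and all function names are assumptions for illustration, and the paper's own formulation may differ.

```python
import math


def v2t(x, y, theta):
    """Build a 3x3 homogeneous SE(2) matrix from (x, y, theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]]


def inverse(T):
    """Invert an SE(2) matrix: [R t]^-1 = [R^T, -R^T t]."""
    c, s, x, y = T[0][0], T[1][0], T[0][2], T[1][2]
    return [[c, s, -(c * x + s * y)], [-s, c, -(-s * x + c * y)], [0.0, 0.0, 1.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def geodesic_error(Xi, Xj, Z):
    """Minimal-vector error of Z^-1 * Xi^-1 * Xj; needs atan2 on the rotation."""
    D = matmul(inverse(Z), matmul(inverse(Xi), Xj))
    return [D[0][2], D[1][2], math.atan2(D[1][0], D[0][0])]


def matrix_difference_error(Xi, Xj, Z):
    """Entry-wise difference between the predicted relative pose and Z."""
    P = matmul(inverse(Xi), Xj)
    return [P[r][c] - Z[r][c] for r in range(2) for c in range(3)]


Xi, Xj = v2t(0.0, 0.0, 0.0), v2t(1.0, 2.0, 0.3)
Z = v2t(1.0, 2.0, 0.3)  # measurement that matches the prediction exactly
e_geo = geodesic_error(Xi, Xj, Z)
e_mat = matrix_difference_error(Xi, Xj, Z)
```

In this sketch the matrix-difference error avoids the angle extraction step, so its rotational entries stay bounded even for large rotation noise, which is consistent with the simpler derivatives the abstract mentions.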
