Longyuan Li

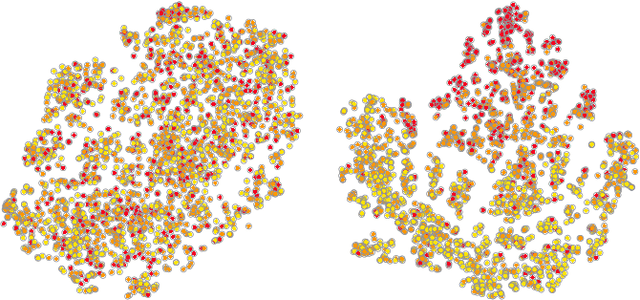

Enhancing Unsupervised Anomaly Detection with Score-Guided Network

Sep 10, 2021

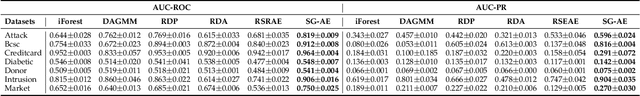

Abstract: Anomaly detection plays a crucial role in various real-world applications, including healthcare and finance systems. Owing to the limited number of anomaly labels in these complex systems, unsupervised anomaly detection methods have attracted great attention in recent years. Two major challenges faced by existing unsupervised methods are: (i) distinguishing between normal and abnormal data in the transition field, where normal and abnormal data are highly mixed together; (ii) defining an effective metric to maximize the gap between normal and abnormal data in a hypothesis space, which is built by a representation learner. To that end, this work proposes a novel scoring network with a score-guided regularization to learn and enlarge the anomaly score disparities between normal and abnormal data. With such a score-guided strategy, the representation learner can gradually learn more informative representations during the model training stage, especially for the samples in the transition field. We next propose a score-guided autoencoder (SG-AE), which incorporates the scoring network into an autoencoder framework for anomaly detection, and we also incorporate the scoring network into three other state-of-the-art models to further demonstrate the effectiveness and transferability of the design. Extensive experiments on both synthetic and real-world datasets demonstrate the state-of-the-art performance of these score-guided models (SGMs).

Anomaly Detection of Time Series with Smoothness-Inducing Sequential Variational Auto-Encoder

Feb 02, 2021

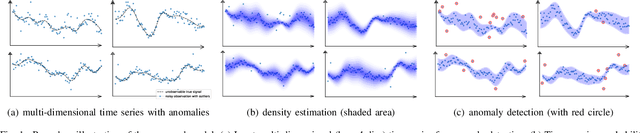

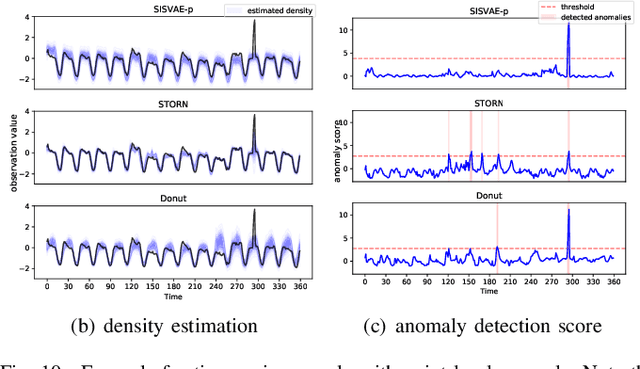

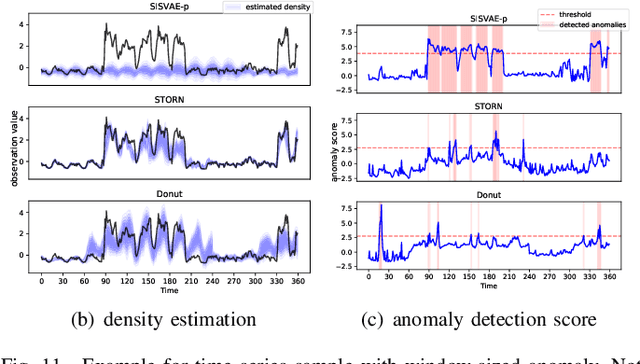

Abstract: Deep generative models have demonstrated their effectiveness in learning latent representations and modeling complex dependencies of time series. In this paper, we present a Smoothness-Inducing Sequential Variational Auto-Encoder (SISVAE) model for robust estimation and anomaly detection of multi-dimensional time series. Our model is based on the Variational Auto-Encoder (VAE), and its backbone is a Recurrent Neural Network that captures latent temporal structures of time series for both the generative model and the inference model. Specifically, our model parameterizes the mean and variance for each time-stamp with flexible neural networks, resulting in a non-stationary model that can work without the assumption of constant noise commonly made by existing Markov models. However, such flexibility may make the model fragile to anomalies. To achieve robust density estimation, which can also benefit detection tasks, we propose a smoothness-inducing prior over possible estimations. The proposed prior works as a regularizer that places a penalty on non-smooth reconstructions. Our model is learned efficiently with a novel stochastic gradient variational Bayes estimator. In particular, we study two decision criteria for anomaly detection: reconstruction probability and reconstruction error. We show the effectiveness of our model on both synthetic datasets and public real-world benchmarks.
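The two ideas named in the abstract above, a penalty on non-smooth reconstructions and a reconstruction-error decision criterion, can be illustrated with a minimal sketch. This is not the SISVAE model: the squared-difference penalty is a simplified stand-in for the paper's smoothness-inducing prior, and the function names and the `threshold` parameter are hypothetical.

```python
def smoothness_penalty(recon):
    """Sum of squared differences between consecutive reconstructed values.

    Assumption: a simple stand-in for a smoothness regularizer; it is zero
    for a constant reconstruction and grows as the reconstruction jumps.
    """
    return sum((b - a) ** 2 for a, b in zip(recon, recon[1:]))


def reconstruction_error(series, recon):
    """Per-time-stamp squared error between the observation and its reconstruction."""
    return [(x - r) ** 2 for x, r in zip(series, recon)]


def flag_anomalies(series, recon, threshold):
    """Indices whose reconstruction error exceeds a (hypothetical) threshold."""
    errors = reconstruction_error(series, recon)
    return [i for i, e in enumerate(errors) if e > threshold]


if __name__ == "__main__":
    observed = [1.0, 1.0, 9.0, 1.0]       # one spike at index 2
    reconstructed = [1.0, 1.0, 1.0, 1.0]  # a smooth reconstruction ignores the spike
    print(smoothness_penalty(reconstructed))
    print(flag_anomalies(observed, reconstructed, threshold=4.0))
```

A reconstruction that stays smooth does not follow the spike, so the spike shows up as a large reconstruction error, which is the intuition behind pairing a smoothness regularizer with an error-based criterion.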

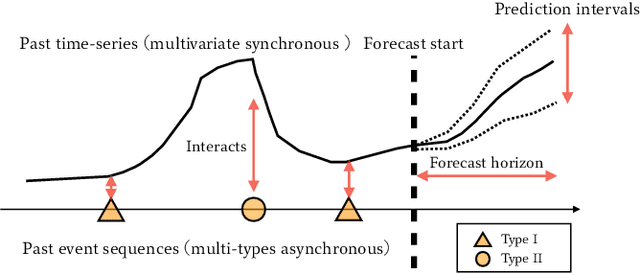

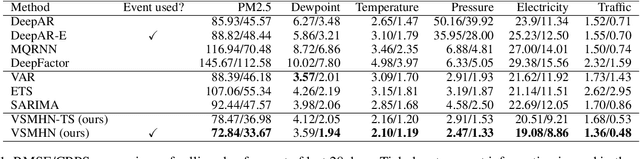

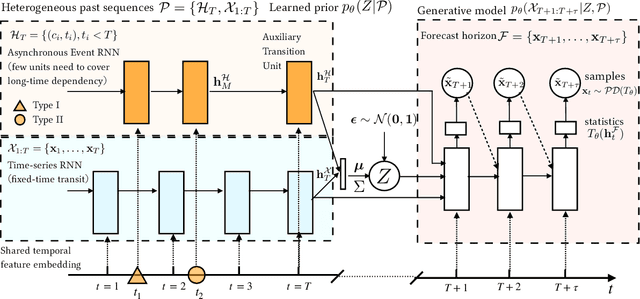

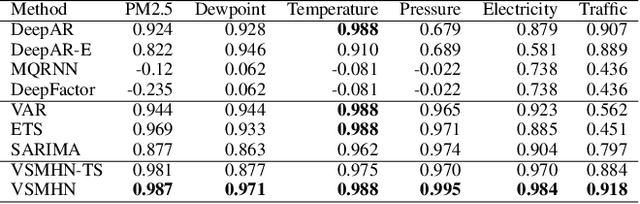

Synergetic Learning of Heterogeneous Temporal Sequences for Multi-Horizon Probabilistic Forecasting

Jan 31, 2021

Abstract: Time series are ubiquitous across applications such as transportation, finance and healthcare. Time series are often influenced by external factors, especially in the form of asynchronous events, making forecasting difficult. However, existing models are mainly designed for either synchronous time series or asynchronous event sequences, and can hardly provide a unified way to capture the relation between them. We propose the Variational Synergetic Multi-Horizon Network (VSMHN), a novel deep conditional generative model. To learn complex correlations across heterogeneous sequences, a tailored encoder is devised to combine the advances in deep point process models and variational recurrent neural networks. In addition, an aligned time coding and an auxiliary transition scheme are carefully devised for batched training on unaligned sequences. Our model can be trained effectively using stochastic variational inference and generates probabilistic predictions with Monte-Carlo simulation. Furthermore, our model produces accurate, sharp and more realistic probabilistic forecasts. We also show that modeling asynchronous event sequences is crucial for multi-horizon time-series forecasting.

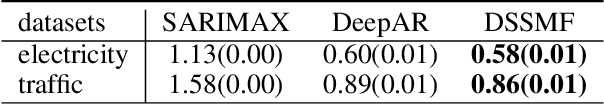

Learning Interpretable Deep State Space Model for Probabilistic Time Series Forecasting

Jan 31, 2021

Abstract: Probabilistic time series forecasting involves estimating the distribution of future values of a series based on its history, which is essential for risk management in downstream decision-making. We propose a deep state space model for probabilistic time series forecasting whereby the non-linear emission model and transition model are parameterized by networks and the dependency is modeled by recurrent neural nets. We take the automatic relevance determination (ARD) view and devise a network to exploit the exogenous variables in addition to time series. In particular, our ARD network can incorporate the uncertainty of the exogenous variables and eventually helps identify useful exogenous variables and suppress those irrelevant for forecasting. The distribution of multi-step ahead forecasts is approximated by Monte Carlo simulation. We show in experiments that our model produces accurate and sharp probabilistic forecasts. The estimated uncertainty of our forecasting also realistically increases over time, in a spontaneous manner.
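The step of approximating multi-step-ahead forecast distributions by Monte Carlo simulation can be sketched with a toy state space model. The sketch assumes a random-walk transition and a Gaussian emission in place of the paper's network-parameterized models; `simulate_paths`, `forecast_quantiles`, and all parameter values are hypothetical.

```python
import random
import statistics


def simulate_paths(last_state, horizon, n_paths,
                   trans_std=0.5, emit_std=0.1, seed=0):
    """Draw sample trajectories by rolling a toy state space model forward.

    Assumption: random-walk transition plus Gaussian emission noise, used
    only as a stand-in for learned transition and emission models.
    """
    rng = random.Random(seed)
    paths = []
    for _ in range(n_paths):
        z = last_state
        path = []
        for _ in range(horizon):
            z = z + rng.gauss(0.0, trans_std)          # toy transition
            path.append(z + rng.gauss(0.0, emit_std))  # toy emission
        paths.append(path)
    return paths


def forecast_quantiles(paths, q_low=0.1, q_high=0.9):
    """Empirical (low, median, high) summary of the samples at each step."""
    summary = []
    for t in range(len(paths[0])):
        vals = sorted(p[t] for p in paths)
        lo = vals[int(q_low * (len(vals) - 1))]
        hi = vals[int(q_high * (len(vals) - 1))]
        summary.append((lo, statistics.median(vals), hi))
    return summary


if __name__ == "__main__":
    samples = simulate_paths(last_state=0.0, horizon=5, n_paths=2000)
    for step, (lo, med, hi) in enumerate(forecast_quantiles(samples), start=1):
        print(f"step {step}: 10%={lo:.2f} median={med:.2f} 90%={hi:.2f}")
```

In this toy setting the interval between the low and high quantiles widens with the forecast step because transition noise accumulates along each sampled path, which mirrors the abstract's remark that estimated uncertainty increases over time.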

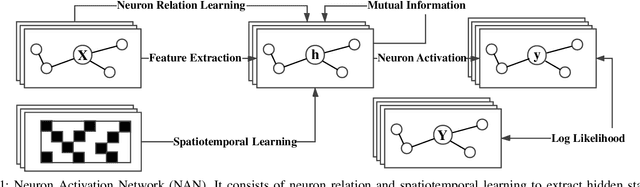

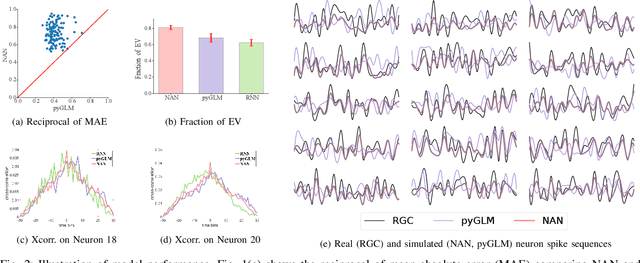

Decoding Spiking Mechanism with Dynamic Learning on Neuron Population

Nov 21, 2019

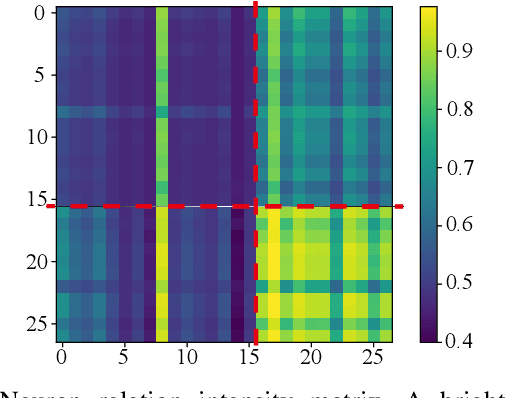

Abstract: A main concern in cognitive neuroscience is to decode overt neural spike train observations and infer the latent representations underlying neural circuits. However, traditional methods entail strong priors on network structure and hardly meet the demands of real spike data. Here we propose a novel neural network approach called Neuron Activation Network that extracts neural information explicitly from single-trial neuron population spike trains. Our proposed method consists of a spatiotemporal learning procedure on the sensory environment and a message passing mechanism on the population graph, followed by a neuron activation process in a recursive fashion. Our model aims to reconstruct neuron information while inferring representations of neuron spiking states. We apply our model to retinal ganglion cells, and the experimental results suggest that our model is more capable of generating neural spike sequences with high fidelity than state-of-the-art methods, while also being more expressive and having the potential to disclose latent spiking mechanisms. The source code will be released with the final paper.
