Lixuan Che

Deep Rib Fracture Instance Segmentation and Classification from CT on the RibFrac Challenge

Feb 14, 2024

Abstract: Rib fractures are a common and potentially severe injury that can be challenging and labor-intensive to detect in CT scans. While there have been efforts to address this field, the lack of large-scale annotated datasets and evaluation benchmarks has hindered the development and validation of deep learning algorithms. To address this issue, the RibFrac Challenge was introduced, providing a benchmark dataset of over 5,000 rib fractures from 660 CT scans, with voxel-level instance mask annotations and diagnosis labels for four clinical categories (buckle, nondisplaced, displaced, or segmental). The challenge includes two tracks: a detection (instance segmentation) track evaluated by an FROC-style metric and a classification track evaluated by an F1-style metric. During the MICCAI 2020 challenge period, 243 results were evaluated, and seven teams were invited to participate in the challenge summary. The analysis revealed that several top rib fracture detection solutions achieved performance comparable to or even better than that of human experts. Nevertheless, the current rib fracture classification solutions are hardly clinically applicable, which remains an interesting area for future work. As an active benchmark and research resource, the data and online evaluation of the RibFrac Challenge are available at the challenge website. As an independent contribution, we have also extended our previous internal baseline by incorporating recent advancements in large-scale pretrained networks and point-based rib segmentation techniques. The resulting FracNet+ demonstrates competitive performance in rib fracture detection, which lays a foundation for further research and development in AI-assisted rib fracture detection and diagnosis.

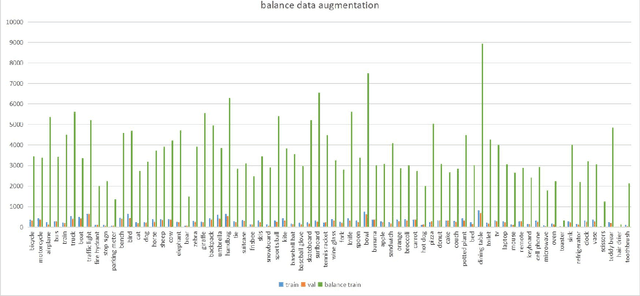

VIPriors Object Detection Challenge

Jul 16, 2020

Abstract: This paper is a brief report on our submission to the VIPriors Object Detection Challenge. Object detection has attracted many researchers' attention for its wide range of applications, but it remains a challenging task. In this paper, we analyze the characteristics of the data and propose an effective data augmentation method. We carefully choose a model that is better suited to training from scratch. We also benefit considerably from Soft-NMS and model fusion.
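The abstract names Soft-NMS but gives no configuration, so the following is only a minimal sketch of the general Gaussian Soft-NMS idea, not the submission's implementation. The function names, the `sigma` value, and the `score_threshold` value are all assumptions chosen for illustration. Instead of discarding boxes that overlap a higher-scoring box, it decays their scores according to the overlap.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS (illustrative; sigma and threshold are assumed values).

    Returns a list of (box_index, rescored_value) in selection order.
    """
    remaining = [(i, float(s)) for i, s in enumerate(scores)]
    kept = []
    while remaining:
        # Select the box with the highest current score.
        best_pos = max(range(len(remaining)), key=lambda p: remaining[p][1])
        best_idx, best_score = remaining.pop(best_pos)
        kept.append((best_idx, best_score))
        # Decay the scores of the other boxes by their overlap with it.
        decayed = []
        for idx, score in remaining:
            overlap = iou(boxes[best_idx], boxes[idx])
            new_score = score * math.exp(-(overlap ** 2) / sigma)
            if new_score >= score_threshold:
                decayed.append((idx, new_score))
        remaining = decayed
    return kept


if __name__ == "__main__":
    demo_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    demo_scores = [0.9, 0.8, 0.7]
    for index, score in soft_nms(demo_boxes, demo_scores):
        print(index, round(score, 3))
```

In the demo, the second box overlaps the first heavily, so its score is reduced and it is ranked after the non-overlapping third box rather than being removed outright.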

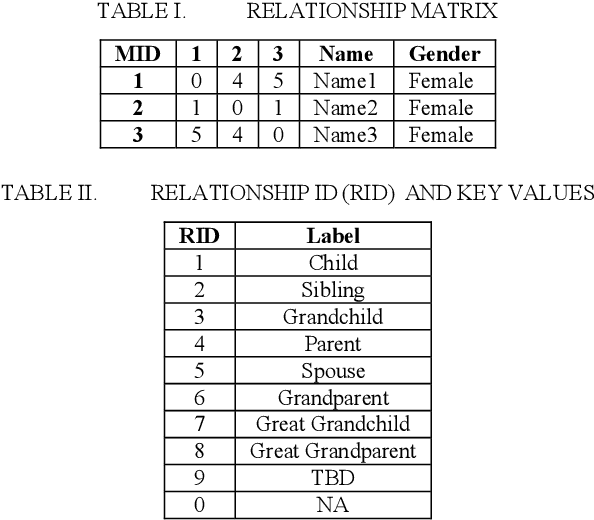

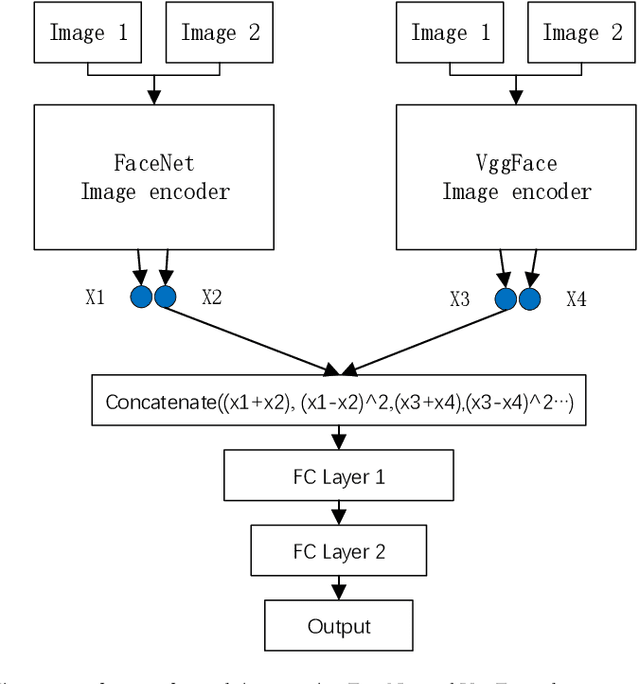

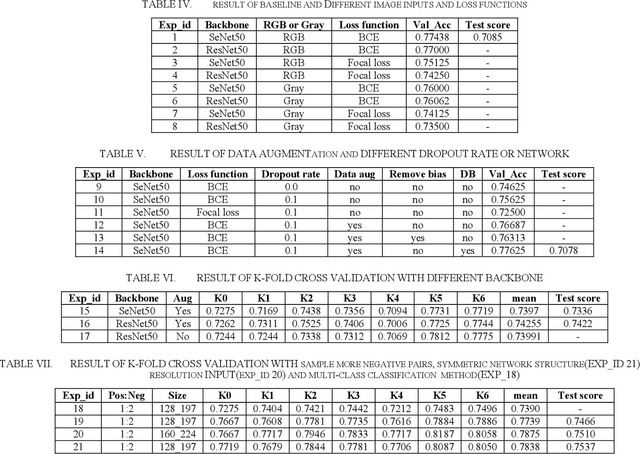

Challenge report: Recognizing Families In the Wild Data Challenge

May 30, 2020

Abstract: This paper is a brief report on our submission to the Recognizing Families In the Wild Data Challenge (4th Edition), in conjunction with FG 2020 Forum. Automatic kinship recognition has attracted many researchers' attention for its wide range of applications, but it remains a very challenging task because of the limited information available for determining whether a pair of faces are blood relatives. In this paper, we study previous methods and propose our own. We try several approaches, such as deep metric learning, to extract a deep embedding feature for every image, and then determine whether two faces are blood relatives using Euclidean distance or a class-based method. We also find that tricks such as sampling more negative samples and using higher-resolution images improve performance. Moreover, we propose a symmetric network with a binary-classification-based method, which achieves our best score on all tasks.
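The distance-based decision described in the abstract can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' pipeline: the embeddings are stand-in vectors rather than network outputs, and `l2_normalize`, `is_kin`, and the `threshold` value are hypothetical names and settings. In practice the threshold would be tuned on a validation set.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def is_kin(embedding_a, embedding_b, threshold=1.0):
    """Predict kinship when the normalized embeddings are closer than `threshold`.

    The threshold of 1.0 is an assumed placeholder value.
    Returns (prediction, distance).
    """
    distance = euclidean_distance(l2_normalize(embedding_a), l2_normalize(embedding_b))
    return distance < threshold, distance


if __name__ == "__main__":
    # Stand-in embeddings; a real system would take these from a face network.
    print(is_kin([1.0, 0.0], [1.0, 0.1]))
    print(is_kin([1.0, 0.0], [-1.0, 0.0]))
```

Because the embeddings are normalized to unit length, the distance always lies between 0 and 2, which keeps a single threshold meaningful across image pairs.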
