Lisen Mu

LiloDriver: A Lifelong Learning Framework for Closed-loop Motion Planning in Long-tail Autonomous Driving Scenarios

May 22, 2025

Abstract: Recent advances in autonomous driving research have moved towards motion planners that are robust, safe, and adaptive. However, existing rule-based and data-driven planners lack adaptability to long-tail scenarios, while knowledge-driven methods offer strong reasoning but face challenges in representation, control, and real-world evaluation. To address these challenges, we present LiloDriver, a lifelong learning framework for closed-loop motion planning in long-tail autonomous driving scenarios. By integrating large language models (LLMs) with a memory-augmented planner generation system, LiloDriver continuously adapts to new scenarios without retraining. It features a four-stage architecture comprising perception, scene encoding, memory-based strategy refinement, and LLM-guided reasoning. Evaluated on the nuPlan benchmark, LiloDriver achieves superior performance in both common and rare driving scenarios, outperforming static rule-based and learning-based planners. Our results highlight the effectiveness of combining structured memory and LLM reasoning to enable scalable, human-like motion planning in real-world autonomous driving. Our code is available at https://github.com/Hyan-Yao/LiloDriver.

Drive in Corridors: Enhancing the Safety of End-to-end Autonomous Driving via Corridor Learning and Planning

Apr 10, 2025

Abstract: Safety remains one of the most critical challenges in autonomous driving systems. In recent years, end-to-end driving has shown great promise in advancing vehicle autonomy in a scalable manner. However, existing approaches often face safety risks due to the lack of explicit behavior constraints. To address this issue, we propose a new paradigm that introduces the corridor as an intermediate representation. Widely adopted in robotics planning, corridors represent spatio-temporal obstacle-free zones for the vehicle to traverse. To ensure accurate corridor prediction in diverse traffic scenarios, we develop a comprehensive learning pipeline including data annotation, architecture refinement, and loss formulation. The predicted corridor is then integrated as a constraint in a trajectory optimization process. By extending the differentiability of the optimization, we enable the optimized trajectory to be trained seamlessly within the end-to-end learning framework, improving both safety and interpretability. Experimental results on the nuScenes dataset demonstrate state-of-the-art performance of our approach, showing a 66.7% reduction in collisions with agents and a 46.5% reduction in collisions with curbs, significantly enhancing the safety of end-to-end driving. Additionally, incorporating the corridor contributes to higher success rates in closed-loop evaluations.
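To make the idea of a corridor acting as a constraint more concrete, here is a deliberately simplified Python sketch. It assumes the corridor is given as one `(lower, upper)` lateral bound per time step and simply clamps each waypoint into its bound. This is not the paper's differentiable trajectory optimization; the function name `project_into_corridor` and the data layout are hypothetical and chosen only for illustration.

```python
def project_into_corridor(lateral_offsets, corridor):
    """Clamp each waypoint's lateral offset into its per-step corridor bound.

    lateral_offsets: list of floats, one lateral position per time step.
    corridor: list of (lower, upper) bounds, one pair per time step.
    Both layouts are illustrative assumptions, not the paper's format.
    """
    if len(lateral_offsets) != len(corridor):
        raise ValueError("need exactly one corridor bound per waypoint")

    projected = []
    for offset, (lower, upper) in zip(lateral_offsets, corridor):
        if lower > upper:
            raise ValueError("corridor lower bound exceeds upper bound")
        # Projection onto an interval: values inside are left unchanged,
        # values outside move to the nearest boundary.
        projected.append(min(max(offset, lower), upper))
    return projected


# A three-step trajectory whose last two waypoints leave the corridor.
trajectory = [0.0, 1.5, -2.0]
bounds = [(-1.0, 1.0), (-1.0, 1.0), (-0.5, 0.5)]
safe_trajectory = project_into_corridor(trajectory, bounds)
```

A real planner would trade off this constraint against smoothness and progress terms inside an optimizer rather than clamping waypoints independently.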

Reinforced Evolutionary Neural Architecture Search

Sep 07, 2018

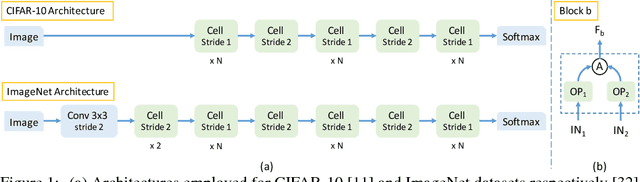

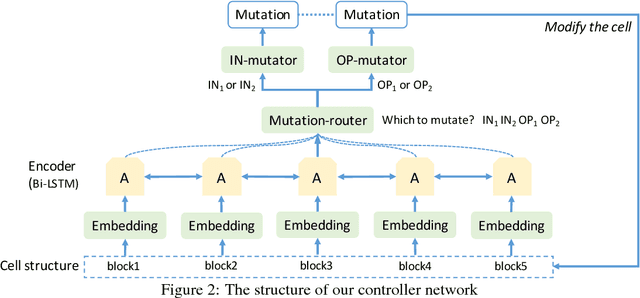

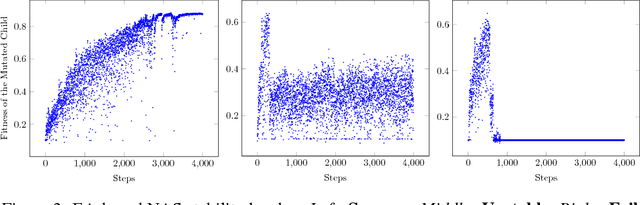

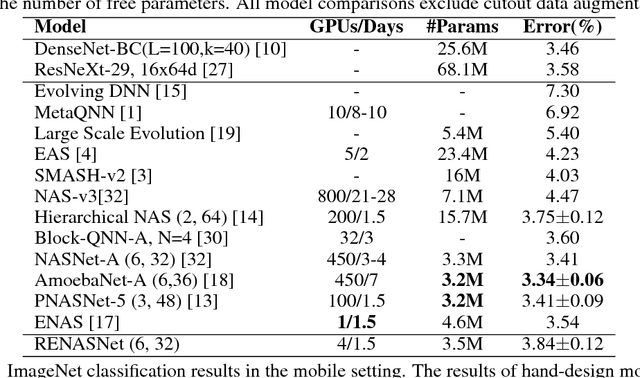

Abstract: Neural architecture search (NAS) is an important yet challenging task in network design due to its high computational consumption and low stability. To address these two issues, we propose Reinforced Evolutionary Neural Architecture Search (RENAS), an evolutionary method with reinforced mutation for NAS. Our method integrates reinforced mutation into an evolutionary algorithm for neural architecture exploration, in which a mutation controller learns the effects of slight modifications and chooses mutation actions. The reinforced mutation controller guides the model population to evolve efficiently in a suitable direction. Furthermore, because child models can inherit parameters from their parents during evolution, our method requires very limited computational resources. We run the proposed search method on CIFAR-10 with 4 GPUs (Titan Xp) for 1.5 days and discover a powerful network architecture. This architecture achieves a competitive result on CIFAR-10. We further apply the discovered network architecture to the ImageNet mobile setting. The network achieves a new state-of-the-art accuracy, i.e., 75.7% top-1 accuracy with 5.36M parameters.

Elements of Effective Deep Reinforcement Learning towards Tactical Driving Decision Making

Feb 01, 2018

Abstract: Tactical driving decision making is crucial for autonomous driving systems and has attracted considerable interest in recent years. In this paper, we propose several practical components that can speed up deep reinforcement learning algorithms for tactical decision making tasks: 1) non-uniform action skipping as a more stable alternative to action-repetition frame skipping, 2) a counter-based penalty for lanes on which the ego vehicle has less right-of-road, and 3) heuristic inference-time action masking for apparently undesirable actions. We evaluate the proposed components in a realistic driving simulator and compare them with several baselines. Results show that the proposed scheme provides superior performance in terms of safety, efficiency, and comfort.
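Inference-time action masking, the third component listed above, can be sketched in a few lines of Python. The version below assumes a value-based agent that outputs one score per discrete action and a boolean mask produced by some heuristic. The action names, the function `masked_greedy_action`, and the example values are hypothetical and are not taken from the paper.

```python
import math


def masked_greedy_action(q_values, allowed):
    """Return the index of the best-scoring action among the allowed ones.

    q_values: list of floats, one estimated value per action.
    allowed: list of bools, False for actions a heuristic rules out.
    """
    if len(q_values) != len(allowed):
        raise ValueError("need exactly one mask entry per action")
    if not any(allowed):
        raise ValueError("at least one action must be allowed")

    # Masked actions get a score of -inf so they can never win the argmax.
    masked = [q if ok else -math.inf for q, ok in zip(q_values, allowed)]
    return max(range(len(masked)), key=masked.__getitem__)


# Hypothetical tactical action set and scores.
ACTIONS = ["keep_lane", "change_left", "change_right"]
q = [0.2, 0.9, 0.5]
mask = [True, False, True]  # e.g. the heuristic forbids a left lane change
chosen = ACTIONS[masked_greedy_action(q, mask)]
```

Because the mask is applied only when selecting an action, the learned value estimates themselves are left untouched.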
