Lihua Dou

School of Automation, Beijing Institute of Technology, Beijing, China

SyreaNet: A Physically Guided Underwater Image Enhancement Framework Integrating Synthetic and Real Images

Feb 16, 2023

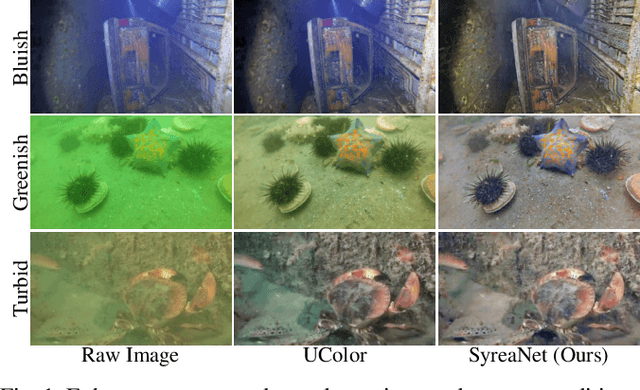

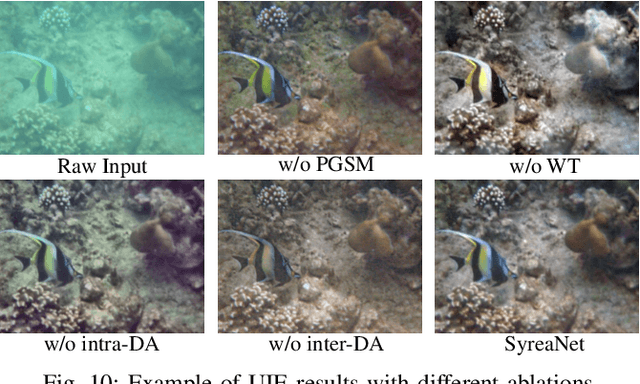

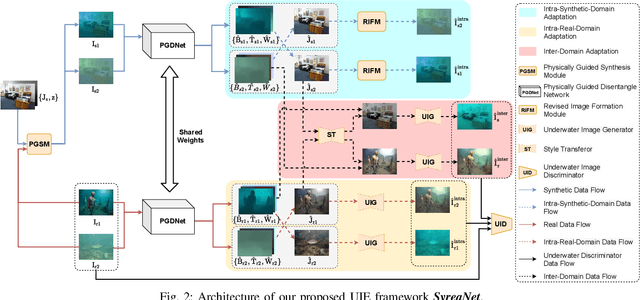

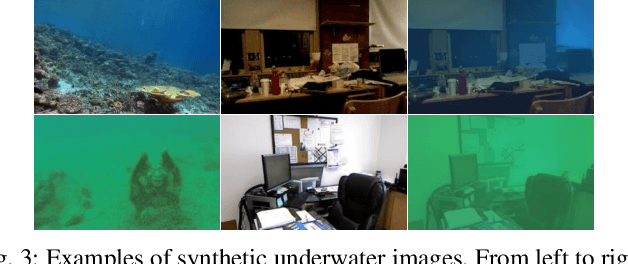

Abstract: Underwater image enhancement (UIE) is vital for high-level vision-related underwater tasks. Although learning-based UIE methods have made remarkable progress in recent years, they still struggle to handle varied underwater conditions consistently, for two likely reasons: 1) the simplified atmospheric image formation model used in UIE may introduce severe errors; 2) a network trained solely on synthetic images may generalize poorly to real underwater images. In this work, we propose, for the first time, SyreaNet, a UIE framework that integrates both synthetic and real data under the guidance of the revised underwater image formation model and novel domain adaptation (DA) strategies. First, an underwater image synthesis module based on the revised model is proposed. Then, a physically guided disentangled network is designed to predict clear images by combining both synthetic and real underwater images. The intra- and inter-domain gaps are bridged by fully exchanging domain knowledge. Extensive experiments demonstrate the superiority of our framework over other state-of-the-art (SOTA) learning-based UIE methods, both qualitatively and quantitatively. The code and dataset are publicly available at https://github.com/RockWenJJ/SyreaNet.git.
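The synthesis step mentioned in the abstract can be illustrated with a small sketch. It assumes the commonly cited form of the revised underwater image formation model, in which the direct signal and the backscatter have separate attenuation coefficients: I_c = J_c * exp(-beta_D_c * z) + B_inf_c * (1 - exp(-beta_B_c * z)). The abstract does not spell out the exact parameterization used by SyreaNet, so the function name, the argument names, and the coefficient values below are hypothetical and chosen only for illustration.

```python
import math


def synthesize_underwater_pixel(clear_rgb, depth_m, beta_direct,
                                beta_backscatter, veiling_light):
    """Degrade one clear RGB pixel (values in [0, 1]) at a given range.

    Assumed per-channel model (not taken from the abstract):
        I_c = J_c * exp(-beta_D_c * z) + B_inf_c * (1 - exp(-beta_B_c * z))
    """
    degraded = []
    for j, b_d, b_b, b_inf in zip(clear_rgb, beta_direct,
                                  beta_backscatter, veiling_light):
        # Direct signal: scene radiance attenuated along the path.
        direct = j * math.exp(-b_d * depth_m)
        # Backscatter: veiling light that builds up with distance.
        backscatter = b_inf * (1.0 - math.exp(-b_b * depth_m))
        # Clamp to the valid intensity range.
        degraded.append(min(1.0, max(0.0, direct + backscatter)))
    return tuple(degraded)


# Illustrative (made-up) coefficients: red attenuates fastest.
BETA_D = (0.40, 0.10, 0.08)
BETA_B = (0.30, 0.15, 0.10)
B_INF = (0.10, 0.50, 0.60)

if __name__ == "__main__":
    print(synthesize_underwater_pixel((0.8, 0.6, 0.4), 5.0,
                                      BETA_D, BETA_B, B_INF))
```

At zero range the pixel is unchanged, and at very large range it converges to the veiling light, which is the qualitative behavior a synthesis module built on such a model would need to reproduce.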

TJ-FlyingFish: Design and Implementation of an Aerial-Aquatic Quadrotor with Tiltable Propulsion Units

Feb 07, 2023

Abstract: Aerial-aquatic vehicles are capable of moving in the two most dominant fluids, which makes them promising for a wide range of applications. We propose a prototype with special designs for propulsion and thruster configuration to cope with the vast differences in the fluid properties of water and air. For propulsion, a dual-speed propulsion unit switches the operating range between the two media, providing sufficient thrust while maintaining output efficiency. For thruster configuration, thrust vectoring is realized by rotating the propulsion unit around the mount arm, which enhances underwater maneuverability. This paper presents a quadrotor prototype of this concept, along with its design details and practical realization.

Image classification based on support vector machine and the fusion of complementary features

Nov 05, 2015

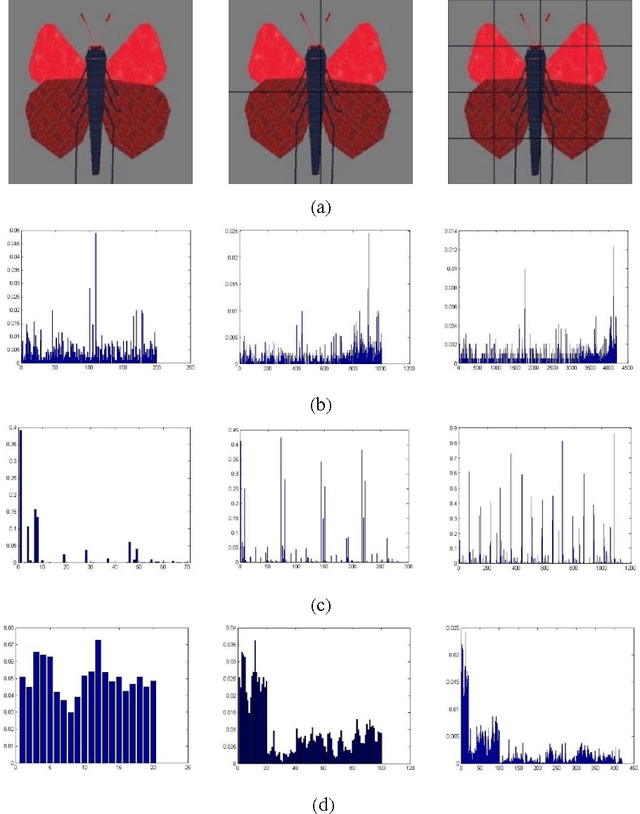

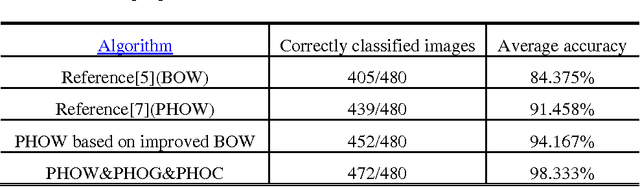

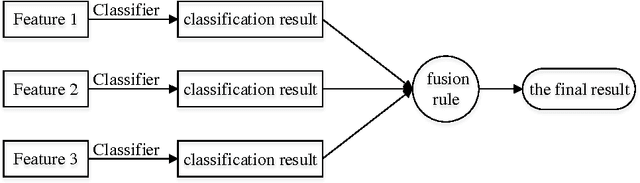

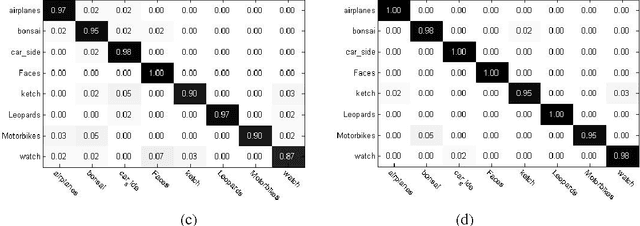

Abstract: Image classification based on the bag-of-words (BOW) model has broad application prospects in pattern recognition, but it suffers from low classification accuracy because it relies on a single feature. To address this, we combine three ingredients: (i) Three mutually complementary features are adopted to describe the images: PHOW (Pyramid Histogram of Words), PHOC (Pyramid Histogram of Color), and PHOG (Pyramid Histogram of Oriented Gradients). (ii) The traditional BOW model is improved by using dense sampling and an improved K-means clustering method to construct the visual dictionary. (iii) An image categorization algorithm with adaptive feature-weight adjustment, based on the SVM and the fusion of multiple features, is adopted. Experiments on the Caltech 101 database confirm the validity of the proposed approach. The experimental results show that the classification accuracy of the proposed method is 7%-17% higher than that of traditional BOW methods. The algorithm makes full use of global, local, and spatial information and significantly improves classification accuracy.
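The feature-fusion idea in ingredient (iii) can be sketched as a weighted sum of per-feature kernels, which could then be passed to an SVM that accepts a precomputed kernel. The abstract does not state how the adaptive weights are computed, so the rule below (weights proportional to each feature's stand-alone validation accuracy) is an assumption, as are the histogram-intersection kernel and all function names.

```python
def histogram_intersection(h1, h2):
    """Histogram-intersection similarity between two histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def accuracy_weights(accuracies):
    """Hypothetical weighting rule: normalize per-feature accuracies to sum to 1."""
    total = sum(accuracies)
    return [a / total for a in accuracies]


def fused_kernel(features_a, features_b, weights):
    """Weighted sum of per-feature kernels for two images.

    features_a / features_b: one histogram per feature type,
    e.g. [PHOW, PHOC, PHOG], each L1-normalized.
    """
    return sum(
        w * histogram_intersection(fa, fb)
        for w, fa, fb in zip(weights, features_a, features_b)
    )


if __name__ == "__main__":
    # Toy L1-normalized histograms for two images (made-up values).
    image_a = [[0.5, 0.5], [0.2, 0.8], [1.0, 0.0]]
    image_b = [[0.4, 0.6], [0.7, 0.3], [0.5, 0.5]]
    # Made-up stand-alone validation accuracies for PHOW, PHOC, PHOG.
    weights = accuracy_weights([0.6, 0.5, 0.4])
    print(fused_kernel(image_a, image_b, weights))
```

Because a non-negative weighted sum of valid kernels is itself a valid kernel, the fused similarity can be used wherever a single-feature kernel would be.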
