Li Ke

Generative Video Matting

Aug 11, 2025

Abstract: Video matting has traditionally been limited by the lack of high-quality ground-truth data. Most existing video matting datasets provide only imperfect human-annotated alpha and foreground annotations, which must be composited onto background images or videos during training. As a result, previous methods typically generalize poorly to real-world scenarios. In this work, we propose to solve the problem from two perspectives. First, we emphasize the importance of large-scale pre-training on diverse synthetic and pseudo-labeled segmentation datasets. We also develop a scalable synthetic data generation pipeline that can render diverse human bodies and fine-grained hair, yielding around 200 video clips of 3 seconds each for fine-tuning. Second, we introduce a novel video matting approach that effectively leverages the rich priors of pre-trained video diffusion models. This architecture offers two key advantages. First, strong priors play a critical role in bridging the domain gap between synthetic and real-world scenes. Second, unlike most existing methods, which process video frame by frame and use an independent decoder to aggregate temporal information, our model is inherently designed for video, ensuring strong temporal consistency. We provide a comprehensive quantitative evaluation on three benchmark datasets, demonstrating our approach's superior performance, and present extensive qualitative results in diverse real-world scenes, illustrating the strong generalization capability of our method. The code is available at https://github.com/aim-uofa/GVM.
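The compositing step mentioned in this abstract is conventionally the standard matting equation, I = αF + (1 − α)B, applied per pixel. The following is a minimal sketch of that equation only, assuming values in [0, 1]; the function name `composite` and the nested-list frame layout are illustrative and are not taken from the GVM code.

```python
def composite(alpha, foreground, background):
    """Blend one frame per pixel: I = alpha * F + (1 - alpha) * B.

    All three arguments are hypothetical H x W nested lists of floats
    in [0, 1]; a real pipeline would use image tensors instead.
    """
    return [
        [a * f + (1.0 - a) * b for a, f, b in zip(a_row, f_row, b_row)]
        for a_row, f_row, b_row in zip(alpha, foreground, background)
    ]


# One row of three pixels: fully foreground, fully background, half-transparent.
frame = composite([[1.0, 0.0, 0.5]], [[0.8, 0.8, 0.8]], [[0.2, 0.2, 0.2]])
```

Where alpha is 1 the foreground is kept, where it is 0 the background shows through, and fractional values (hair strands, motion blur) mix the two.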

Score-based Generative Modeling for Conditional Independence Testing

May 29, 2025

Abstract: Determining conditional independence (CI) relationships between random variables is a fundamental yet challenging task in machine learning and statistics, especially in high-dimensional settings. Existing generative model-based CI testing methods, such as those using generative adversarial networks (GANs), often suffer from inaccurate modeling of conditional distributions and from training instability, resulting in subpar performance. To address these issues, we propose a novel CI testing method based on score-based generative modeling, which achieves precise Type I error control and strong testing power. Concretely, we first employ a sliced conditional score matching scheme to accurately estimate the conditional score and use Langevin dynamics conditional sampling to generate samples under the null hypothesis, ensuring precise Type I error control. We then incorporate a goodness-of-fit stage to verify the generated samples and enhance interpretability in practice. We theoretically establish an error bound for conditional distributions modeled by score-based generative models and prove the validity of our CI tests. Extensive experiments on both synthetic and real-world datasets show that our method significantly outperforms existing state-of-the-art methods, providing a promising way to revitalize generative model-based CI testing.
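To illustrate the Langevin dynamics conditional sampling step named in this abstract, here is a minimal sketch. It does not reproduce the paper's method: the learned score network is swapped for the known analytic score of a toy model, Y | X = x ~ N(slope · x, sigma²), and the function names, step size, and parameter values are all assumptions chosen for illustration.

```python
import math
import random


def conditional_score(y, x, slope=2.0, sigma=0.5):
    """Gradient w.r.t. y of log p(y | x) for the toy model N(slope * x, sigma^2)."""
    return -(y - slope * x) / sigma**2


def langevin_sample(x, n_steps=300, step=0.01, rng=None):
    """Draw one approximate sample of Y | X = x with unadjusted Langevin dynamics."""
    if rng is None:
        rng = random.Random(0)
    y = rng.gauss(0.0, 1.0)  # arbitrary starting point
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        # Drift along the score, then add Gaussian noise scaled by sqrt(step).
        y = y + 0.5 * step * conditional_score(y, x) + math.sqrt(step) * noise
    return y


rng = random.Random(0)
samples = [langevin_sample(1.0, rng=rng) for _ in range(1000)]
sample_mean = sum(samples) / len(samples)
```

With a small step size the samples concentrate around the conditional mean (here slope · x = 2.0). In a CI test, samples generated this way would stand in for draws under the null hypothesis.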

Lightweight Multiscale Feature Fusion Super-Resolution Network Based on Two-branch Convolution and Transformer

Sep 10, 2024

Abstract: Deep-learning-based single image super-resolution (SISR) algorithms currently fall into two main families: those based on convolutional neural networks and those based on the Transformer. The former stack convolutional layers with different kernel sizes, which lets the model better extract local image features; the latter use the self-attention mechanism to establish long-range dependencies between image pixels and thereby better extract global image features. However, each approach has its own problems. This paper therefore proposes a new lightweight multi-scale feature fusion network with two complementary branches, one convolutional and one Transformer, which integrates the respective strengths of the two architectures to fuse global and local information. In addition, because deep neural networks lose part of the information in low-resolution images during training, this paper designs a modular multi-stage feature supplementation connection that fuses feature maps extracted in the shallow stages of the model with those extracted in the deep stages. This minimizes the loss of information that is useful for image restoration and helps produce a higher-quality restored image. Experimental results show that the proposed model achieves the best image restoration performance among lightweight models with the same number of parameters.

Compare learning: bi-attention network for few-shot learning

Mar 25, 2022

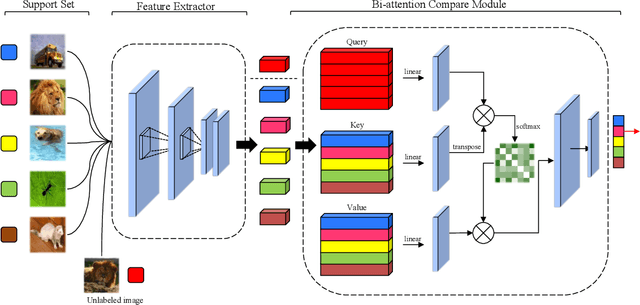

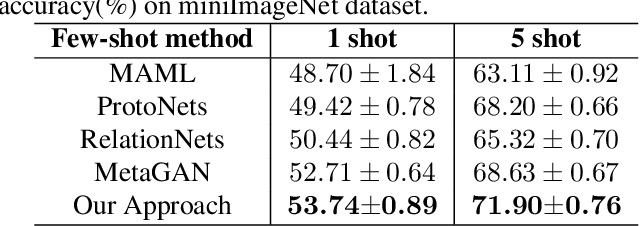

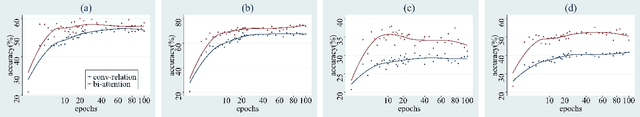

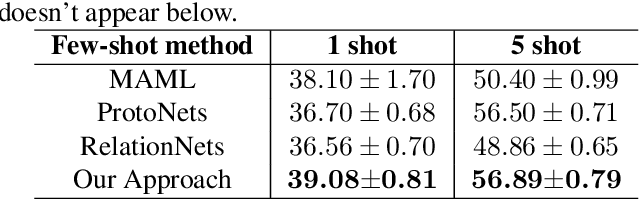

Abstract: Learning from few labeled examples is a key challenge for visual recognition, as deep neural networks tend to overfit when trained on only a few samples. Metric learning, one family of few-shot learning methods, addresses this challenge by first learning a deep distance metric that determines whether a pair of images belongs to the same category, then applying the trained metric to instances from a separate test set with limited labels. This approach makes the most of the few available samples and effectively limits overfitting. However, existing metric networks usually employ linear classifiers or convolutional neural networks (CNNs), which are not precise enough to capture the subtle differences between vectors globally. In this paper, we propose a novel approach, the Bi-attention network, for comparing instances; it measures the similarity between instance embeddings precisely, globally and efficiently. We verify the effectiveness of our model on two benchmarks. Experiments show that our approach achieves improved accuracy and convergence speed over baseline models.

Extracting PICO elements from RCT abstracts using 1-2gram analysis and multitask classification

Jan 24, 2019

Abstract: The core of evidence-based medicine is to read and analyze numerous papers in the medical literature on a specific clinical problem and summarize the authoritative answers to that problem. Currently, to formulate a clear and focused clinical problem, the popular PICO framework is usually adopted, in which each clinical problem is considered to consist of four parts: patient/problem (P), intervention (I), comparison (C) and outcome (O). In this study, we compared several classification models that are commonly used in traditional machine learning. Next, we developed a multitask classification model based on a soft-margin SVM with a specialized feature engineering method that combines 1-2gram analysis with TF-IDF analysis. Finally, we trained and tested several generic models on an open-source data set from BioNLP 2018. The results show that the proposed multitask SVM classification model based on 1-2gram TF-IDF features exhibits the best performance among the tested models.
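As a rough illustration of what "1-2gram TF-IDF features" can look like, the sketch below builds unigram and bigram tokens and weights them with the textbook TF-IDF formula (term frequency times log(N / document frequency)). The whitespace tokenizer, the function names, and the exact weighting variant are assumptions, not details from the paper, and the multitask SVM classifier itself is not shown.

```python
import math
from collections import Counter


def one_two_grams(text):
    """Return lowercase unigrams followed by bigrams of a whitespace-tokenized text."""
    tokens = text.lower().split()
    bigrams = [" ".join(pair) for pair in zip(tokens, tokens[1:])]
    return tokens + bigrams


def tfidf_vectors(documents):
    """Map each document to a sparse {gram: tf * idf} dictionary."""
    grams_per_doc = [one_two_grams(doc) for doc in documents]
    n_docs = len(documents)
    # Document frequency: in how many documents each gram occurs at least once.
    doc_freq = Counter(g for grams in grams_per_doc for g in set(grams))
    vectors = []
    for grams in grams_per_doc:
        counts = Counter(grams)
        total = len(grams)
        vectors.append({
            g: (c / total) * math.log(n_docs / doc_freq[g])
            for g, c in counts.items()
        })
    return vectors


vectors = tfidf_vectors([
    "patients received aspirin",
    "patients received placebo",
])
```

Grams shared by every sentence (such as "patients received") get weight zero under this variant, while distinguishing grams (such as "received aspirin") get positive weight. The resulting sparse vectors are the kind of input a linear classifier would consume.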
