Lexing Ying

Solving Inverse Problems via Diffusion-Based Priors: An Approximation-Free Ensemble Sampling Approach

Jun 05, 2025

Abstract: Diffusion models (DMs) have proven to be effective in modeling high-dimensional distributions, leading to their widespread adoption for representing complex priors in Bayesian inverse problems (BIPs). However, current DM-based posterior sampling methods proposed for solving common BIPs rely on heuristic approximations to the generative process. To exploit the generative capability of DMs, we propose an ensemble-based algorithm that performs posterior sampling without such heuristic approximations. Our algorithm is motivated by existing works that combine DM-based methods with the sequential Monte Carlo (SMC) method. By examining how the prior evolves through the diffusion process encoded by the pre-trained score function, we derive a modified partial differential equation (PDE) governing the evolution of the corresponding posterior distribution. This PDE includes a modified diffusion term and a reweighting term, which can be simulated via stochastic weighted particle methods. Theoretically, we prove that the error relative to the true posterior distribution can be bounded in terms of the training error of the pre-trained score function and the number of particles in the ensemble. Empirically, we validate our algorithm on several inverse problems in imaging to show that our method gives more accurate reconstructions compared to existing DM-based methods.

A Unified Approach to Analysis and Design of Denoising Markov Models

Apr 02, 2025

Abstract: Probabilistic generative models based on measure transport, such as diffusion and flow-based models, are often formulated in the language of Markovian stochastic dynamics, where the choice of the underlying process impacts both algorithmic design choices and theoretical analysis. In this paper, we aim to establish a rigorous mathematical foundation for denoising Markov models, a broad class of generative models that postulate a forward process transitioning from the target distribution to a simple, easy-to-sample distribution, alongside a backward process particularly constructed to enable efficient sampling in the reverse direction. Leveraging deep connections with nonequilibrium statistical mechanics and generalized Doob's $h$-transform, we propose a minimal set of assumptions that ensure: (1) explicit construction of the backward generator, (2) a unified variational objective directly minimizing the measure transport discrepancy, and (3) adaptations of the classical score-matching approach across diverse dynamics. Our framework unifies existing formulations of continuous and discrete diffusion models, identifies the most general form of denoising Markov models under certain regularity assumptions on forward generators, and provides a systematic recipe for designing denoising Markov models driven by arbitrary Lévy-type processes. We illustrate the versatility and practical effectiveness of our approach through novel denoising Markov models employing geometric Brownian motion and jump processes as forward dynamics, highlighting the framework's potential flexibility and capability in modeling complex distributions.

HyperDPO: Hypernetwork-based Multi-Objective Fine-Tuning Framework

Oct 10, 2024

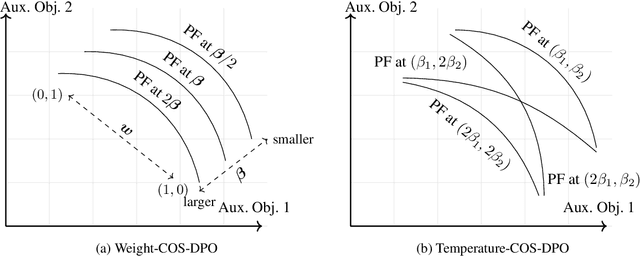

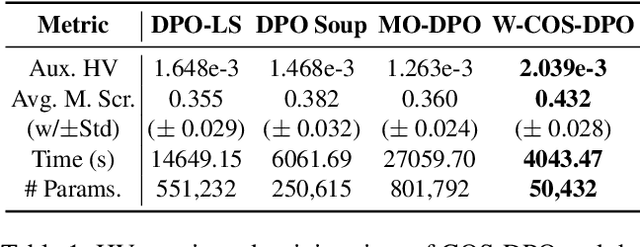

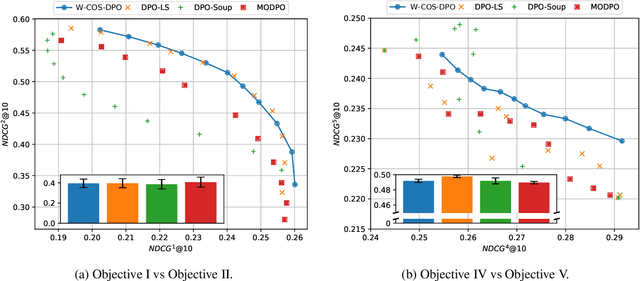

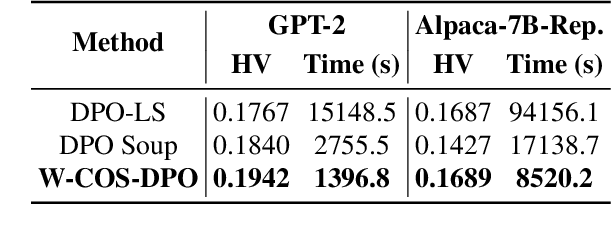

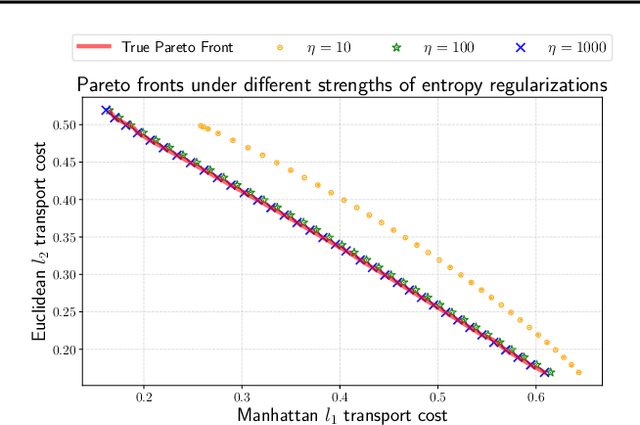

Abstract: In LLM alignment and many other ML applications, one often faces the Multi-Objective Fine-Tuning (MOFT) problem, i.e. fine-tuning an existing model with datasets labeled w.r.t. different objectives simultaneously. To address the challenge, we propose the HyperDPO framework, a hypernetwork-based approach that extends the Direct Preference Optimization (DPO) technique, originally developed for efficient LLM alignment with preference data, to accommodate the MOFT settings. By substituting the Bradley-Terry-Luce model in DPO with the Plackett-Luce model, our framework is capable of handling a wide range of MOFT tasks that involve listwise ranking datasets. Compared with previous approaches, HyperDPO enjoys an efficient one-shot training process for profiling the Pareto front of auxiliary objectives, and offers flexible post-training control over trade-offs. Additionally, we propose a novel Hyper Prompt Tuning design that conveys continuous weight across objectives to transformer-based models without altering their architecture. We demonstrate the effectiveness and efficiency of the HyperDPO framework through its applications to various tasks, including Learning-to-Rank (LTR) and LLM alignment, highlighting its viability for large-scale ML deployments.
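
The substitution of the Bradley-Terry-Luce model by the Plackett-Luce model can be illustrated with a minimal sketch. It is not the HyperDPO implementation: the function names are hypothetical, and `scores` is assumed to be a plain list of numbers standing in for whatever per-item reward the fine-tuned model assigns. The sketch only shows that the listwise Plackett-Luce negative log-likelihood reduces to the pairwise Bradley-Terry loss when the list has two items.

```python
import math


def plackett_luce_nll(scores, ranking):
    """Negative log-likelihood of `ranking` (best item first) under Plackett-Luce.

    scores[i] is an assumed, illustrative score for item i.
    """
    nll = 0.0
    remaining = list(ranking)
    while len(remaining) > 1:
        # Stabilised log-sum-exp over the items that have not been placed yet.
        m = max(scores[i] for i in remaining)
        lse = m + math.log(sum(math.exp(scores[i] - m) for i in remaining))
        # The top remaining item is chosen with probability softmax(scores)[top].
        nll += lse - scores[remaining[0]]
        remaining.pop(0)
    return nll


def bradley_terry_nll(score_win, score_lose):
    """Pairwise loss: -log sigmoid(score_win - score_lose)."""
    return math.log1p(math.exp(-(score_win - score_lose)))
```

For a two-item list, `plackett_luce_nll([1.0, 0.2], [0, 1])` and `bradley_terry_nll(1.0, 0.2)` agree up to floating-point rounding, which is why the listwise model can be read as a generalization of the pairwise one.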

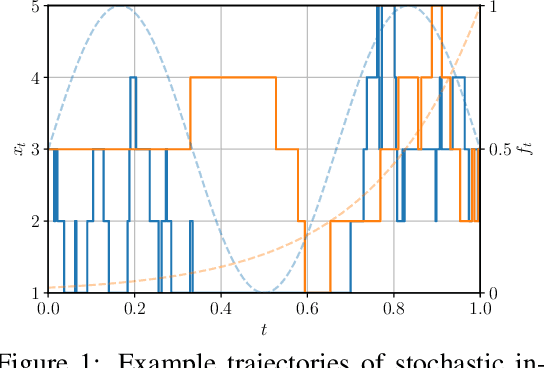

How Discrete and Continuous Diffusion Meet: Comprehensive Analysis of Discrete Diffusion Models via a Stochastic Integral Framework

Oct 04, 2024

Abstract: Discrete diffusion models have gained increasing attention for their ability to model complex distributions with tractable sampling and inference. However, the error analysis for discrete diffusion models remains less well understood. In this work, we propose a comprehensive framework for the error analysis of discrete diffusion models based on Lévy-type stochastic integrals. By generalizing the Poisson random measure to that with a time-independent and state-dependent intensity, we rigorously establish a stochastic integral formulation of discrete diffusion models and provide the corresponding change of measure theorems that are intriguingly analogous to Itô integrals and Girsanov's theorem for their continuous counterparts. Our framework unifies and strengthens the current theoretical results on discrete diffusion models and obtains the first error bound for the $\tau$-leaping scheme in KL divergence. With error sources clearly identified, our analysis gives new insight into the mathematical properties of discrete diffusion models and offers guidance for the design of efficient and accurate algorithms for real-world discrete diffusion model applications.

Tangent differential privacy

Jun 06, 2024

Abstract: Differential privacy is a framework for protecting the identity of individual data points in the decision-making process. In this note, we propose a new form of differential privacy called tangent differential privacy. Compared with the usual differential privacy that is defined uniformly across data distributions, tangent differential privacy is tailored towards a specific data distribution of interest. It also allows for general distribution distances such as total variation distance and Wasserstein distance. In the case of risk minimization, we show that entropic regularization guarantees tangent differential privacy under rather general conditions on the risk function.

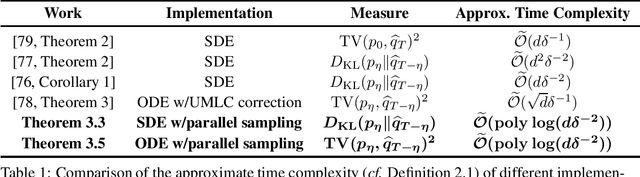

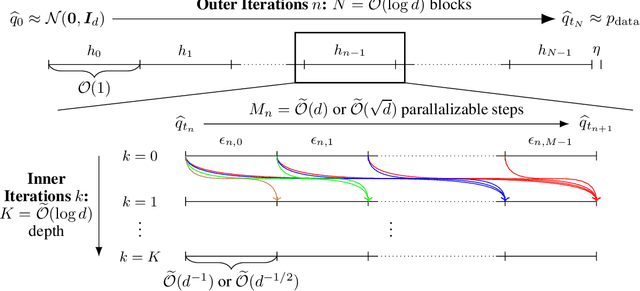

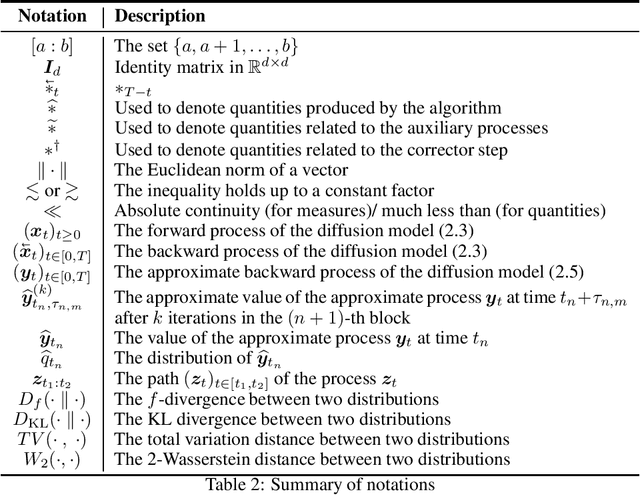

Accelerating Diffusion Models with Parallel Sampling: Inference at Sub-Linear Time Complexity

May 24, 2024

Abstract: Diffusion models have become a leading method for generative modeling of both image and scientific data. As these models are costly to train and evaluate, reducing the inference cost for diffusion models remains a major goal. Inspired by the recent empirical success in accelerating diffusion models via the parallel sampling technique~\cite{shih2024parallel}, we propose to divide the sampling process into $\mathcal{O}(1)$ blocks with parallelizable Picard iterations within each block. Rigorous theoretical analysis reveals that our algorithm achieves $\widetilde{\mathcal{O}}(\mathrm{poly} \log d)$ overall time complexity, marking the first implementation with provable sub-linear complexity w.r.t. the data dimension $d$. Our analysis is based on a generalized version of Girsanov's theorem and is compatible with both the SDE and probability flow ODE implementations. Our results shed light on the potential of fast and efficient sampling of high-dimensional data on fast-evolving modern large-memory GPU clusters.
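
The idea of parallelizable Picard iterations within a block can be sketched on a toy problem. The snippet below is an assumption-laden illustration, not the paper's sampler: it treats a scalar deterministic ODE $x' = f(t, x)$ on an Euler grid, whereas the abstract covers SDE and probability flow ODE samplers, and the name `picard_block` and its parameters are hypothetical. Each sweep evaluates the drift at every grid point from the previous iterate, so those evaluations are independent of one another and could run in parallel; the prefix sum then rebuilds the whole trajectory.

```python
def picard_block(f, x0, t0, h, n_steps, n_iters):
    """Picard iteration on one block of an Euler grid (toy scalar ODE).

    Returns the trajectory [x_0, ..., x_{n_steps}] after n_iters sweeps.
    """
    xs = [x0] * (n_steps + 1)  # initial guess: constant trajectory
    for _ in range(n_iters):
        # All drift evaluations use the previous iterate only, so they are
        # mutually independent (this is the parallelizable part).
        drifts = [f(t0 + i * h, xs[i]) for i in range(n_steps)]
        # Prefix sum: x_j = x_0 + h * sum_{i<j} drift_i.
        new_xs = [x0]
        acc = 0.0
        for d in drifts:
            acc += h * d
            new_xs.append(x0 + acc)
        xs = new_xs
    return xs
```

On this grid, `n_steps` sweeps reproduce the sequential Euler trajectory up to rounding, since each sweep fixes at least one more grid point. The approach pays off when far fewer sweeps than steps already give an accurate trajectory.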

A note on continuous-time online learning

May 16, 2024

Abstract: In online learning, the data is provided in a sequential order, and the goal of the learner is to make online decisions to minimize the overall regret. This note is concerned with continuous-time models and algorithms for several online learning problems: online linear optimization, adversarial bandits, and adversarial linear bandits. For each problem, we extend the discrete-time algorithm to the continuous-time setting and provide a concise proof of the optimal regret bound.

Orthogonal Bootstrap: Efficient Simulation of Input Uncertainty

May 01, 2024

Abstract: Bootstrap is a popular methodology for simulating input uncertainty. However, it can be computationally expensive when the number of samples is large. We propose a new approach called Orthogonal Bootstrap that reduces the number of required Monte Carlo replications. We decompose the target being simulated into two parts: the non-orthogonal part, which has a closed-form result known as the Infinitesimal Jackknife, and the orthogonal part, which is easier to simulate. We theoretically and numerically show that Orthogonal Bootstrap significantly reduces the computational cost of Bootstrap while improving empirical accuracy and maintaining the same width of the constructed interval.

A Sinkhorn-type Algorithm for Constrained Optimal Transport

Mar 08, 2024

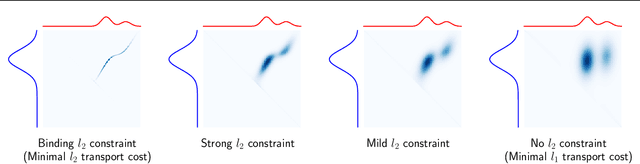

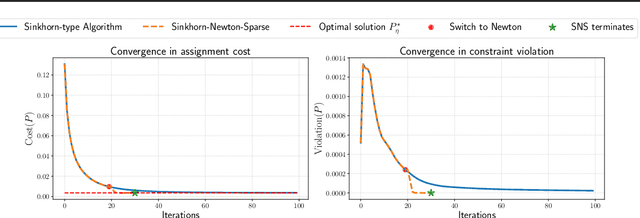

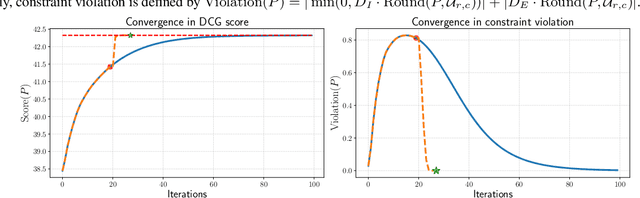

Abstract: Entropic optimal transport (OT) and the Sinkhorn algorithm have made it practical for machine learning practitioners to perform the fundamental task of calculating transport distance between statistical distributions. In this work, we focus on a general class of OT problems under a combination of equality and inequality constraints. We derive the corresponding entropy regularization formulation and introduce a Sinkhorn-type algorithm for such constrained OT problems supported by theoretical guarantees. We first bound the approximation error when solving the problem through entropic regularization, which decreases exponentially as the regularization parameter increases. Furthermore, we prove a sublinear first-order convergence rate of the proposed Sinkhorn-type algorithm in the dual space by characterizing the optimization procedure with a Lyapunov function. To achieve fast and higher-order convergence under weak entropy regularization, we augment the Sinkhorn-type algorithm with dynamic regularization scheduling and second-order acceleration. Overall, this work systematically combines recent theoretical and numerical advances in entropic optimal transport with the constrained case, allowing practitioners to derive approximate transport plans in complex scenarios.
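
For orientation, the classical Sinkhorn iteration that the paper builds on can be sketched as follows. This is the standard unconstrained algorithm, not the constrained Sinkhorn-type variant proposed in the abstract, and it omits the regularization scheduling and second-order acceleration. The function name, the parameter `eta` and the kernel convention $K = \exp(-\eta C)$ (larger `eta` meaning weaker regularization) are assumptions made for this illustration.

```python
import math


def sinkhorn(cost, r, c, eta=10.0, iters=500):
    """Classical Sinkhorn scaling for entropic OT (unconstrained case).

    cost: n x m list of lists; r, c: source and target marginals.
    Returns a transport plan P = diag(u) K diag(v) with K = exp(-eta * cost).
    """
    n, m = len(r), len(c)
    K = [[math.exp(-eta * cost[i][j]) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(iters):
        # Rescale rows to match r, then columns to match c.
        u = [r[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [c[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
```

A call such as `sinkhorn([[0.0, 1.0], [1.0, 0.0]], [0.3, 0.7], [0.6, 0.4], eta=2.0)` returns a strictly positive plan whose row and column sums match the two marginals to high precision. For large `eta` the kernel entries can underflow, so a log-domain implementation would be the safer choice in practice.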

Multidimensional unstructured sparse recovery via eigenmatrix

Feb 27, 2024

Abstract: This note considers multidimensional unstructured sparse recovery problems. Examples include Fourier inversion and sparse deconvolution. The eigenmatrix is a data-driven construction with desired approximate eigenvalues and eigenvectors proposed for one-dimensional problems. This note extends the eigenmatrix approach to multidimensional problems. Numerical results are provided to demonstrate the performance of the proposed method.
