Laurie E. Cutting

Personalized White Matter Bundle Segmentation for Early Childhood

Feb 04, 2026

Abstract: White matter segmentation methods for diffusion magnetic resonance imaging range from streamline clustering-based approaches to bundle mask delineation, but none have proposed a pediatric-specific approach. We hypothesize that a deep learning model with a similar approach to TractSeg will improve similarity between an algorithm-generated mask and an expert-labeled ground truth. Given a cohort of 56 manually labelled white matter bundles, we take inspiration from TractSeg's 2D UNet architecture and modify the inputs to match bundle definitions as determined by pediatric experts, the evaluation to use k-fold cross-validation, and the loss function to a masked Dice loss. We evaluate Dice score, volume overlap, and volume overreach of 16 major regions of interest compared to the expert-labeled dataset. To test whether our approach offers statistically significant improvements over TractSeg, we compare Dice voxels, volume overlap, and adjacency voxels with a Wilcoxon signed rank test followed by false discovery rate correction. We find statistical significance across all bundles for all metrics, with one exception in volume overlap. After running TractSeg and our model, we combine their output masks into a 60-label atlas to evaluate whether the two together can generate a robust, individualized atlas, and we observe smoothed, continuous masks in cases where TractSeg did not produce an anatomically plausible output. With improved white matter pathway segmentation masks, we can further understand neurodevelopment on a population-level scale and produce reliable estimates of individualized anatomy in pediatric white matter diseases and disorders.
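As a rough illustration of the three mask metrics named in this abstract, the sketch below computes them for a pair of binary masks. The abstract does not give formulas, so the definitions here are assumptions: overlap is taken as the fraction of ground-truth voxels covered by the prediction, and overreach as the number of predicted voxels outside the ground truth divided by the ground-truth volume. The function name `bundle_mask_metrics` is hypothetical.

```python
import numpy as np

def bundle_mask_metrics(pred, truth):
    """Compare a predicted binary bundle mask with an expert-labeled mask.

    Assumed definitions (not taken from the paper):
      dice      = 2 * |P & T| / (|P| + |T|)
      overlap   = |P & T| / |T|
      overreach = |P minus T| / |T|
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    intersection = int(np.logical_and(pred, truth).sum())
    pred_n = int(pred.sum())
    truth_n = int(truth.sum())

    # Two empty masks agree perfectly; an empty truth leaves the ratios undefined.
    dice = 2.0 * intersection / (pred_n + truth_n) if (pred_n + truth_n) > 0 else 1.0
    overlap = intersection / truth_n if truth_n > 0 else float("nan")
    overreach = (pred_n - intersection) / truth_n if truth_n > 0 else float("nan")
    return {"dice": dice, "overlap": overlap, "overreach": overreach}

# Toy example: the prediction covers the whole ground truth plus 4 extra voxels.
truth = np.zeros((4, 4, 4), dtype=bool)
truth[1:3, 1:3, 1:3] = True          # 8 voxels
pred = np.zeros((4, 4, 4), dtype=bool)
pred[1:3, 1:3, 1:4] = True           # 12 voxels
metrics = bundle_mask_metrics(pred, truth)
print(metrics)
```

Under these assumed definitions, a mask can reach full overlap while still being penalized by overreach and Dice, which is why the three metrics are usually reported together.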

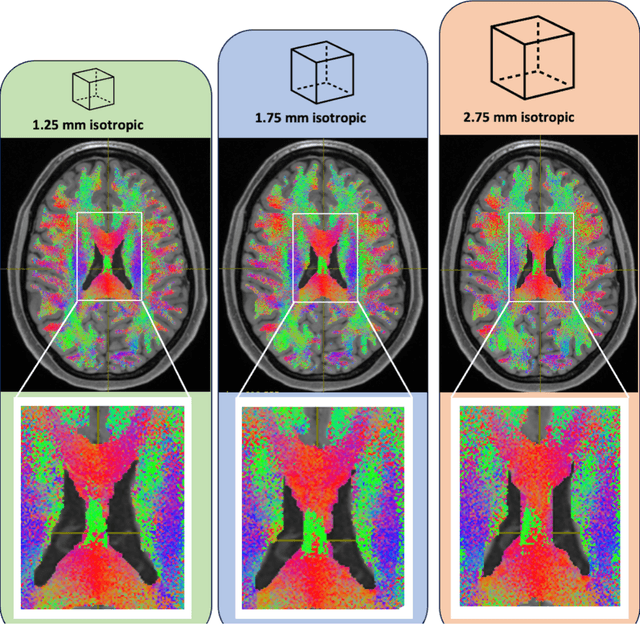

Sensitivity of quantitative diffusion MRI tractography and microstructure to anisotropic spatial sampling

Sep 26, 2024

Abstract: Purpose: Diffusion weighted MRI (dMRI) and its models of neural structure provide insight into human brain organization and variations in white matter. A recent study by McMaster et al. showed that complex graph measures of the connectome, the graphical representation of a tractogram, vary with spatial sampling changes, but biases introduced by anisotropic voxels in the process have not been well characterized. This study uses microstructural measures (fractional anisotropy and mean diffusivity) and white matter bundle properties (bundle volume, length, and surface area) to further understand the effect of anisotropic voxels on microstructure and tractography. Methods: The statistical significance of the selected measures derived from dMRI data was assessed by comparing three white matter bundles at different spatial resolutions in 44 subjects from the Human Connectome Project Young Adult dataset scan/rescan data using the Wilcoxon signed rank test. The original isotropic resolution (1.25 mm isotropic) was compared with six anisotropic resolutions generated in 0.25 mm incremental steps in the z dimension. Then, all generated resolutions were upsampled to 1.25 mm isotropic and 1 mm isotropic. Results: There were statistically significant differences in at least one microstructural measure and one bundle measure at every resolution (p ≤ 0.05, corrected for multiple comparisons). Cohen's d was used to evaluate the effect size of anisotropic voxels on microstructure and tractography. Conclusion: Fractional anisotropy and mean diffusivity values comparable to gold standard data cannot be recovered from low quality data with basic upsampling. However, the bundle measures from the tractogram become more repeatable when voxels are resampled to 1 mm isotropic.
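For readers unfamiliar with the effect-size measure mentioned above, here is a minimal sketch of Cohen's d. The abstract does not say which variant was used, so the pooled-standard-deviation form for two samples is an assumption, and the sample values are invented purely for illustration.

```python
import numpy as np

def cohens_d(a, b):
    """Cohen's d using the pooled sample standard deviation (assumed variant)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    na, nb = a.size, b.size
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var)

# Hypothetical values of one measure at two spatial resolutions.
measure_isotropic = [2.0, 4.0, 6.0]
measure_anisotropic = [1.0, 3.0, 5.0]
print(cohens_d(measure_isotropic, measure_anisotropic))
```

Reporting an effect size alongside the p-value helps separate differences that are statistically detectable from those large enough to matter in practice.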

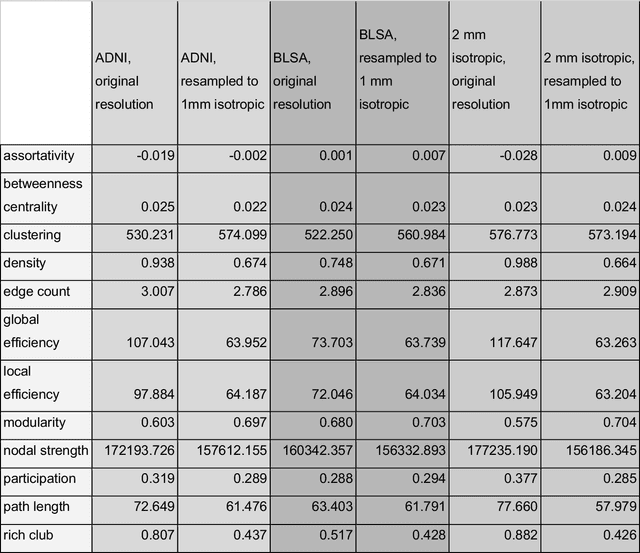

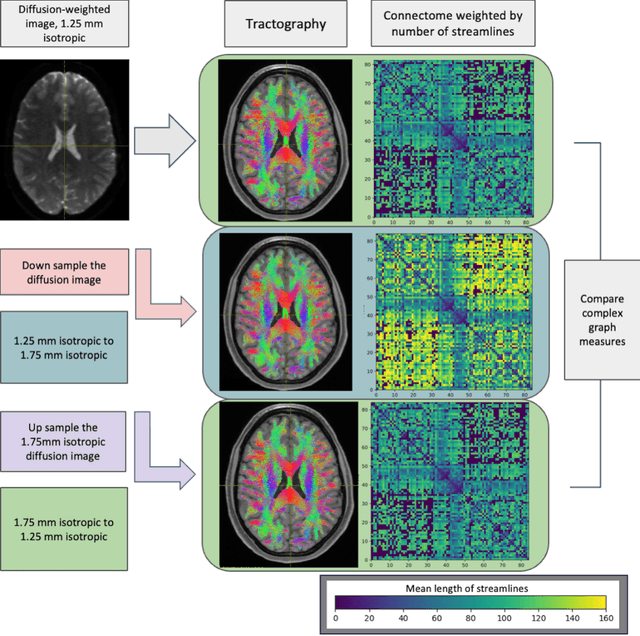

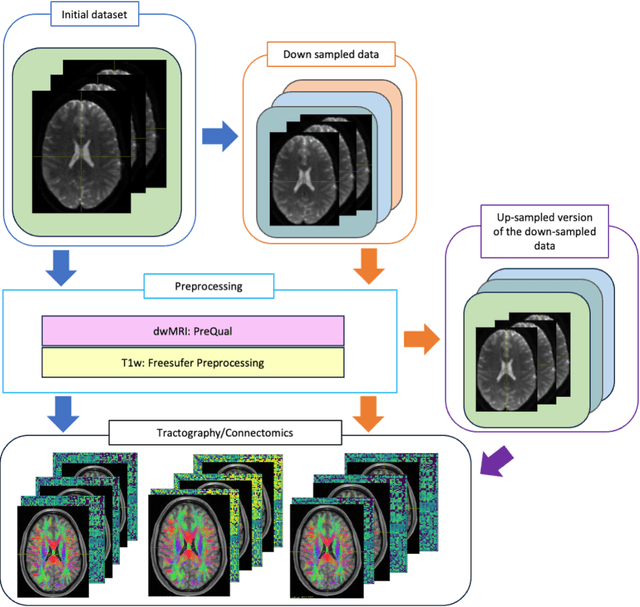

Harmonized connectome resampling for variance in voxel sizes

Aug 02, 2024

Abstract: To date, there has been no comprehensive study characterizing the effect of diffusion-weighted magnetic resonance imaging voxel resolution on the resulting connectome for high resolution subject data. Similarity in results improved with higher resolution, even after initial down-sampling. To ensure robust tractography and connectomes, resample data to 1 mm isotropic resolution.

3D Whole Brain Segmentation using Spatially Localized Atlas Network Tiles

Mar 28, 2019

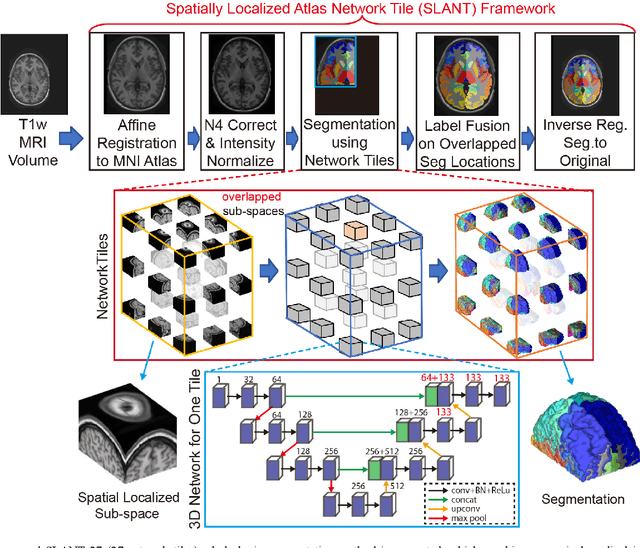

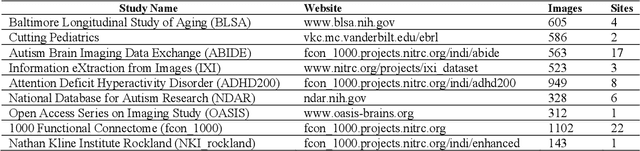

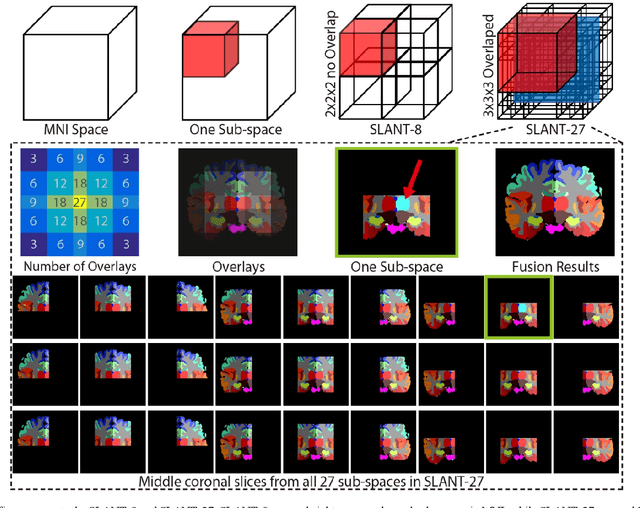

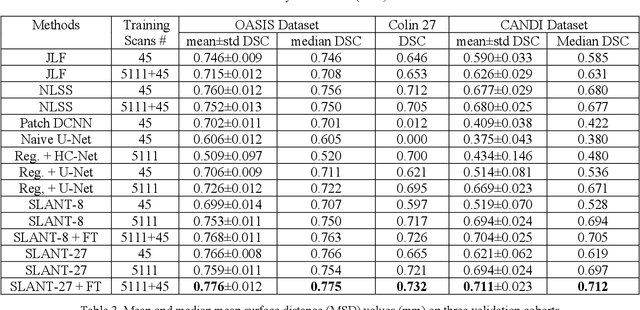

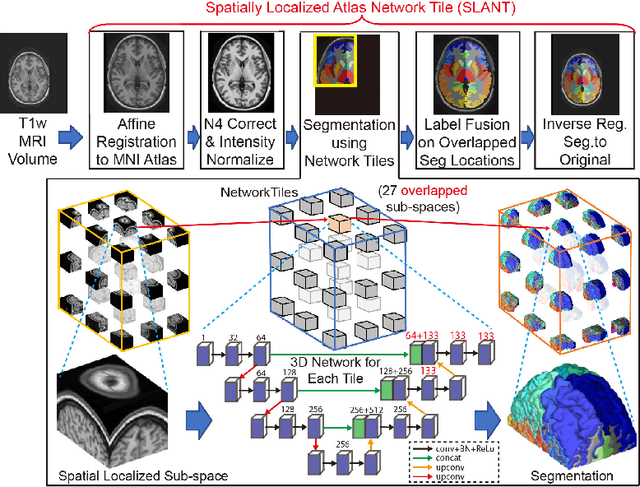

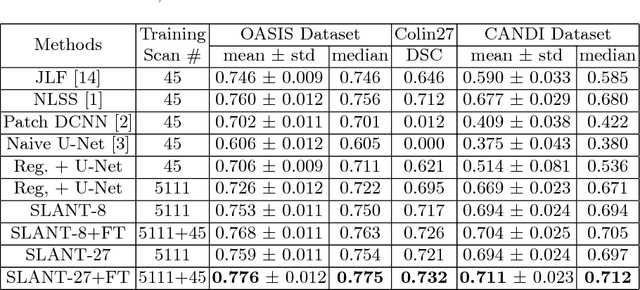

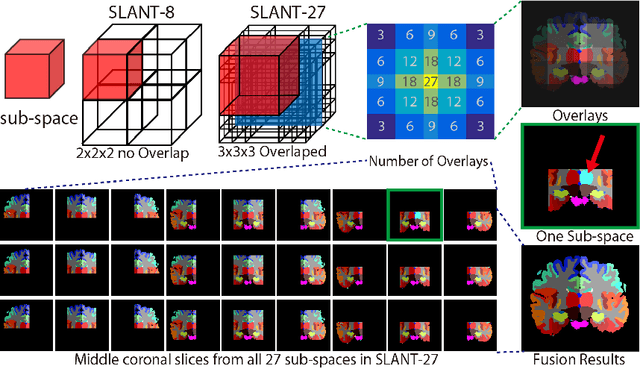

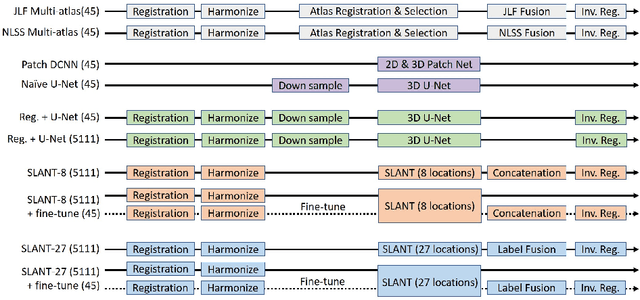

Abstract: Detailed whole brain segmentation is an essential quantitative technique that provides a non-invasive way of measuring brain regions from structural magnetic resonance imaging (MRI). Recently, deep convolutional neural networks (CNNs) have been applied to whole brain segmentation. However, restricted by current GPU memory, 2D-based methods, downsampling-based 3D CNN methods, and patch-based high-resolution 3D CNN methods have been the de facto standard solutions. 3D patch-based high-resolution methods typically yield superior performance among CNN approaches on detailed whole brain segmentation (>100 labels); however, their performance is still commonly inferior to that of multi-atlas segmentation (MAS) methods due to the following challenges: (1) a single network is typically used to learn both spatial and contextual information for the patches, and (2) limited manually traced whole brain volumes are available (typically fewer than 50) for training a network. In this work, we propose the spatially localized atlas network tiles (SLANT) method to distribute multiple independent 3D fully convolutional networks (FCNs) for high-resolution whole brain segmentation. To address the first challenge, multiple spatially distributed networks were used in the SLANT method, in which each network learned contextual information for a fixed spatial location. To address the second challenge, auxiliary labels on 5111 initially unlabeled scans were created by multi-atlas segmentation for training. Since the method integrated multiple traditional medical image processing methods with deep learning, we developed a containerized pipeline to deploy the end-to-end solution. The proposed method achieved superior performance compared with multi-atlas segmentation methods, while reducing the computational time from >30 hours to 15 minutes (https://github.com/MASILab/SLANTbrainSeg).

Data-driven Probabilistic Atlases Capture Whole-brain Individual Variation

Jun 06, 2018

Abstract: Probabilistic atlases provide essential spatial contextual information for image interpretation, Bayesian modeling, and algorithmic processing. Such atlases are typically constructed by grouping subjects with similar demographic information. Importantly, use of the same scanner minimizes inter-group variability. However, the generalizability and spatial specificity of such approaches are more limited than one might like. Inspired by Commowick's "Frankenstein's creature paradigm", which builds a person-specific anatomical atlas, we propose a data-driven framework to build a person-specific probabilistic atlas under a large-scale data scheme. The data-driven framework clusters regions with similar features using a point distribution model to learn different anatomical phenotypes. Regional structural atlases and corresponding regional probabilistic atlases are used as indices and targets in the dictionary. By indexing the dictionary, the whole brain probabilistic atlases adapt to each new subject quickly and can be used as spatial priors for visualization and processing. The novelties of this approach are: (1) it provides a new perspective on generating person-specific whole brain probabilistic atlases (132 regions) under a data-driven scheme across sites; (2) the framework employs a large amount of heterogeneous data (2349 images); and (3) the proposed framework achieves low computational cost, since only one affine registration and a Pearson correlation operation are required for a new subject. Our method matches individual regions better, with higher Dice similarity values, when testing the probabilistic atlases. Importantly, the advantage of the large-scale scheme is demonstrated by the better performance obtained with large-scale training data (1888 images) than with a smaller training set (720 images).
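The dictionary lookup described above can be pictured with a small sketch. It assumes each regional structural atlas (the index) and the new subject's region are flattened intensity arrays already in the same space after affine registration; the function name `lookup_probabilistic_atlas`, the array shapes, and the toy data are hypothetical and not taken from the paper.

```python
import numpy as np

def lookup_probabilistic_atlas(subject_region, key_atlases, target_atlases):
    """Return the dictionary entry whose key correlates best with the subject.

    key_atlases:    sequence of K structural atlases for one region (the indices)
    target_atlases: sequence of K matching probabilistic atlases (the targets)
    """
    x = np.asarray(subject_region, dtype=float).ravel()
    keys = np.asarray(key_atlases, dtype=float).reshape(len(key_atlases), -1)

    # Pearson correlation between the subject region and every key at once.
    x_c = x - x.mean()
    keys_c = keys - keys.mean(axis=1, keepdims=True)
    denom = np.linalg.norm(x_c) * np.linalg.norm(keys_c, axis=1)
    r = np.zeros(len(keys))
    valid = denom > 0                      # constant arrays have no defined correlation
    r[valid] = (keys_c[valid] @ x_c) / denom[valid]

    best = int(np.argmax(r))
    return best, target_atlases[best], r

# Toy dictionary with three phenotypes for a single region.
keys = [[1, 2, 3, 4], [4, 3, 2, 1], [1, 1, 2, 2]]
targets = ["prob_atlas_A", "prob_atlas_B", "prob_atlas_C"]
best, atlas, r = lookup_probabilistic_atlas([10, 20, 30, 41], keys, targets)
print(best, atlas)
```

Because the lookup is a single vectorized correlation per region, its cost stays small even when the dictionary grows, which is consistent with the low computational cost the abstract claims.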

Spatially Localized Atlas Network Tiles Enables 3D Whole Brain Segmentation from Limited Data

Jun 05, 2018

Abstract: Whole brain segmentation on structural magnetic resonance imaging (MRI) is essential for non-invasive investigation of neuroanatomy. Historically, multi-atlas segmentation (MAS) has been regarded as the de facto standard method for whole brain segmentation. Recently, deep neural network approaches have been applied to whole brain segmentation by learning random patches or 2D slices. Yet, few previous efforts have been made on detailed whole brain segmentation using 3D networks due to the following challenges: (1) fitting an entire whole brain volume into 3D networks is restricted by current GPU memory, and (2) there is a large number of target labels (e.g., > 100 labels) with a limited number of training 3D volumes (e.g., < 50 scans). In this paper, we propose the spatially localized atlas network tiles (SLANT) method to distribute multiple independent 3D fully convolutional networks to cover overlapped sub-spaces in a standard atlas space. This strategy simplifies the whole brain learning task into localized sub-tasks, which was enabled by combining canonical registration and label fusion techniques with deep learning. To address the second challenge, auxiliary labels on 5111 initially unlabeled scans were created by MAS for pre-training. In empirical validation, the state-of-the-art MAS method achieved mean Dice values of 0.76, 0.71, and 0.68, while the proposed method achieved 0.78, 0.73, and 0.71 on three validation cohorts. Moreover, the computational time was reduced from > 30 hours using MAS to ~15 minutes using the proposed method. The source code is available online: https://github.com/MASILab/SLANTbrainSeg
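To make the tiling idea concrete, the sketch below covers a volume with evenly spaced overlapping tiles and fuses per-tile label maps. It is a toy illustration under stated assumptions, not the SLANT implementation: the tile layout, the majority-vote fusion rule, the function names, and the thresholding stand-in for a per-tile network are all hypothetical.

```python
import itertools
import numpy as np

def tile_slices(volume_shape, tile_shape, tiles_per_axis):
    """Evenly spaced, possibly overlapping tiles that cover the whole volume."""
    per_axis = []
    for size, tile, n in zip(volume_shape, tile_shape, tiles_per_axis):
        starts = np.linspace(0, size - tile, n).round().astype(int)
        per_axis.append([slice(int(s), int(s) + tile) for s in starts])
    return list(itertools.product(*per_axis))

def fuse_majority_vote(volume_shape, tile_predictions, n_labels):
    """Fuse per-tile label maps by counting votes per voxel (assumed fusion rule)."""
    votes = np.zeros((n_labels,) + tuple(volume_shape), dtype=np.int32)
    for slices, labels in tile_predictions:
        for lab in range(n_labels):
            votes[(lab,) + slices] += (labels == lab)
    return votes.argmax(axis=0)

# Stand-in for "one independent network per tile": a simple intensity threshold.
rng = np.random.default_rng(0)
volume = rng.random((8, 8, 8))
tiles = tile_slices(volume.shape, (5, 5, 5), (2, 2, 2))
predictions = [(s, (volume[s] > 0.5).astype(int)) for s in tiles]
fused = fuse_majority_vote(volume.shape, predictions, n_labels=2)
print(len(tiles), fused.shape)
```

In the overlapping regions several tiles vote on the same voxel, so disagreements between neighboring networks are resolved by the fusion step rather than producing seams at tile borders.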
