Laura Oliva

ECG-FM: An Open Electrocardiogram Foundation Model

Aug 09, 2024

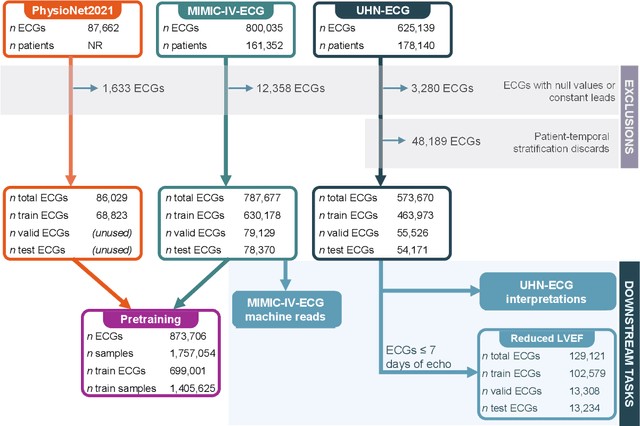

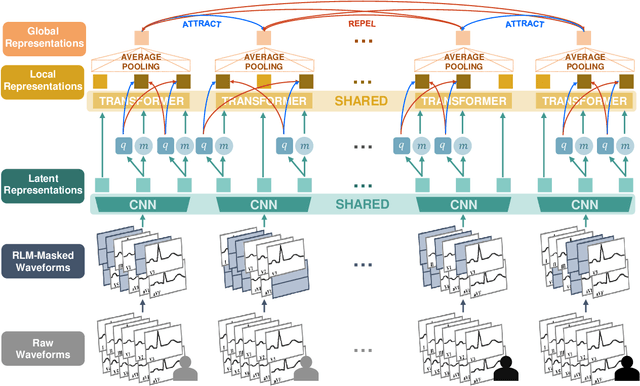

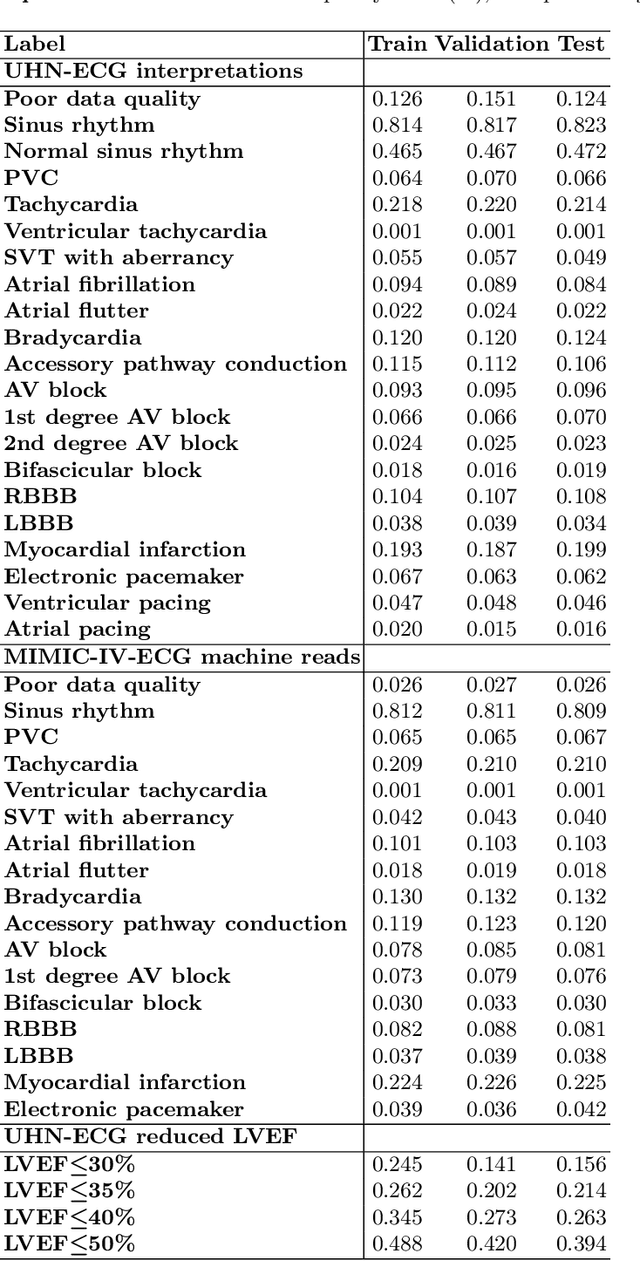

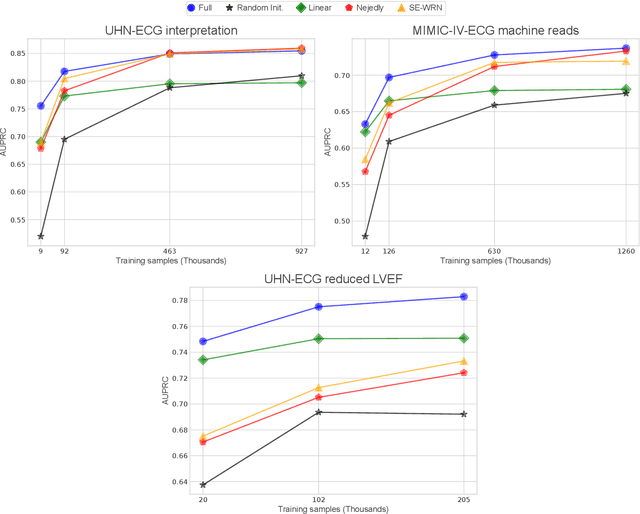

Abstract: The electrocardiogram (ECG) is a ubiquitous diagnostic test. Conventional task-specific ECG analysis models require large numbers of expensive ECG annotations or associated labels to train. Transfer learning techniques have been shown to improve generalization and reduce reliance on labeled data. We present ECG-FM, an open foundation model for ECG analysis, and conduct a comprehensive study performed on a dataset of 1.66 million ECGs sourced from both publicly available and private institutional sources. ECG-FM adopts a transformer-based architecture and is pretrained on 2.5 million samples using ECG-specific augmentations and contrastive learning, as well as a continuous signal masking objective. Our transparent evaluation includes a diverse range of downstream tasks, where we predict ECG interpretation labels, reduced left ventricular ejection fraction, and abnormal cardiac troponin. Affirming ECG-FM's effectiveness as a foundation model, we demonstrate how its command of contextual information results in strong performance, rich pretrained embeddings, and reliable interpretability. Due to a lack of open-weight practices, we highlight how ECG analysis is lagging behind other medical machine learning subfields in terms of foundation model adoption. Our code is available at https://github.com/bowang-lab/ECG-FM/.

Decentralised, Collaborative, and Privacy-preserving Machine Learning for Multi-Hospital Data

Jan 31, 2024

Abstract: Machine Learning (ML) has demonstrated great potential in medical data analysis. Large datasets collected from diverse sources and settings are essential for ML models in healthcare to achieve better accuracy and generalizability. Sharing data across different healthcare institutions is challenging because of complex and varying privacy and regulatory requirements. Hence, it is hard but crucial to allow multiple parties to collaboratively train an ML model that leverages the private datasets available at each party, without directly sharing those datasets or compromising their privacy through collaboration. In this paper, we address this challenge by proposing Decentralized, Collaborative, and Privacy-preserving ML for Multi-Hospital Data (DeCaPH). It offers the following key benefits: (1) it allows different parties to collaboratively train an ML model without transferring their private datasets; (2) it safeguards patient privacy by limiting the potential privacy leakage arising from any contents shared across the parties during the training process; and (3) it facilitates ML model training without relying on a centralized server. We demonstrate the generalizability and power of DeCaPH on three distinct tasks using real-world distributed medical datasets: patient mortality prediction using electronic health records, cell-type classification using single-cell human genomes, and pathology identification using chest radiology images. We demonstrate that ML models trained with the DeCaPH framework have an improved utility-privacy trade-off, showing that it enables the models to perform well while preserving the privacy of the training data points. In addition, ML models trained with the DeCaPH framework generally outperform those trained solely on the private datasets of individual parties, showing that DeCaPH enhances model generalizability.
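
The three benefits listed in this abstract can be illustrated with a minimal sketch of one collaborative training round. This is not the DeCaPH implementation: the abstract does not say how leakage is limited, so the sketch assumes per-example gradient clipping plus Gaussian noise as a stand-in, uses a toy linear model with squared error, and picks the aggregating party at random each round to avoid a fixed central server. All function and parameter names (`clipped_grad_sum`, `collaborative_round`, `clip_norm`, `noise_std`) are hypothetical.

```python
import math
import random


def clipped_grad_sum(weights, examples, clip_norm):
    """Sum per-example squared-error gradients, each clipped to clip_norm."""
    total = [0.0] * len(weights)
    for features, label in examples:
        error = sum(w * x for w, x in zip(weights, features)) - label
        grad = [2.0 * error * x for x in features]
        norm = math.sqrt(sum(g * g for g in grad))
        # Clipping bounds how much any single record can influence the update.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += scale * g
    return total


def collaborative_round(weights, party_data, lr, clip_norm, noise_std, rng):
    """Run one round; return the updated weights and the aggregating party."""
    # Assumption: a randomly chosen party aggregates, so no server is fixed.
    leader = rng.randrange(len(party_data))
    aggregate = [0.0] * len(weights)
    count = 0
    for examples in party_data:
        # Only this clipped, noised sum leaves a party; raw records stay local.
        local = clipped_grad_sum(weights, examples, clip_norm)
        noisy = [g + rng.gauss(0.0, noise_std) for g in local]
        aggregate = [a + g for a, g in zip(aggregate, noisy)]
        count += len(examples)
    new_weights = [w - lr * a / count for w, a in zip(weights, aggregate)]
    return new_weights, leader


if __name__ == "__main__":
    rng = random.Random(0)
    # Three hypothetical hospitals, each holding private samples of y = 2x.
    parties = [[([x], 2.0 * x) for x in (0.5, 1.0)] for _ in range(3)]
    weights = [0.0]
    for _ in range(200):
        weights, _ = collaborative_round(weights, parties, 0.1, 1.0, 0.01, rng)
    print(weights)
```

Larger values of `noise_std` and smaller values of `clip_norm` would limit leakage more strongly at the cost of accuracy, which is the kind of utility-privacy trade-off the abstract refers to.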

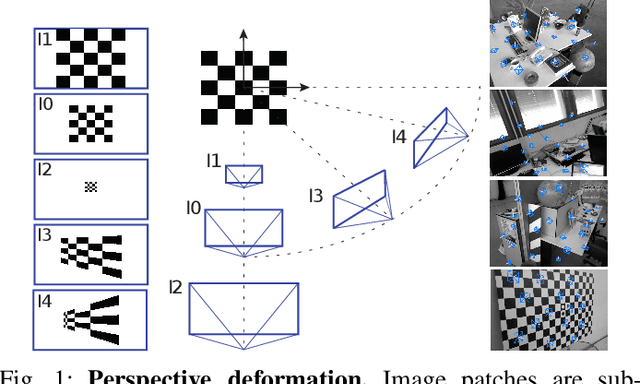

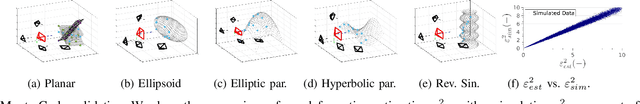

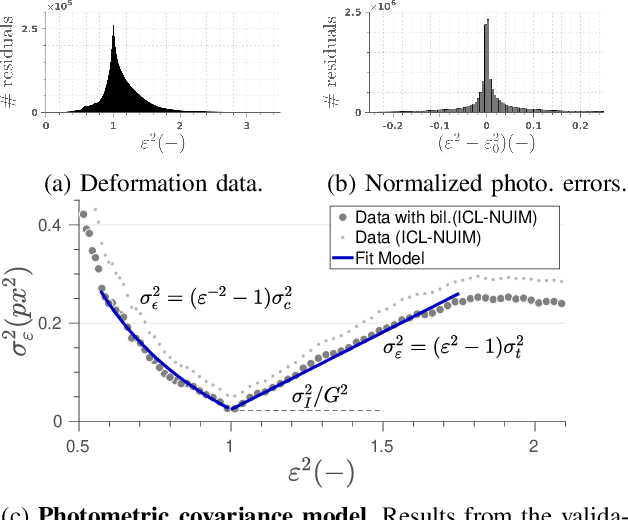

A Model for Multi-View Residual Covariances based on Perspective Deformation

Feb 01, 2022

Abstract: In this work, we derive a model for the covariance of the visual residuals in multi-view SfM, odometry and SLAM setups. The core of our approach is the formulation of the residual covariances as a combination of geometric and photometric noise sources. Our key novel contribution is the derivation of a term modelling how local 2D patches suffer from perspective deformation when imaging 3D surfaces around a point. Together, these add up to an efficient and general formulation which not only improves the accuracy of both feature-based and direct methods, but can also be used to estimate more accurate measures of the state entropy and hence better-founded point visibility thresholds. We validate our model with synthetic and real data and integrate it into photometric and feature-based Bundle Adjustment, improving their accuracy with a negligible overhead.
