Barry Rubin

EchoJEPA: A Latent Predictive Foundation Model for Echocardiography

Feb 02, 2026

Abstract: Foundation models for echocardiography promise to reduce annotation burden and improve diagnostic consistency by learning generalizable representations from large unlabeled video archives. However, current approaches fail to disentangle anatomical signal from the stochastic speckle and acquisition artifacts that dominate ultrasound imagery. We present EchoJEPA, a foundation model for echocardiography trained on 18 million echocardiograms across 300K patients, the largest pretraining corpus for this modality to date. We also introduce a novel multi-view probing framework with factorized stream embeddings that standardizes evaluation under frozen backbones. Compared to prior methods, EchoJEPA reduces left ventricular ejection fraction estimation error by 19% and achieves 87.4% view classification accuracy. EchoJEPA exhibits strong sample efficiency, reaching 78.6% accuracy with only 1% of labeled data versus 42.1% for the best baseline trained on 100%. Under acoustic perturbations, EchoJEPA degrades by only 2.3% compared to 16.8% for the next best model, and transfers zero-shot to pediatric patients with 15% lower error than the next best model, outperforming all fine-tuned baselines. These results establish latent prediction as a superior paradigm for ultrasound foundation models.

ECG-FM: An Open Electrocardiogram Foundation Model

Aug 09, 2024

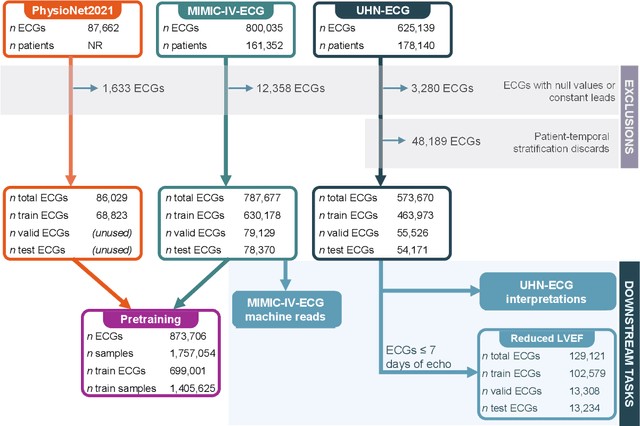

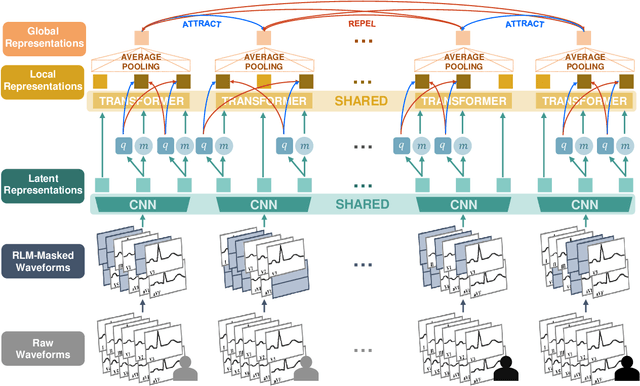

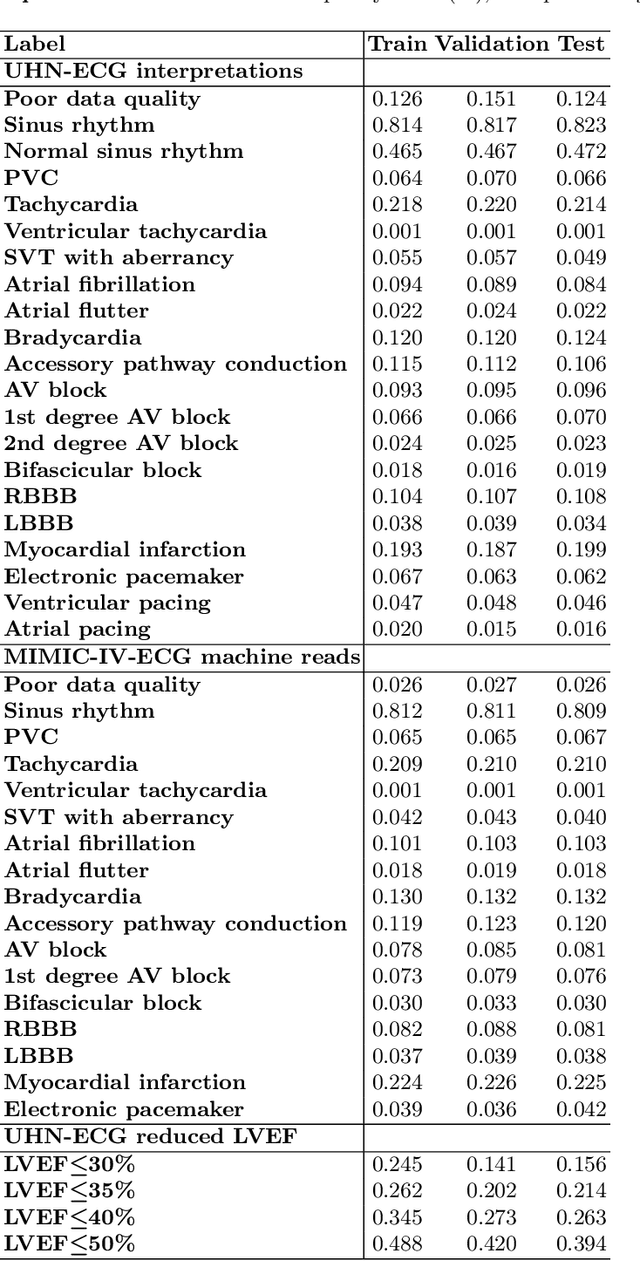

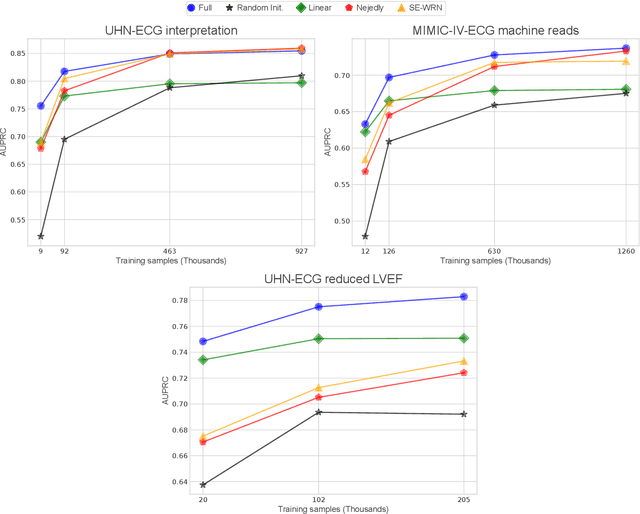

Abstract: The electrocardiogram (ECG) is a ubiquitous diagnostic test. Conventional task-specific ECG analysis models require large numbers of expensive ECG annotations or associated labels to train. Transfer learning techniques have been shown to improve generalization and reduce reliance on labeled data. We present ECG-FM, an open foundation model for ECG analysis, and conduct a comprehensive study performed on a dataset of 1.66 million ECGs sourced from both publicly available and private institutional sources. ECG-FM adopts a transformer-based architecture and is pretrained on 2.5 million samples using ECG-specific augmentations and contrastive learning, as well as a continuous signal masking objective. Our transparent evaluation includes a diverse range of downstream tasks, where we predict ECG interpretation labels, reduced left ventricular ejection fraction, and abnormal cardiac troponin. Affirming ECG-FM's effectiveness as a foundation model, we demonstrate how its command of contextual information results in strong performance, rich pretrained embeddings, and reliable interpretability. Due to a lack of open-weight practices, we highlight how ECG analysis is lagging behind other medical machine learning subfields in terms of foundation model adoption. Our code is available at https://github.com/bowang-lab/ECG-FM/.