L. Taylor Davis

Reproducibility Evaluation of SLANT Whole Brain Segmentation Across Clinical Magnetic Resonance Imaging Protocols

Jan 07, 2019

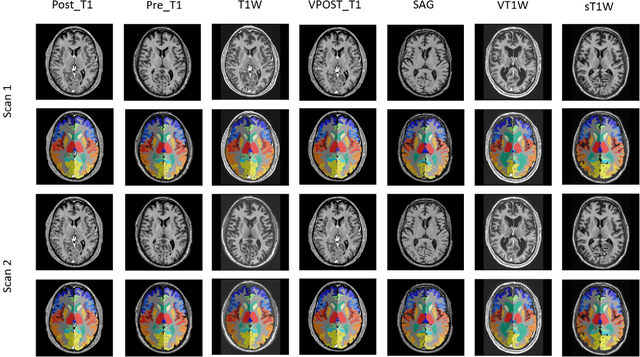

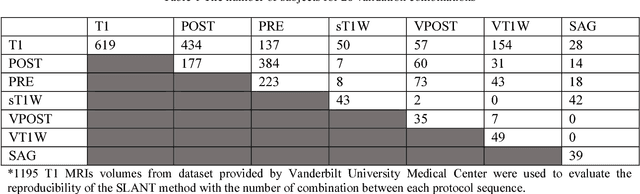

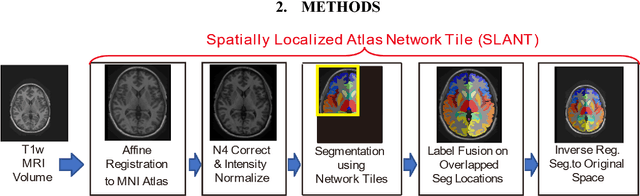

Abstract: Whole brain segmentation on structural magnetic resonance imaging (MRI) is essential for understanding neuroanatomical-functional relationships. Traditionally, multi-atlas segmentation has been regarded as the standard method for whole brain segmentation. In the past few years, deep convolutional neural network (DCNN) segmentation methods have demonstrated their advantages in both accuracy and computational efficiency. Recently, we proposed the spatially localized atlas network tiles (SLANT) method, which is able to segment a 3D MRI brain scan into 132 anatomical regions. Commonly, DCNN segmentation methods yield inferior performance under external validation, especially when the testing patterns were not present in the training cohorts. Recently, we obtained a clinically acquired, multi-sequence MRI brain cohort comprising 1480 de-identified brain MRI scans of 395 patients, acquired with seven different MRI protocols. Moreover, each subject has at least two scans from different MRI protocols. Herein, we assess the SLANT method's intra- and inter-protocol reproducibility. SLANT achieved a coefficient of variation (CV) of less than 0.05 for intra-protocol experiments and less than 0.15 for inter-protocol experiments. The results show that the SLANT method achieved high intra- and inter-protocol reproducibility.
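The coefficient of variation reported above is the standard deviation of a measurement divided by its mean. The abstract does not say which quantity the CV is computed on or which standard-deviation estimator is used, so the following is only a minimal sketch under stated assumptions: it takes per-region volumes from repeated scans of one subject and uses the sample standard deviation (`ddof=1`). The function name `coefficient_of_variation` and the array layout are hypothetical.

```python
import numpy as np


def coefficient_of_variation(volumes):
    """Return the per-region CV (std / mean) across repeated scans.

    `volumes` has shape (n_scans, n_regions): one row per scan of the same
    subject, one column per segmented region. This layout is an assumption
    made for illustration, not taken from the abstract.
    """
    volumes = np.asarray(volumes, dtype=float)
    mean = volumes.mean(axis=0)
    # Sample standard deviation (ddof=1) is an assumed choice of estimator.
    std = volumes.std(axis=0, ddof=1)
    # A region with zero mean volume would divide by zero here.
    return std / mean


# Two scans of one subject, two regions (made-up volumes for illustration).
example = [[90.0, 50.0],
           [110.0, 50.0]]
cv = coefficient_of_variation(example)
print(cv)
```

A region whose volume is identical across scans gives a CV of 0; larger values indicate less reproducible segmentation for that region.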

Inter-Scanner Harmonization of High Angular Resolution DW-MRI using Null Space Deep Learning

Oct 09, 2018

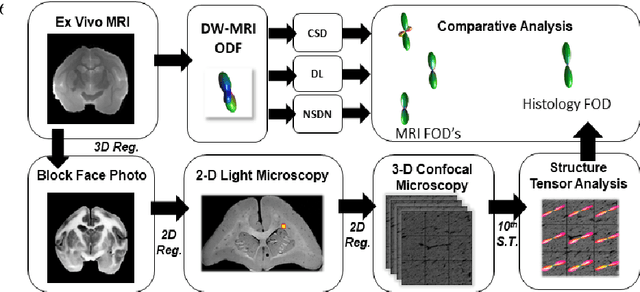

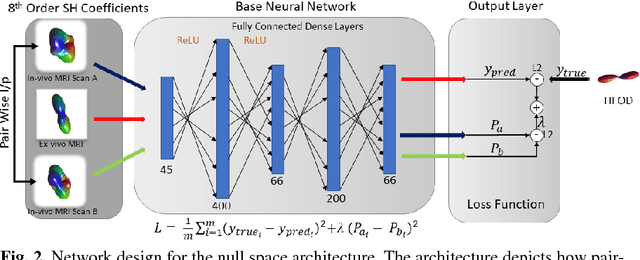

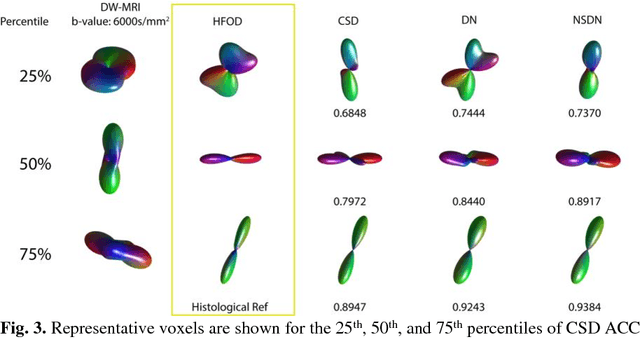

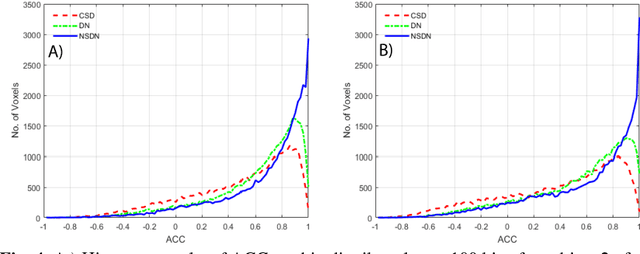

Abstract: Diffusion-weighted magnetic resonance imaging (DW-MRI) allows for non-invasive imaging of the local fiber architecture of the human brain at a millimetric scale. Multiple classical approaches have been proposed to detect both single (e.g., tensors) and multiple (e.g., constrained spherical deconvolution, CSD) fiber population orientations per voxel. However, existing techniques generally exhibit low reproducibility across MRI scanners. Herein, we propose a data-driven technique using a neural network design which exploits two categories of data. First, training data were acquired on three squirrel monkey brains using ex-vivo DW-MRI and histology of the brain. Second, repeated scans of human subjects were acquired on two different scanners to augment the learning of the network proposed. To use these data, we propose a new network architecture, the null space deep network (NSDN), to simultaneously learn on traditional observed/truth pairs (e.g., MRI-histology voxels) along with repeated observations without a known truth (e.g., scan-rescan MRI). The NSDN was tested on twenty percent of the histology voxels that were kept completely blind to the network. NSDN significantly improved absolute performance relative to histology by 3.87% over CSD and 1.42% over a recently proposed deep neural network approach. Moreover, it improved reproducibility on the paired data by 21.19% over CSD and 10.09% over a recently proposed deep approach. Finally, NSDN improved generalizability of the model to a third in vivo human scanner (which was not used in training) by 16.08% over CSD and 10.41% over a recently proposed deep learning approach. This work suggests that data-driven approaches for local fiber reconstruction are more reproducible, informative and precise and offers a novel, practical method for determining these models.
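The idea of learning from observed/truth pairs together with repeated observations that have no known truth can be illustrated with a two-term objective. The abstract does not give the NSDN loss or architecture, so this is not the authors' formulation; it is a generic sketch that assumes a mean-squared-error term on the paired data plus a weighted consistency term penalizing disagreement between predictions on scan-rescan data. The function name `combined_loss` and the parameter `weight` are hypothetical.

```python
import numpy as np


def combined_loss(pred_paired, truth, pred_scan_a, pred_scan_b, weight=1.0):
    """Supervised error plus a scan-rescan consistency penalty.

    Illustrative assumption only, not the published NSDN objective.
    """
    pred_paired = np.asarray(pred_paired, dtype=float)
    truth = np.asarray(truth, dtype=float)
    pred_scan_a = np.asarray(pred_scan_a, dtype=float)
    pred_scan_b = np.asarray(pred_scan_b, dtype=float)

    # Term 1: error against known truth (e.g., MRI-histology voxel pairs).
    supervised = np.mean((pred_paired - truth) ** 2)
    # Term 2: disagreement between predictions for the same subject on two
    # scanners; no truth is needed, only that the outputs should match.
    consistency = np.mean((pred_scan_a - pred_scan_b) ** 2)
    return supervised + weight * consistency


# Perfect supervised fit, but scan-rescan predictions differ by 1 everywhere.
loss_full = combined_loss([1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [1.0, 1.0])
loss_half = combined_loss([1.0, 2.0], [1.0, 2.0], [0.0, 0.0], [1.0, 1.0],
                          weight=0.5)
print(loss_full, loss_half)
```

The second term lets unlabeled repeat scans influence training: a model that fits the histology pairs but gives different answers on the two scanners is still penalized.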
