Kuniaki Saito

Towards Safer Mobile Agents: Scalable Generation and Evaluation of Diverse Scenarios for VLMs

Jan 13, 2026

Abstract:Vision Language Models (VLMs) are increasingly deployed in autonomous vehicles and mobile systems, making it crucial to evaluate their ability to support safer decision-making in complex environments. However, existing benchmarks inadequately cover diverse hazardous situations, especially anomalous scenarios with spatio-temporal dynamics. While image editing models are a promising means to synthesize such hazards, it remains challenging to generate well-formulated scenarios that include moving, intrusive, and distant objects frequently observed in the real world. To address this gap, we introduce HazardForge, a scalable pipeline that leverages image editing models to generate these scenarios with layout decision algorithms and validation modules. Using HazardForge, we construct MovSafeBench, a multiple-choice question (MCQ) benchmark comprising 7,254 images and corresponding QA pairs across 13 object categories, covering both normal and anomalous objects. Experiments using MovSafeBench show that VLM performance degrades notably under conditions including anomalous objects, with the largest drop in scenarios requiring nuanced motion understanding.

CaptionSmiths: Flexibly Controlling Language Pattern in Image Captioning

Jul 02, 2025

Abstract:An image captioning model that can flexibly switch its language pattern, e.g., descriptiveness and length, should be useful since it can be applied to diverse applications. However, despite the dramatic improvement in generative vision-language models, fine-grained control over the properties of generated captions is not easy for two reasons: (i) existing models are not given the properties as a condition during training and (ii) existing models cannot smoothly transition their language pattern from one state to another. Given this challenge, we propose a new approach, CaptionSmiths, to acquire a single captioning model that can handle diverse language patterns. First, our approach quantifies three properties of each caption (length, descriptiveness, and word uniqueness) as continuous scalar values, without human annotation. Given the values, we represent the conditioning via interpolation between two endpoint vectors corresponding to the extreme states, e.g., one for a very short caption and one for a very long caption. Empirical results demonstrate that the resulting model can smoothly change the properties of the output captions and shows higher lexical alignment than baselines. For instance, CaptionSmiths reduces the error in controlling caption length by 506% despite better lexical alignment. Code will be available on https://github.com/omron-sinicx/captionsmiths.
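
The endpoint-interpolation idea in the abstract above can be illustrated with a minimal sketch. It assumes linear interpolation and a property value already normalized to [0, 1]; the function name and the toy two-dimensional vectors are hypothetical, and in the actual model the endpoint vectors would presumably be learned embeddings rather than fixed lists.

```python
def interpolate_condition(value, low_vec, high_vec):
    """Blend two endpoint vectors according to a scalar property value.

    Assumption: `value` is normalized so that 0.0 means the low extreme
    (e.g., a very short caption) and 1.0 means the high extreme.
    """
    t = min(max(value, 0.0), 1.0)  # clamp to [0, 1]
    return [(1.0 - t) * lo + t * hi for lo, hi in zip(low_vec, high_vec)]


# Hypothetical endpoint vectors for the "length" property.
short_vec = [0.0, 2.0]
long_vec = [4.0, 6.0]

mid_condition = interpolate_condition(0.5, short_vec, long_vec)
```

Because the condition is a continuous blend rather than a discrete label, intermediate values such as 0.5 yield a vector between the two extremes, which is what allows a smooth transition between language patterns.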

SBS Figures: Pre-training Figure QA from Stage-by-Stage Synthesized Images

Dec 23, 2024

Abstract:Building a large-scale figure QA dataset requires a considerable amount of work, from gathering and selecting figures to extracting attributes like text, numbers, and colors, and generating QAs. Although recent developments in LLMs have led to efforts to synthesize figures, most of these focus primarily on QA generation. Additionally, creating figures directly using LLMs often encounters issues such as code errors, similar-looking figures, and repetitive content in figures. To address these issues, we present SBSFigures (Stage-by-Stage Synthetic Figures), a dataset for pre-training figure QA. Our proposed pipeline enables the creation of chart figures with complete annotations of the visualized data and dense QA annotations without any manual annotation process. Our stage-by-stage pipeline makes it possible to efficiently create figures with diverse topics and appearances while minimizing code errors. SBSFigures demonstrates a strong pre-training effect, making it possible to achieve efficient training with a limited amount of real-world chart data starting from our pre-trained weights.

Is Large-Scale Pretraining the Secret to Good Domain Generalization?

Dec 03, 2024

Abstract:Multi-Source Domain Generalization (DG) is the task of training on multiple source domains and achieving high classification performance on unseen target domains. Recent methods combine robust features from web-scale pretrained backbones with new features learned from source data, and this has dramatically improved benchmark results. However, it remains unclear if DG finetuning methods are becoming better over time, or if improved benchmark performance is simply an artifact of stronger pre-training. Prior studies have shown that perceptual similarity to pre-training data correlates with zero-shot performance, but we find the effect limited in the DG setting. Instead, we posit that having perceptually similar data in pretraining is not enough, and that it is how well these data were learned that determines performance. This leads us to introduce the Alignment Hypothesis, which states that the final DG performance will be high if and only if alignment of image and class label text embeddings is high. Our experiments confirm the Alignment Hypothesis is true, and we use it as an analysis tool of existing DG methods evaluated on DomainBed datasets by splitting evaluation data into In-pretraining (IP) and Out-of-pretraining (OOP). We show that all evaluated DG methods struggle on DomainBed-OOP, while recent methods excel on DomainBed-IP. Put together, our findings highlight the need for DG methods which can generalize beyond pretraining alignment.
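
As a rough illustration of the IP/OOP split described above, the sketch below scores each sample by the cosine similarity between its image embedding and its class-label text embedding, then splits on a threshold. The cosine measure, the threshold value, and all names are assumptions made for illustration; the abstract does not specify how alignment is computed or how the split is drawn.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def split_by_alignment(samples, threshold):
    """Split sample ids into (in-pretraining, out-of-pretraining) lists.

    Each sample is (sample_id, image_embedding, label_text_embedding).
    Assumption: high image-text alignment marks a sample as IP.
    """
    in_pretraining, out_of_pretraining = [], []
    for sample_id, image_emb, text_emb in samples:
        if cosine_similarity(image_emb, text_emb) >= threshold:
            in_pretraining.append(sample_id)
        else:
            out_of_pretraining.append(sample_id)
    return in_pretraining, out_of_pretraining


# Toy embeddings: "a" is perfectly aligned, "b" is orthogonal.
samples = [
    ("a", [1.0, 0.0], [1.0, 0.0]),
    ("b", [1.0, 0.0], [0.0, 1.0]),
]
ip_ids, oop_ids = split_by_alignment(samples, threshold=0.5)
```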

Weak-to-Strong Compositional Learning from Generative Models for Language-based Object Detection

Jul 21, 2024

Abstract:Vision-language (VL) models often exhibit a limited understanding of complex expressions of visual objects (e.g., attributes, shapes, and their relations), given complex and diverse language queries. Traditional approaches attempt to improve VL models using hard negative synthetic text, but their effectiveness is limited. In this paper, we harness the exceptional compositional understanding capabilities of generative foundational models. We introduce a novel method for structured synthetic data generation aimed at enhancing the compositional understanding of VL models in language-based object detection. Our framework generates densely paired positive and negative triplets (image, text descriptions, and bounding boxes) in both image and text domains. By leveraging these synthetic triplets, we transform 'weaker' VL models into 'stronger' models in terms of compositional understanding, a process we call "Weak-to-Strong Compositional Learning" (WSCL). To achieve this, we propose a new compositional contrastive learning formulation that discovers semantics and structures in complex descriptions from synthetic triplets. As a result, VL models trained with our synthetic data generation exhibit a significant performance boost over existing baselines, by up to +5 AP on the Omnilabel benchmark and by +6.9 AP on the D3 benchmark.

Unsupervised LLM Adaptation for Question Answering

Feb 16, 2024

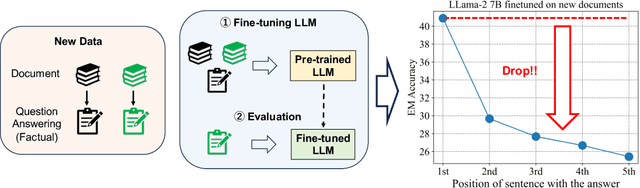

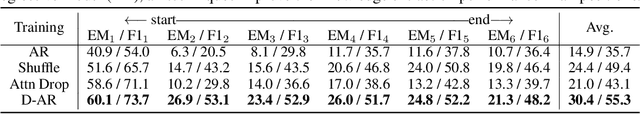

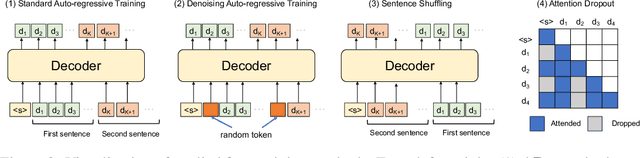

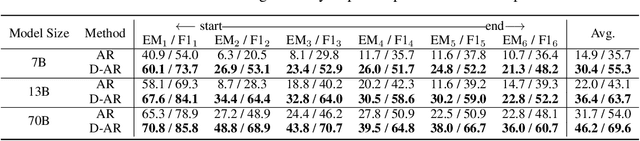

Abstract:Large language models (LLMs) learn diverse knowledge present in large-scale training datasets via self-supervised training. After instruction tuning, LLMs acquire the ability to return correct information for diverse questions. However, adapting these pre-trained LLMs to new target domains, such as different organizations or periods, for the question-answering (QA) task incurs a substantial annotation cost. To tackle this challenge, we propose a novel task, unsupervised LLM adaptation for question answering. In this task, we leverage a pre-trained LLM, a publicly available QA dataset (source data), and unlabeled documents from the target domain. Our goal is to learn an LLM that can answer questions about the target domain. We introduce one synthetic and two real datasets to evaluate models fine-tuned on the source and target data, and reveal intriguing insights: (i) fine-tuned models exhibit the ability to provide correct answers for questions about the target domain even though they do not see any questions about the information described in the unlabeled documents, but (ii) they have difficulties in accessing information located in the middle or at the end of documents, and (iii) this challenge can be partially mitigated by replacing input tokens with random ones during adaptation.
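
The mitigation in point (iii) can be sketched as a simple input corruption step. This is a minimal illustration only: the replacement probability, the uniform sampling over the vocabulary, and the function name are assumptions, since the abstract does not state how tokens are selected or replaced.

```python
import random


def replace_random_tokens(token_ids, vocab_size, replace_prob, rng):
    """Return a copy of token_ids where each id is independently swapped
    for a uniformly sampled vocabulary id with probability replace_prob.

    Assumption: the replacement rate and uniform sampling are illustrative
    choices, not taken from the paper.
    """
    corrupted = []
    for token in token_ids:
        if rng.random() < replace_prob:
            corrupted.append(rng.randrange(vocab_size))
        else:
            corrupted.append(token)
    return corrupted


rng = random.Random(0)  # seeded for reproducibility
original = [11, 42, 7, 3, 99]
unchanged = replace_random_tokens(original, vocab_size=100, replace_prob=0.0, rng=rng)
all_replaced = replace_random_tokens(original, vocab_size=100, replace_prob=1.0, rng=rng)
```

In a training loop, the corrupted sequence would typically serve as the model input while the original sequence remains the prediction target, though that pairing is also an assumption here.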

ERM++: An Improved Baseline for Domain Generalization

Apr 04, 2023

Abstract:Multi-source Domain Generalization (DG) measures a classifier's ability to generalize to new distributions of data it was not trained on, given several training domains. While several multi-source DG methods have been proposed, they incur additional complexity during training by using domain labels. Recent work has shown that a well-tuned Empirical Risk Minimization (ERM) training procedure, that is, simply minimizing the empirical risk on the source domains, can outperform most existing DG methods. We identify several key candidate techniques to further improve ERM performance, such as better utilization of training data, model parameter selection, and weight-space regularization. We call the resulting method ERM++, and show it significantly improves the performance of DG on five multi-source datasets by over 5% compared to standard ERM, and beats state-of-the-art despite being less computationally expensive. Additionally, we demonstrate the efficacy of ERM++ on the WILDS-FMOW dataset, a challenging DG benchmark. We hope that ERM++ becomes a strong baseline for future DG research. Code is released at https://github.com/piotr-teterwak/erm_plusplus.

Mind the Backbone: Minimizing Backbone Distortion for Robust Object Detection

Mar 26, 2023

Abstract:Building object detectors that are robust to domain shifts is critical for real-world applications. Prior approaches fine-tune a pre-trained backbone and risk overfitting it to in-distribution (ID) data and distorting features useful for out-of-distribution (OOD) generalization. We propose to use Relative Gradient Norm (RGN) as a way to measure the vulnerability of a backbone to feature distortion, and show that high RGN is indeed correlated with lower OOD performance. Our analysis of RGN yields interesting findings: some backbones lose OOD robustness during fine-tuning, but others gain robustness because their architecture prevents the parameters from changing too much from the initial model. Given these findings, we present recipes to boost OOD robustness for both types of backbones. Specifically, we investigate regularization and architectural choices for minimizing gradient updates so as to prevent the tuned backbone from losing generalizable features. Our proposed techniques complement each other and show substantial improvements over baselines on diverse architectures and datasets.

Pic2Word: Mapping Pictures to Words for Zero-shot Composed Image Retrieval

Feb 06, 2023

Abstract:In Composed Image Retrieval (CIR), a user combines a query image with text to describe their intended target. Existing methods rely on supervised learning of CIR models using labeled triplets consisting of the query image, text specification, and the target image. Labeling such triplets is expensive and hinders broad applicability of CIR. In this work, we propose to study an important task, Zero-Shot Composed Image Retrieval (ZS-CIR), whose goal is to build a CIR model without requiring labeled triplets for training. To this end, we propose a novel method, called Pic2Word, that requires only weakly labeled image-caption pairs and unlabeled image datasets to train. Unlike existing supervised CIR models, our model trained on weakly labeled or unlabeled datasets shows strong generalization across diverse ZS-CIR tasks, e.g., attribute editing, object composition, and domain conversion. Our approach outperforms several supervised CIR methods on the common CIR benchmarks, CIRR and Fashion-IQ. Code will be made publicly available at https://github.com/google-research/composed_image_retrieval.

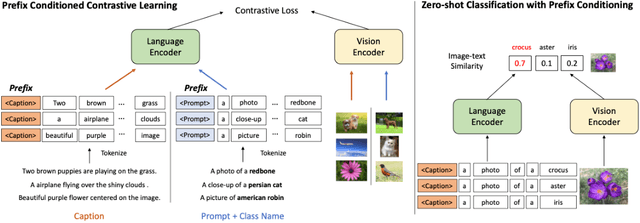

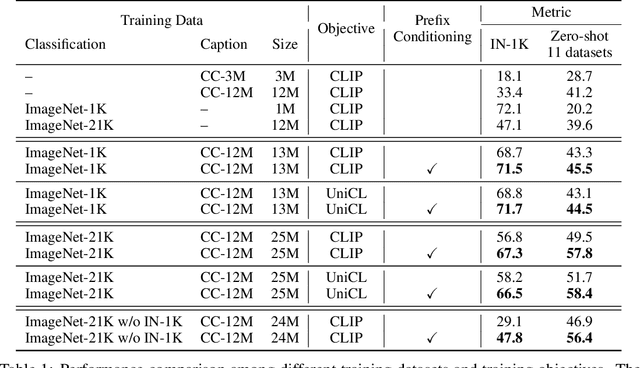

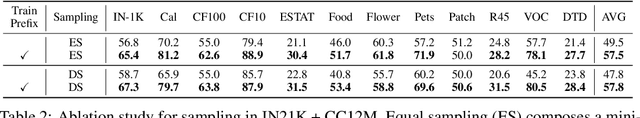

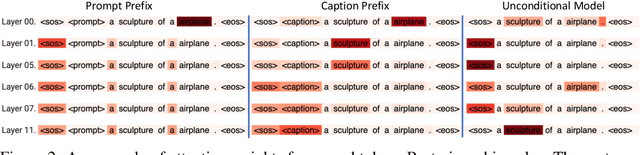

Prefix Conditioning Unifies Language and Label Supervision

Jun 02, 2022

Abstract:Vision-language contrastive learning suggests a new learning paradigm by leveraging a large amount of image-caption-pair data. Caption supervision excels at providing wide vocabulary coverage, which enables strong zero-shot image recognition performance. On the other hand, label supervision makes it possible to learn more targeted visual representations that are label-oriented and can cover rare categories. To gain the complementary advantages of both kinds of supervision for contrastive image-caption pre-training, recent works have proposed to convert class labels into sentences using pre-defined templates called prompts. However, a naive unification of real captions and prompt sentences could complicate learning, as the distribution shift in text may not be handled properly in the language encoder. In this work, we propose a simple yet effective approach to unify these two types of supervision using prefix tokens that inform a language encoder of the type of the input sentence (e.g., caption or prompt) at training time. Our method is generic and can be easily integrated into existing VL pre-training objectives such as CLIP or UniCL. In experiments, we show that this simple technique dramatically improves the zero-shot image recognition accuracy of the pre-trained model.
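
The prefix idea above can be sketched at the text level as follows. This is only an illustration under stated assumptions: the tag strings, the prompt template, and the function names are hypothetical, and an actual implementation would more likely use learned prefix token embeddings inside the language encoder than literal string tags.

```python
# Hypothetical prefix tags marking where each training sentence came from.
PREFIX_TOKENS = {"caption": "<caption>", "prompt": "<prompt>"}


def label_to_prompt(label):
    """Turn a class label into a sentence using an assumed template."""
    return f"a photo of a {label}"


def with_prefix(text, source):
    """Prepend the tag for the sentence type ('caption' or 'prompt')."""
    return f"{PREFIX_TOKENS[source]} {text}"


# A mixed batch: one real caption and one label-derived prompt.
batch = [
    with_prefix("a dog running on the beach", "caption"),
    with_prefix(label_to_prompt("dog"), "prompt"),
]
```

Tagging each sentence with its source lets the encoder treat the two text distributions differently during training instead of mixing them blindly.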
