Kumar Shaurya Shankar

MRFMap: Online Probabilistic 3D Mapping using Forward Ray Sensor Models

Jun 13, 2020

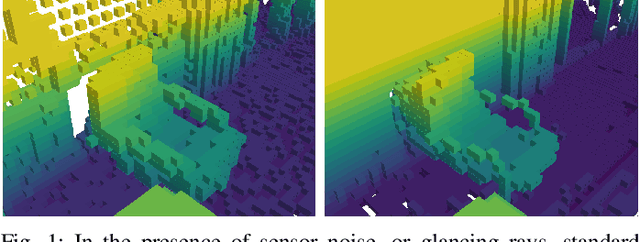

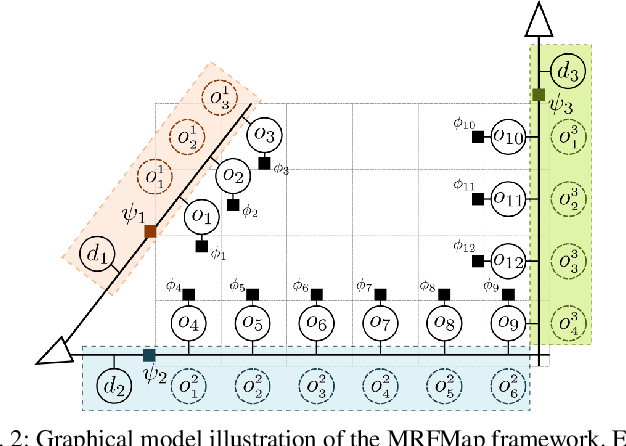

Abstract: Traditional dense volumetric representations for robotic mapping make simplifying assumptions about sensor noise characteristics due to computational constraints. We present a framework that, unlike conventional occupancy grid maps, explicitly models the sensor ray formation for a depth sensor via a Markov Random Field and performs loopy belief propagation to infer the marginal probability of occupancy at each voxel in a map. By explicitly reasoning about occlusions, our approach models the correlations between adjacent voxels in the map. Further, by incorporating learnt sensor noise characteristics, we perform accurate inference even with noisy sensor data without ad-hoc definitions of sensor uncertainty. We propose a new metric for evaluating probabilistic volumetric maps and demonstrate the higher fidelity of our approach on simulated as well as real-world datasets.
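As a rough illustration of what "modelling the sensor ray formation" can mean, the sketch below computes, for one ray, the probability that each voxel is the first occupied voxel the ray meets. It is a simplified stand-in, not the paper's MRF or its belief propagation: it assumes independent per-voxel occupancy probabilities, and the function name `first_hit_distribution` is hypothetical.

```python
import numpy as np

def first_hit_distribution(occupancy):
    """Return (hit, escape) for one ray.

    occupancy: per-voxel occupancy probabilities, ordered from the sensor outward.
    hit[i] is the probability that voxel i is the first occupied voxel on the ray;
    escape is the probability that every voxel on the ray is free.
    Assumption: voxels are treated as independent, which an MRF does not require.
    """
    occupancy = np.asarray(occupancy, dtype=float)
    free = 1.0 - occupancy
    # visibility[i] = probability that all voxels in front of voxel i are free
    visibility = np.concatenate(([1.0], np.cumprod(free)[:-1]))
    hit = visibility * occupancy
    escape = float(np.prod(free))
    return hit, escape

hit, escape = first_hit_distribution([0.1, 0.5, 0.9])
print(hit, escape)
```

The occlusion effect is visible in `visibility`: a likely-occupied voxel near the sensor lowers the chance that any voxel behind it explains the depth reading, which is the kind of coupling between voxels along a ray that the abstract refers to.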

RaD-VIO: Rangefinder-aided Downward Visual-Inertial Odometry

Oct 19, 2018

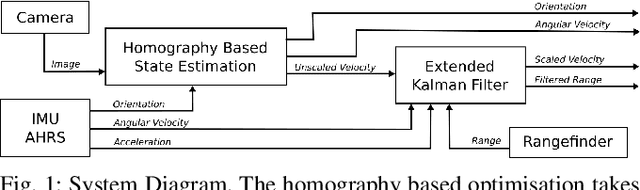

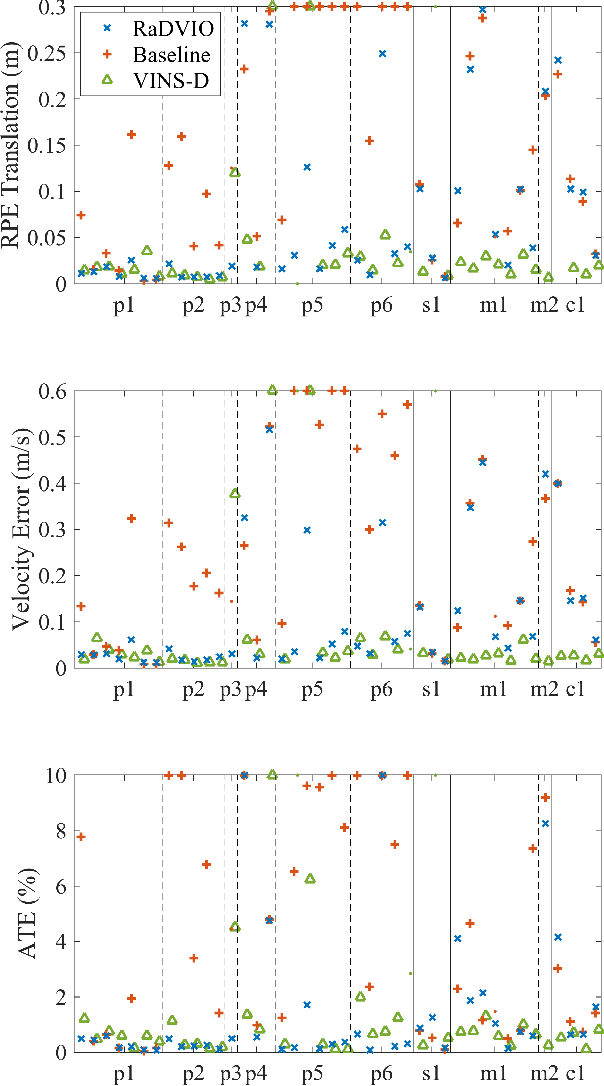

Abstract: State-of-the-art forward-facing monocular visual-inertial odometry algorithms are often brittle in practice, especially whilst dealing with initialisation and motion in directions that render the state unobservable. In such cases, having a reliable complementary odometry algorithm enables robust and resilient flight. Using the common local planarity assumption, we present a fast, dense, and direct frame-to-frame visual-inertial odometry algorithm for downward-facing cameras that minimises a joint cost function involving a homography-based photometric cost and an IMU regularisation term. Via extensive evaluation in a variety of scenarios, we demonstrate performance superior to that of existing state-of-the-art downward-facing odometry algorithms for Micro Aerial Vehicles (MAVs).
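The local planarity assumption is what makes a homography usable here: if every observed point lies on one plane, the motion between two frames maps image points through a single 3x3 matrix. The sketch below shows only that geometric step, using the standard plane-induced homography H = R + t nᵀ / d in normalized image coordinates. The function names, the plane convention (nᵀX = d in the first camera frame), and the example numbers are assumptions for illustration; the paper's photometric cost and IMU term are not reproduced.

```python
import numpy as np

def planar_homography(R, t, n, d):
    """Homography induced by the plane n^T X = d (first camera frame).

    R, t map points from the first camera frame to the second: X2 = R @ X1 + t.
    """
    return R + np.outer(t, n) / d

def warp(H, pts):
    """Apply H to (N, 2) normalized image points and dehomogenize."""
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Downward-facing camera 2 m above flat ground, pure sideways motion.
R = np.eye(3)
t = np.array([0.1, 0.0, 0.0])
n = np.array([0.0, 0.0, 1.0])   # plane normal along the optical axis
d = 2.0                         # distance to the plane

X1 = np.array([0.4, -0.2, 2.0])             # a ground point in frame 1
x1 = X1[:2] / X1[2]                         # its normalized image point
X2 = R @ X1 + t
x2_direct = X2[:2] / X2[2]                  # projection after the motion
x2_warped = warp(planar_homography(R, t, n, d), x1[None, :])[0]
print(x2_direct, x2_warped)
```

A photometric cost built on this warp would compare image intensities at `x1` in the first frame with intensities at the warped location in the second frame, and optimize over the motion parameters. For pixel coordinates, the homography would be conjugated with the camera intrinsics, K H K⁻¹.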

Fast Monte-Carlo Localization on Aerial Vehicles using Approximate Continuous Belief Representations

Mar 29, 2018

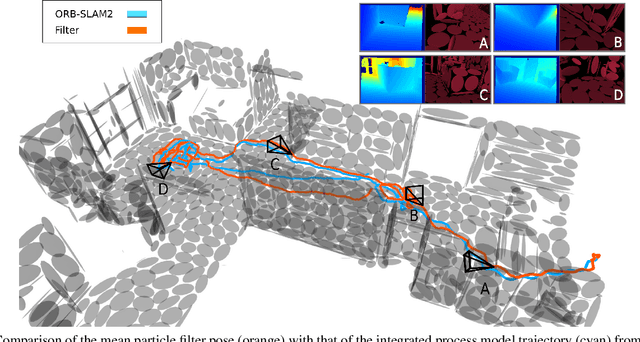

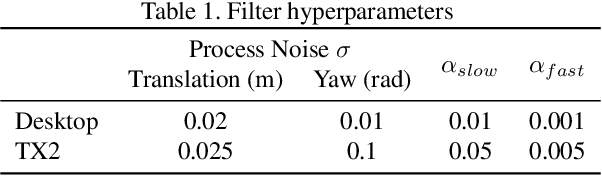

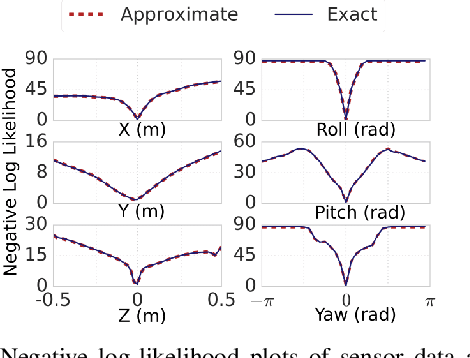

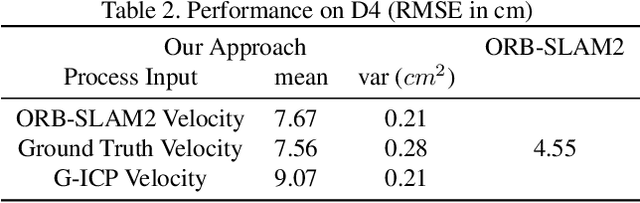

Abstract: Size, weight, and power constrained platforms impose constraints on computational resources that introduce unique challenges in implementing localization algorithms. We present a framework to perform fast localization on such platforms enabled by the compressive capabilities of Gaussian Mixture Model representations of point cloud data. Given raw structural data from a depth sensor and pitch and roll estimates from an on-board attitude reference system, a multi-hypothesis particle filter localizes the vehicle by exploiting the likelihood of the data originating from the mixture model. We analyze this likelihood in the vicinity of the ground truth pose and detail its use in a particle filter-based vehicle localization strategy. We then present results of real-time implementations on a desktop system and an off-the-shelf embedded platform that outperform localization results from running a state-of-the-art algorithm on the same environment.
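A minimal sketch of the weighting step such a filter might use: each particle transforms the depth points into the map frame, and its weight is the likelihood of those points under the map's Gaussian mixture. Everything concrete here is an assumption for illustration, including the function names, the (x, y, z, yaw) particle state (chosen because pitch and roll are said to come from the attitude reference), and the premise that the scan has already been levelled with those estimates.

```python
import numpy as np

def gmm_log_likelihood(points, mix, means, covs):
    """Sum over points of log p(point) under a Gaussian mixture."""
    dim = points.shape[1]
    log_terms = []
    for w, mu, cov in zip(mix, means, covs):
        diff = points - mu
        maha = np.einsum("ni,ij,nj->n", diff, np.linalg.inv(cov), diff)
        _, logdet = np.linalg.slogdet(cov)
        log_terms.append(np.log(w) - 0.5 * (maha + logdet + dim * np.log(2 * np.pi)))
    log_terms = np.stack(log_terms)                 # shape (K, N)
    peak = log_terms.max(axis=0)                    # log-sum-exp for stability
    return float(np.sum(peak + np.log(np.exp(log_terms - peak).sum(axis=0))))

def particle_weights(scan, particles, mix, means, covs):
    """Normalized weights for particles given as rows of (x, y, z, yaw)."""
    log_w = []
    for x, y, z, yaw in particles:
        c, s = np.cos(yaw), np.sin(yaw)
        R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
        world = scan @ R.T + np.array([x, y, z])    # sensor frame -> map frame
        log_w.append(gmm_log_likelihood(world, mix, means, covs))
    log_w = np.array(log_w)
    w = np.exp(log_w - log_w.max())                 # avoid underflow
    return w / w.sum()

# Toy map with two components; the scan was taken from the origin.
mix = np.array([0.5, 0.5])
means = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
covs = np.array([0.01 * np.eye(3), 0.01 * np.eye(3)])
scan = np.array([[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
particles = np.array([[0.0, 0.0, 0.0, 0.0], [0.5, 0.0, 0.0, 0.0]])
w = particle_weights(scan, particles, mix, means, covs)
print(w)
```

The particle at the true pose receives nearly all of the weight in this toy case. Working in log space matters in practice, since the product of many per-point likelihoods underflows quickly.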

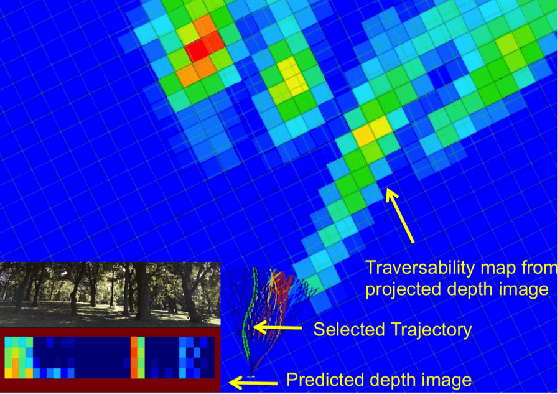

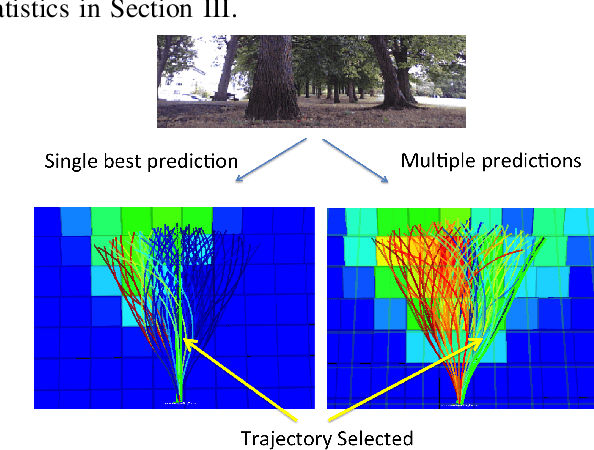

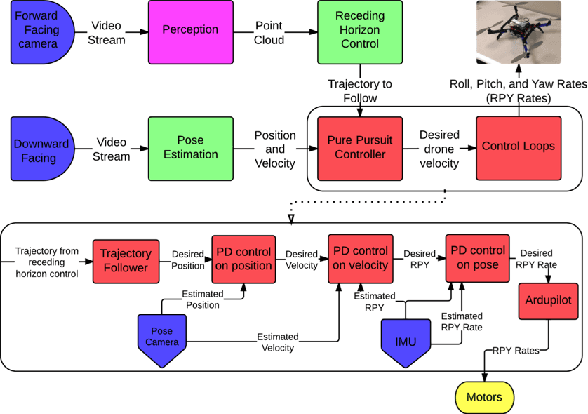

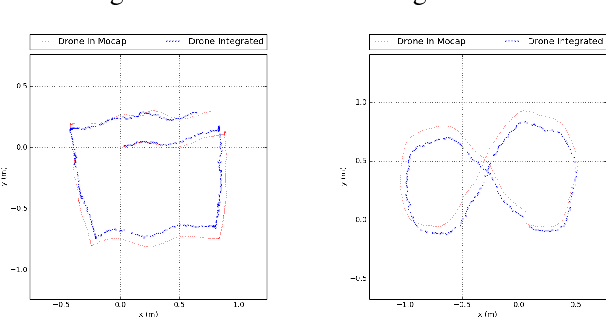

Vision and Learning for Deliberative Monocular Cluttered Flight

Nov 24, 2014

Abstract: Cameras provide a rich source of information while being passive, cheap and lightweight for small and medium Unmanned Aerial Vehicles (UAVs). In this work we present the first implementation of receding horizon control, which is widely used in ground vehicles, with monocular vision as the only sensing mode for autonomous UAV flight in dense clutter. We make it feasible on UAVs via a number of contributions: novel coupling of perception and control via relevant and diverse, multiple interpretations of the scene around the robot, leveraging recent advances in machine learning to showcase anytime budgeted cost-sensitive feature selection, and fast non-linear regression for monocular depth prediction. We empirically demonstrate the efficacy of our novel pipeline via real-world experiments of more than 2 km through dense trees with a quadrotor built from off-the-shelf parts. Moreover, our pipeline is designed to also combine information from other modalities such as stereo and lidar, if available.

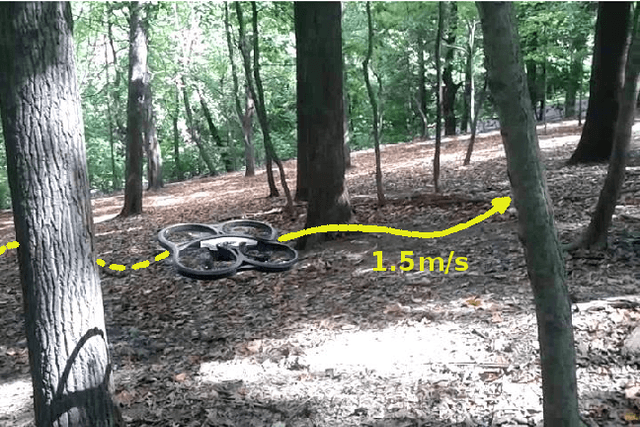

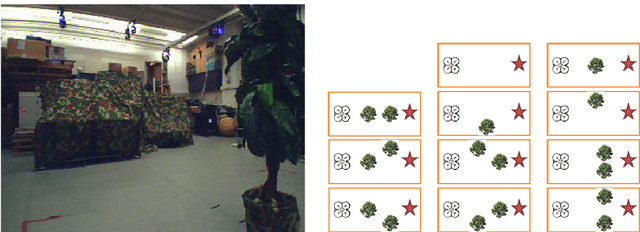

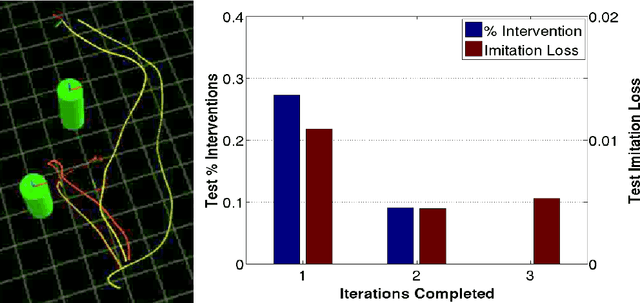

Learning Monocular Reactive UAV Control in Cluttered Natural Environments

Nov 07, 2012

Abstract: Autonomous navigation for large Unmanned Aerial Vehicles (UAVs) is fairly straightforward, as expensive sensors and monitoring devices can be employed. In contrast, obstacle avoidance remains a challenging task for Micro Aerial Vehicles (MAVs) which operate at low altitude in cluttered environments. Unlike large vehicles, MAVs can only carry very light sensors, such as cameras, making autonomous navigation through obstacles much more challenging. In this paper, we describe a system that navigates a small quadrotor helicopter autonomously at low altitude through natural forest environments. Using only a single cheap camera to perceive the environment, we are able to maintain a constant velocity of up to 1.5 m/s. Given a small set of human pilot demonstrations, we use recent state-of-the-art imitation learning techniques to train a controller that can avoid trees by adapting the MAV's heading. We demonstrate the performance of our system in a more controlled environment indoors, and in real natural forest environments outdoors.