Sam Zeng

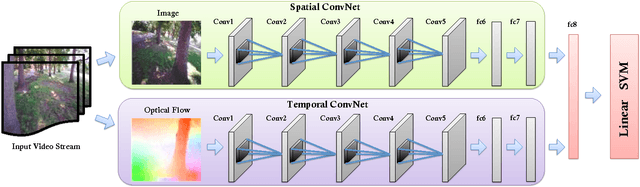

Introspective Perception: Learning to Predict Failures in Vision Systems

Jul 28, 2016

Abstract: As robots aspire to long-term autonomous operation in complex dynamic environments, the ability to reliably make mission-critical decisions in ambiguous situations becomes critical. This motivates the need to build systems that have the situational awareness to assess how qualified they are at that moment to make a decision. We call this self-evaluating capability introspection. In this paper, we take a small step in this direction and propose a generic framework for introspective behavior in perception systems. Our goal is to learn a model to reliably predict failures in a given system, with respect to a task, directly from input sensor data. We present this in the context of vision-based autonomous MAV flight in outdoor natural environments, and show that it effectively handles uncertain situations.
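The idea of learning to predict failures directly from input data can be illustrated with a toy example. The sketch below is not the paper's method: it assumes a single hypothetical input feature (a "texture score" in [0, 1]), a synthetic failure log in which the perception system fails on low-texture inputs, and a plain logistic regression as a stand-in for the failure-prediction model. All function names and parameters are illustrative.

```python
import math
import random


def make_synthetic_log(n=200, seed=0):
    """Hypothetical log: one texture score per frame, failure if score < 0.3."""
    rng = random.Random(seed)
    features = [[rng.random()] for _ in range(n)]
    failed = [1 if x[0] < 0.3 else 0 for x in features]
    return features, failed


def predict_failure(w, b, x):
    """Return the estimated probability that the system fails on input x."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    z = max(-60.0, min(60.0, z))  # clamp to keep exp() well behaved
    return 1.0 / (1.0 + math.exp(-z))


def train_failure_predictor(features, failed, lr=1.0, epochs=2000):
    """Fit logistic regression by full-batch gradient descent."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, failed):
            err = predict_failure(w, b, x) - y
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def should_trust(w, b, x, threshold=0.5):
    """Introspective check: trust the system only if predicted failure is low."""
    return predict_failure(w, b, x) < threshold
```

In use, one would train on logged (input, outcome) pairs and call `should_trust` before acting on the perception output, falling back to a safer behavior when it returns `False`.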

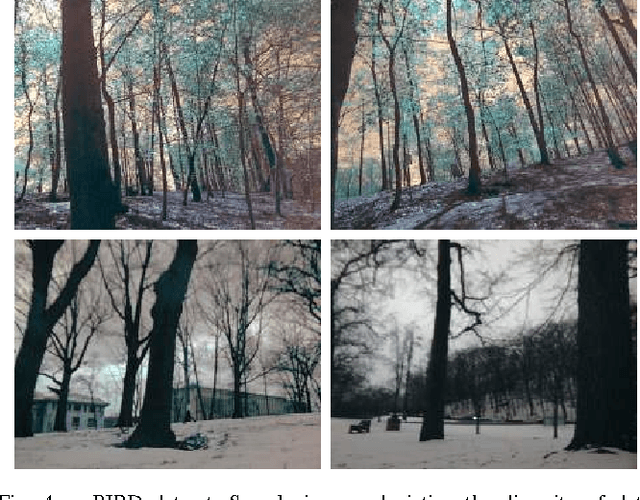

Robust Monocular Flight in Cluttered Outdoor Environments

Apr 16, 2016

Abstract: Recently, there have been numerous advances in the development of biologically inspired lightweight Micro Aerial Vehicles (MAVs). While autonomous navigation is fairly straightforward for large UAVs, as expensive sensors and monitoring devices can be employed, robust obstacle avoidance remains a challenging task for MAVs, which operate at low altitude in cluttered unstructured environments. Due to payload and power constraints, it is necessary for such systems to have autonomous navigation and flight capabilities using mostly passive sensors such as cameras. In this paper, we describe a robust system that enables autonomous navigation of small agile quad-rotors at low altitude through natural forest environments. We present a direct depth estimation approach that is capable of producing accurate, semi-dense depth maps in real time. Furthermore, a novel wind-resistant control scheme is presented that enables stable waypoint tracking even in the presence of strong winds. We demonstrate the performance of our system through extensive experiments on real images and field tests in a cluttered outdoor environment.

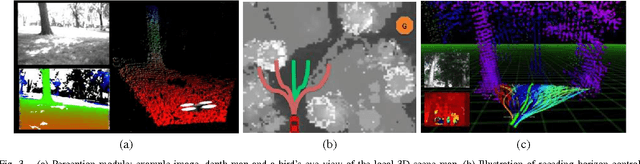

Vision and Learning for Deliberative Monocular Cluttered Flight

Nov 24, 2014

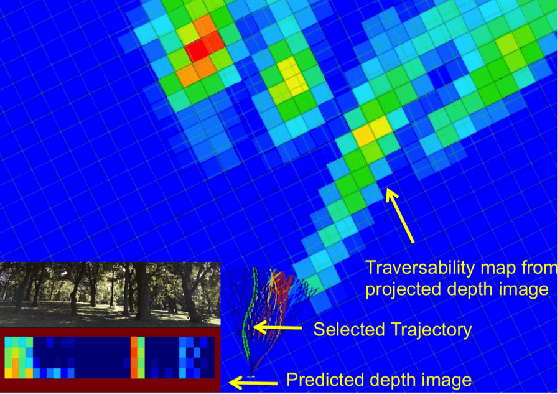

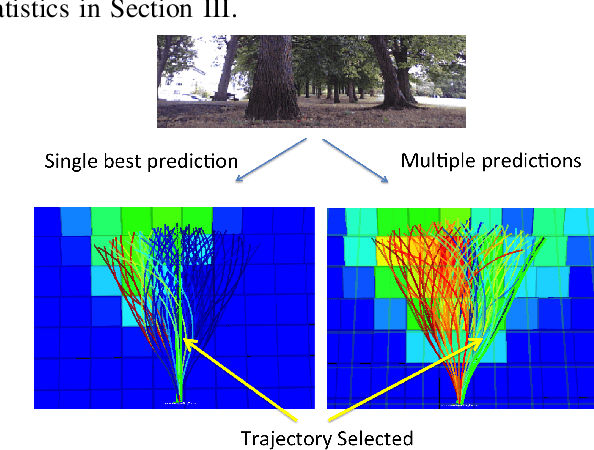

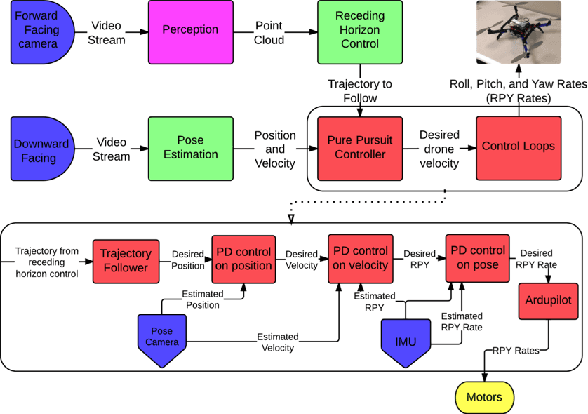

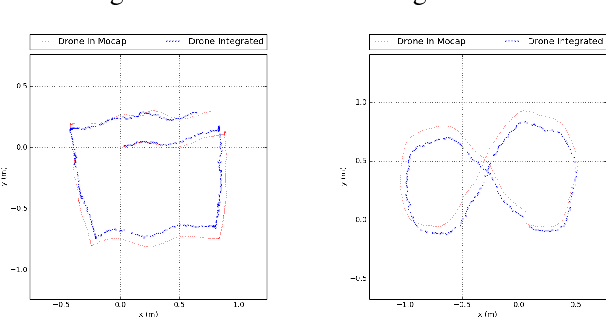

Abstract: Cameras provide a rich source of information while being passive, cheap and lightweight for small and medium Unmanned Aerial Vehicles (UAVs). In this work we present the first implementation of receding horizon control, which is widely used in ground vehicles, with monocular vision as the only sensing mode for autonomous UAV flight in dense clutter. We make it feasible on UAVs via a number of contributions: a novel coupling of perception and control via relevant and diverse multiple interpretations of the scene around the robot, leveraging recent advances in machine learning to showcase anytime budgeted cost-sensitive feature selection, and fast non-linear regression for monocular depth prediction. We empirically demonstrate the efficacy of our novel pipeline via real-world experiments of more than 2 km through dense trees with a quadrotor built from off-the-shelf parts. Moreover, our pipeline is designed to combine information from other modalities such as stereo and lidar when available.
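For readers unfamiliar with receding horizon control, the loop can be sketched in a few lines: score a set of candidate trajectories against the current obstacle estimate, commit only to the first step of the best one, then replan from the new position. The sketch below is a generic 2D toy, not the authors' pipeline. It assumes obstacle positions are already known (in the paper they would come from predicted depth), and the candidate set, cost function, and all names and parameters are hypothetical.

```python
import math


def candidate_paths(pos, horizon=5, step=1.0, lateral_rates=(0.0, -0.5, 0.5)):
    """Straight-line candidates that advance in +y while drifting sideways.

    The straight-ahead candidate is listed first so it wins cost ties.
    """
    x0, y0 = pos
    return [
        [(x0 + r * step * k, y0 + step * k) for k in range(1, horizon + 1)]
        for r in lateral_rates
    ]


def trajectory_cost(path, obstacles, clearance=1.0):
    """Penalize every waypoint that comes closer than `clearance` to an obstacle."""
    cost = 0.0
    for x, y in path:
        for ox, oy in obstacles:
            d = math.hypot(x - ox, y - oy)
            if d < clearance:
                cost += clearance - d
    return cost


def receding_horizon_step(pos, obstacles):
    """Plan over the full horizon, but execute only the first waypoint."""
    best = min(candidate_paths(pos), key=lambda p: trajectory_cost(p, obstacles))
    return best[0]


def fly(start, obstacles, steps):
    """Repeat plan-execute-replan and return the positions visited."""
    pos = start
    trace = [pos]
    for _ in range(steps):
        pos = receding_horizon_step(pos, obstacles)
        trace.append(pos)
    return trace
```

Calling `fly((0.0, 0.0), [(0.0, 3.0)], 6)` steers around the obstacle and then continues straight, because the plan is re-evaluated at every step rather than followed to the end of the horizon.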
