MRFMap: Online Probabilistic 3D Mapping using Forward Ray Sensor Models


Jun 13, 2020

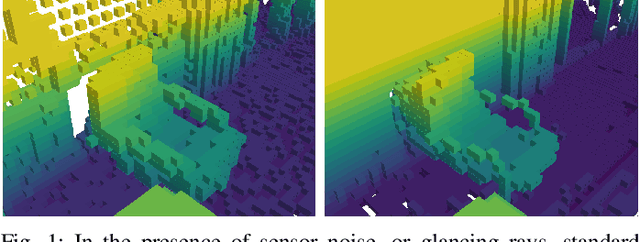

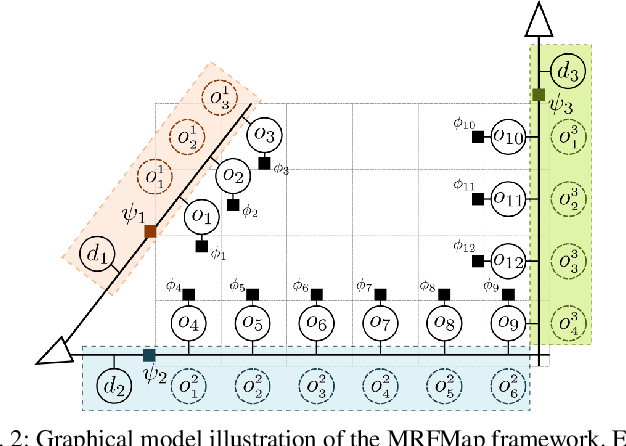

Traditional dense volumetric representations for robotic mapping make simplifying assumptions about sensor noise characteristics due to computational constraints. We present a framework that, unlike conventional occupancy grid maps, explicitly models the sensor ray formation for a depth sensor via a Markov Random Field and performs loopy belief propagation to infer the marginal probability of occupancy at each voxel in a map. By explicitly reasoning about occlusions, our approach models the correlations between adjacent voxels in the map. Further, by incorporating learnt sensor noise characteristics, we perform accurate inference even with noisy sensor data, without ad-hoc definitions of sensor uncertainty. We propose a new metric for evaluating probabilistic volumetric maps and demonstrate the higher fidelity of our approach on simulated as well as real-world datasets.
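To make the idea of a forward ray model concrete, here is a minimal sketch in Python. It is not taken from the paper: it assumes the textbook simplification in which a ray stops at the first occupied voxel and voxel occupancies along the ray are treated as independent. The function name `ray_termination_probs` and the example values are hypothetical. The paper's actual formulation (a Markov Random Field with loopy belief propagation and learnt sensor noise) is richer than this.

```python
import numpy as np

def ray_termination_probs(occupancy):
    """Illustrative forward ray model (an assumption, not the paper's code).

    occupancy: per-voxel occupancy probabilities, ordered from the sensor outward.
    Returns (hit, miss): hit[i] is the probability that the ray first terminates
    in voxel i; miss is the probability that it passes through every voxel.
    """
    occ = np.asarray(occupancy, dtype=float)
    # visibility[i]: probability that all voxels in front of voxel i are free
    visibility = np.concatenate(([1.0], np.cumprod(1.0 - occ)[:-1]))
    # The ray stops in voxel i if it reaches voxel i and voxel i is occupied
    hit = visibility * occ
    # The ray escapes if every voxel along it is free
    miss = np.prod(1.0 - occ)
    return hit, miss

hit, miss = ray_termination_probs([0.1, 0.5, 0.9])
print(hit, miss)
```

Because each termination probability depends on the occupancy of every voxel in front of it, voxels along a ray become coupled through occlusion, which is the kind of correlation the abstract says conventional occupancy grids ignore.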
