Kuan-Hsun Chen

Reducing Compute Waste in LLMs through Kernel-Level DVFS

Jan 13, 2026

Abstract:The rapid growth of AI has fueled the expansion of accelerator- or GPU-based data centers. However, the rising operational energy consumption has emerged as a critical bottleneck and a major sustainability concern. Dynamic Voltage and Frequency Scaling (DVFS) is a well-known technique used to reduce energy consumption, and thus improve energy-efficiency, since it requires little effort and works with existing hardware. Reducing the energy consumption of training and inference of Large Language Models (LLMs) through DVFS or power capping is feasible: related work has shown energy savings can be significant, but at the cost of significant slowdowns. In this work, we focus on reducing waste in LLM operations: i.e., reducing energy consumption without losing performance. We propose a fine-grained, kernel-level, DVFS approach that explores new frequency configurations, and prove these save more energy than previous, pass- or iteration-level solutions. For example, for a GPT-3 training run, a pass-level approach could reduce energy consumption by 2% (without losing performance), while our kernel-level approach saves as much as 14.6% (with a 0.6% slowdown). We further investigate the effect of data and tensor parallelism, and show our discovered clock frequencies translate well for both. We conclude that kernel-level DVFS is a suitable technique to reduce waste in LLM operations, providing significant energy savings with negligible slow-down.
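The idea of choosing a clock frequency per kernel rather than per pass can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the kernel names, the frequencies, the `(time, energy)` values, and the selection rule in `pick_kernel_frequencies` are hypothetical and are not the search procedure or measurements from the paper.

```python
def pick_kernel_frequencies(profile, base_freq, tolerance=0.0):
    """For each kernel, pick the lowest-energy frequency whose run time stays
    within (1 + tolerance) of the run time at the baseline frequency."""
    choice = {}
    for kernel, runs in profile.items():
        time_limit = runs[base_freq][0] * (1.0 + tolerance)
        # The baseline frequency is always feasible, so this list is never empty.
        feasible = [f for f, (t, _) in runs.items() if t <= time_limit]
        choice[kernel] = min(feasible, key=lambda f: runs[f][1])
    return choice


def total_energy(profile, choice):
    """Sum the energy of every kernel at its chosen frequency."""
    return sum(profile[kernel][freq][1] for kernel, freq in choice.items())


# Hypothetical profile: kernel -> {frequency in MHz: (time in ms, energy in J)}.
profile = {
    "matmul": {1980: (10.0, 5.0), 1500: (12.5, 4.6)},    # slows down at the lower clock
    "layernorm": {1980: (2.0, 1.0), 1500: (2.0, 0.7)},   # same time at the lower clock
}

choice = pick_kernel_frequencies(profile, base_freq=1980)
baseline = {kernel: 1980 for kernel in profile}
print(choice, total_energy(profile, choice), total_energy(profile, baseline))
```

In this toy profile a single frequency for both kernels either wastes energy on `layernorm` or slows down `matmul`, whereas a per-kernel choice lowers only the clock of the kernel that does not slow down.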

InTreeger: An End-to-End Framework for Integer-Only Decision Tree Inference

May 21, 2025

Abstract:Integer quantization has emerged as a critical technique to facilitate deployment on resource-constrained devices. Although quantization techniques do reduce the complexity of the learning models, the resulting inference performance is often prone to quantization-induced errors. To this end, we introduce InTreeger: an end-to-end framework that takes a training dataset as input, and outputs an architecture-agnostic integer-only C implementation of a tree-based machine learning model, without loss of precision. This framework enables anyone, even those without prior experience in machine learning, to generate a highly optimized integer-only classification model that can run on any hardware simply by providing an input dataset and target variable. We evaluated our generated implementations across three different architectures (ARM, x86, and RISC-V), resulting in significant improvements in inference latency. In addition, we show the energy efficiency compared to typical decision tree implementations that rely on floating-point arithmetic. The results underscore the advantages of integer-only inference, making it particularly suitable for energy- and area-constrained devices such as embedded systems and edge computing platforms, while also enabling the execution of decision trees on existing ultra-low power devices.

WCDT: Systematic WCET Optimization for Decision Tree Implementations

Jan 29, 2025

Abstract:Machine-learning models are increasingly deployed on resource-constrained embedded systems with strict timing constraints. In such scenarios, the worst-case execution time (WCET) of the models is required to ensure safe operation. Specifically, decision trees are a prominent class of machine-learning models and the main building blocks of tree-based ensemble models (e.g., random forests), which are commonly employed in resource-constrained embedded systems. In this paper, we develop a systematic approach for WCET optimization of decision tree implementations. To this end, we introduce a linear surrogate model that estimates the execution time of individual paths through a decision tree based on the path's length and the number of taken branches. We provide an optimization algorithm that constructively builds a WCET-optimal implementation of a given decision tree with respect to this surrogate model. We experimentally evaluate both the surrogate model and the WCET-optimization algorithm. The evaluation shows that the optimization algorithm improves analytically determined WCET by up to $17\%$ compared to an unoptimized implementation.
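A linear surrogate of this kind can be sketched as a per-path cost that grows with the path length and with the number of taken branches. The sketch below is an illustration under assumptions: the coefficients `C_NODE` and `C_TAKEN`, the tuple-based tree encoding, and the bottom-up rule in `wcet_optimized` are hypothetical and are not taken from the paper.

```python
C_NODE = 10.0   # hypothetical cost per visited inner node
C_TAKEN = 3.0   # hypothetical extra cost when a branch is taken

# A tree is either None (leaf) or a pair (left_subtree, right_subtree).


def wcet_fixed(tree):
    """Surrogate worst-case cost when the left child always falls through
    and the right child is always reached via a taken branch."""
    if tree is None:
        return 0.0
    left, right = tree
    return C_NODE + max(wcet_fixed(left), C_TAKEN + wcet_fixed(right))


def wcet_optimized(tree):
    """Surrogate worst-case cost when each node may choose which child falls
    through; the taken-branch penalty goes to the cheaper subtree."""
    if tree is None:
        return 0.0
    left, right = tree
    w_left, w_right = wcet_optimized(left), wcet_optimized(right)
    cheap, costly = min(w_left, w_right), max(w_left, w_right)
    return C_NODE + max(costly, C_TAKEN + cheap)


# A right-leaning chain of three inner nodes.
chain = (None, (None, (None, None)))
print(wcet_fixed(chain), wcet_optimized(chain))
```

Under this surrogate the subtrees can be handled independently, so deciding the branch orientation bottom-up minimizes the worst-case path cost for the toy model.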

TREE: Tree Regularization for Efficient Execution

Jun 18, 2024

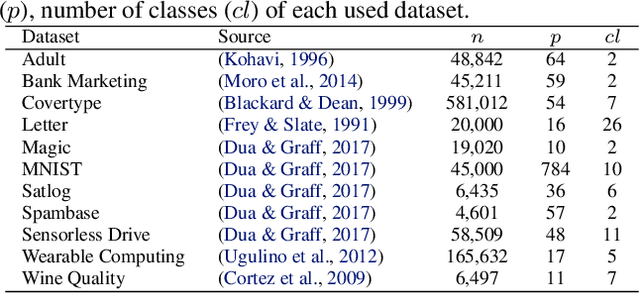

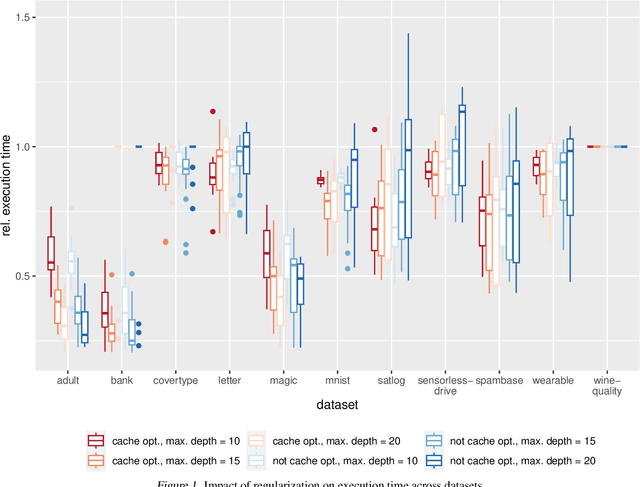

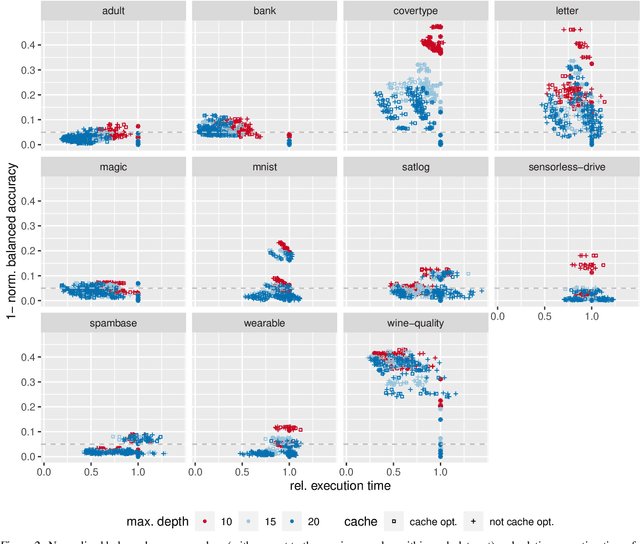

Abstract:The rise of machine learning methods on heavily resource-constrained devices requires not only the choice of a suitable model architecture for the target platform, but also the optimization of the chosen model with regard to the execution time of inference in order to optimally utilize the available resources. Random forests and decision trees are shown to be a suitable model for such a scenario, since they are not only heavily tunable towards the total model size, but also offer a high potential for optimizing their executions according to the underlying memory architecture. In addition to the straightforward strategy of enforcing shorter paths through decision trees and hence reducing the execution time for inference, hardware-aware implementations can optimize the execution time in an orthogonal manner. One particular hardware-aware optimization is to lay out the memory of decision trees in such a way that higher-probability paths are less likely to be evicted from system caches. This works particularly well when splits within tree nodes are uneven and have a high probability to visit one of the child nodes. In this paper, we present a method to reduce path lengths by rewarding uneven probability distributions during the training of decision trees at the cost of a minimal accuracy degradation. Specifically, we regularize the impurity computation of the CART algorithm in order to favor not only low impurity, but also highly asymmetric distributions for the evaluation of split criteria and hence offer a high optimization potential for a memory architecture-aware implementation. We show that especially for binary classification data sets and data sets with many samples, this form of regularization can lead to a reduction of up to approximately four times in the execution time with a minimal accuracy degradation.
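A regularized split criterion of this flavor can be sketched as the usual weighted Gini impurity plus a penalty on evenly sized splits. The abstract does not state the exact regularizer, so the penalty term, the weight `lam`, and the function names below are assumptions chosen only to illustrate the principle.

```python
def gini(counts):
    """Gini impurity of a node given its per-class sample counts."""
    n = sum(counts)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in counts)


def regularized_split_score(left_counts, right_counts, lam=0.1):
    """Weighted Gini impurity of a split plus a penalty for balanced splits.

    Lower is better. `lam` is a hypothetical regularization weight; with
    lam=0 this is the plain weighted Gini impurity of the split.
    """
    n_left, n_right = sum(left_counts), sum(right_counts)
    n = n_left + n_right
    impurity = (n_left / n) * gini(left_counts) + (n_right / n) * gini(right_counts)
    asymmetry = abs(n_left - n_right) / n   # 0 for an even split, close to 1 for a lopsided one
    return impurity + lam * (1.0 - asymmetry)


# Two splits that are equally pure but differ in how evenly they divide the samples.
even_split = regularized_split_score([50, 0], [0, 50])
uneven_split = regularized_split_score([90, 0], [0, 10])
print(even_split, uneven_split)
```

With equal impurity, the uneven split receives the lower score, so a learner using this criterion would prefer the split that sends most samples to one child.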

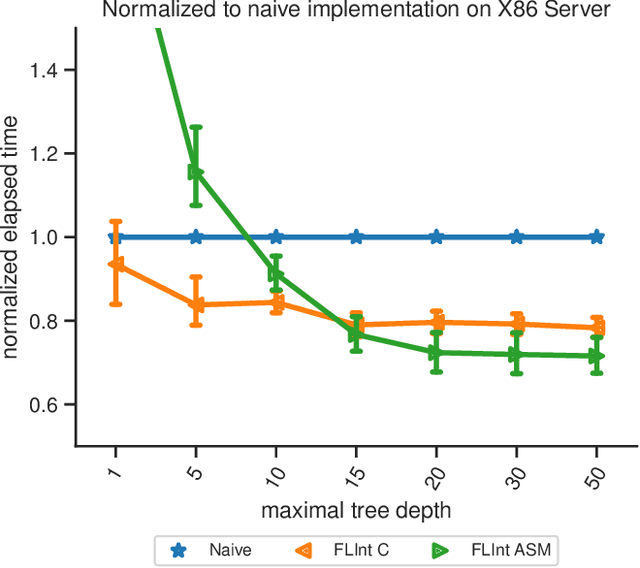

Register Your Forests: Decision Tree Ensemble Optimization by Explicit CPU Register Allocation

Apr 10, 2024

Abstract:Bringing high-level machine learning models to efficient and well-suited machine implementations often involves a number of tools, e.g., code generators, compilers, and optimizers. Along such tool chains, abstractions have to be applied. This leads to suboptimal use of CPU registers, which is a shortcoming, especially in resource-constrained embedded setups. In this work, we present a code generation approach for decision tree ensembles, which produces machine assembly code within a single conversion step directly from the high-level model representation. Specifically, we develop various approaches to effectively allocate registers for the inference of decision tree ensembles. Extensive evaluations of the proposed method are conducted in comparison to the basic realization of C code from the high-level machine learning model and succeeding compilation. The results show that the performance of decision tree ensemble inference can be significantly improved (by up to $\approx1.6\times$), if the methods are applied carefully to the appropriate scenario.

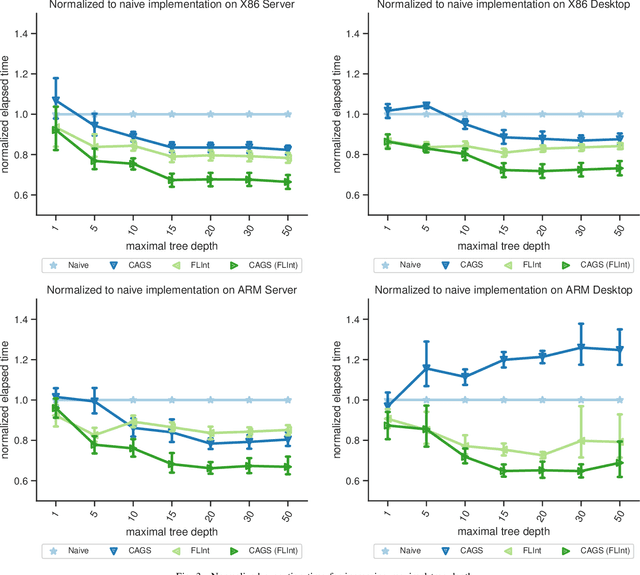

FLInt: Exploiting Floating Point Enabled Integer Arithmetic for Efficient Random Forest Inference

Sep 09, 2022

Abstract:In many machine learning applications, e.g., tree-based ensembles, floating point numbers are extensively utilized due to their expressiveness. Nowadays, performing data analysis on embedded devices from dynamic data masses is becoming feasible, but such systems often lack hardware capabilities to process floating point numbers, introducing large overheads for their processing. Even if such hardware is present in general computing systems, using integer operations instead of floating point operations promises to reduce operation overheads and improve the performance. In this paper, we provide FLInt, a full precision floating point comparison for random forests, by only using integer and logic operations. To ensure that the same functionality is preserved, we formally prove the correctness of this comparison. Since random forests only require comparison of floating point numbers during inference, we implement FLInt in low level realizations and therefore eliminate the need for floating point hardware entirely, while keeping the model accuracy unchanged. The usage of FLInt basically boils down to a one-by-one replacement of conditions: For instance, a comparison statement in C: `if(pX[3]<=(float)10.074347)` becomes `if((*(((int*)(pX))+3))<=((int)(0x41213087)))`. Experimental evaluation on X86 and ARMv8 desktop and server class systems shows that the execution time can be reduced by up to $\approx 30\%$ with our novel approach.
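The principle behind replacing a floating point comparison with an integer one can be sketched in a few lines. This is an illustration, not the FLInt implementation: the helper names `float32_bits` and `flint_le` are hypothetical, the sign handling shown is one common way to order IEEE 754 bit patterns, and NaN and negative zero are deliberately left out of scope.

```python
import struct


def float32_bits(x):
    """Reinterpret the IEEE 754 binary32 encoding of x as a signed 32-bit integer."""
    return struct.unpack("<i", struct.pack("<f", x))[0]


def flint_le(feature_bits, threshold_bits):
    """Evaluate feature <= threshold on raw float32 bit patterns using only
    integer and logic operations (assumes no NaN and no negative zero)."""
    def key(bits):
        # Non-negative floats are ordered like their bit patterns. Negative floats
        # are sign-magnitude, so flip the magnitude bits to reverse their order.
        return bits if bits >= 0 else bits ^ 0x7FFFFFFF
    return key(feature_bits) <= key(threshold_bits)


THRESHOLD_BITS = 0x41213087  # threshold constant from the abstract's example

print(flint_le(float32_bits(9.5), THRESHOLD_BITS))
print(flint_le(float32_bits(10.5), THRESHOLD_BITS))
```

For the non-negative threshold in the abstract's example, a non-negative feature value can be compared directly on its bit pattern; the extra XOR only matters when negative values are involved.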

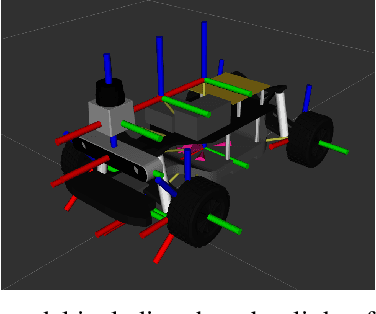

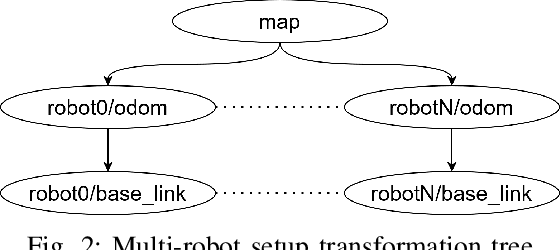

AuNa: Modularly Integrated Simulation Framework for Cooperative Autonomous Navigation

Jul 12, 2022

Abstract:In the near future, the development of autonomous driving will get more complex as the vehicles will not only rely on their own sensors but also communicate with other vehicles and the infrastructure to cooperate and improve the driving experience. Towards this, several research areas, such as robotics, communication, and control, are required to collaborate in order to implement future-ready methods. However, each area focuses on the development of its own components first, while the effects the components may have on the whole system are only considered at a later stage. In this work, we integrate the simulation tools of robotics, communication, and control, namely ROS2, OMNeT++, and MATLAB, to evaluate cooperative driving scenarios. The framework can be utilized to develop the individual components using the designated tools, while the final evaluation can be conducted in a complete scenario, enabling the simulation of advanced multi-robot applications for cooperative driving. Furthermore, it can be used to integrate additional tools, as the integration is done in a modular way. We showcase the framework by demonstrating a platooning scenario under cooperative adaptive cruise control (CACC) and the ETSI ITS-G5 communication architecture. Additionally, we compare the controller performance between the theoretical analysis and the practical case study.

Bit Error Tolerance Metrics for Binarized Neural Networks

Feb 02, 2021

Abstract:To reduce the resource demand of neural network (NN) inference systems, it has been proposed to use approximate memory, in which the supply voltage and the timing parameters are tuned trading accuracy with energy consumption and performance. Tuning these parameters aggressively leads to bit errors, which can be tolerated by NNs when bit flips are injected during training. However, bit flip training, which is the state of the art for achieving bit error tolerance, does not scale well; it leads to massive overheads and cannot be applied for high bit error rates (BERs). Alternative methods to achieve bit error tolerance in NNs are needed, but the underlying principles behind the bit error tolerance of NNs have not been reported yet. With this lack of understanding, further progress in the research on NN bit error tolerance will be restrained. In this study, our objective is to investigate the internal changes in the NNs that bit flip training causes, with a focus on binarized NNs (BNNs). To this end, we quantify the properties of bit error tolerant BNNs with two metrics. First, we propose a neuron-level bit error tolerance metric, which calculates the margin between the pre-activation values and batch normalization thresholds. Secondly, to capture the effects of bit error tolerance on the interplay of neurons, we propose an inter-neuron bit error tolerance metric, which measures the importance of each neuron and computes the variance over all importance values. Our experimental results support that these two metrics are strongly related to bit error tolerance.

Towards Explainable Bit Error Tolerance of Resistive RAM-Based Binarized Neural Networks

Feb 03, 2020

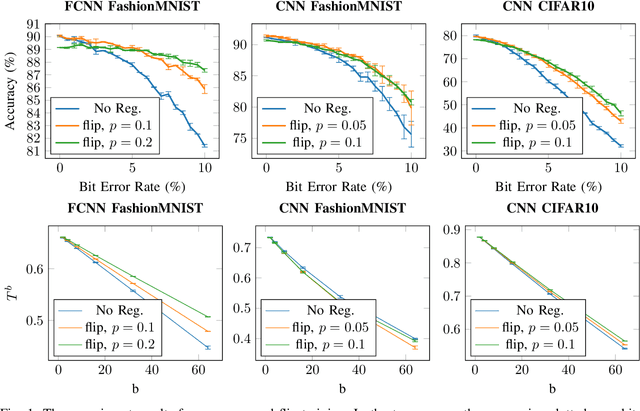

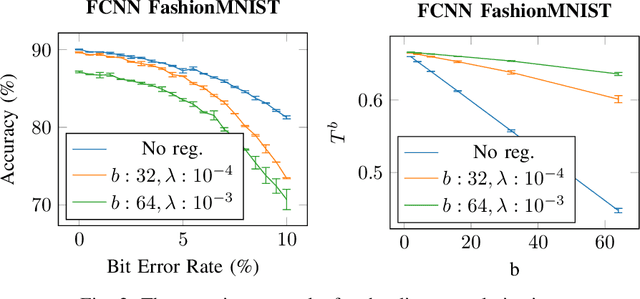

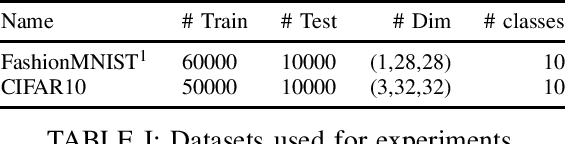

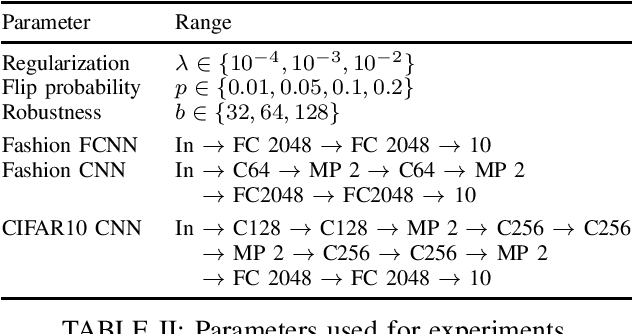

Abstract:Non-volatile memory, such as resistive RAM (RRAM), is an emerging energy-efficient storage, especially for low-power machine learning models on the edge. It is reported, however, that the bit error rate of RRAMs can be up to 3.3% in the ultra low-power setting, which might be crucial for many use cases. Binary neural networks (BNNs), a resource efficient variant of neural networks (NNs), can tolerate a certain percentage of errors without a loss in accuracy and demand lower resources in computation and storage. The bit error tolerance (BET) in BNNs can be achieved by flipping the weight signs during training, as proposed by Hirtzlin et al., but their method has a significant drawback, especially for fully connected neural networks (FCNN): The FCNNs overfit to the error rate used in training, which leads to low accuracy under lower error rates. In addition, the underlying principles of BET are not investigated. In this work, we improve the training for BET of BNNs and aim to explain this property. We propose straight-through gradient approximation to improve the weight-sign-flip training, by which BNNs adapt less to the bit error rates. To explain the achieved robustness, we define a metric that aims to measure BET without fault injection. We evaluate the metric and find that it correlates with accuracy over error rate for all FCNNs tested. Finally, we explore the influence of a novel regularizer that optimizes with respect to this metric, with the aim of providing a configurable trade-off in accuracy and BET.
