Kokil Jaidka

Learning Through Dialogue: Unpacking the Dynamics of Human-LLM Conversations on Political Issues

Jan 12, 2026

Abstract:Large language models (LLMs) are increasingly used as conversational partners for learning, yet the interactional dynamics supporting users' learning and engagement are understudied. We analyze the linguistic and interactional features from both LLM and participant chats across 397 human-LLM conversations about socio-political issues to identify the mechanisms and conditions under which LLM explanations shape changes in political knowledge and confidence. Mediation analyses reveal that LLM explanatory richness partially supports confidence by fostering users' reflective insight, whereas its effect on knowledge gain operates entirely through users' cognitive engagement. Moderation analyses show that these effects are highly conditional and vary by political efficacy. Confidence gains depend on how high-efficacy users experience and resolve uncertainty. Knowledge gains depend on high-efficacy users' ability to leverage extended interaction, with longer conversations benefiting primarily reflective users. In summary, we find that learning from LLMs is an interactional achievement, not a uniform outcome of better explanations. The findings underscore the importance of aligning LLM explanatory behavior with users' engagement states to support effective learning in designing Human-AI interactive systems.

From Passive to Persuasive: Steering Emotional Nuance in Human-AI Negotiation

Nov 16, 2025

Abstract:Large Language Models (LLMs) demonstrate increasing conversational fluency, yet instilling them with nuanced, human-like emotional expression remains a significant challenge. Current alignment techniques often address surface-level output or require extensive fine-tuning. This paper demonstrates that targeted activation engineering can steer LLaMA 3.1-8B to exhibit more human-like emotional nuances. We first employ attribution patching to identify causally influential components, to find a key intervention locus by observing activation patterns during diagnostic conversational tasks. We then derive emotional expression vectors from the difference in the activations generated by contrastive text pairs (positive vs. negative examples of target emotions). Applying these vectors to new conversational prompts significantly enhances emotional characteristics: steered responses show increased positive sentiment (e.g., joy, trust) and more frequent first-person pronoun usage, indicative of greater personal engagement. Our findings offer a precise and interpretable framework and new directions for the study of conversational AI.
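The contrastive-pair step described in this abstract can be illustrated with a minimal sketch. It assumes the common "difference of mean activations" recipe, which the abstract does not confirm as the paper's exact procedure. The function names (`mean_vector`, `steering_vector`, `apply_steering`), the scaling factor `alpha`, and the toy two-dimensional activations are all hypothetical; real activations would come from a chosen layer of the model.

```python
from typing import List

Vector = List[float]


def mean_vector(rows: List[Vector]) -> Vector:
    """Element-wise mean of a list of equal-length activation vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def steering_vector(pos_acts: List[Vector], neg_acts: List[Vector]) -> Vector:
    """Assumed recipe: mean activation on positive examples minus
    mean activation on negative examples of the target emotion."""
    pos_mean = mean_vector(pos_acts)
    neg_mean = mean_vector(neg_acts)
    return [p - q for p, q in zip(pos_mean, neg_mean)]


def apply_steering(hidden: Vector, vec: Vector, alpha: float = 1.0) -> Vector:
    """Add the scaled steering vector to a hidden state (alpha is hypothetical)."""
    return [h + alpha * v for h, v in zip(hidden, vec)]


# Toy activations standing in for one layer's output on contrastive prompts.
pos = [[1.0, 2.0], [3.0, 4.0]]
neg = [[0.0, 0.0], [2.0, 2.0]]
vec = steering_vector(pos, neg)
steered = apply_steering([0.0, 0.0], vec, alpha=2.0)
```

In practice the addition would happen inside a forward hook at the intervention locus the abstract mentions; the sketch only shows the arithmetic.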

Conversations: Love Them, Hate Them, Steer Them

May 23, 2025

Abstract:Large Language Models (LLMs) demonstrate increasing conversational fluency, yet instilling them with nuanced, human-like emotional expression remains a significant challenge. Current alignment techniques often address surface-level output or require extensive fine-tuning. This paper demonstrates that targeted activation engineering can steer LLaMA 3.1-8B to exhibit more human-like emotional nuances. We first employ attribution patching to identify causally influential components, to find a key intervention locus by observing activation patterns during diagnostic conversational tasks. We then derive emotional expression vectors from the difference in the activations generated by contrastive text pairs (positive vs. negative examples of target emotions). Applying these vectors to new conversational prompts significantly enhances emotional characteristics: steered responses show increased positive sentiment (e.g., joy, trust) and more frequent first-person pronoun usage, indicative of greater personal engagement. Our findings offer a precise and interpretable method for controlling specific emotional attributes in LLMs, contributing to developing more aligned and empathetic conversational AI.

On the Limitations of Steering in Language Model Alignment

May 02, 2025

Abstract:Steering vectors are a promising approach to aligning language model behavior at inference time. In this paper, we propose a framework to assess the limitations of steering vectors as alignment mechanisms. Using a framework of transformer hook interventions and antonym-based function vectors, we evaluate the role of prompt structure and context complexity in steering effectiveness. Our findings indicate that steering vectors are promising for specific alignment tasks, such as value alignment, but may not provide a robust foundation for general-purpose alignment in LLMs, particularly in complex scenarios. We establish a methodological foundation for future investigations into steering capabilities of reasoning models.

Improving User Behavior Prediction: Leveraging Annotator Metadata in Supervised Machine Learning Models

Mar 26, 2025

Abstract:Supervised machine-learning models often underperform in predicting user behaviors from conversational text, hindered by poor crowdsourced label quality and low NLP task accuracy. We introduce the Metadata-Sensitive Weighted-Encoding Ensemble Model (MSWEEM), which integrates annotator meta-features like fatigue and speeding. First, our results show MSWEEM outperforms standard ensembles by 14% on held-out data and 12% on an alternative dataset. Second, we find that incorporating signals of annotator behavior, such as speed and fatigue, significantly boosts model performance. Third, we find that annotators with higher qualifications, such as Master's, deliver more consistent and faster annotations. Given the increasing uncertainty over annotation quality, our experiments show that understanding annotator patterns is crucial for enhancing model accuracy in user behavior prediction.

MediaSpin: Exploring Media Bias Through Fine-Grained Analysis of News Headlines

Dec 03, 2024

Abstract:In this paper, we introduce the MediaSpin dataset aiming to help in the development of models that can detect different forms of media bias present in news headlines, developed through human-supervised and -validated Large Language Model (LLM) labeling of media bias. This corpus comprises 78,910 pairs of news headlines and annotations with explanations of the 13 distinct types of media bias categories assigned. We demonstrate the usefulness of our dataset for automated bias detection in news edits.

Fine-Tuning LLMs with Noisy Data for Political Argument Generation

Nov 25, 2024

Abstract:The incivility in social media discourse complicates the deployment of automated text generation models for politically sensitive content. Fine-tuning and prompting strategies are critical, but underexplored, solutions to mitigate toxicity in such contexts. This study investigates the fine-tuning and prompting effects on GPT-3.5 Turbo using subsets of the CLAPTON dataset of political discussion posts, comprising Twitter and Reddit data labeled for their justification, reciprocity and incivility. Fine-tuned models on Reddit data scored highest on discussion quality, while combined noisy data led to persistent toxicity. Prompting strategies reduced specific toxic traits, such as personal attacks, but had limited broader impact. The findings emphasize that high-quality data and well-crafted prompts are essential to reduce incivility and improve rhetorical quality in automated political discourse generation.

Hateful Meme Detection through Context-Sensitive Prompting and Fine-Grained Labeling

Nov 13, 2024

Abstract:The prevalence of multi-modal content on social media complicates automated moderation strategies. This calls for an enhancement in multi-modal classification and a deeper understanding of understated meanings in images and memes. Although previous efforts have aimed at improving model performance through fine-tuning, few have explored an end-to-end optimization pipeline that accounts for modalities, prompting, labeling, and fine-tuning. In this study, we propose an end-to-end conceptual framework for model optimization in complex tasks. Experiments support the efficacy of this traditional yet novel framework, achieving the highest accuracy and AUROC. Ablation experiments demonstrate that isolated optimizations are not ineffective on their own.

Impact of Decoding Methods on Human Alignment of Conversational LLMs

Jul 28, 2024

Abstract:To be included in chatbot systems, large language models (LLMs) must be aligned with human conversational conventions. However, being trained mainly on web-scraped data gives existing LLMs a voice closer to informational text than actual human speech. In this paper, we examine the effect of decoding methods on the alignment between LLM-generated and human conversations, including Beam Search, Top K Sampling, and Nucleus Sampling. We present new measures of alignment in substance, style, and psychometric orientation, and experiment with two conversation datasets. Our results provide subtle insights: better alignment is attributed to fewer beams in Beam Search and lower values of P in Nucleus Sampling. We also find that task-oriented and open-ended datasets perform differently in terms of alignment, indicating the significance of taking into account the context of the interaction.
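For readers unfamiliar with the decoding parameters named in this abstract, the following is a minimal sketch of how Top K and Nucleus (top-p) filtering restrict a next-token distribution before sampling. It is a generic illustration, not the paper's code; the function names and the toy four-token distribution are hypothetical.

```python
from typing import Dict, List


def top_k_filter(probs: List[float], k: int) -> Dict[int, float]:
    """Keep the k most probable token indices and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def nucleus_filter(probs: List[float], p: float) -> Dict[int, float]:
    """Keep the smallest set of most probable tokens whose cumulative
    probability reaches p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept: List[int] = []
    cumulative = 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


# Toy next-token distribution over a four-token vocabulary.
toy_probs = [0.5, 0.3, 0.15, 0.05]
wide = nucleus_filter(toy_probs, 0.7)    # keeps tokens 0 and 1
narrow = nucleus_filter(toy_probs, 0.4)  # keeps only token 0
greedy_like = top_k_filter(toy_probs, 1)
```

A lower value of P shrinks the candidate set, so sampling becomes more conservative; this is the parameter direction the abstract associates with better alignment.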

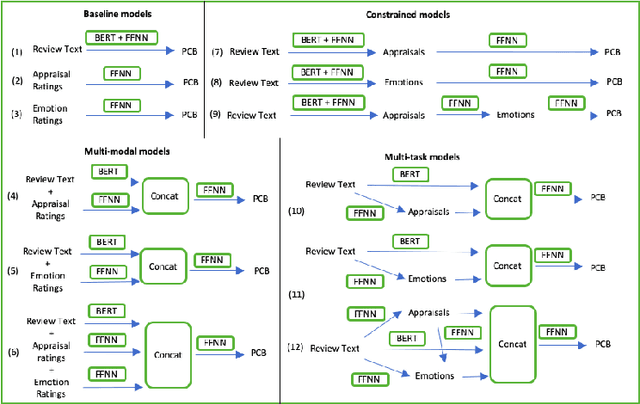

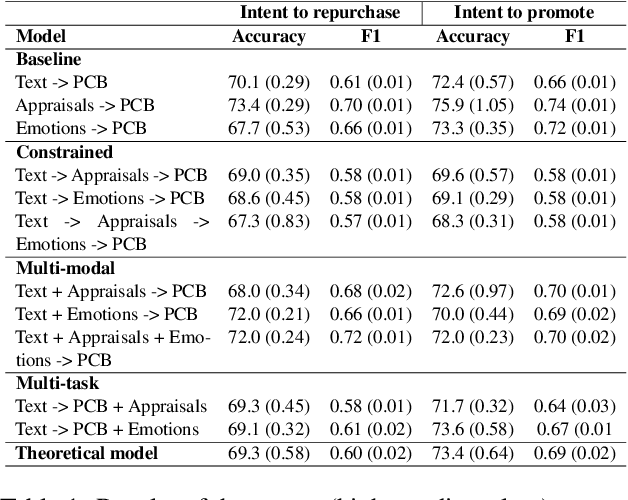

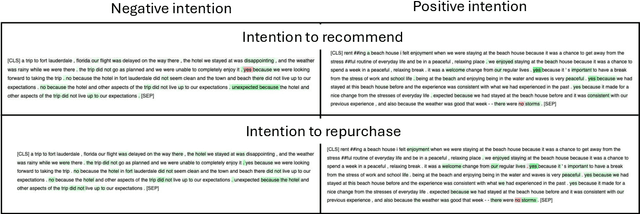

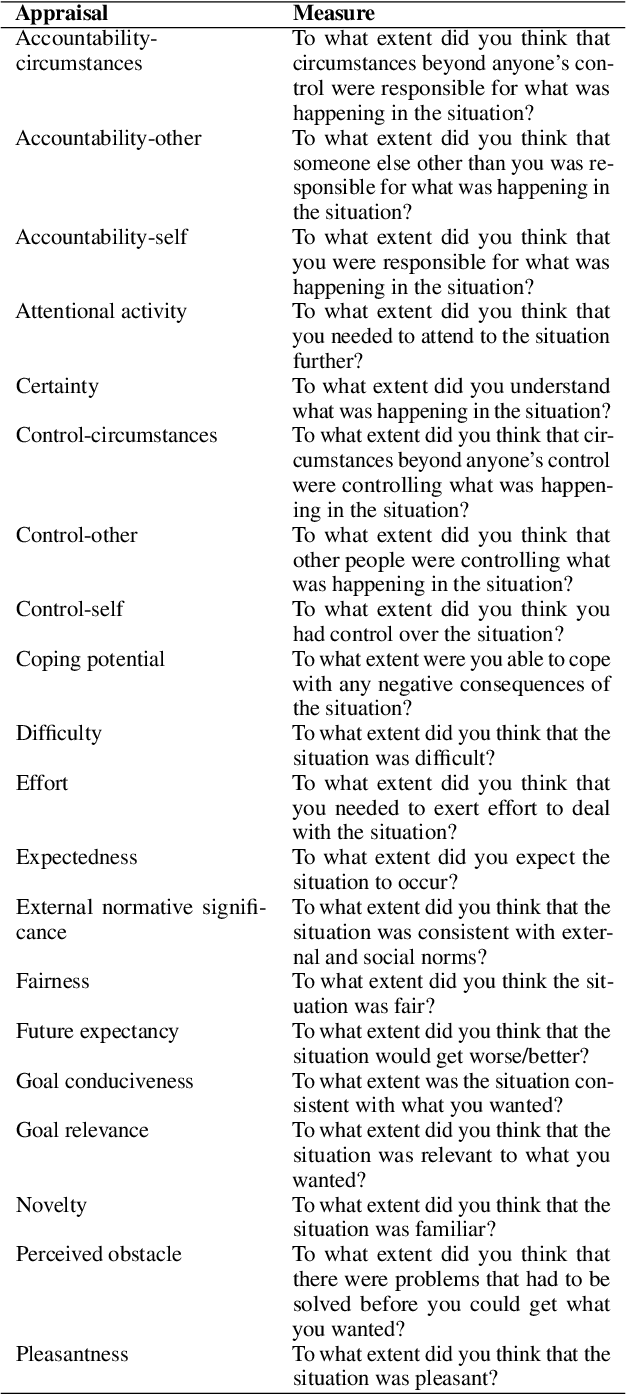

Beyond Text: Leveraging Multi-Task Learning and Cognitive Appraisal Theory for Post-Purchase Intention Analysis

Jul 11, 2024

Abstract:Supervised machine-learning models for predicting user behavior offer a challenging classification problem with lower average prediction performance scores than other text classification tasks. This study evaluates multi-task learning frameworks grounded in Cognitive Appraisal Theory to predict user behavior as a function of users' self-expression and psychological attributes. Our experiments show that users' language and traits improve predictions above and beyond models predicting only from text. Our findings highlight the importance of integrating psychological constructs into NLP to enhance the understanding and prediction of user actions. We close with a discussion of the implications for future applications of large language models for computational psychology.
