Kiran Madhusudhanan

University of Hildesheim

TabResFlow: A Normalizing Spline Flow Model for Probabilistic Univariate Tabular Regression

Aug 23, 2025

Abstract: Tabular regression is a well-studied problem with numerous industrial applications, yet most existing approaches focus on point estimation, often leading to overconfident predictions. This issue is particularly critical in industrial automation, where trustworthy decision-making is essential. Probabilistic regression models address this challenge by modeling prediction uncertainty. However, many conventional methods assume a fixed-shape distribution (typically Gaussian) and resort to estimating its parameters. This assumption is often restrictive, as real-world target distributions can be highly complex. To overcome this limitation, we introduce TabResFlow, a Normalizing Spline Flow model designed specifically for univariate tabular regression, where commonly used simple flow networks like RealNVP and Masked Autoregressive Flow (MAF) are unsuitable. TabResFlow consists of three key components: (1) an MLP encoder for each numerical feature, (2) a fully connected ResNet backbone for expressive feature extraction, and (3) a conditional spline-based normalizing flow for flexible and tractable density estimation. We evaluate TabResFlow on nine public benchmark datasets, demonstrating that it consistently surpasses existing probabilistic regression models on likelihood scores. Our results show a 9.64% improvement over the strongest probabilistic regression model (TreeFlow) and, on average, a 5.6 times speed-up in inference time over the strongest deep learning alternative (NodeFlow). Additionally, we validate the practical applicability of TabResFlow in a real-world used car price prediction task under selective regression. To measure performance in this setting, we introduce a novel Area Under Risk Coverage (AURC) metric and show that TabResFlow achieves superior results on this metric.
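
The abstract does not define the AURC metric, so the following is only a generic sketch of an area under the risk-coverage curve for selective regression. It rests on several assumptions: risk is the mean absolute error of the accepted predictions, predictions are accepted in order of increasing predicted uncertainty, and the area is approximated by averaging the risk over all coverage levels. The function names `risk_coverage_curve` and `aurc` are hypothetical and not taken from the paper.

```python
def risk_coverage_curve(errors, uncertainties):
    """Return (coverage, risk) pairs, accepting the most certain predictions first.

    errors:        per-sample absolute errors (assumed risk measure)
    uncertainties: per-sample predicted uncertainty, e.g. a predictive std
    """
    if len(errors) != len(uncertainties) or not errors:
        raise ValueError("errors and uncertainties must be non-empty and equally long")
    n = len(errors)
    # Rank samples from most to least certain.
    order = sorted(range(n), key=lambda i: uncertainties[i])
    curve = []
    running_error = 0.0
    for k, idx in enumerate(order, start=1):
        running_error += errors[idx]
        # Coverage k/n: fraction of samples accepted; risk: their mean error.
        curve.append((k / n, running_error / k))
    return curve


def aurc(errors, uncertainties):
    """Approximate the area under the risk-coverage curve (lower is better)."""
    curve = risk_coverage_curve(errors, uncertainties)
    return sum(risk for _, risk in curve) / len(curve)


if __name__ == "__main__":
    abs_errors = [0.1, 0.5, 2.0, 0.2]
    predicted_std = [0.2, 0.6, 1.5, 0.3]
    print(aurc(abs_errors, predicted_std))
```

Under this sketch, a model whose uncertainty ranks its own errors well gets a lower value than one whose uncertainty is uninformative, because the low-coverage end of the curve contains only small errors.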

Wave-Mask/Mix: Exploring Wavelet-Based Augmentations for Time Series Forecasting

Aug 20, 2024

Abstract: Data augmentation is important for improving machine learning model performance when faced with limited real-world data. In time series forecasting (TSF), where accurate predictions are crucial in fields like finance, healthcare, and manufacturing, traditional augmentation methods designed for classification tasks are insufficient to maintain temporal coherence. This research introduces two augmentation approaches that use the discrete wavelet transform (DWT) to adjust frequency elements while preserving temporal dependencies in time series data. Our methods, Wavelet Masking (WaveMask) and Wavelet Mixing (WaveMix), are evaluated against established baselines across various forecasting horizons. To the best of our knowledge, this is the first study to conduct extensive experiments on multivariate time series using the discrete wavelet transform as an augmentation technique. Experimental results demonstrate that our techniques achieve results competitive with previous methods. We also explore cold-start forecasting using downsampled training datasets, comparing outcomes to baseline methods.
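
To make the idea of masking in the wavelet domain concrete, here is a minimal sketch. It is not the authors' WaveMask implementation: the abstract does not say which wavelet, how many decomposition levels, or which coefficients are masked. The sketch assumes a single-level Haar transform and randomly zeroes detail coefficients. All function names and the `mask_rate` parameter are hypothetical.

```python
import math
import random


def haar_dwt(signal):
    """Single-level Haar DWT: return (approximation, detail) coefficients."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: rebuild the signal from both coefficient lists."""
    s = math.sqrt(2.0)
    signal = []
    for a, d in zip(approx, detail):
        signal.append((a + d) / s)
        signal.append((a - d) / s)
    return signal


def wavelet_mask(signal, mask_rate, rng):
    """Zero each detail coefficient with probability mask_rate, then invert.

    The approximation coefficients are kept, so the coarse temporal shape
    of the series survives while high-frequency content is perturbed.
    """
    approx, detail = haar_dwt(signal)
    masked = [0.0 if rng.random() < mask_rate else d for d in detail]
    return haar_idwt(approx, masked)


if __name__ == "__main__":
    series = [1.0, 3.0, 2.0, 6.0, 5.0, 5.0, 4.0, 0.0]
    print(wavelet_mask(series, mask_rate=0.5, rng=random.Random(0)))
```

With `mask_rate=0.0` the series is reconstructed unchanged, and with `mask_rate=1.0` every pair of neighbouring values is replaced by its mean, which shows the two extremes of the augmentation strength in this simplified setting.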

Marginalization Consistent Mixture of Separable Flows for Probabilistic Irregular Time Series Forecasting

Jun 11, 2024

Abstract: Probabilistic forecasting models for joint distributions of targets in irregular time series are a heavily under-researched area in machine learning with, to the best of our knowledge, only three models researched so far: GPR, the Gaussian Process Regression model~\citep{Durichen2015.Multitask}, TACTiS, the Transformer-Attentional Copulas for Time Series~\cite{Drouin2022.Tactis, ashok2024tactis}, and ProFITi \citep{Yalavarthi2024.Probabilistica}, a multivariate normalizing flow model based on invertible attention layers. While ProFITi, thanks to using multivariate normalizing flows, is the more expressive model with better predictive performance, we will show that it suffers from marginalization inconsistency: it does not guarantee that the marginal distributions of a subset of variables in its predictive distributions coincide with the directly predicted distributions of these variables. TACTiS likewise does not provide any guarantees of marginalization consistency. We develop a novel probabilistic irregular time series forecasting model, Marginalization Consistent Mixtures of Separable Flows (moses), that mixes several normalizing flows with (i) Gaussian Processes with full covariance matrix as source distributions and (ii) a separable invertible transformation, aiming to combine the expressivity of normalizing flows with the marginalization consistency of Gaussians. In experiments on four different datasets, we show that moses outperforms other state-of-the-art marginalization consistent models and performs on par with ProFITi while, unlike ProFITi, guaranteeing marginalization consistency.
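
The marginalization consistency property described in words above can be written out as follows. The notation is an assumption introduced here for illustration and is not taken from the paper: $\hat{p}$ is the model's predictive density, $x$ the conditioning input, $y_1, \dots, y_K$ the targets, $S$ a subset of target indices, and $\bar{S}$ its complement.

```latex
% Marginalization consistency: integrating out the variables not in S
% from the joint prediction gives the directly predicted distribution of y_S.
\int \hat{p}(y_1, \dots, y_K \mid x) \, \mathrm{d}y_{\bar{S}}
  \;=\; \hat{p}(y_S \mid x)
  \qquad \text{for all } S \subseteq \{1, \dots, K\}.

% Gaussians satisfy this by construction: if
%   y \sim \mathcal{N}(\mu, \Sigma),
% then the marginal over S simply selects sub-blocks:
y_S \sim \mathcal{N}\!\left(\mu_S, \; \Sigma_{S,S}\right).
```

The second statement is the standard marginal of a multivariate Gaussian, which is the sense in which the abstract speaks of "the marginalization consistency of Gaussians".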

Hyperparameter Tuning MLPs for Probabilistic Time Series Forecasting

Mar 07, 2024

Abstract: Time series forecasting attempts to predict future events by analyzing past trends and patterns. Although well researched, certain critical aspects pertaining to the use of deep learning in time series forecasting remain ambiguous. Our research primarily focuses on examining the impact of specific hyperparameters related to time series, such as context length and validation strategy, on the performance of the state-of-the-art MLP model in time series forecasting. We have conducted a comprehensive series of experiments involving 4800 configurations per dataset across 20 time series forecasting datasets, and our findings demonstrate the importance of tuning these parameters. Furthermore, we introduce the largest metadataset for time series forecasting to date, named TSBench, comprising 97200 evaluations, a twentyfold increase over previous works in the field. Finally, we demonstrate the utility of the created metadataset on multi-fidelity hyperparameter optimization tasks.

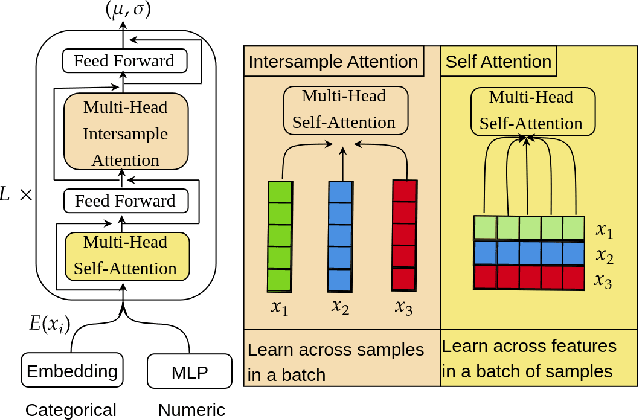

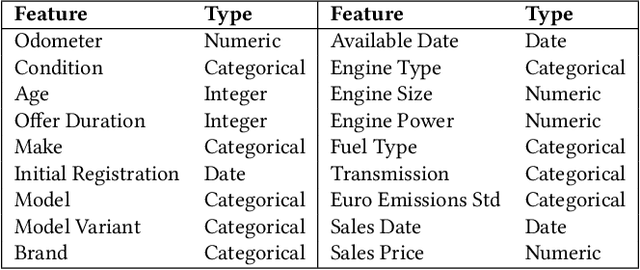

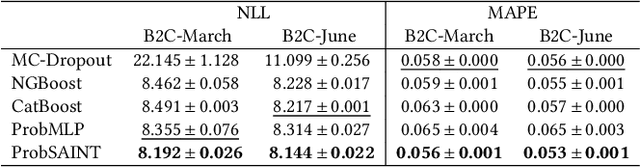

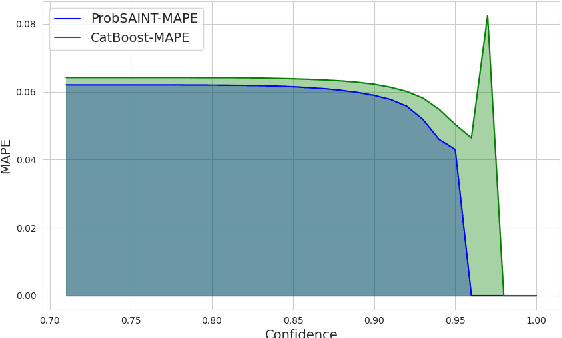

ProbSAINT: Probabilistic Tabular Regression for Used Car Pricing

Mar 06, 2024

Abstract: Used car pricing is a critical aspect of the automotive industry, influenced by many economic factors and market dynamics. With the recent surge in online marketplaces and increased demand for used cars, accurate pricing would benefit both buyers and sellers by ensuring fair transactions. However, the transition towards automated pricing algorithms using machine learning requires an understanding of model uncertainties, specifically the ability to flag predictions that the model is unsure about. Although recent literature proposes the use of boosting algorithms or nearest neighbor-based approaches for swift and precise price predictions, capturing model uncertainties with such algorithms is a complex challenge. We introduce ProbSAINT, a model that offers a principled approach to uncertainty quantification of its price predictions, along with accurate point predictions that are comparable to state-of-the-art boosting techniques. Furthermore, acknowledging that the business prefers pricing used cars based on the number of days the vehicle was listed for sale, we show how ProbSAINT can be used as a dynamic forecasting model for predicting price probabilities for different expected offer durations. Our experiments further indicate that ProbSAINT is especially accurate on instances where it is highly certain. This supports the applicability of its probabilistic predictions in real-world scenarios where trustworthiness is crucial.

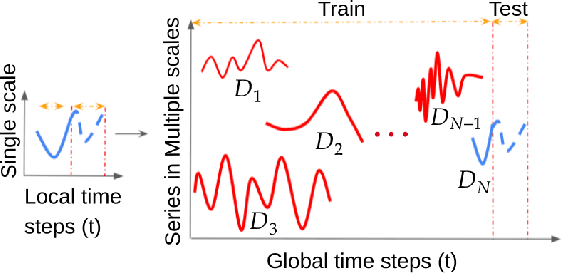

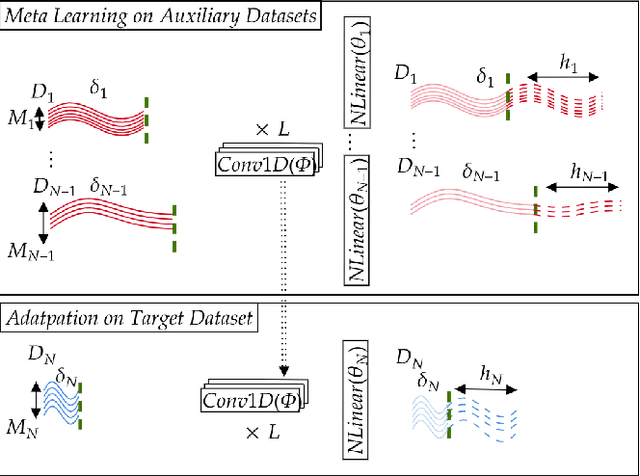

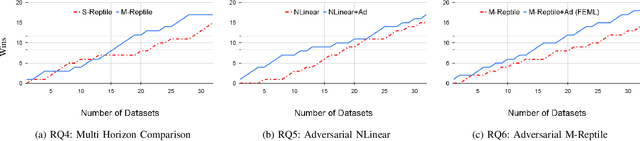

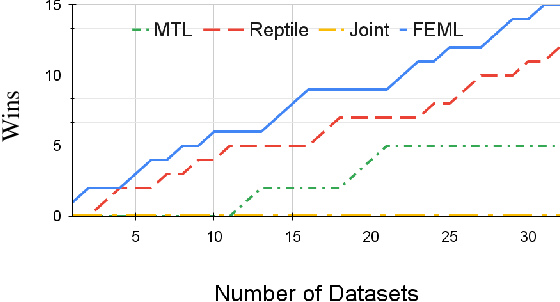

Forecasting Early with Meta Learning

Jul 19, 2023

Abstract: In the early observation period of a time series, there might be only a few historic observations available to learn a model. However, in cases where a prior set of datasets is available, meta-learning methods can be applied. In this paper, we devise a meta-learning method that exploits samples from additional datasets and learns to augment time series through adversarial learning as an auxiliary task for the target dataset. Our model (FEML) is equipped with a shared convolutional backbone that learns features for varying-length inputs from different datasets and has dataset-specific heads to forecast for different output lengths. We show that FEML can meta-learn across datasets and that, by additionally learning on adversarially generated samples as auxiliary samples for the target dataset, it can improve forecasting performance compared to single-task learning and to various solutions adapted from joint learning, multi-task learning, and classic forecasting baselines.

Put Attention to Temporal Saliency Patterns of Multi-Horizon Time Series

Dec 15, 2022

Abstract: Time series, sets of sequences in chronological order, are essential data in statistical research with many forecasting applications. Although many recent Transformer-based models have shown notable performance, long multi-horizon time series forecasting remains a very challenging task. Going beyond transformers in sequence translation and transduction research, we observe that down- and upsampling can nudge temporal saliency patterns to emerge in time sequences. Motivated by this observation, we propose a novel architecture, Temporal Saliency Detection (TSD), on top of the attention mechanism and apply it to multi-horizon time series prediction. We renovate the traditional encoder-decoder architecture by making it a series of deep convolutional blocks that work in tandem with multi-head self-attention. The proposed TSD approach facilitates the multiresolution of saliency patterns upon condensed multi-heads, thus progressively enhancing complex time series forecasting. Experimental results illustrate that our proposed approach significantly outperforms existing state-of-the-art methods across multiple standard benchmark datasets in many far-horizon forecasting settings. Overall, TSD achieves 31% and 46% relative improvement over the current state-of-the-art models in multivariate and univariate time series forecasting scenarios on standard benchmarks. The Git repository is available at https://github.com/duongtrung/time-series-temporal-saliency-patterns.

A.I. and Data-Driven Mobility at Volkswagen Financial Services AG

Feb 09, 2022

Abstract: Machine learning is being widely adopted in industrial applications owing to the capabilities of commercially available hardware and rapidly advancing research. Volkswagen Financial Services (VWFS), as a market leader in vehicle leasing services, aims to leverage existing proprietary data and the latest research to enhance existing business processes and derive new ones. The collaboration between the Information Systems and Machine Learning Lab (ISMLL) and VWFS serves to realize this goal. In this paper, we propose methods in the fields of recommender systems, object detection, and forecasting that enable data-driven decisions for the vehicle life-cycle at VWFS.

Yformer: U-Net Inspired Transformer Architecture for Far Horizon Time Series Forecasting

Oct 13, 2021

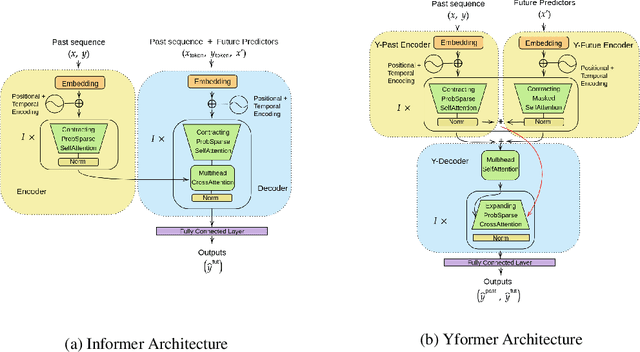

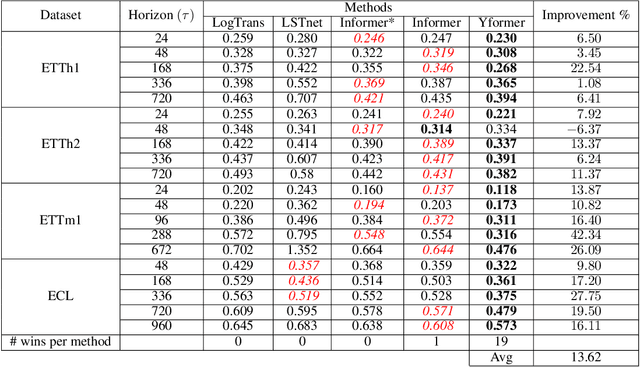

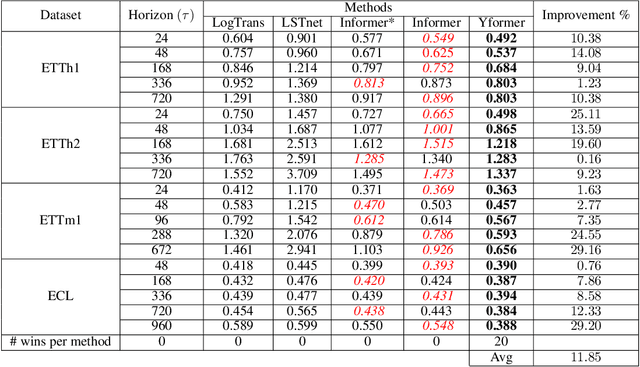

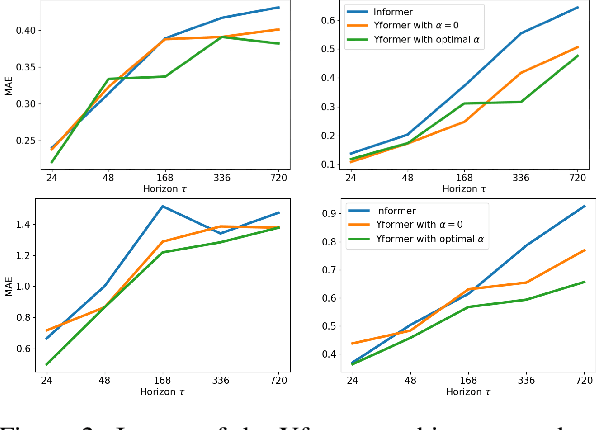

Abstract: Time series data is ubiquitous in research as well as in a wide variety of industrial applications. Effectively analyzing the available historical data and providing insights into the far future allows us to make effective decisions. Recent research has witnessed the superior performance of transformer-based architectures, especially in the regime of far horizon time series forecasting. However, the current state-of-the-art sparse Transformer architectures fail to couple down- and upsampling procedures to produce outputs in a similar resolution as the input. We propose the Yformer model, based on a novel Y-shaped encoder-decoder architecture that (1) uses direct connections from the downscaled encoder layer to the corresponding upsampled decoder layer in a U-Net inspired architecture, (2) combines the downscaling/upsampling with sparse attention to capture long-range effects, and (3) stabilizes the encoder-decoder stacks with the addition of an auxiliary reconstruction loss. Extensive experiments have been conducted with relevant baselines on four benchmark datasets, demonstrating average improvements of 19.82% and 18.41% in MSE and 13.62% and 11.85% in MAE over the current state of the art for the univariate and the multivariate settings, respectively.

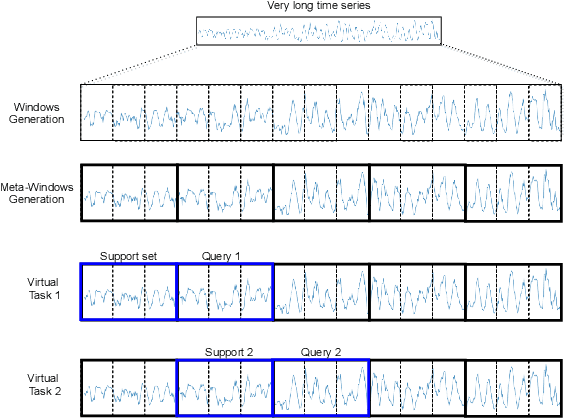

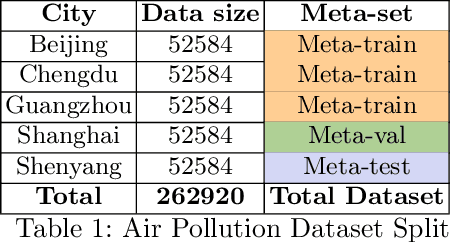

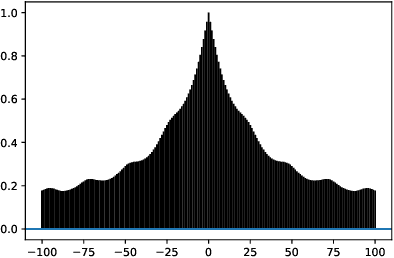

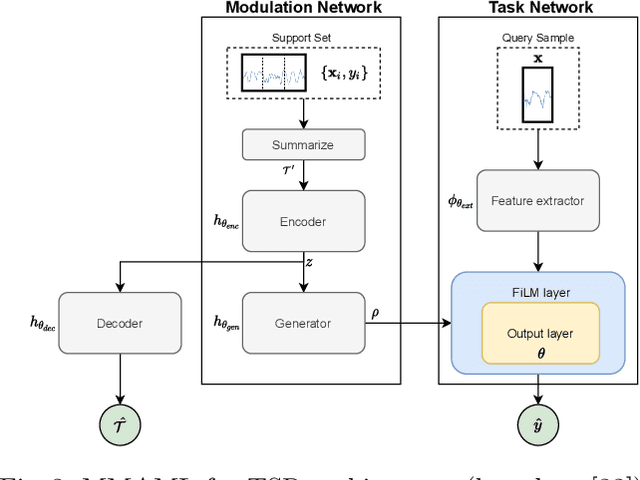

Multimodal Meta-Learning for Time Series Regression

Aug 05, 2021

Abstract: Recent work has shown the efficiency of deep learning models such as Fully Convolutional Networks (FCN) or Recurrent Neural Networks (RNN) in dealing with Time Series Regression (TSR) problems. These models sometimes need a lot of data to be able to generalize, yet the time series are sometimes not long enough for patterns to be learned. Therefore, it is important to make use of information across time series to improve learning. In this paper, we explore the idea of using meta-learning for quickly adapting model parameters to new short-history time series by modifying the original idea of Model Agnostic Meta-Learning (MAML) \cite{finn2017model}. Moreover, based on prior work on multimodal MAML \cite{vuorio2019multimodal}, we propose a method for conditioning parameters of the model through an auxiliary network that encodes global information of the time series to extract meta-features. Finally, we apply the method to time series from different domains, such as pollution measurements, heart-rate sensors, and electrical battery data. We show empirically that our proposed meta-learning method learns TSR quickly from little data and outperforms the baselines in 9 of 12 experiments.
