Keshi Ge

Merak: An Efficient Distributed DNN Training Framework with Automated 3D Parallelism for Giant Foundation Models

Jun 21, 2022

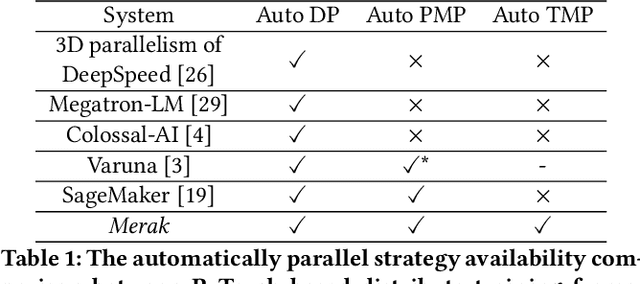

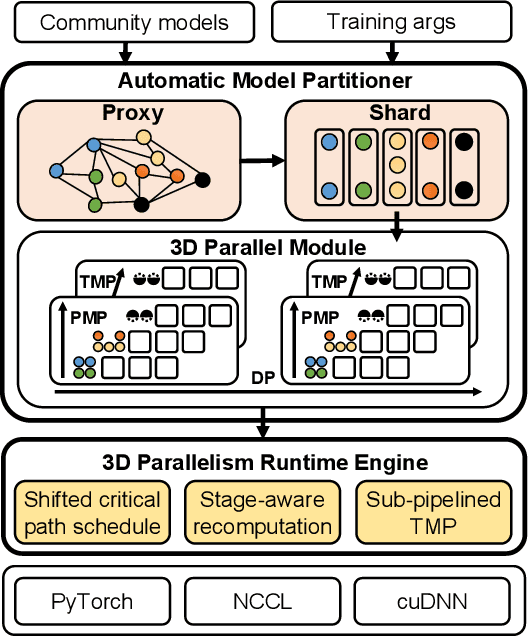

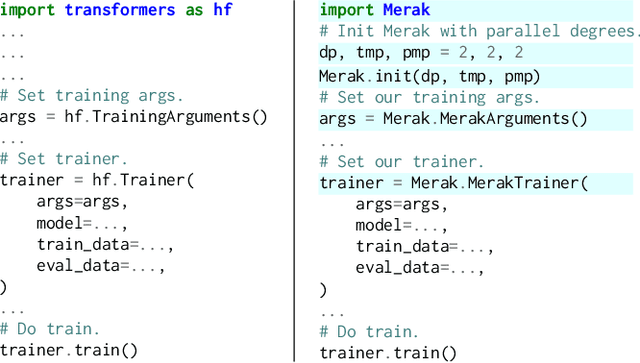

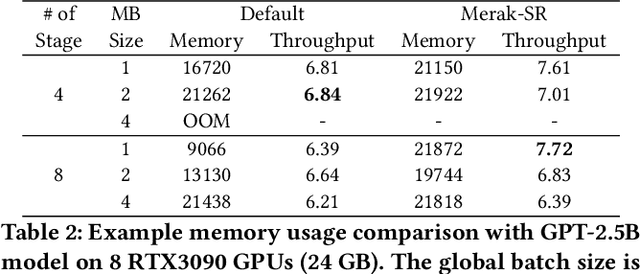

Abstract:Foundation models are becoming the dominant deep learning technologies. Pretraining a foundation model is always time-consumed due to the large scale of both the model parameter and training dataset. Besides being computing-intensive, the training process is extremely memory-intensive and communication-intensive. These features make it necessary to apply 3D parallelism, which integrates data parallelism, pipeline model parallelism and tensor model parallelism, to achieve high training efficiency. To achieve this goal, some custom software frameworks such as Megatron-LM and DeepSpeed are developed. However, current 3D parallelism frameworks still meet two issues: i) they are not transparent to model developers, which need to manually modify the model to parallelize training. ii) their utilization of computation, GPU memory and network bandwidth are not sufficient. We propose Merak, an automated 3D parallelism deep learning training framework with high resource utilization. Merak automatically deploys with an automatic model partitioner, which uses a graph sharding algorithm on a proxy representation of the model. Merak also presents the non-intrusive API for scaling out foundation model training with minimal code modification. In addition, we design a high-performance 3D parallel runtime engine in Merak. It uses several techniques to exploit available training resources, including shifted critical path pipeline schedule that brings a higher computation utilization, stage-aware recomputation that makes use of idle worker memory, and sub-pipelined tensor model parallelism that overlaps communication and computation. Experiments on 64 GPUs show Merak can speedup the training performance over the state-of-the-art 3D parallelism frameworks of models with 1.5, 2.5, 8.3, and 20 billion parameters by up to 1.42X, 1.39X, 1.43X, and 1.61X, respectively.

S2 Reducer: High-Performance Sparse Communication to Accelerate Distributed Deep Learning

Oct 05, 2021

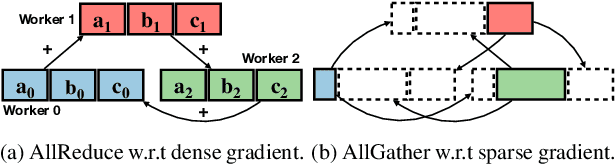

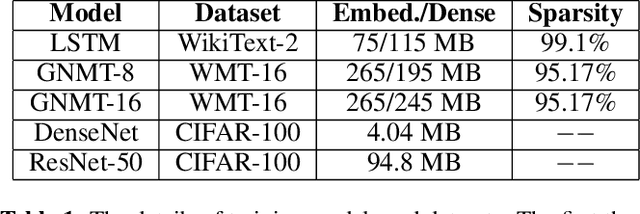

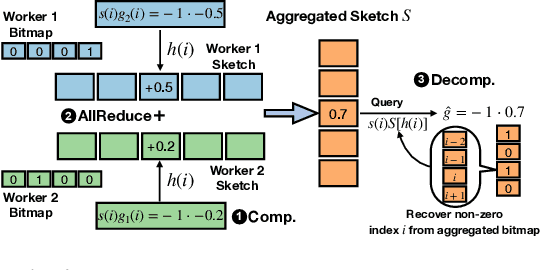

Abstract:Distributed stochastic gradient descent (SGD) approach has been widely used in large-scale deep learning, and the gradient collective method is vital to ensure the training scalability of the distributed deep learning system. Collective communication such as AllReduce has been widely adopted for the distributed SGD process to reduce the communication time. However, AllReduce incurs large bandwidth resources while most gradients are sparse in many cases since many gradient values are zeros and should be efficiently compressed for bandwidth saving. To reduce the sparse gradient communication overhead, we propose Sparse-Sketch Reducer (S2 Reducer), a novel sketch-based sparse gradient aggregation method with convergence guarantees. S2 Reducer reduces the communication cost by only compressing the non-zero gradients with count-sketch and bitmap, and enables the efficient AllReduce operators for parallel SGD training. We perform extensive evaluation against four state-of-the-art methods over five training models. Our results show that S2 Reducer converges to the same accuracy, reduces 81\% sparse communication overhead, and achieves 1.8$ \times $ speedup compared to state-of-the-art approaches.

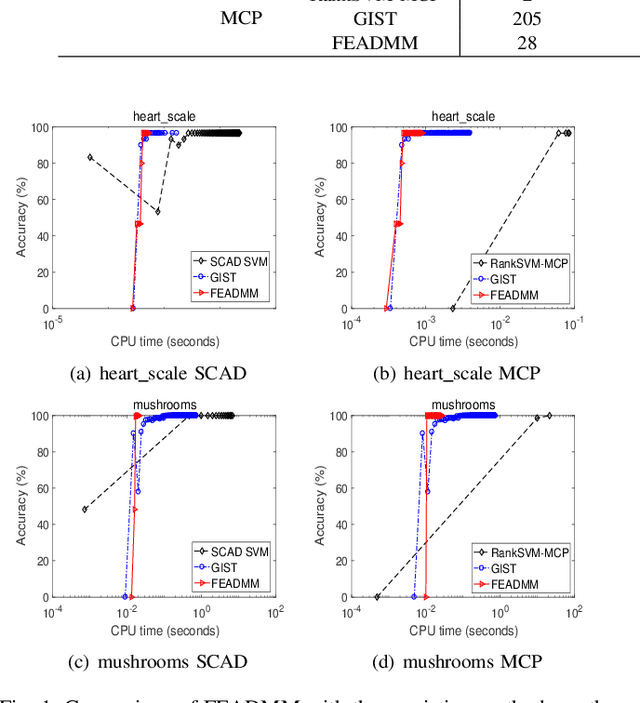

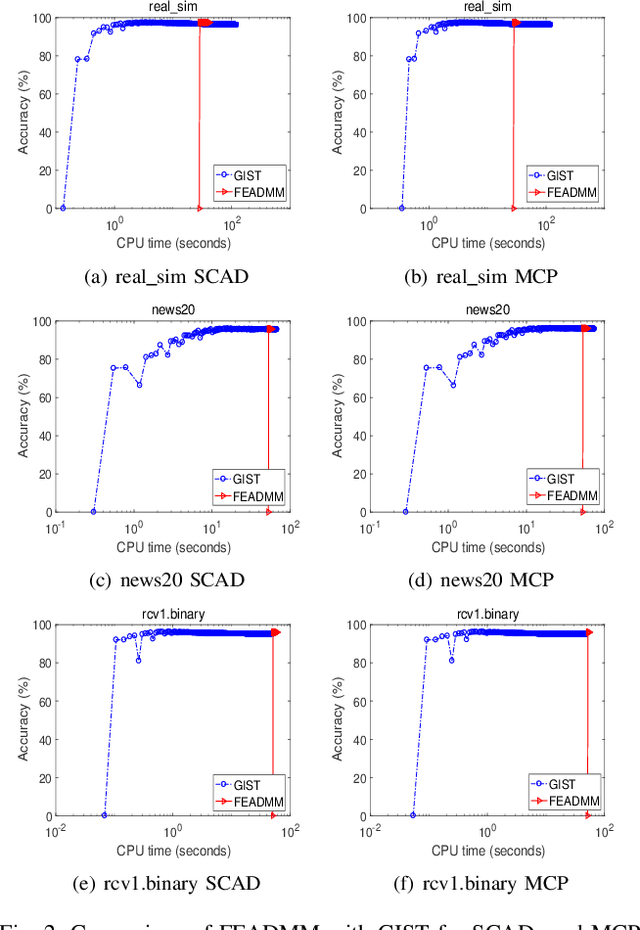

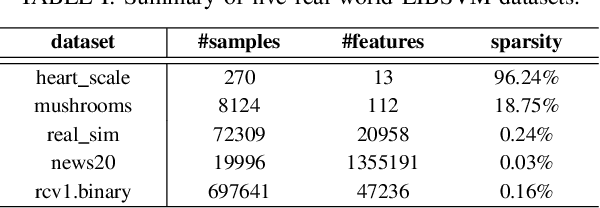

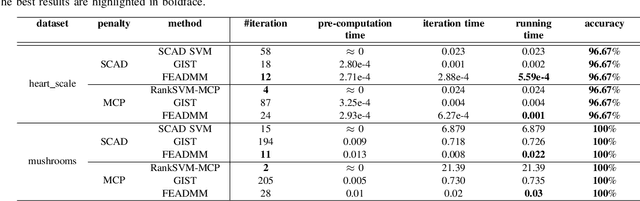

An Efficient ADMM-Based Algorithm to Nonconvex Penalized Support Vector Machines

Sep 11, 2018

Abstract:Support vector machines (SVMs) with sparsity-inducing nonconvex penalties have received considerable attentions for the characteristics of automatic classification and variable selection. However, it is quite challenging to solve the nonconvex penalized SVMs due to their nondifferentiability, nonsmoothness and nonconvexity. In this paper, we propose an efficient ADMM-based algorithm to the nonconvex penalized SVMs. The proposed algorithm covers a large class of commonly used nonconvex regularization terms including the smooth clipped absolute deviation (SCAD) penalty, minimax concave penalty (MCP), log-sum penalty (LSP) and capped-$\ell_1$ penalty. The computational complexity analysis shows that the proposed algorithm enjoys low computational cost. Moreover, the convergence of the proposed algorithm is guaranteed. Extensive experimental evaluations on five benchmark datasets demonstrate the superior performance of the proposed algorithm to other three state-of-the-art approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge