Kersten Diers

Impact of Leakage on Data Harmonization in Machine Learning Pipelines in Class Imbalance Across Sites

Oct 25, 2024

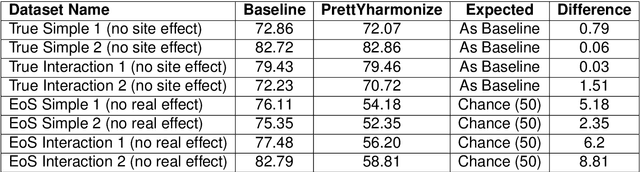

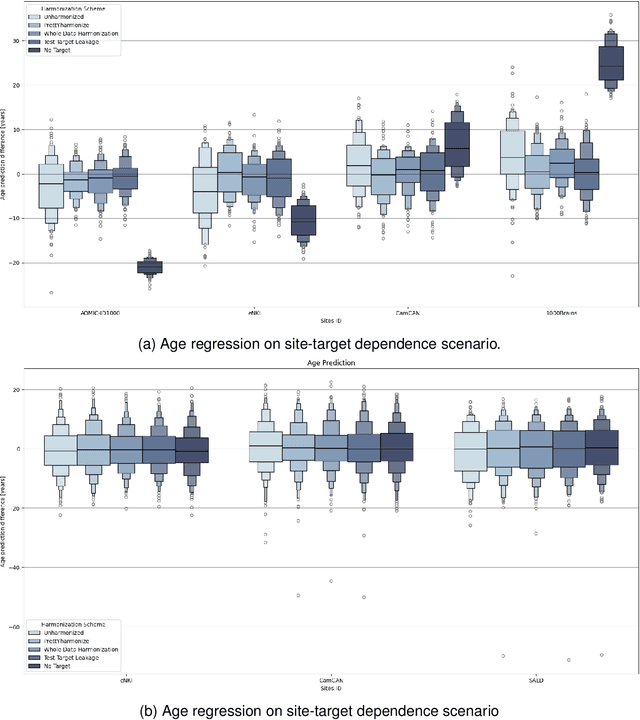

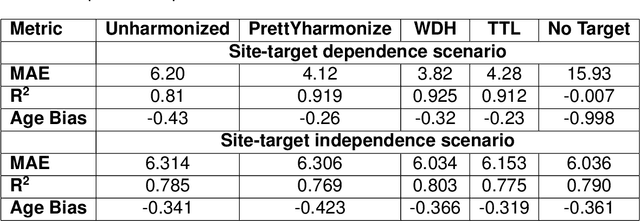

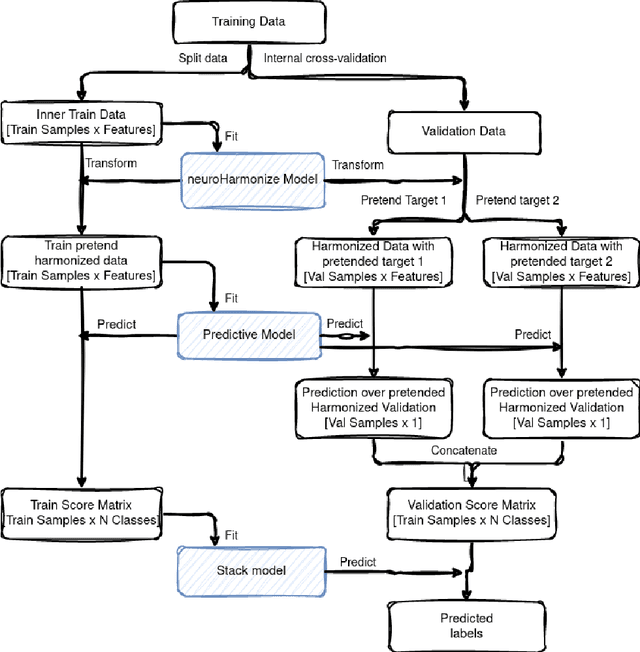

Abstract: Machine learning (ML) models benefit from large datasets. Because collecting data in biomedical domains is costly and challenging, combining datasets has become common practice. However, datasets obtained under different conditions can contain undesired site-specific variability. Data harmonization methods aim to remove site-specific variance while retaining biologically relevant information. This study evaluates the effectiveness of widely used ComBat-based methods for harmonizing data in scenarios where the class balance differs across sites. We find that these methods struggle with data leakage issues. To overcome this problem, we propose a novel approach, PrettYharmonize, designed to harmonize data by pretending the target labels. We validate our approach using controlled datasets designed to benchmark the utility of harmonization. Finally, using real-world MRI and clinical data, we compare leakage-prone methods with PrettYharmonize and show that it achieves comparable performance while avoiding data leakage, particularly in site-target-dependence scenarios.
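The leakage concern can be illustrated with a minimal sketch. It is not ComBat and not PrettYharmonize: it assumes a deliberately simple stand-in harmonizer that only removes per-site feature means, and the function names `fit_site_offsets` and `apply_site_offsets` are hypothetical. The point it shows is the pipeline discipline: harmonization parameters are estimated on the training split alone, without labels, and then applied unchanged to the test split.

```python
import numpy as np

def fit_site_offsets(X, sites):
    """Estimate per-site mean offsets from TRAINING data only (no labels used)."""
    grand_mean = X.mean(axis=0)
    return {s: X[sites == s].mean(axis=0) - grand_mean for s in np.unique(sites)}

def apply_site_offsets(X, sites, offsets):
    """Subtract previously fitted site offsets; unseen sites are left untouched."""
    X_h = X.astype(float).copy()
    for s, off in offsets.items():
        X_h[sites == s] -= off
    return X_h

# Synthetic example (assumed data): two sites, site 1 shifted by +5 on every feature.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3))
sites = np.array([0] * 20 + [1] * 20)
X[sites == 1] += 5.0

# Simple alternating train/test split so both sites appear in both splits.
train = np.arange(40) % 2 == 0
test = ~train

# Fit on the training split only, then reuse the same offsets on the test split.
offsets = fit_site_offsets(X[train], sites[train])
X_train_h = apply_site_offsets(X[train], sites[train], offsets)
X_test_h = apply_site_offsets(X[test], sites[test], offsets)
```

Fitting the offsets on the full dataset, or on data that includes test labels as covariates, is the kind of step that would let information from the test set reach the model.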

An automated, geometry-based method for the analysis of hippocampal thickness

Feb 01, 2023

Abstract: The hippocampus is one of the most studied neuroanatomical structures due to its involvement in attention, learning, and memory as well as its atrophy in ageing, neurological, and psychiatric diseases. Hippocampal shape changes, however, are complex and cannot be fully characterized by a single summary metric such as hippocampal volume as determined from MR images. In this work, we propose an automated, geometry-based approach for the unfolding, point-wise correspondence, and local analysis of hippocampal shape features such as thickness and curvature. Starting from an automated segmentation of hippocampal subfields, we create a 3D tetrahedral mesh model as well as a 3D intrinsic coordinate system of the hippocampal body. From this coordinate system, we derive local curvature and thickness estimates as well as a 2D sheet for hippocampal unfolding. We evaluate the performance of our algorithm with a series of experiments to quantify neurodegenerative changes in Mild Cognitive Impairment and Alzheimer's disease dementia. We find that hippocampal thickness estimates detect known differences between clinical groups and can determine the location of these effects on the hippocampal sheet. Further, thickness estimates improve classification of clinical groups and cognitively unimpaired controls when added as an additional predictor. Comparable results are obtained with different datasets and segmentation algorithms. Taken together, we replicate canonical findings on hippocampal volume/shape changes in dementia, extend them by gaining insight into their spatial localization on the hippocampal sheet, and provide additional, complementary information beyond traditional measures. We provide a new set of sensitive processing and analysis tools for the analysis of hippocampal geometry that allows comparisons across studies without relying on image registration or requiring manual intervention.

Automated Olfactory Bulb Segmentation on High Resolutional T2-Weighted MRI

Aug 09, 2021

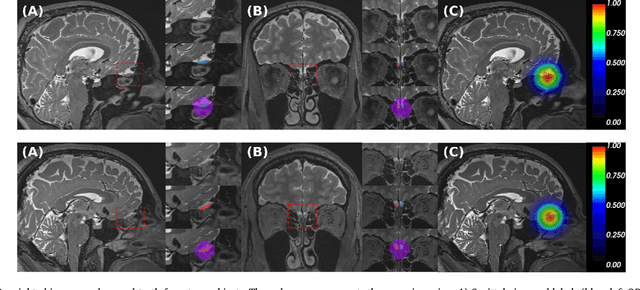

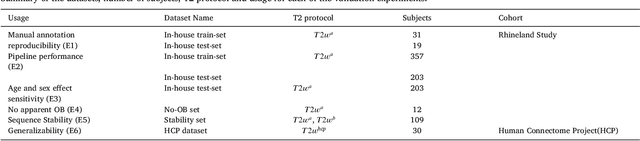

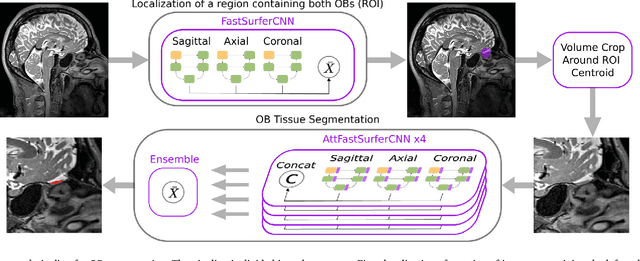

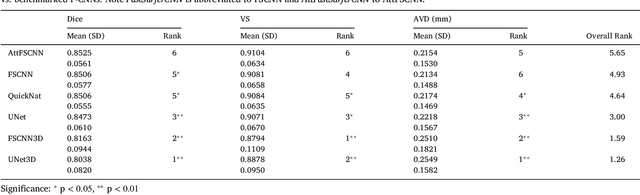

Abstract: The neuroimage analysis community has neglected the automated segmentation of the olfactory bulb (OB) despite its crucial role in olfactory function. The lack of an automatic processing method for the OB can be explained by its challenging properties. Nonetheless, recent advances in MRI acquisition techniques and resolution have allowed raters to generate more reliable manual annotations. Furthermore, the high accuracy of deep learning methods for solving semantic segmentation problems provides us with an option to reliably assess even small structures. In this work, we introduce a novel, fast, and fully automated deep learning pipeline to accurately segment OB tissue on sub-millimeter T2-weighted (T2w) whole-brain MR images. To this end, we designed a three-stage pipeline: (1) Localization of a region containing both OBs using FastSurferCNN, (2) Segmentation of OB tissue within the localized region through four independent AttFastSurferCNN - a novel deep learning architecture with a self-attention mechanism to improve modeling of contextual information, and (3) Ensemble of the predicted label maps. The OB pipeline exhibits high performance in terms of boundary delineation, OB localization, and volume estimation across a wide range of ages in 203 participants of the Rhineland Study. Moreover, it also generalizes to scans of an independent dataset never encountered during training, the Human Connectome Project (HCP), with different acquisition parameters and demographics, evaluated in 30 cases at the native 0.7 mm HCP resolution, and the default 0.8 mm pipeline resolution. We extensively validated our pipeline not only with respect to segmentation accuracy but also to known OB volume effects, where it can sensitively replicate age effects.
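Stage (3) of the pipeline, the ensemble of predicted label maps, can be sketched as follows. The abstract does not say how the four predictions are combined, so this example assumes one common choice: averaging per-voxel foreground probabilities and thresholding the mean. The function name `ensemble_label_map`, the 0.5 threshold, and the toy 2x2 probability maps are all hypothetical and stand in for the outputs of the four networks.

```python
import numpy as np

def ensemble_label_map(prob_maps, threshold=0.5):
    """Average per-voxel foreground probabilities and threshold the mean.

    This combination rule is an assumption for illustration, not the
    published method.
    """
    stacked = np.stack(prob_maps, axis=0)   # shape: (n_models, *volume_shape)
    mean_prob = stacked.mean(axis=0)        # per-voxel mean over models
    return (mean_prob >= threshold).astype(np.uint8)

# Toy foreground probabilities from four hypothetical models on a 2x2 patch.
maps = [
    np.array([[0.90, 0.20], [0.60, 0.40]]),
    np.array([[0.80, 0.10], [0.40, 0.60]]),
    np.array([[0.70, 0.30], [0.70, 0.20]]),
    np.array([[0.95, 0.00], [0.50, 0.30]]),
]
label = ensemble_label_map(maps)
print(label)
```

Averaging probabilities avoids the ties that a plain majority vote can produce with an even number of models.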

FastSurfer -- A fast and accurate deep learning based neuroimaging pipeline

Oct 09, 2019

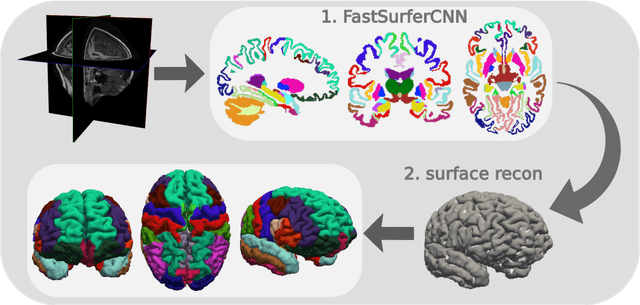

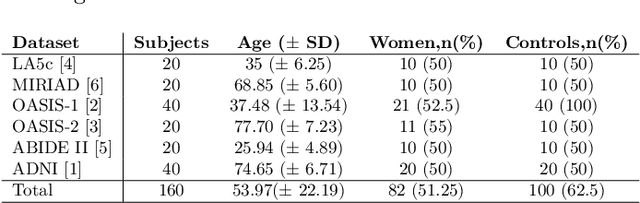

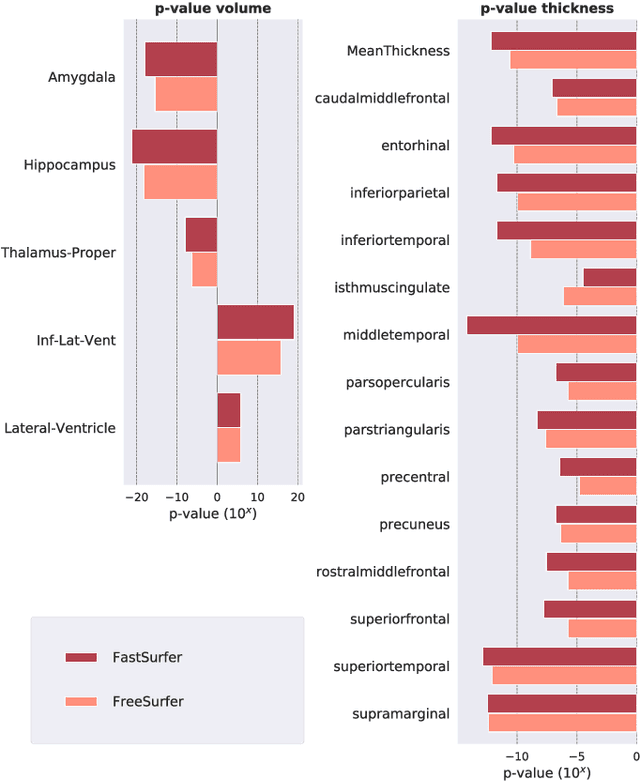

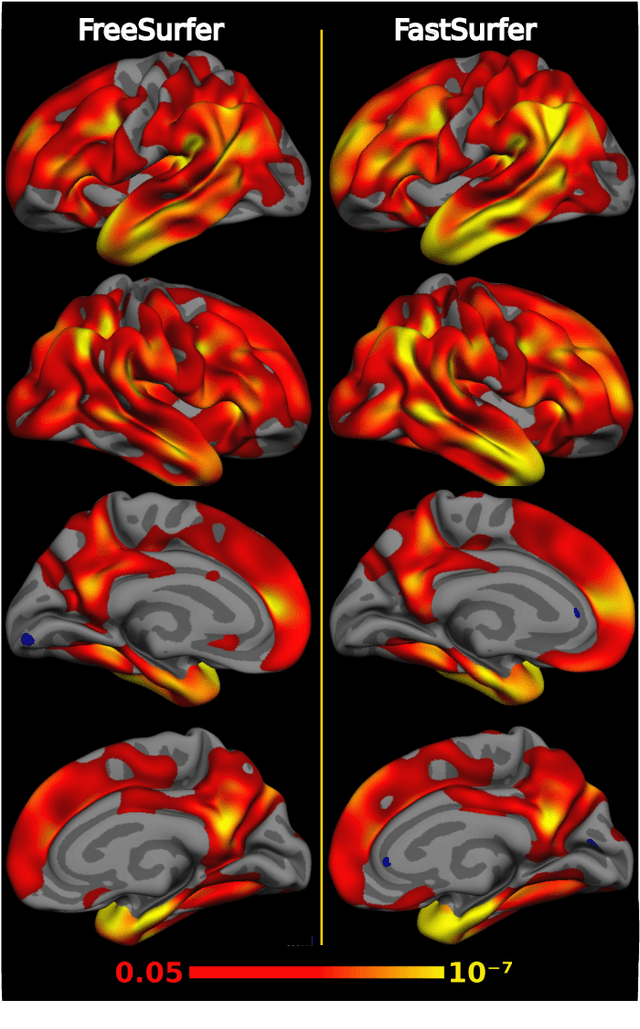

Abstract: Traditional neuroimage analysis pipelines involve computationally intensive, time-consuming optimization steps, and thus, do not scale well to large cohort studies with thousands or tens of thousands of individuals. In this work we propose a fast and accurate deep learning based neuroimaging pipeline for the automated processing of structural human brain MRI scans, including surface reconstruction and cortical parcellation. To this end, we introduce an advanced deep learning architecture capable of whole brain segmentation into 95 classes in under 1 minute, mimicking FreeSurfer's anatomical segmentation and cortical parcellation. The network architecture incorporates local and global competition via competitive dense blocks and competitive skip pathways, as well as multi-slice information aggregation that specifically tailor network performance towards accurate segmentation of both cortical and sub-cortical structures. Further, we perform fast cortical surface reconstruction and thickness analysis by introducing a spectral spherical embedding and by directly mapping the cortical labels from the image to the surface. This approach provides a full FreeSurfer alternative for volumetric analysis (within 1 minute) and surface-based thickness analysis (within only around 1 h run time). For sustainability of this approach we perform extensive validation: we assert high segmentation accuracy on several unseen datasets, measure generalizability and demonstrate increased test-retest reliability, and increased sensitivity to disease effects relative to traditional FreeSurfer.
