Ken Satoh

Data Augmented Pipeline for Legal Information Extraction and Reasoning

Jan 09, 2026

Abstract:In this paper, we propose a pipeline leveraging Large Language Models (LLMs) for data augmentation in Information Extraction tasks within the legal domain. The proposed method is both simple and effective, significantly reducing the manual effort required for data annotation while enhancing the robustness of Information Extraction systems. Furthermore, the method is generalizable, making it applicable to various Natural Language Processing (NLP) tasks beyond the legal domain.

Can Legislation Be Made Machine-Readable in PROLEG?

Jan 04, 2026

Abstract:The anticipated positive social impact of regulatory processes requires both the accuracy and efficiency of their application. Modern artificial intelligence technologies, including natural language processing and machine-assisted reasoning, hold great promise for addressing this challenge. We present a framework to address the challenge of tools for regulatory application, based on current state-of-the-art (SOTA) methods for natural language processing (large language models or LLMs) and formalization of legal reasoning (the legal representation system PROLEG). As an example, we focus on Article 6 of the European General Data Protection Regulation (GDPR). In our framework, a single LLM prompt simultaneously transforms legal text into if-then rules and a corresponding PROLEG encoding, which are then validated and refined by legal domain experts. The final output is an executable PROLEG program that can produce human-readable explanations for instances of GDPR decisions. We describe processes to support the end-to-end transformation of a segment of a regulatory document (Article 6 from GDPR), including the prompting frame to guide an LLM to "compile" natural language text to if-then rules, then to further "compile" the vetted if-then rules to PROLEG. Finally, we produce an instance that shows the PROLEG execution. We conclude by summarizing the value of this approach and note observed limitations with suggestions to further develop such technologies for capturing and deploying regulatory frameworks.

FC-CONAN: An Exhaustively Paired Dataset for Robust Evaluation of Retrieval Systems

Jan 04, 2026

Abstract:Hate speech (HS) is a critical issue in online discourse, and one promising strategy to counter it is through the use of counter-narratives (CNs). Datasets linking HS with CNs are essential for advancing counterspeech research. However, even flagship resources like CONAN (Chung et al., 2019) annotate only a sparse subset of all possible HS-CN pairs, limiting evaluation. We introduce FC-CONAN (Fully Connected CONAN), the first dataset created by exhaustively considering all combinations of 45 English HS messages and 129 CNs. A two-stage annotation process involving nine annotators and four validators produces four partitions (Diamond, Gold, Silver, and Bronze) that balance reliability and scale. None of the labeled pairs overlap with CONAN, uncovering hundreds of previously unlabelled positives. FC-CONAN enables more faithful evaluation of counterspeech retrieval systems and facilitates detailed error analysis. The dataset is publicly available.

Multi-Agent Legal Verifier Systems for Data Transfer Planning

Nov 14, 2025

Abstract:Legal compliance in AI-driven data transfer planning is becoming increasingly critical under stringent privacy regulations such as the Japanese Act on the Protection of Personal Information (APPI). We propose a multi-agent legal verifier that decomposes compliance checking into specialized agents for statutory interpretation, business context evaluation, and risk assessment, coordinated through a structured synthesis protocol. Evaluated on a stratified dataset of 200 Amended APPI Article 16 cases with clearly defined ground truth labels and multiple performance metrics, the system achieves 72% accuracy, which is 21 percentage points higher than a single-agent baseline, including 90% accuracy on clear compliance cases (vs. 16% for the baseline) while maintaining perfect detection of clear violations. While challenges remain in ambiguous scenarios, these results show that domain specialization and coordinated reasoning can meaningfully improve legal AI performance, providing a scalable and regulation-aware framework for trustworthy and interpretable automated compliance verification.

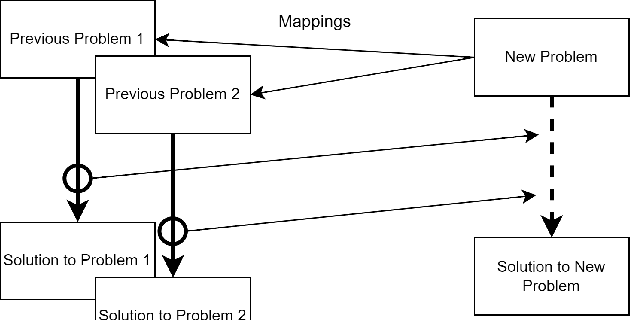

An Argumentative Explanation Framework for Generalized Reason Model with Inconsistent Precedents

Oct 22, 2025

Abstract:Precedential constraint is one foundation of case-based reasoning in AI and Law. It generally assumes that the underlying set of precedents must be consistent. To relax this assumption, a generalized notion of the reason model has been introduced. While several argumentative explanation approaches exist for reasoning with precedents based on the traditional consistent reason model, there has been no corresponding argumentative explanation method developed for this generalized reasoning framework accommodating inconsistent precedents. To address this question, this paper examines an extension of the derivation state argumentation framework (DSA-framework) to explain the reasoning according to the generalized notion of the reason model.

Anonymous Public Announcements

Apr 21, 2025

Abstract:We formalise the notion of an anonymous public announcement in the tradition of public announcement logic. Such announcements can be seen as in-between a public announcement from "the outside" (an announcement of $\phi$) and a public announcement by one of the agents (an announcement of $K_a\phi$): we get more information than just $\phi$, but not (necessarily) about exactly who made it. Even if such an announcement is prima facie anonymous, depending on the background knowledge of the agents it might reveal the identity of the announcer: if I post something on a message board, the information might reveal who I am even if I don't sign my name. Furthermore, like in the Russian Cards puzzle, if we assume that the announcer's intention was to stay anonymous, that in fact might reveal more information. In this paper we first look at the case when no assumptions about intentions are made, in which case the logic with an anonymous public announcement operator is reducible to epistemic logic. We then look at the case when we assume common knowledge of the intention to stay anonymous, which is both more complex and more interesting: in several ways it boils down to the notion of a "safe" announcement (again, similarly to Russian Cards). Main results include formal expressivity results and axiomatic completeness for key logical languages.

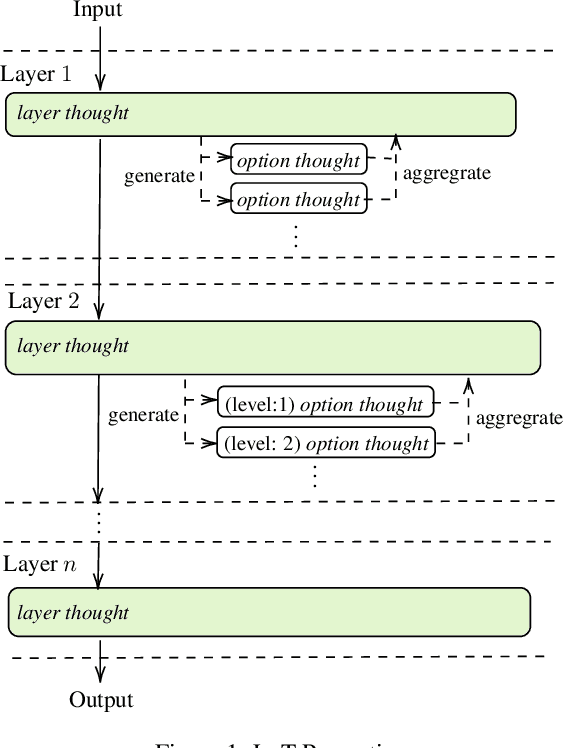

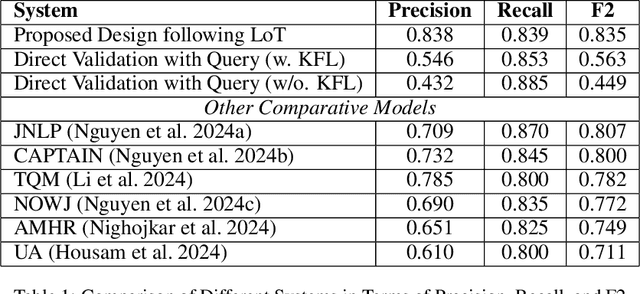

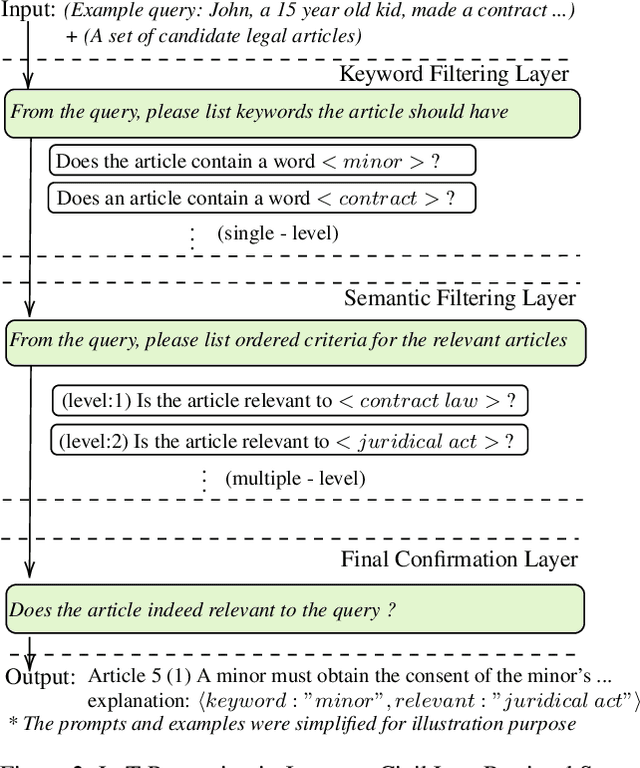

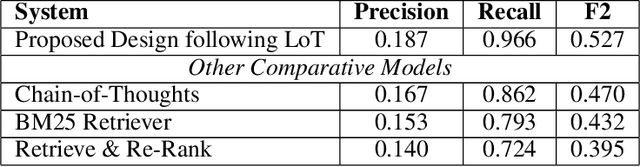

Layer-of-Thoughts Prompting (LoT): Leveraging LLM-Based Retrieval with Constraint Hierarchies

Oct 16, 2024

Abstract:This paper presents a novel approach termed Layer-of-Thoughts Prompting (LoT), which utilizes constraint hierarchies to filter and refine candidate responses to a given query. By integrating these constraints, our method enables a structured retrieval process that enhances explainability and automation. Existing methods have explored various prompting techniques but often present overly generalized frameworks without delving into the nuances of prompts in multi-turn interactions. Our work addresses this gap by focusing on the hierarchical relationships among prompts. We demonstrate that the efficacy of thought hierarchy plays a critical role in developing efficient and interpretable retrieval algorithms. Leveraging Large Language Models (LLMs), LoT significantly improves the accuracy and comprehensibility of information retrieval tasks.

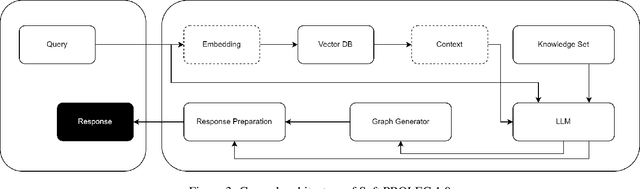

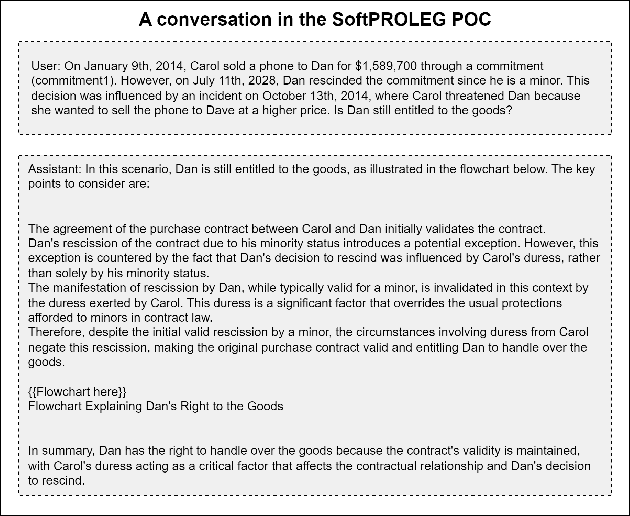

KRAG Framework for Enhancing LLMs in the Legal Domain

Oct 10, 2024

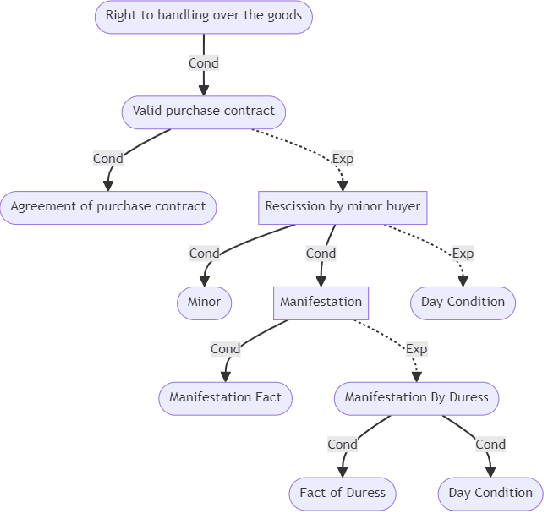

Abstract:This paper introduces Knowledge Representation Augmented Generation (KRAG), a novel framework designed to enhance the capabilities of Large Language Models (LLMs) within domain-specific applications. KRAG points to the strategic inclusion of critical knowledge entities and relationships that are typically absent in standard data sets and which LLMs do not inherently learn. In the context of legal applications, we present Soft PROLEG, an implementation model under KRAG, which uses inference graphs to aid LLMs in delivering structured legal reasoning, argumentation, and explanations tailored to user inquiries. The integration of KRAG, either as a standalone framework or in tandem with retrieval augmented generation (RAG), markedly improves the ability of language models to navigate and solve the intricate challenges posed by legal texts and terminologies. This paper details KRAG's methodology, its implementation through Soft PROLEG, and potential broader applications, underscoring its significant role in advancing natural language understanding and processing in specialized knowledge domains.

An Argumentative Approach for Explaining Preemption in Soft-Constraint Based Norms

Sep 06, 2024

Abstract:Although various aspects of soft-constraint based norms have been explored, it is still challenging to understand preemption. Preemption is a situation where higher-level norms override lower-level norms when new information emerges. To address this, we propose a derivation state argumentation framework (DSA-framework). DSA-framework incorporates derivation states to explain how preemption arises based on evolving situational knowledge. Based on DSA-framework, we present an argumentative approach for explaining preemption. We formally prove that, under local optimality, DSA-framework can provide explanations of why one consequence is obligatory or forbidden by soft-constraint based norms represented as logical constraint hierarchies.

Enhancing Legal Document Retrieval: A Multi-Phase Approach with Large Language Models

Mar 26, 2024

Abstract:Large language models with billions of parameters, such as GPT-3.5, GPT-4, and LLaMA, are increasingly prevalent. Numerous studies have explored effective prompting techniques to harness the power of these LLMs for various research problems. Retrieval, specifically in the legal data domain, poses a challenging task for the direct application of prompting techniques due to the large number and substantial length of legal articles. This research focuses on maximizing the potential of prompting by placing it as the final phase of the retrieval system, preceded by the support of two phases: BM25 Pre-ranking and BERT-based Re-ranking. Experiments on the COLIEE 2023 dataset demonstrate that integrating prompting techniques on LLMs into the retrieval system significantly improves retrieval accuracy. However, error analysis reveals several existing issues in the retrieval system that still need resolution.
