Thi-Hai-Yen Vuong

NOWJ @BioCreative IX ToxHabits: An Ensemble Deep Learning Approach for Detecting Substance Use and Contextual Information in Clinical Texts

Feb 10, 2026

Abstract: Extracting drug use information from unstructured Electronic Health Records remains a major challenge in clinical Natural Language Processing. While Large Language Models have shown progress, their use in clinical NLP is limited by concerns over trust, control, and efficiency. To address this, we present the NOWJ submission to the ToxHabits Shared Task at BioCreative IX. This task targets the detection of toxic substance use and contextual attributes in Spanish clinical texts, a domain-specific, low-resource setting. We propose a multi-output ensemble system tackling both Subtask 1 (ToxNER) and Subtask 2 (ToxUse). Our system integrates BETO with a CRF layer for sequence labeling, employs diverse training strategies, and uses sentence filtering to boost precision. Our top run achieved 0.94 F1 and 0.97 precision for Trigger Detection, and 0.91 F1 for Argument Detection.

NOWJ@COLIEE 2025: A Multi-stage Framework Integrating Embedding Models and Large Language Models for Legal Retrieval and Entailment

Sep 09, 2025

Abstract: This paper presents the methodologies and results of the NOWJ team's participation across all five tasks at the COLIEE 2025 competition, emphasizing advancements in the Legal Case Entailment task (Task 2). Our approach systematically integrates pre-ranking models (BM25, BERT, monoT5), embedding-based semantic representations (BGE-m3, LLM2Vec), and advanced Large Language Models (Qwen-2, QwQ-32B, DeepSeek-V3) for summarization, relevance scoring, and contextual re-ranking. Specifically, in Task 2, our two-stage retrieval system combined lexical-semantic filtering with contextualized LLM analysis, achieving first place with an F1 score of 0.3195. In the other tasks (Legal Case Retrieval, Statute Law Retrieval, Legal Textual Entailment, and Legal Judgment Prediction), we demonstrated robust performance through carefully engineered ensembles and effective prompt-based reasoning strategies. Our findings highlight the potential of hybrid models that integrate traditional IR techniques with contemporary generative models, providing a valuable reference for future advancements in legal information processing.

Exploiting LLMs' Reasoning Capability to Infer Implicit Concepts in Legal Information Retrieval

Oct 16, 2024

Abstract: Statutory law retrieval is a typical problem in legal language processing that has various practical applications in law engineering. Modern deep learning-based retrieval methods have achieved significant results for this problem. However, retrieval systems relying on semantic and lexical correlations often exhibit limitations, particularly when handling queries that involve real-life scenarios or use vocabulary that is not specific to the legal domain. In this work, we focus on overcoming these weaknesses by utilizing the logical reasoning capabilities of large language models (LLMs) to identify relevant legal terms and facts related to the situation mentioned in the query. The proposed retrieval system integrates additional information from term-based expansion and query reformulation to improve retrieval accuracy. Experiments on the COLIEE 2022 and COLIEE 2023 datasets show that extra knowledge from LLMs helps to improve the retrieval results of both lexical and semantic ranking models. The final ensemble retrieval system outperformed the highest results among all participating teams in the COLIEE 2022 and 2023 competitions.

Enhancing Legal Document Retrieval: A Multi-Phase Approach with Large Language Models

Mar 26, 2024

Abstract: Large language models with billions of parameters, such as GPT-3.5, GPT-4, and LLaMA, are increasingly prevalent. Numerous studies have explored effective prompting techniques to harness the power of these LLMs for various research problems. Retrieval, specifically in the legal data domain, poses a challenge for the direct application of prompting techniques due to the large number and substantial length of legal articles. This research focuses on maximizing the potential of prompting by placing it as the final phase of the retrieval system, preceded by two supporting phases: BM25 pre-ranking and BERT-based re-ranking. Experiments on the COLIEE 2023 dataset demonstrate that integrating prompting techniques on LLMs into the retrieval system significantly improves retrieval accuracy. However, error analysis reveals several existing issues in the retrieval system that still need resolution.
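The three-phase arrangement described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the BM25 formula is the standard Okapi variant with assumed parameters `k1=1.5` and `b=0.75`, and `rerank` and `llm_select` are hypothetical callables standing in for the BERT-based re-ranker and the LLM prompting phase.

```python
import math
from collections import Counter


def tokenize(text):
    # Whitespace tokenization; a real system would use a proper tokenizer.
    return text.lower().split()


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def retrieve(query, docs, rerank, llm_select, k_pre=3, k_rerank=2):
    """Phase 1: BM25 pre-ranking. Phase 2: re-ranking. Phase 3: LLM selection.

    `rerank(query, doc)` returns a relevance score and `llm_select(query,
    candidates)` returns the chosen document indices; both are placeholders.
    """
    scores = bm25_scores(query, docs)
    pre = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)[:k_pre]
    top = sorted(pre, key=lambda i: rerank(query, docs[i]), reverse=True)[:k_rerank]
    return llm_select(query, [(i, docs[i]) for i in top])
```

Each phase shrinks the candidate set, so the most expensive step (prompting) only sees a handful of articles rather than the full corpus.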

NOWJ1@ALQAC 2023: Enhancing Legal Task Performance with Classic Statistical Models and Pre-trained Language Models

Sep 16, 2023

Abstract: This paper describes the NOWJ1 Team's approach for the Automated Legal Question Answering Competition (ALQAC) 2023, which focuses on enhancing legal task performance by integrating classical statistical models and Pre-trained Language Models (PLMs). For the document retrieval task, we implement a pre-processing step to overcome input limitations and apply learning-to-rank methods to consolidate features from various models. The question-answering task is split into two sub-tasks: sentence classification and answer extraction. We incorporate state-of-the-art models to develop distinct systems for each sub-task, utilizing both classical statistical models and PLMs. Experimental results demonstrate the promising potential of our proposed methodology in the competition.

Constructing a Knowledge Graph for Vietnamese Legal Cases with Heterogeneous Graphs

Sep 16, 2023

Abstract: This paper presents a knowledge graph construction method for legal case documents and related laws, aiming to organize legal information efficiently and enhance various downstream tasks. Our approach consists of three main steps: data crawling, information extraction, and knowledge graph deployment. First, the data crawler collects a large corpus of legal case documents and related laws from various sources, providing a rich database for further processing. Next, the information extraction step employs natural language processing techniques to extract entities such as courts, cases, domains, and laws, as well as their relationships from the unstructured text. Finally, the knowledge graph is deployed, connecting these entities based on their extracted relationships, creating a heterogeneous graph that effectively represents legal information and caters to users such as lawyers, judges, and scholars. The established baseline model leverages unsupervised learning methods, and by incorporating the knowledge graph, it demonstrates the ability to identify relevant laws for a given legal case. This approach opens up opportunities for various applications in the legal domain, such as legal case analysis, legal recommendation, and decision support.
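A heterogeneous graph of the kind described here can be sketched as typed nodes joined by typed edges. The following is a rough illustration only: the class `HeteroGraph`, the relation names `in_domain` and `cites`, and the lookup rule in `candidate_laws` are all assumptions made for this example and are not taken from the paper.

```python
from collections import defaultdict


class HeteroGraph:
    """Tiny heterogeneous graph: every node has a type, every edge a relation."""

    def __init__(self):
        self.node_type = {}            # node id -> type ("court", "case", ...)
        self.edges = defaultdict(set)  # (source id, relation) -> target ids

    def add_node(self, node_id, node_type):
        self.node_type[node_id] = node_type

    def add_edge(self, src, relation, dst):
        if src not in self.node_type or dst not in self.node_type:
            raise KeyError("add both nodes before linking them")
        self.edges[(src, relation)].add(dst)

    def neighbors(self, src, relation):
        # .get avoids creating empty entries in the defaultdict on lookup.
        return set(self.edges.get((src, relation), set()))


def candidate_laws(graph, case_id):
    """Hypothetical heuristic: laws cited by other cases sharing a domain."""
    domains = graph.neighbors(case_id, "in_domain")
    laws = set()
    for other, kind in graph.node_type.items():
        if kind != "case" or other == case_id:
            continue
        if graph.neighbors(other, "in_domain") & domains:
            laws |= graph.neighbors(other, "cites")
    return laws
```

Keeping the relation name in the edge key lets one node carry several kinds of links (a case to its court, its domain, and the laws it cites) without mixing them up during traversal.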

RMDM: A Multilabel Fakenews Dataset for Vietnamese Evidence Verification

Sep 16, 2023

Abstract: In this study, we present a novel and challenging multilabel Vietnamese dataset (RMDM) designed to assess the performance of large language models (LLMs) in verifying electronic information related to legal contexts, focusing on fake news as potential input for electronic evidence. The RMDM dataset comprises four labels: real, mis, dis, and mal, representing real information, misinformation, disinformation, and mal-information, respectively. By including these diverse labels, RMDM captures the complexities of differing fake news categories and offers insights into the abilities of different language models to handle various types of information that could be part of electronic evidence. The dataset consists of a total of 1,556 samples, with 389 samples for each label. Preliminary tests on the dataset using GPT-based and BERT-based models reveal variations in the models' performance across different labels, indicating that the dataset effectively challenges the ability of various language models to verify the authenticity of such information. Our findings suggest that verifying electronic information related to legal contexts, including fake news, remains a difficult problem for language models, warranting further attention from the research community to advance toward more reliable AI models for potential legal applications.

NeCo@ALQAC 2023: Legal Domain Knowledge Acquisition for Low-Resource Languages through Data Enrichment

Sep 11, 2023

Abstract: In recent years, natural language processing has gained significant popularity in various sectors, including the legal domain. This paper presents NeCo Team's solutions to the Vietnamese text processing tasks provided in the Automated Legal Question Answering Competition 2023 (ALQAC 2023), focusing on legal domain knowledge acquisition for low-resource languages through data enrichment. Our methods for the legal document retrieval task employ a combination of similarity ranking and deep learning models, while for the second task, which requires extracting an answer from a relevant legal article in response to a question, we propose a range of adaptive techniques to handle different question types. Our approaches achieve outstanding results on both tasks of the competition, demonstrating the potential benefits and effectiveness of question answering systems in the legal field, particularly for low-resource languages.

Improving Vietnamese Legal Question--Answering System based on Automatic Data Enrichment

Jun 08, 2023

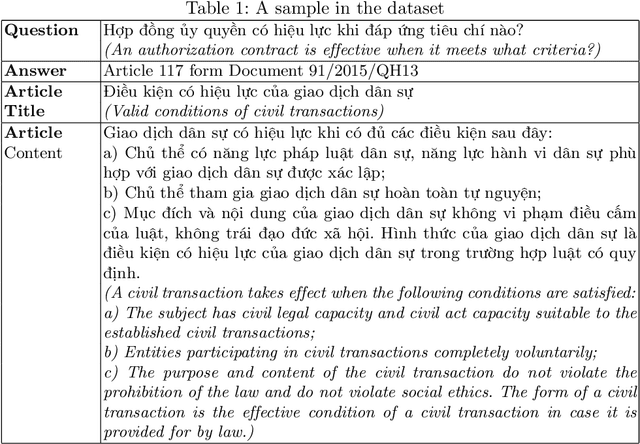

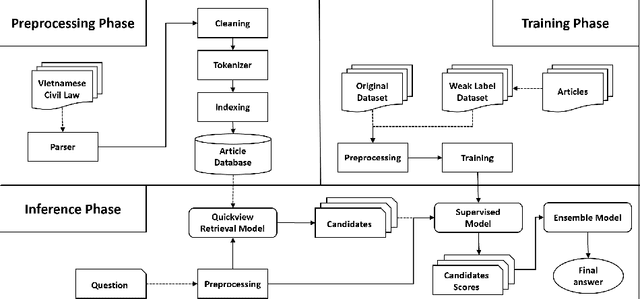

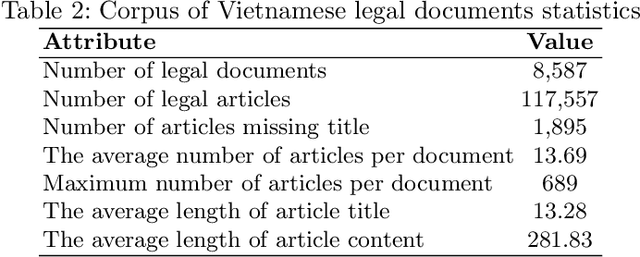

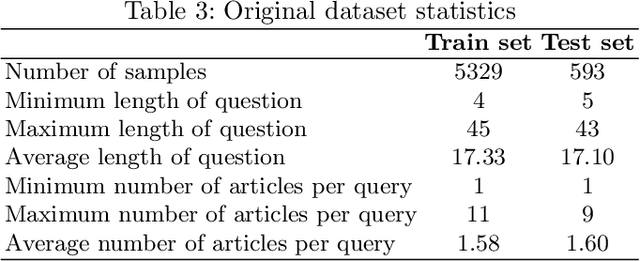

Abstract: Question answering (QA) in law is a challenging problem because legal documents are much more complicated than normal texts in terms of terminology, structure, and temporal and logical relationships. It is even more difficult to perform legal QA for low-resource languages like Vietnamese, where labeled data are rare and pre-trained language models are still limited. In this paper, we try to overcome these limitations by implementing a Vietnamese article-level retrieval-based legal QA system and introducing a novel method to improve the performance of language models by improving data quality through weak labeling. Our hypothesis is that in contexts where labeled data are limited, efficient data enrichment can help increase overall performance. Our experiments are designed to test multiple aspects and demonstrate the effectiveness of the proposed technique.

NOWJ at COLIEE 2023 -- Multi-Task and Ensemble Approaches in Legal Information Processing

Jun 08, 2023

Abstract: This paper presents the NOWJ team's approach to the COLIEE 2023 Competition, which focuses on advancing legal information processing techniques and applying them to real-world legal scenarios. Our team tackles the four tasks in the competition, which involve legal case retrieval, legal case entailment, statute law retrieval, and legal textual entailment. We employ state-of-the-art machine learning models and innovative approaches, such as BERT, Longformer, the BM25 ranking algorithm, and multi-task learning models. Although our team did not achieve state-of-the-art results, our findings provide valuable insights and pave the way for future improvements in legal information processing.
